The transition to microservices architectures offers considerable scalability and agility, yet it introduces a significant hurdle: data fragmentation. Scattered across services that each use their own storage solutions and data formats, the information those services generate becomes siloed, hindering comprehensive analysis and effective decision-making. This exploration examines the challenges of consolidating this dispersed data and the techniques and technologies used to aggregate it into a unified view.

This analysis examines why robust data aggregation matters in microservices environments, from understanding the diverse data sources and formats to evaluating aggregation strategies. We will dissect real-time and batch processing approaches, explore the role of message queues, data lakes, and data warehouses, and examine architectural patterns such as the API Gateway and Sidecar patterns. Finally, it outlines monitoring and troubleshooting methods that help keep data aggregation pipelines reliable and performant.

The Challenge of Data Aggregation in Microservices

The adoption of a microservices architecture, while offering benefits such as increased scalability and independent deployments, introduces a significant challenge: data scattering. Data that was once contained within a monolithic application is now distributed across multiple, independently deployable services, each responsible for its own data store. This fragmentation complicates data aggregation, a crucial process for gaining comprehensive insights and making informed decisions.

Consider an e-commerce platform built on microservices. Customer data, including purchase history, browsing behavior, and support interactions, resides in different services (e.g., the order service, the product catalog service, and the customer support service). Analyzing customer lifetime value (CLTV) requires aggregating data from all these sources.

Data Fragmentation and Its Impact

Poor data aggregation directly hinders effective decision-making. Without a consolidated view of the data, organizations struggle to understand customer behavior, identify trends, and optimize business processes.

The implications of inadequate data aggregation manifest in several key areas:

- Inaccurate Reporting: Data scattered across services leads to incomplete or inconsistent reports. For example, if order data and customer data are not properly synchronized, sales figures may be inaccurate, impacting revenue forecasting and financial planning. This can result in significant financial losses.

- Impaired Business Intelligence: Without a unified data view, business intelligence initiatives are severely limited. Analyzing customer churn, for example, requires combining data from multiple services (e.g., order cancellations, support tickets, and product usage). Without this aggregation, the business is unable to identify the factors contributing to churn and develop effective retention strategies.

- Inefficient Operations: The lack of aggregated data can lead to operational inefficiencies. For instance, inventory management becomes challenging if product sales data is not readily available across different services. This can result in stockouts, overstocking, and increased operational costs.

- Delayed Decision-Making: The process of gathering and consolidating data from disparate sources is often time-consuming. This delay can hinder the ability to respond quickly to market changes, customer feedback, or competitive pressures.

The ability to aggregate data effectively is therefore paramount to realizing the full potential of a microservices architecture.

Understanding Microservices Data Sources

Microservices architectures, by their distributed nature, necessitate a thorough understanding of the data sources they utilize. Each microservice typically operates independently, responsible for its own data management and persistence. This autonomy leads to a diverse landscape of data storage technologies and formats, creating both flexibility and complexity in data aggregation efforts. Effective aggregation strategies must therefore account for the inherent heterogeneity of these data sources.

Common Data Storage Technologies

The choice of data storage technology within a microservices architecture is often driven by the specific requirements of each service. Different services might prioritize different aspects, such as read/write performance, data consistency, or scalability, leading to a diverse ecosystem of data stores.

- Relational Databases (SQL Databases): Relational databases, such as PostgreSQL, MySQL, and Microsoft SQL Server, are commonly used for microservices requiring structured data, strong consistency, and complex querying capabilities. They are well-suited for applications where data integrity and transactional support are paramount. These databases typically enforce the ACID properties: Atomicity, Consistency, Isolation, and Durability.

For example, an e-commerce platform might use a relational database to manage product catalogs, order details, and customer information, ensuring data accuracy and consistency across transactions.

- NoSQL Databases: NoSQL databases, encompassing a variety of models (e.g., document, key-value, graph, and column-family), offer flexibility and scalability, particularly for handling large volumes of unstructured or semi-structured data. They often provide eventual consistency, trading immediate consistency for performance and availability. Popular examples include MongoDB (document), Redis (key-value), and Cassandra (column-family).

For instance, a social media microservice might employ a NoSQL database to store user profiles, posts, and connections, allowing for rapid scaling and handling of high-volume data.

- Message Queues: While not primarily data storage solutions, message queues like Kafka and RabbitMQ play a crucial role in data distribution and event processing within microservices. They act as intermediaries, enabling asynchronous communication and decoupling services, which can indirectly impact how data is handled and accessed.

A service publishing order updates might use a message queue to notify other services (e.g., inventory, shipping) about new orders, facilitating data consistency and eventual data synchronization.

- Object Storage: Object storage services, such as Amazon S3 or Google Cloud Storage, are often used for storing large binary objects, such as images, videos, and documents. Microservices might utilize object storage for managing assets associated with their operations.

An image processing microservice, for example, might use object storage to store original and processed images, facilitating scalable storage and retrieval of media content.

Various Data Formats Used by Microservices

Microservices employ a variety of data formats for internal data representation and external communication. The choice of format impacts data serialization, deserialization, and the overall efficiency of data exchange between services.

- JSON (JavaScript Object Notation): JSON is a widely adopted text-based format for representing structured data. Its human-readability and ease of parsing make it a popular choice for API communication and data storage. Compared with XML, its lighter syntax generally produces smaller payloads, which means faster transfers and lower bandwidth consumption.

For example, a microservice providing product details might use JSON to represent product attributes like name, description, price, and inventory status.

- XML (Extensible Markup Language): XML, a markup language designed for encoding documents in a format that is both human-readable and machine-readable, provides a more verbose format compared to JSON. It supports schema validation and offers robust features for representing complex data structures. However, it tends to be less efficient in terms of data size and parsing speed compared to JSON.

A microservice exchanging financial data with another system might use XML to ensure data integrity and compatibility with existing systems that may rely on XML-based standards.

- Protocol Buffers (Protobuf): Developed by Google, Protocol Buffers is a binary format that offers a compact and efficient way to serialize structured data. It requires the definition of a schema, which facilitates data validation and versioning. Protobuf’s efficiency makes it a strong choice for high-performance communication.

Microservices exchanging large volumes of data, such as telemetry data or sensor readings, might utilize Protobuf to optimize data transfer speed and reduce network overhead.

- Avro: Avro is a row-oriented remote procedure call (RPC) and data serialization framework. It provides a schema-based data format with support for schema evolution, making it suitable for handling evolving data structures.

A data streaming service might use Avro to serialize data records in a Kafka topic, enabling efficient data processing and schema evolution.

- CSV (Comma-Separated Values): CSV is a simple text-based format used for representing tabular data. It’s easy to generate and parse but lacks support for complex data structures or data types.

A microservice exporting data for reporting purposes might use CSV to generate flat files containing data summaries.
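To make the size and type trade-offs concrete, here is a minimal Python sketch that serializes one hypothetical product record with the standard library's `json`, `xml.etree.ElementTree`, and `csv` modules. The record and its field names are illustrative assumptions; Protobuf and Avro are left out because both need third-party libraries and a schema definition.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# A hypothetical product record (name, description, price, inventory status).
product = {
    "name": "Desk Lamp",
    "description": "LED lamp with adjustable arm",
    "price": 24.99,
    "in_stock": True,
}

# JSON: each field name appears once per record.
json_text = json.dumps(product)

# XML: each field becomes an element with an opening and a closing tag.
root = ET.Element("product")
for key, value in product.items():
    ET.SubElement(root, key).text = str(value)
xml_text = ET.tostring(root, encoding="unicode")

# CSV: a header row plus one flat row per record; types are not preserved.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(product))
writer.writeheader()
writer.writerow(product)
csv_text = buffer.getvalue()

for label, text in [("JSON", json_text), ("XML", xml_text), ("CSV", csv_text)]:
    print(f"{label}: {len(text.encode('utf-8'))} bytes")

# JSON round-trips numbers and booleans; CSV returns every value as a string.
assert json.loads(json_text) == product
row = next(csv.DictReader(io.StringIO(csv_text)))
print(row["price"], type(row["price"]).__name__)  # 24.99 str
```

Exact byte counts depend on the record, but the pattern matches the descriptions above: XML repeats every field name in its opening and closing tags, and CSV loses type information when the data is read back.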

Challenges of Dealing with Data Heterogeneity

Data heterogeneity, stemming from the independent evolution of microservices and their respective data stores, poses significant challenges for data aggregation. Addressing these challenges requires careful planning and the adoption of appropriate strategies.

- Schema Differences: Different microservices might use different schemas to represent similar data concepts. For example, the format for a customer’s address might vary across different services. This requires data transformation during aggregation.

Consider a scenario where one service stores customer addresses with a ‘zip_code’ field, while another uses ‘postal_code’. An aggregation service would need to map these fields appropriately.

- Data Type Inconsistencies: Data types can differ across services. A date might be stored as a string in one service and as a timestamp in another. This requires type conversion during aggregation.

If one service stores a date as “YYYY-MM-DD” and another as a Unix timestamp, the aggregation process needs to convert between these formats.

- Data Location: Data might be stored in various data stores with different access patterns and query capabilities. Aggregation requires determining the optimal method for retrieving data from these diverse sources.

Aggregating data from a relational database and a NoSQL database requires adapting to their respective query languages and access methods.

- Data Consistency: Maintaining data consistency across different services, especially during aggregation, is a significant challenge. Ensuring that aggregated data reflects the latest state of each service’s data is critical.

To address data consistency issues, consider accepting eventual consistency and using compensating transactions to undo the completed steps of a multi-service operation that fails partway, or centralizing analytical data in a data warehouse.

- Data Security and Access Control: Ensuring secure access to data from multiple microservices during aggregation requires careful consideration of access control policies and data privacy regulations.

Implement robust authentication and authorization mechanisms to control access to data and comply with relevant regulations, such as GDPR or HIPAA.
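The schema and type differences described above are typically resolved by a normalization step in the aggregation service. The following is a minimal Python sketch, assuming two hypothetical services: one uses `zip_code` with “YYYY-MM-DD” date strings, the other uses `postal_code` with Unix timestamps, which the sketch assumes are in UTC. The field names, the `FIELD_MAP` table, and the `normalize` helper are illustrative, not a standard API.

```python
from datetime import datetime, timezone

# Hypothetical records for the same customer from two services:
# service A uses 'zip_code' and a "YYYY-MM-DD" string,
# service B uses 'postal_code' and a Unix timestamp (assumed to be UTC).
service_a_record = {"customer_id": 42, "zip_code": "94107", "signup_date": "2023-05-17"}
service_b_record = {"customer_id": 42, "postal_code": "94107", "signup_ts": 1684281600}

# Per-source mapping from source field names to the canonical schema.
FIELD_MAP = {
    "service_a": {"zip_code": "postal_code", "signup_date": "signup_date"},
    "service_b": {"postal_code": "postal_code", "signup_ts": "signup_date"},
}

def to_iso_date(value):
    """Type conversion: accept a Unix timestamp or a 'YYYY-MM-DD' string."""
    if isinstance(value, (int, float)):
        moment = datetime.fromtimestamp(value, tz=timezone.utc)
    else:
        moment = datetime.strptime(value, "%Y-%m-%d")
    return moment.date().isoformat()

def normalize(record, source):
    """Schema mapping: rename source fields, then convert types."""
    out = {"customer_id": record["customer_id"]}
    for source_field, canonical_field in FIELD_MAP[source].items():
        if source_field in record:
            out[canonical_field] = record[source_field]
    out["signup_date"] = to_iso_date(out["signup_date"])
    return out

print(normalize(service_a_record, "service_a"))
print(normalize(service_b_record, "service_b"))
# Both print: {'customer_id': 42, 'postal_code': '94107', 'signup_date': '2023-05-17'}
```

Keeping the mapping in a table rather than in code branches means that adding a third service is mostly a matter of adding one more entry to `FIELD_MAP`.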

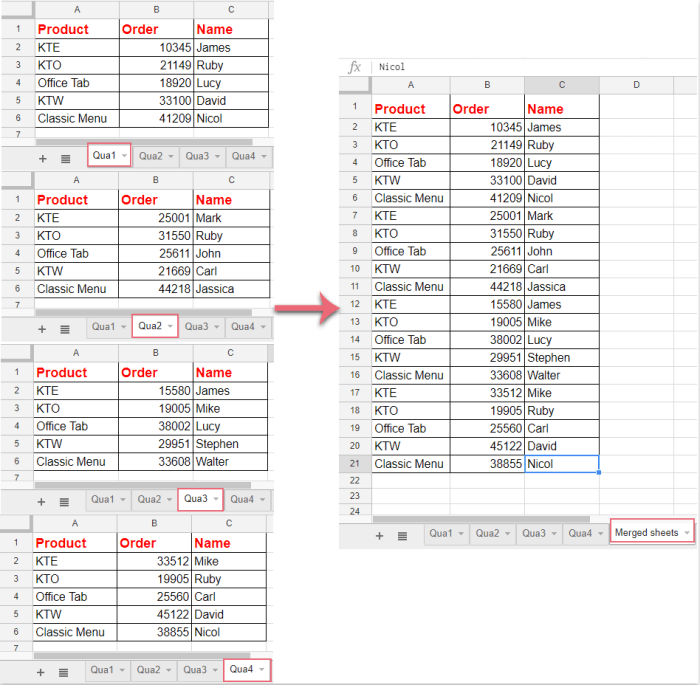

Aggregation Strategies

Data aggregation in a microservices architecture necessitates careful consideration of various strategies to combine information from disparate sources effectively. One crucial aspect involves extracting, transforming, and loading data (ETL) to prepare it for aggregation and subsequent analysis. This section will delve into ETL processes and data transformation techniques in the context of microservices.

ETL Process in Microservices

The ETL process is fundamental to data integration and aggregation, particularly in microservices environments where data resides in isolated services. It involves three primary stages: Extract, Transform, and Load. The Extract phase retrieves data from multiple source systems. The Transform phase cleans, converts, and prepares the extracted data. Finally, the Load phase integrates the transformed data into a target system, such as a data warehouse or a dedicated aggregation service.

In a microservices environment, the ETL process is often distributed, and each stage has its own concerns:

- Data Extraction: This stage involves accessing and retrieving data from the individual microservices. The extraction process should consider the data formats, access methods (e.g., REST APIs, message queues, database connections), and data volume of each service.

- Data Transformation: This is where the extracted data undergoes a series of operations to convert it into a consistent and usable format. Transformations may include data type conversions, data cleansing, data enrichment, and aggregation.

- Data Loading: The transformed data is then loaded into a destination system. The loading process should be designed to handle data integrity, performance, and scalability. Common destinations include data warehouses, data lakes, or dedicated aggregation services.
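The three stages can be sketched end to end in Python. This is a minimal illustration, not a production pipeline: `extract_orders` and `extract_customers` are hypothetical stand-ins for calls to each service's API or database, and an in-memory SQLite database (Python's built-in `sqlite3` module) plays the role of the target system.

```python
import sqlite3

# Extract: hypothetical stand-ins for calls to each service's API or database.
def extract_orders():
    return [
        {"order_id": 1, "customer_id": 10, "total_amount": "59.90"},
        {"order_id": 2, "customer_id": 11, "total_amount": "15.00"},
        {"order_id": 3, "customer_id": 10, "total_amount": "20.10"},
    ]

def extract_customers():
    return [
        {"customer_id": 10, "email": "ADA@EXAMPLE.COM "},
        {"customer_id": 11, "email": "grace@example.com"},
    ]

# Transform: cleanse emails, convert amounts to numbers,
# and enrich each order with its customer's email.
def transform(orders, customers):
    email_by_id = {c["customer_id"]: c["email"].strip().lower() for c in customers}
    return [
        (o["order_id"], email_by_id.get(o["customer_id"]), float(o["total_amount"]))
        for o in orders
    ]

# Load: write rows into the target store (SQLite stands in for a warehouse).
def load(rows, conn):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS order_facts "
        "(order_id INTEGER PRIMARY KEY, customer_email TEXT, total_amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO order_facts VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract_orders(), extract_customers()), conn)

for email, total in conn.execute(
    "SELECT customer_email, SUM(total_amount) FROM order_facts "
    "GROUP BY customer_email ORDER BY customer_email"
):
    print(email, round(total, 2))
```

Using `INSERT OR REPLACE` keyed on `order_id` makes the load step idempotent, so re-running the pipeline after a partial failure does not duplicate rows; whether that behavior is appropriate depends on the target system.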

Data Transformation Techniques

Data transformation is a critical component of the ETL process, enabling the conversion of raw data into a format suitable for analysis and aggregation. This involves a variety of techniques to ensure data quality and consistency. Here are some common data transformation techniques:

- Data Type Conversion: Converts data from one type to another to ensure consistency across the aggregated dataset. For example, converting the string “123” to the integer 123 so it can be used in calculations.

- Data Cleansing: Addresses errors, inconsistencies, and missing values in the data. This includes handling null values, correcting typos, and removing duplicate entries. For instance, replacing “N/A” or “NULL” with a default value or removing incomplete records.

- Data Standardization: Applies consistent formats and units to the data. This might involve standardizing date formats, currency symbols, or units of measurement. For example, converting all date entries to the “YYYY-MM-DD” format.

- Data Enrichment: Adds new information to the dataset by combining data from multiple sources. This might involve looking up additional information based on existing data. For example, enriching customer records with demographic data from a third-party provider.

- Data Aggregation: Summarizes data by grouping it based on certain criteria and performing calculations such as sums, averages, or counts. For example, calculating the total sales per product category.
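Several of these techniques can be chained in a single pass. The following Python sketch applies type conversion, cleansing (deduplication and dropping records whose amount is “N/A”), date standardization, and aggregation (total sales per category) to a small set of hypothetical sales rows. Enrichment is omitted because it needs a second data source, and the two accepted date formats are assumptions.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical raw sales rows, as they might arrive from different services.
raw_rows = [
    {"sale_id": "1", "category": "Books", "amount": "12.50", "date": "2024-03-01"},
    {"sale_id": "2", "category": "books ", "amount": "7.50", "date": "03/02/2024"},
    {"sale_id": "2", "category": "books ", "amount": "7.50", "date": "03/02/2024"},  # duplicate
    {"sale_id": "3", "category": "Games", "amount": "N/A", "date": "2024-03-02"},
    {"sale_id": "4", "category": "Games", "amount": "30", "date": "2024-03-03"},
]

MISSING = {"", "N/A", "NULL"}

def standardize_date(text):
    """Standardization: accept two known input formats, emit YYYY-MM-DD."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(text, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

def clean(rows):
    seen = set()
    for row in rows:
        if row["sale_id"] in seen:             # cleansing: drop duplicates
            continue
        seen.add(row["sale_id"])
        if row["amount"].strip() in MISSING:   # cleansing: drop incomplete records
            continue
        yield {
            "sale_id": int(row["sale_id"]),               # type conversion
            "category": row["category"].strip().title(),  # standardization
            "amount": float(row["amount"]),               # type conversion
            "date": standardize_date(row["date"]),        # standardization
        }

def total_sales_per_category(rows):
    totals = defaultdict(float)
    for row in rows:
        totals[row["category"]] += row["amount"]           # aggregation
    return dict(totals)

cleaned = list(clean(raw_rows))
print(total_sales_per_category(cleaned))  # {'Books': 20.0, 'Games': 30.0}
```

Dropping the “N/A” record is one of the two options mentioned above; substituting a default value instead would change the Games total, which is why that choice should be made deliberately per field.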

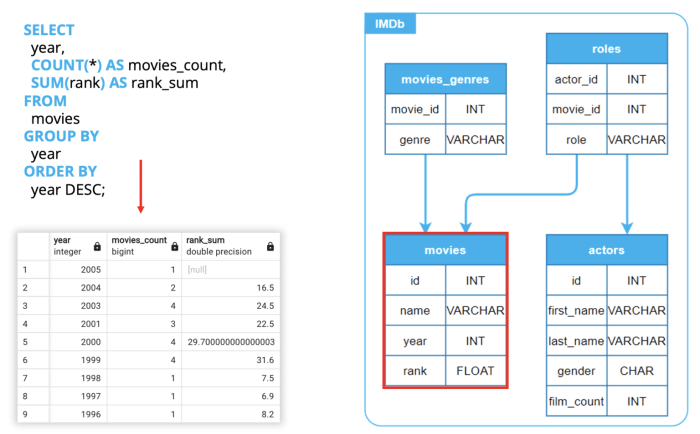

Data Extraction Process Example

Designing an effective data extraction process requires understanding the data structures and access methods of each microservice. This example outlines a data extraction process from three hypothetical microservices: `Order Service`, `Customer Service`, and `Product Service`. The data will be extracted and prepared for aggregation. Here are the hypothetical data structures of the microservices:

- Order Service: This service manages order information.
  - `order_id` (INT, Primary Key)
  - `customer_id` (INT, Foreign Key)
  - `product_id` (INT, Foreign Key)
  - `order_date` (DATE)
  - `quantity` (INT)
  - `total_amount` (DECIMAL)

- Customer Service: This service stores customer details.
  - `customer_id` (INT, Primary Key)
  - `first_name` (VARCHAR)
  - `last_name` (VARCHAR)
  - `email` (VARCHAR)
  - `registration_date` (DATE)

- Product Service: This service holds product information.
  - `product_id` (INT, Primary Key)
  - `product_name` (VARCHAR)
  - `category` (VARCHAR)
  - `price` (DECIMAL)

The data extraction process involves the following steps:

- Extraction from Order Service: Extract `order_id`, `customer_id`, `product_id`, `order_date`, `quantity`, and `total_amount` from the Order Service’s database or API.

- Extraction from Customer Service: Extract `customer_id`, `first_name`, `last_name`, and `email` from the Customer Service’s database or API, using `customer_id` as the key.

- Extraction from Product Service: Extract `product_id`, `product_name`, `category`, and `price` from the Product Service’s database or API, using `product_id` as the key.

The extracted data from each service will then be transformed to prepare it for loading into an aggregation service or data warehouse. For example, the `order_date` might be standardized to a consistent format, and calculations could be performed to determine the total revenue per customer or product category. The data extraction process is a critical first step in the overall aggregation strategy.
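A minimal Python sketch of these three steps follows, with `fetch_orders`, `fetch_customers`, and `fetch_products` as hypothetical stand-ins for each service's database or API, and in-memory rows shaped like the schemas above. It extracts the orders first, then requests only the customers and products those orders reference, and finally joins on `customer_id` and `product_id` to compute revenue per customer and per category. Monetary values use `Decimal` to avoid floating-point rounding.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical in-memory tables shaped like the three service schemas.
ORDER_ROWS = [
    {"order_id": 1, "customer_id": 100, "product_id": 7, "order_date": "2024-01-05", "quantity": 2, "total_amount": Decimal("40.00")},
    {"order_id": 2, "customer_id": 101, "product_id": 8, "order_date": "2024-01-06", "quantity": 1, "total_amount": Decimal("15.50")},
    {"order_id": 3, "customer_id": 100, "product_id": 8, "order_date": "2024-01-07", "quantity": 3, "total_amount": Decimal("46.50")},
]
CUSTOMER_ROWS = {
    100: {"customer_id": 100, "first_name": "Ada", "last_name": "Lovelace", "email": "ada@example.com"},
    101: {"customer_id": 101, "first_name": "Alan", "last_name": "Turing", "email": "alan@example.com"},
    102: {"customer_id": 102, "first_name": "Grace", "last_name": "Hopper", "email": "grace@example.com"},
}
PRODUCT_ROWS = {
    7: {"product_id": 7, "product_name": "Notebook", "category": "Paper", "price": Decimal("20.00")},
    8: {"product_id": 8, "product_name": "Pen set", "category": "Writing", "price": Decimal("15.50")},
}

# Hypothetical extractors; real ones would query each service.
def fetch_orders():
    return list(ORDER_ROWS)

def fetch_customers(ids):
    return {i: CUSTOMER_ROWS[i] for i in ids if i in CUSTOMER_ROWS}

def fetch_products(ids):
    return {i: PRODUCT_ROWS[i] for i in ids if i in PRODUCT_ROWS}

# Step 1: extract orders; steps 2-3: extract only the referenced keys.
orders = fetch_orders()
customers = fetch_customers({o["customer_id"] for o in orders})
products = fetch_products({o["product_id"] for o in orders})

# Join on the keys and aggregate revenue per customer and per category.
revenue_by_customer = defaultdict(Decimal)
revenue_by_category = defaultdict(Decimal)
for o in orders:
    customer = customers[o["customer_id"]]
    product = products[o["product_id"]]
    revenue_by_customer[customer["email"]] += o["total_amount"]
    revenue_by_category[product["category"]] += o["total_amount"]

print(dict(revenue_by_customer))
print(dict(revenue_by_category))
```

Fetching customers and products by the keys found in the orders keeps the extraction proportional to the orders being processed, rather than copying every customer and product on each run.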


Data aggregation in microservices necessitates careful consideration of the timing and volume of data processing. The choice between real-time and batch processing significantly impacts system architecture, resource utilization, and the timeliness of insights derived from the aggregated data. Understanding the nuances of each approach is crucial for selecting the most appropriate strategy for a given use case.

Real-time vs. Batch Processing

Real-time and batch processing represent fundamentally different approaches to data aggregation, each with its own set of trade-offs. Real-time processing aims to aggregate data as it arrives, providing immediate or near-immediate results. Batch processing, conversely, collects data over a period and processes it in discrete intervals.

Real-time processing offers the advantage of providing up-to-the-minute insights. This is particularly valuable in scenarios where rapid decision-making is critical, such as fraud detection or real-time bidding in advertising. However, real-time systems often require more complex infrastructure and are more susceptible to transient failures. Batch processing, on the other hand, is typically simpler to implement and more resilient to failures. It is well-suited for tasks where latency is less critical, such as generating daily or weekly reports. However, the inherent delay in batch processing can make it unsuitable for applications requiring immediate responses.

- Real-time Processing: This approach aggregates data as it becomes available. It typically involves stream processing technologies like Apache Kafka, Apache Flink, or cloud-based services like AWS Kinesis or Google Cloud Dataflow. The goal is to provide results with minimal latency.

- Batch Processing: Data is collected over a specific period and then processed in batches. Technologies like Apache Hadoop, Apache Spark, or cloud-based services like AWS EMR or Google Cloud Dataproc are commonly used. Batch processing prioritizes throughput and fault tolerance over low latency.
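The difference between the two models can be illustrated with one data set. In the Python sketch below, which assumes hypothetical timestamped sale events and a 60-second tumbling window, the batch function aggregates only after all events have been collected, while the streaming class updates a running total on every event and emits each window when it closes. Both reach the same final totals; the streaming version simply makes partial results available earlier. The sketch assumes events arrive in timestamp order, an assumption real stream processors such as Apache Flink do not make.

```python
from collections import defaultdict

# Hypothetical (timestamp_seconds, amount) sale events, in arrival order.
EVENTS = [(5, 10.0), (42, 5.0), (61, 7.5), (118, 2.5), (125, 4.0)]
WINDOW = 60  # tumbling window length in seconds (an illustrative choice)

def batch_totals(events, window=WINDOW):
    """Batch: wait until all events are collected, then aggregate in one pass."""
    totals = defaultdict(float)
    for ts, amount in events:
        totals[ts // window * window] += amount
    return dict(totals)

class StreamingTotals:
    """Real-time: update a running total per event; emit a window once it closes.

    Assumes in-order events; production stream processors also handle
    late and out-of-order events (e.g. with watermarks in Flink).
    """

    def __init__(self, window=WINDOW):
        self.window = window
        self.current_start = None
        self.current_total = 0.0
        self.emitted = {}

    def on_event(self, ts, amount):
        start = ts // self.window * self.window
        if self.current_start is not None and start != self.current_start:
            self.emitted[self.current_start] = self.current_total  # window closed
            self.current_total = 0.0
        self.current_start = start
        self.current_total += amount
        # A partial result is available immediately, before the window closes.
        return self.current_start, self.current_total

    def flush(self):
        if self.current_start is not None:
            self.emitted[self.current_start] = self.current_total
        return self.emitted

stream = StreamingTotals()
for ts, amount in EVENTS:
    print("running total:", stream.on_event(ts, amount))

assert stream.flush() == batch_totals(EVENTS)
```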

Advantages and Disadvantages

The choice between real-time and batch processing hinges on a careful evaluation of their respective advantages and disadvantages. Both strategies have their strengths and weaknesses, and the optimal choice depends on the specific requirements of the application.

- Real-time Processing:
  - Advantages:
    - Low Latency: Provides immediate or near-immediate results, enabling real-time decision-making.
    - Responsiveness: Allows for rapid responses to changing conditions.
    - Proactive Actions: Facilitates immediate reactions to critical events, such as fraud detection.
  - Disadvantages:
    - Complexity: Requires sophisticated infrastructure and careful design to handle high-velocity data streams.
    - Cost: Can be more expensive due to the need for specialized hardware and software.
    - Scalability Challenges: Scaling real-time systems can be complex and require significant engineering effort.
    - Error Handling: Handling errors and ensuring data consistency in real-time systems can be challenging.

- Batch Processing:
  - Advantages:
    - Simplicity: Easier to implement and manage compared to real-time systems.
    - Scalability: Can handle large volumes of data with relative ease.
    - Fault Tolerance: Designed to handle failures gracefully, with built-in mechanisms for retries and recovery.
    - Cost-Effectiveness: Generally less expensive than real-time processing, especially for large-scale data processing.
  - Disadvantages:
    - Latency: Introduces delays in processing, making it unsuitable for applications requiring immediate results.
    - Data Staleness: Data is only updated periodically, leading to potentially stale insights.
    - Resource Consumption: Can consume significant resources during batch processing windows, potentially impacting other system components.

Use Cases for Real-time and Batch Processing

The appropriate use case for each approach depends on the specific requirements of the application, including the need for low latency, the volume of data, and the criticality of the insights. The following table provides examples of scenarios where each processing strategy is best suited:

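| Feature | Real-time Processing | Batch Processing |
|---|---|---|
| Use Case Examples | Fraud detection, real-time bidding in advertising | Daily or weekly reports |
| Key Considerations | Low latency; higher infrastructure complexity and cost; harder error handling and consistency | High throughput and fault tolerance; lower cost; results only as fresh as the last run |
| Example Technologies | Apache Kafka, Apache Flink, AWS Kinesis, Google Cloud Dataflow | Apache Hadoop, Apache Spark, AWS EMR, Google Cloud Dataproc |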

Technologies for Data Aggregation

Message queues offer a powerful and asynchronous mechanism for aggregating data from microservices. They decouple services, allowing them to operate independently while facilitating reliable data transfer and aggregation. This approach is particularly beneficial in distributed systems where data consistency and scalability are paramount.

Message Queues for Data Aggregation

Message queues act as intermediaries, receiving messages from various microservices and routing them to consumers for aggregation. This asynchronous communication model improves system resilience and responsiveness. Instead of direct calls, services publish messages to the queue, and consumers subscribe to specific message types. This allows services to continue operating even if some consumers are temporarily unavailable. This decoupling also allows for independent scaling of producers and consumers.

- Asynchronous Communication: Services publish messages to the queue without waiting for consumers to process them. This reduces the latency the publishing service observes and keeps slow consumers from blocking it.

- Decoupling: Producers and consumers are independent and do not need to know about each other’s implementation details.

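- Reliability: Message queues typically offer message persistence and configurable delivery guarantees (commonly at-least-once), which, when configured appropriately, protect against data loss; consumers should therefore be prepared to see the occasional duplicate message.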

- Scalability: Message queues can handle large volumes of messages, allowing for horizontal scaling of both producers and consumers.

Example: Aggregating Order Data

Consider an e-commerce platform with several microservices: `Order Service`, `Payment Service`, and `Inventory Service`. The goal is to aggregate order details for reporting. Using a message queue, such as Apache Kafka, the process would unfold as follows:

- Message Production: Each service publishes messages to the queue when an event occurs (e.g., an order is created, a payment is processed, or inventory is updated). These messages contain relevant data, such as order IDs, payment amounts, and inventory quantities.

- Message Consumption and Aggregation: A dedicated `Reporting Service` subscribes to the relevant topics (e.g., `orders`, `payments`, `inventory`). It consumes these messages and aggregates the data. For example, it might join order details with payment information to calculate total revenue.

- Data Storage: The aggregated data is then stored in a data warehouse or reporting database for analysis.

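Because messages are persisted in the queue, a temporary outage of the Reporting Service does not lose data: when it recovers, it resumes from its last committed position and catches up. Likewise, if one producing service is temporarily unavailable, the other services keep publishing and aggregation continues with the data that is available.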
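The join the Reporting Service performs can be sketched in Python. This is an illustration only: a standard-library `queue.Queue` stands in for Kafka, the topic names and event shapes are hypothetical, and a production consumer would use a Kafka client library, commit offsets, and persist its join state. The key point is that events from different services arrive independently and in any order, so the consumer buffers each half of the join until its partner arrives.

```python
import queue
from collections import defaultdict

# queue.Queue stands in for the broker; a real Reporting Service would be a
# Kafka consumer subscribed to the (hypothetical) 'orders' and 'payments' topics.
broker = queue.Queue()

# Producers publish (topic, payload) events independently of one another.
broker.put(("orders", {"order_id": "A1", "customer_id": 10, "items": 2}))
broker.put(("payments", {"order_id": "A2", "amount": 12.00}))  # arrives before its order
broker.put(("orders", {"order_id": "A2", "customer_id": 11, "items": 1}))
broker.put(("payments", {"order_id": "A1", "amount": 30.00}))
broker.put(("inventory", {"product_id": 7, "delta": -2}))       # not needed for revenue

class ReportingService:
    """Joins order and payment events by order_id and tracks revenue."""

    def __init__(self):
        self.orders = {}     # orders still waiting for a payment
        self.payments = {}   # payments still waiting for an order
        self.revenue_by_customer = defaultdict(float)

    def handle(self, topic, event):
        if topic == "orders":
            self.orders[event["order_id"]] = event
        elif topic == "payments":
            self.payments[event["order_id"]] = event
        else:
            return  # ignore topics this report does not use
        order_id = event["order_id"]
        if order_id in self.orders and order_id in self.payments:
            # Both halves have arrived: aggregate once and release the buffers.
            order = self.orders.pop(order_id)
            payment = self.payments.pop(order_id)
            self.revenue_by_customer[order["customer_id"]] += payment["amount"]

reporting = ReportingService()
while not broker.empty():
    reporting.handle(*broker.get())

print(dict(reporting.revenue_by_customer))  # {11: 12.0, 10: 30.0}
```

Because the payment for `A2` arrives before its order, the consumer cannot assume any ordering across topics; buffering by `order_id` makes the result independent of arrival order.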

Simplified Architecture Diagram: Message Queue-Based Aggregation

A simplified architecture diagram illustrates the data flow:

The diagram depicts a system where multiple microservices (Order Service, Payment Service, Inventory Service) send messages to a central message queue (e.g., Kafka). A Reporting Service, acting as a consumer, subscribes to the queue and receives these messages. The Reporting Service then processes and aggregates the data, ultimately storing the aggregated results in a Data Warehouse or Reporting Database. Arrows represent the flow of data, showing messages originating from microservices, flowing through the message queue, and being consumed by the Reporting Service.


Key components and data flow:

- Microservices (Producers): Order Service, Payment Service, Inventory Service. They produce messages.

- Message Queue (e.g., Kafka): Central message broker. Stores and distributes messages.

- Reporting Service (Consumer): Aggregates data from messages.

- Data Warehouse/Reporting Database: Stores aggregated data.

- Arrows: Represent message flow.


Data lakes and data warehouses offer robust solutions for aggregating data from microservices, addressing the complexities inherent in distributed architectures. They provide centralized repositories for storing, processing, and analyzing large volumes of data, enabling comprehensive insights that would be difficult or impossible to achieve by querying individual microservices directly. Their scalability and analytical capabilities are crucial for modern applications that demand real-time or near-real-time data-driven decision-making.

Data Lakes and Data Warehouses in Microservices Data Aggregation

Data lakes and data warehouses serve distinct but complementary roles in aggregating microservices data. A data lake acts as a central repository for raw structured, semi-structured, and unstructured data, storing diverse formats without requiring a predefined schema. This is particularly useful for capturing the variety of data emitted by microservices, which may include logs, events, and transactional data. A data warehouse, on the other hand, is designed for structured data and optimized for analytical queries. Data from the lake, or directly from the microservices, is transformed and structured either before loading (ETL) or inside the warehouse after loading (ELT), enabling efficient reporting and business intelligence. The choice between a data lake, a data warehouse, or a combination of both depends on the specific analytical needs and the nature of the data generated by the microservices. Data warehouses often organize data in a star schema or snowflake schema to optimize query performance.
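To illustrate the star schema, the following Python sketch uses the built-in `sqlite3` module as a stand-in for a warehouse. The table and column names (`fact_sales`, `dim_product`, `dim_date`, and so on) are hypothetical: a central fact table holds measures (quantity, amount) plus foreign keys, and the dimension tables hold the descriptive attributes that queries group by. A snowflake schema would normalize the dimensions further, for example by moving `category` into its own table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables describe the 'who', 'what', and 'when'.
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, email TEXT);
CREATE TABLE dim_product  (product_key  INTEGER PRIMARY KEY, product_name TEXT, category TEXT);
CREATE TABLE dim_date     (date_key     INTEGER PRIMARY KEY, full_date TEXT, month TEXT);

-- The fact table holds measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    product_key  INTEGER REFERENCES dim_product(product_key),
    date_key     INTEGER REFERENCES dim_date(date_key),
    quantity     INTEGER,
    amount       REAL
);

INSERT INTO dim_customer VALUES (1, 'ada@example.com'), (2, 'alan@example.com');
INSERT INTO dim_product  VALUES (1, 'Notebook', 'Paper'), (2, 'Pen set', 'Writing');
INSERT INTO dim_date     VALUES (20240105, '2024-01-05', '2024-01'), (20240210, '2024-02-10', '2024-02');
INSERT INTO fact_sales   VALUES (1, 1, 20240105, 2, 40.0), (2, 2, 20240105, 1, 15.5), (1, 2, 20240210, 3, 46.5);
""")

# A typical analytical query: join the fact table to its dimensions and group.
query = """
SELECT d.month, p.category, SUM(f.amount) AS revenue
FROM fact_sales f
JOIN dim_product p ON f.product_key = p.product_key
JOIN dim_date d    ON f.date_key = d.date_key
GROUP BY d.month, p.category
ORDER BY d.month, p.category
"""
for row in conn.execute(query):
    print(row)
```

Each analytical question becomes one join from the fact table out to a handful of small dimension tables, which is the access pattern columnar warehouses are optimized for.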

Data Warehousing Technologies

Various data warehousing technologies are available, each offering different features and capabilities. The selection of a particular technology depends on factors such as data volume, query complexity, scalability requirements, and budget.

- Snowflake: A cloud-based data warehouse known for its scalability, performance, and ease of use. Snowflake separates compute and storage, allowing for independent scaling of these resources. It supports various data formats and offers features like data sharing and secure data collaboration, and its architecture is designed to handle large volumes of data with high concurrency. For example, a retail company with numerous microservices handling product catalogs, sales transactions, and customer interactions could use Snowflake to consolidate data from these services for comprehensive sales analysis, customer segmentation, and inventory management.

- Amazon Redshift: A fully managed, petabyte-scale data warehouse service offered by Amazon Web Services (AWS). Redshift is based on PostgreSQL and supports SQL-based queries. It offers features like columnar storage, data compression, and parallel processing for efficient data analysis, and its performance is optimized for complex analytical queries, making it suitable for business intelligence and reporting workloads. For instance, a financial institution with microservices managing trading activities, risk assessments, and regulatory compliance could use Redshift to aggregate and analyze financial data for generating reports, identifying trends, and detecting anomalies.

- Google BigQuery: A serverless data warehouse offered by Google Cloud Platform (GCP). BigQuery allows users to query massive datasets using SQL without managing infrastructure. It offers features like automatic scaling, high availability, and integration with other GCP services; its serverless nature simplifies data warehousing operations, and its scalability is designed to accommodate growing data volumes. A media company utilizing microservices for content delivery, user engagement tracking, and advertising analytics might leverage BigQuery to analyze user behavior, optimize content recommendations, and measure the effectiveness of advertising campaigns.

Ingesting Data from Microservices into a Data Warehouse

Ingesting data from microservices into a data warehouse typically involves several key steps:

- Data Extraction: Identify the data sources within the microservices and determine the relevant data to extract. This may involve accessing databases, message queues, or API endpoints.

- Data Transformation: Transform the extracted data into a consistent format suitable for the data warehouse. This may include cleaning, filtering, aggregating, and enriching the data. For example, converting currency values to a common standard or standardizing date formats.

- Data Loading: Load the transformed data into the data warehouse. This can be done using various methods, such as batch loading or real-time streaming.

- Schema Definition: Define the schema for the data warehouse, including the tables, columns, and data types. This ensures data integrity and facilitates efficient querying.

- Data Governance: Implement data governance policies and procedures to ensure data quality, security, and compliance. This includes data validation, access control, and data lineage tracking.

- Monitoring and Optimization: Continuously monitor the data ingestion pipeline and optimize its performance. This may involve tuning queries, optimizing data storage, and scaling resources.
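A minimal Python sketch of the schema definition, validation, loading, and monitoring steps follows, again using an in-memory SQLite database as a stand-in warehouse. The table, the `validate` rules, and the metrics dictionary are illustrative assumptions. Real warehouses provide their own bulk-loading paths (for example Redshift's `COPY`, Snowflake's `COPY INTO`, or BigQuery load jobs), and rejected records would usually be routed somewhere for inspection rather than silently dropped.

```python
import sqlite3
from datetime import date

# Schema definition: declare the target table and its types up front.
SCHEMA = """
CREATE TABLE IF NOT EXISTS orders (
    order_id     INTEGER PRIMARY KEY,
    customer_id  INTEGER NOT NULL,
    order_date   TEXT    NOT NULL,   -- ISO 'YYYY-MM-DD'
    total_amount REAL    NOT NULL CHECK (total_amount >= 0)
)
"""
REQUIRED = ("order_id", "customer_id", "order_date", "total_amount")

def validate(record):
    """Governance: reject records that are incomplete or malformed."""
    if any(record.get(field) is None for field in REQUIRED):
        return False
    try:
        date.fromisoformat(record["order_date"])
        return float(record["total_amount"]) >= 0
    except (TypeError, ValueError):
        return False

def ingest_batch(conn, records):
    """Load valid records in one transaction and return simple pipeline metrics."""
    valid = [r for r in records if validate(r)]
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO orders VALUES "
            "(:order_id, :customer_id, :order_date, :total_amount)",
            valid,
        )
    return {"received": len(records), "loaded": len(valid),
            "rejected": len(records) - len(valid)}

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
batch = [
    {"order_id": 1, "customer_id": 10, "order_date": "2024-01-05", "total_amount": 40.0},
    {"order_id": 2, "customer_id": 11, "order_date": "05/01/2024", "total_amount": 15.5},    # bad date
    {"order_id": 3, "customer_id": None, "order_date": "2024-01-07", "total_amount": 46.5},  # missing key
]
metrics = ingest_batch(conn, batch)
print(metrics)  # {'received': 3, 'loaded': 1, 'rejected': 2}
```

Emitting counts such as `rejected` for every batch gives the monitoring step something concrete to alert on, for example a sudden rise in rejected records after a source service changes its date format.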

Aggregation Patterns

The aggregation of data in microservices architectures often necessitates the adoption of specific design patterns to manage complexity, improve performance, and maintain service autonomy. These patterns address the challenges of retrieving and combining data from disparate services, providing a unified view for client applications. Two prominent patterns are the API Gateway and the Backend-for-Frontend (BFF), each offering distinct advantages and trade-offs.

API Gateway Pattern for Data Aggregation

The API Gateway pattern acts as a central point of entry for all client requests, abstracting the internal microservices architecture from the external world. This approach provides a layer of indirection, enabling data aggregation, request routing, authentication, and other cross-cutting concerns to be handled in a centralized location.

The primary function of an API Gateway in data aggregation involves orchestrating requests to multiple microservices and combining the responses into a single, coherent response for the client.

This process typically involves:

- Request Routing: Directing incoming requests to the appropriate microservices based on the request’s path, headers, or other criteria.

- Request Transformation: Modifying requests before forwarding them to microservices, such as adding headers, transforming data formats, or enriching the request with additional information.

- Data Aggregation: Collecting responses from multiple microservices, potentially performing data transformations, and combining the data into a single response. This may involve simple concatenation, complex data merging, or the application of business logic to derive a new result.

- Response Transformation: Modifying the aggregated response before sending it back to the client, such as changing data formats or filtering sensitive information.

- Authentication and Authorization: Handling user authentication and authorization, ensuring that clients have the necessary permissions to access the requested resources.
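The aggregation step can be illustrated with a small Python `asyncio` sketch of a gateway handler. The three service coroutines, their fields, and the `get_product_page` handler are hypothetical stand-ins for HTTP calls to real microservices; products such as Kong or Amazon API Gateway are mostly configured rather than coded this way, so this shows the logic only. The handler fans out concurrently, bounds the total wait with a timeout, merges the results, and strips an internal field as a response transformation.

```python
import asyncio

# Hypothetical downstream services; in a real gateway these would be HTTP
# calls to addresses obtained through service discovery.
async def product_service(product_id: str) -> dict:
    await asyncio.sleep(0.01)  # simulate network latency
    return {"id": product_id, "name": "Desk Lamp", "cost_price": 12.0}

async def review_service(product_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"reviews": [{"stars": 5}, {"stars": 3}]}

async def inventory_service(product_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"in_stock": 7}

async def get_product_page(product_id: str, timeout: float = 0.5) -> dict:
    """Fan out to all services concurrently and merge their responses."""
    product, reviews, inventory = await asyncio.wait_for(
        asyncio.gather(
            product_service(product_id),
            review_service(product_id),
            inventory_service(product_id),
        ),
        timeout=timeout,  # one slow service cannot stall the whole response
    )
    stars = [r["stars"] for r in reviews["reviews"]]
    aggregated = {
        **product,
        "average_rating": sum(stars) / len(stars) if stars else None,
        "in_stock": inventory["in_stock"],
    }
    # Response transformation: remove an internal field before returning.
    aggregated.pop("cost_price", None)
    return aggregated

print(asyncio.run(get_product_page("p-42")))
# {'id': 'p-42', 'name': 'Desk Lamp', 'average_rating': 4.0, 'in_stock': 7}
```

Because `asyncio.gather` raises as soon as one call fails, a gateway that prefers partial responses could pass `return_exceptions=True` and fill in defaults for the services that failed.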

Examples of API Gateway implementations include:

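- Netflix Zuul: A widely adopted open-source API gateway developed by Netflix. Zuul provides features like dynamic routing, monitoring, resilience, and security. It is written in Java and was long integrated into the Spring Cloud ecosystem through Spring Cloud Netflix, although Spring Cloud has since dropped its Zuul support in favor of Spring Cloud Gateway.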

- Amazon API Gateway: A fully managed service offered by Amazon Web Services (AWS). It allows developers to create, publish, maintain, monitor, and secure APIs at scale. It supports various API types, including REST, WebSocket, and HTTP APIs.

- Kong: An open-source API gateway and service mesh platform. Kong is designed for high performance and scalability, offering features like traffic control, authentication, and logging. It supports plugins for extending its functionality and can be deployed on various infrastructure platforms.

- Apigee: A comprehensive API management platform offered by Google Cloud. Apigee provides tools for API design, security, analytics, and monetization. It supports various API protocols and integrates with other Google Cloud services.

A detailed diagram illustrating the API Gateway pattern with service interactions would depict the following:

| Component | Description |
|---|---|
| Client Application | Initiates requests to the API Gateway. This can be a web application, mobile app, or another service. |
| API Gateway | The central entry point for all client requests. It receives requests, routes them to the appropriate microservices, aggregates responses, and returns a consolidated response to the client. The API Gateway also handles authentication, authorization, and other cross-cutting concerns. |
| Microservice A | A microservice responsible for handling a specific set of business functionalities, such as retrieving product details. |
| Microservice B | A microservice responsible for another set of business functionalities, such as retrieving customer reviews. |
| Microservice C | A microservice responsible for yet another set of business functionalities, such as retrieving inventory levels. |
| Service Discovery | A component that allows the API Gateway to locate and communicate with microservices. It provides a registry of service instances and their network locations. |

Diagram Description:

1. Client Request: The client application sends a request to the API Gateway.

2. Authentication and Authorization: The API Gateway authenticates the client and authorizes the request based on the client's credentials and permissions.

3. Request Routing: The API Gateway routes the request to the appropriate microservices based on the request's path, headers, or other criteria.

4. Request to Microservices: The API Gateway forwards the request to Microservice A, Microservice B, and Microservice C.

5. Microservice Responses: Microservices A, B, and C process the requests and return their respective responses to the API Gateway.

6. Data Aggregation: The API Gateway aggregates the responses from the microservices, potentially transforming the data and combining it into a single response. This might involve joining data, filtering information, or applying business rules.

7. Response to Client: The API Gateway returns the aggregated response to the client application.

8. Service Discovery Interaction: The API Gateway uses service discovery to locate the network addresses of the microservices. This is an ongoing process, especially in dynamic environments where microservice instances may come and go.
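The routing and service-discovery interaction can be sketched as an in-memory registry with round-robin load balancing. The `ServiceRegistry` class, the `ROUTES` table, and the addresses are hypothetical. Real deployments typically rely on a registry such as Consul or Eureka, or on Kubernetes DNS, and must also handle health checks, which this sketch omits.

```python
import itertools

class ServiceRegistry:
    """Toy in-memory registry mapping service names to live instance addresses."""

    def __init__(self) -> None:
        self._instances: dict[str, list[str]] = {}
        self._cursors = {}  # service name -> itertools.cycle over its instances

    def _reset_cursor(self, service: str) -> None:
        # Rebuild the round-robin cursor whenever membership changes.
        self._cursors[service] = itertools.cycle(list(self._instances[service]))

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, []).append(address)
        self._reset_cursor(service)

    def deregister(self, service: str, address: str) -> None:
        self._instances[service].remove(address)
        self._reset_cursor(service)

    def resolve(self, service: str) -> str:
        """Return the next live instance for a service, round-robin."""
        if not self._instances.get(service):
            raise LookupError(f"no live instances for {service!r}")
        return next(self._cursors[service])

# Hypothetical gateway routing table: path prefix -> service name.
ROUTES = {"/products": "catalog", "/reviews": "reviews"}

def route(registry: ServiceRegistry, path: str) -> str:
    """Pick a backend URL for an incoming request path."""
    for prefix, service in ROUTES.items():
        if path.startswith(prefix):
            return f"http://{registry.resolve(service)}{path}"
    raise LookupError(f"no route for {path!r}")

registry = ServiceRegistry()
registry.register("catalog", "10.0.0.5:8080")
registry.register("catalog", "10.0.0.6:8080")
print(route(registry, "/products/42"))  # http://10.0.0.5:8080/products/42
print(route(registry, "/products/42"))  # http://10.0.0.6:8080/products/42
```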


Beyond the API Gateway, other patterns address data aggregation with different trade-offs in complexity, performance, and data consistency.

This section examines two of them: the Sidecar pattern and the Data Mesh.

Sidecar Pattern in Data Aggregation

The Sidecar pattern, in the context of data aggregation, involves deploying a separate, dedicated process or container alongside each microservice instance (for example, in the same Kubernetes pod). This sidecar acts as a proxy, intermediary, or aggregator and handles the data aggregation tasks for its paired microservice. This approach decouples the aggregation logic from the core microservice functionality, promoting modularity and maintainability.

The Sidecar pattern offers several benefits:

- Decoupling Concerns: The core microservice remains focused on its primary business logic, while the sidecar handles data aggregation, transformation, and enrichment. This separation simplifies development and reduces the risk of affecting the core service’s performance or stability.

- Technology Flexibility: Sidecars can be implemented using different technologies than the core microservice, allowing for specialized tools optimized for data processing, such as a Kafka client or a stream-processing library, without impacting the core service's technology stack.

- Improved Performance: Sidecars can cache frequently accessed data or pre-aggregate results, reducing the load on the core microservice and improving overall response times. For example, a sidecar could cache user profile data, preventing the need to query the user service repeatedly for each request.

- Enhanced Observability: Sidecars can provide detailed metrics and logging related to data aggregation, offering valuable insights into performance bottlenecks and data quality issues. This facilitates proactive monitoring and troubleshooting.
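The caching benefit described above can be sketched as a small time-to-live (TTL) cache placed in front of an upstream call. `CachingSidecar` and `fetch_user_profile` are hypothetical names; a real sidecar would run as a separate process or container and intercept network calls rather than wrap a Python function. The hit and miss counters illustrate the observability point, since they are natural candidates for exported metrics.

```python
import time
from typing import Callable

class CachingSidecar:
    """Sketch of a sidecar-style TTL cache in front of an upstream service."""

    def __init__(self, fetch: Callable[[str], dict], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch      # call into the upstream service (hypothetical)
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested
        self._cache: dict[str, tuple[float, dict]] = {}
        self.hits = 0            # counters suitable for export as metrics
        self.misses = 0

    def get(self, key: str) -> dict:
        now = self._clock()
        entry = self._cache.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            self.hits += 1
            return entry[1]
        # Miss or expired entry: call upstream and refresh the cache.
        self.misses += 1
        value = self._fetch(key)
        self._cache[key] = (now, value)
        return value

calls: list[str] = []

def fetch_user_profile(user_id: str) -> dict:
    """Hypothetical stand-in for a request to the user profile service."""
    calls.append(user_id)
    return {"id": user_id, "tier": "gold"}

sidecar = CachingSidecar(fetch_user_profile, ttl_seconds=30)
sidecar.get("u-1")
sidecar.get("u-1")
print(len(calls), sidecar.hits, sidecar.misses)  # 1 1 1
```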

Consider a scenario where an e-commerce platform uses microservices for product catalogs, user profiles, and order management. A sidecar could be deployed alongside the product catalog service. This sidecar could be responsible for aggregating product information, including reviews, ratings, and pricing from other microservices. The sidecar could then cache this aggregated data, making it available quickly for presentation on the product detail pages.

The architecture would resemble the following:

Simplified Sidecar Architecture Example

- Product Catalog Microservice → Product Catalog Sidecar: data request

- Product Catalog Sidecar → User Profile Microservice → Database: user data

- Product Catalog Sidecar → Order Management Microservice → Database: order data

- Product Catalog Sidecar → API Gateway: aggregated data

In this diagram:

- Three microservices are presented: Product Catalog, User Profile, and Order Management.

- A Sidecar is attached to the Product Catalog microservice.

- Arrows depict data requests and aggregated data flow between services and the Sidecar.

- The User Profile and Order Management microservices each store their data in their own database.

- An API Gateway handles incoming requests and directs them to the appropriate microservice.

Data Mesh and its Application in Microservices

The Data Mesh is a decentralized data architecture that treats data as a product. It addresses the limitations of traditional, centralized data architectures, particularly in complex microservices environments where data is distributed across numerous services. The core principles of Data Mesh include:

- Domain-Oriented Ownership: Data is owned and managed by the teams responsible for the domains from which the data originates. This promotes data quality and understanding, as the domain experts have the most context about their data.

- Data as a Product: Data is treated as a product, with data products being discoverable, addressable, trustworthy, and self-describing. This ensures that data consumers can easily find and use the data they need.

- Self-Serve Data Infrastructure: A platform provides the infrastructure and tools necessary for domain teams to create, manage, and share their data products independently. This includes data pipelines, data catalogs, and governance frameworks.

- Federated Computational Governance: A governance model balances autonomy and standardization, ensuring data interoperability and compliance with organizational policies. This involves establishing common standards, metrics, and security protocols.

Applying Data Mesh in a microservices environment involves several steps:

- Identify Domains: Define the business domains relevant to your organization. Each domain will be responsible for managing its own data products. For example, in an e-commerce platform, domains could include “Product Catalog,” “User Profiles,” and “Orders.”

- Establish Data Product Owners: Assign ownership of each data product to the domain teams. These owners are responsible for the data’s quality, discoverability, and usability.

- Build a Self-Serve Data Platform: Implement a platform that provides tools and infrastructure for data ingestion, transformation, storage, and access. This platform should include features like data catalogs, data pipelines, and data governance tools.

- Define Data Contracts: Establish contracts for data exchange between domains, ensuring that data products are compatible and can be easily integrated.

- Implement Federated Governance: Define and enforce governance policies to ensure data quality, security, and compliance. This includes defining data standards, access controls, and data lineage tracking.
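A data contract can be made executable so that producers and consumers check records against the same definition. The sketch below assumes a hypothetical `orders` data product and hand-rolled `FieldSpec` and `DataContract` classes; in practice, contracts are often expressed in a schema language such as JSON Schema, Avro, or Protocol Buffers, usually backed by a schema registry.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FieldSpec:
    name: str
    type_: type
    required: bool = True

@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract published by a domain team for one data product."""
    product: str
    version: str
    fields: tuple[FieldSpec, ...]

    def violations(self, record: dict) -> list[str]:
        """List every way a record breaks the contract (empty if it conforms)."""
        problems = []
        for spec in self.fields:
            if spec.name not in record:
                if spec.required:
                    problems.append(f"missing required field {spec.name!r}")
                continue
            # Note: isinstance(True, int) is True, so booleans pass int checks.
            if not isinstance(record[spec.name], spec.type_):
                problems.append(
                    f"{spec.name!r} should be {spec.type_.__name__}, "
                    f"got {type(record[spec.name]).__name__}"
                )
        return problems

ORDERS_V1 = DataContract(
    product="orders",
    version="1.0",
    fields=(
        FieldSpec("order_id", str),
        FieldSpec("customer_id", str),
        FieldSpec("amount_cents", int),
        FieldSpec("coupon_code", str, required=False),
    ),
)

print(ORDERS_V1.violations({"order_id": "o-1", "customer_id": "c-9", "amount_cents": 1999}))  # []
print(ORDERS_V1.violations({"order_id": "o-2", "amount_cents": "19.99"}))
```

Versioning the contract (`version="1.0"`) gives consumers a way to detect breaking changes before they reach downstream aggregation.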

Simplified Architecture for Data Mesh Implementation

A simplified architecture for a Data Mesh implementation in a microservices environment would involve the following components:

Simplified Data Mesh Architecture Example

- Domain: Product Catalog: Microservice → Data Product (Product Catalog), managed with Data Pipelines, a Data Catalog, and Governance → Data Lake/Warehouse

- Domain: User Profiles: Microservice → Data Product (User Profiles), managed with Data Pipelines, a Data Catalog, and Governance → Data Lake/Warehouse

- Domain: Orders: Microservice → Data Product (Orders), managed with Data Pipelines, a Data Catalog, and Governance → Data Lake/Warehouse

- Data Lake/Warehouse (all domains) → Data Consumers

In this diagram:

- Three distinct domains are depicted: Product Catalog, User Profiles, and Orders.

- Each domain owns its Microservice and Data Product.

- Each domain has Data Pipelines, a Data Catalog, and Governance tools to manage its Data Product.

- Data Products are ingested into a Data Lake or Data Warehouse for each domain.

- Data Consumers can access the data from the Data Lake/Warehouse.

The Data Mesh architecture promotes a more agile and scalable approach to data aggregation by empowering domain teams to manage their data as products. This can improve data quality, increase data discoverability, and shorten the time to insight. Companies such as Netflix and Spotify are often cited as examples of decentralized, domain-oriented data ownership applied to the massive datasets generated by their microservices architectures.

Such organizations have reported improvements in data accessibility and in their ability to derive business intelligence from their data.

Monitoring and Troubleshooting Data Aggregation Pipelines

Monitoring and troubleshooting are crucial for maintaining the reliability, performance, and data integrity of data aggregation pipelines in microservices architectures. A robust monitoring strategy enables proactive identification of issues, facilitates rapid resolution, and ensures the continuous delivery of accurate aggregated data. Effective troubleshooting minimizes downtime, prevents data inconsistencies, and supports the overall health of the microservices ecosystem.

Importance of Monitoring Data Aggregation Processes

Monitoring data aggregation processes is vital for several reasons, ensuring the system’s operational efficiency and data quality. It provides insights into the performance of the aggregation pipeline, allowing for the identification of bottlenecks and inefficiencies. Regular monitoring also aids in detecting data inconsistencies, errors, and anomalies that could compromise the accuracy of aggregated data. Moreover, monitoring facilitates proactive alerting, enabling teams to respond promptly to issues before they impact end-users or downstream systems.

Finally, monitoring supports capacity planning and resource allocation by providing data on resource utilization and performance trends.

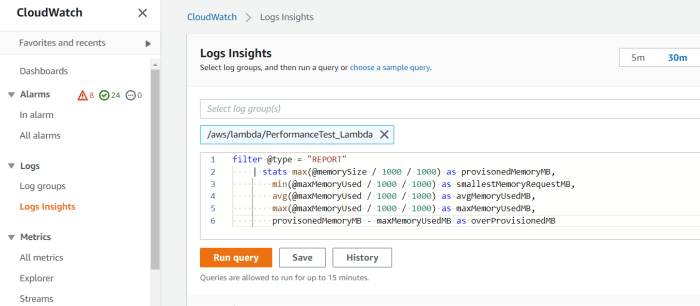

Examples of Monitoring Tools

Several tools are available for monitoring data aggregation pipelines, each offering different features and capabilities. The choice of tool often depends on the specific requirements of the system and the existing infrastructure.

- Prometheus: Prometheus is a popular open-source monitoring system designed for collecting and processing metrics. It excels at monitoring time-series data, making it suitable for tracking the performance of aggregation pipelines. Prometheus uses a pull-based model, where it periodically scrapes metrics from configured targets. These targets can be the microservices themselves, middleware components, or dedicated exporters that expose metrics in a Prometheus-compatible format.

Prometheus provides a powerful query language (PromQL) for analyzing metrics and creating alerts. Visualization is typically done using Grafana, which can be integrated with Prometheus to create dashboards. For instance, one could monitor the latency of API calls within the aggregation service, the number of processed events per second, or the memory usage of the aggregation containers.

- Grafana: Grafana is a data visualization and monitoring tool that integrates with various data sources, including Prometheus, InfluxDB, and Elasticsearch. It allows users to create interactive dashboards that display metrics, logs, and alerts in a visually appealing and customizable format. Grafana supports a wide range of graph types, gauges, and tables, enabling users to gain insights into the performance and health of their systems.

For data aggregation, Grafana can be used to visualize the performance of the aggregation pipeline, track key metrics, and monitor the overall health of the system.

- Jaeger/Zipkin (Distributed Tracing): These tools provide distributed tracing capabilities, which are crucial for understanding the flow of requests through a microservices architecture. Distributed tracing helps identify performance bottlenecks, pinpoint the source of errors, and understand the relationships between different microservices involved in the data aggregation process. By tracing requests across multiple services, developers can gain insights into the time spent in each service and the overall latency of the aggregation pipeline.

For example, a trace might reveal that a specific microservice is experiencing high latency, which is impacting the overall aggregation time.

- ELK Stack (Elasticsearch, Logstash, Kibana): The ELK Stack is a powerful combination of tools for log management and analysis. Elasticsearch is a search and analytics engine that stores and indexes logs. Logstash is a data processing pipeline that collects, parses, and transforms logs. Kibana is a visualization and dashboarding tool that allows users to analyze and visualize log data. In the context of data aggregation, the ELK Stack can be used to collect, process, and analyze logs from microservices and aggregation components.

This allows developers to identify errors, track performance, and gain insights into the behavior of the aggregation pipeline.
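To make Prometheus's pull model concrete, the following sketch renders a labelled counter in the Prometheus text exposition format, which is what a scraped `/metrics` endpoint returns. The metric name and label are hypothetical, and the class is a dependency-free illustration only; real services would normally use an official client library such as `prometheus_client` for Python, which also serves the endpoint over HTTP.

```python
from collections import defaultdict

class LabelledCounter:
    """Minimal counter with one label, rendered in Prometheus text format."""

    def __init__(self, name: str, help_text: str, label: str) -> None:
        self.name = name
        self.help_text = help_text
        self.label = label
        self._values: dict[str, float] = defaultdict(float)

    def inc(self, label_value: str, amount: float = 1.0) -> None:
        # Prometheus counters are monotonic: they may only go up.
        if amount < 0:
            raise ValueError("counter increments must be non-negative")
        self._values[label_value] += amount

    def render(self) -> str:
        lines = [f"# HELP {self.name} {self.help_text}",
                 f"# TYPE {self.name} counter"]
        # Label values are not escaped here; real clients escape them.
        for value, total in sorted(self._values.items()):
            lines.append(f'{self.name}{{{self.label}="{value}"}} {total:g}')
        return "\n".join(lines) + "\n"

events = LabelledCounter("aggregation_events_processed_total",
                         "Events processed by the aggregation service.", "source")
events.inc("orders")
events.inc("orders")
events.inc("reviews")
print(events.render(), end="")
```

Once scraped, a PromQL query such as `sum by (source) (rate(aggregation_events_processed_total[5m]))` would give per-source throughput that Grafana can plot.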

Common Issues and Troubleshooting Steps in a Data Aggregation Pipeline

Data aggregation pipelines can encounter various issues that require careful troubleshooting. These issues can range from performance bottlenecks to data inconsistencies and errors. Effective troubleshooting involves a systematic approach to identify the root cause of the problem and implement appropriate solutions.

- Performance Bottlenecks: Performance bottlenecks can arise from various factors, including slow network connections, inefficient code, or resource constraints. To troubleshoot performance issues, it is important to identify the source of the bottleneck.

  - Monitoring Metrics: Start by monitoring key metrics such as latency, throughput, CPU utilization, and memory usage. Prometheus and Grafana can be used to visualize these metrics and identify performance trends.

  - Profiling Code: Use profiling tools to identify performance hotspots in the code. This can help pinpoint areas where optimization is needed.

  - Network Analysis: Analyze network traffic to identify slow network connections or other network-related issues. Tools like Wireshark can be used for network analysis.

  - Resource Optimization: Optimize resource allocation by increasing the resources allocated to the aggregation service or scaling the service horizontally.

- Data Inconsistencies and Errors: Data inconsistencies and errors can occur due to various reasons, including incorrect data transformations, data corruption, or issues with data sources.

  - Log Analysis: Analyze logs from microservices and aggregation components to identify error messages and data anomalies. The ELK Stack or other log management tools can be used for log analysis.

  - Data Validation: Implement data validation checks to ensure that data is accurate and consistent. This can involve validating data formats, ranges, and relationships.

  - Data Transformation Review: Review data transformation logic to ensure that data is being transformed correctly. This includes verifying that the transformations are accurate and that data is not being lost or corrupted during the transformation process.

  - Data Source Verification: Verify the integrity and accuracy of data sources. This involves checking for data corruption, inconsistencies, and other issues that could impact the aggregation process.

- Connectivity Issues: Connectivity issues can arise due to network outages, misconfigured network settings, or issues with service discovery.

  - Network Monitoring: Monitor network connectivity between microservices and aggregation components. This can involve using ping, traceroute, or other network monitoring tools.

  - Service Discovery Verification: Verify that service discovery is working correctly and that the aggregation service can discover and connect to the necessary microservices.

  - Firewall Rules Review: Review firewall rules to ensure that they are not blocking traffic between microservices and aggregation components.

  - DNS Resolution: Check DNS resolution to ensure that the aggregation service can resolve the hostnames of the microservices.

- Data Loss: Data loss can occur due to various reasons, including failures in data sources, issues with data transformation, or problems with data storage.

  - Implement Redundancy: Implement redundancy in data sources and data storage to minimize the risk of data loss. This can involve using multiple data sources or replicating data across multiple storage locations.

  - Data Backup and Recovery: Implement a data backup and recovery strategy to ensure that data can be recovered in case of data loss. This involves backing up data regularly and testing the recovery process.

  - Monitor Data Integrity: Monitor data integrity by regularly validating data and checking for inconsistencies.

  - Transaction Management: Use transactions so that writes within a single data store are atomic; when a write spans several services or storage locations, use patterns such as Sagas to keep those locations consistent.
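The validation checks mentioned above (formats, ranges, and relationships) can be sketched as a function run over each aggregated batch before it is loaded. The field names, the `o-<digits>` ID format, and `validate_orders` itself are assumptions for illustration. A duplicate-ID check is included because duplicated IDs often point to a replayed or double-loaded batch.

```python
import re

ORDER_ID = re.compile(r"o-\d+")  # hypothetical ID format, matched in full

def validate_orders(orders: list[dict], known_customers: set[str]) -> list[str]:
    """Return validation errors for a batch of aggregated order records."""
    errors: list[str] = []
    seen_ids: set[str] = set()
    for i, order in enumerate(orders):
        oid = str(order.get("order_id", ""))
        # Format check
        if not ORDER_ID.fullmatch(oid):
            errors.append(f"row {i}: malformed order_id {oid!r}")
        # Uniqueness check: duplicates often indicate a replayed batch
        if oid in seen_ids:
            errors.append(f"row {i}: duplicate order_id {oid!r}")
        seen_ids.add(oid)
        # Range check (bool is excluded because it is a subclass of int)
        amount = order.get("amount_cents")
        if not isinstance(amount, int) or isinstance(amount, bool) or amount < 0:
            errors.append(f"row {i}: amount_cents out of range: {amount!r}")
        # Relationship check: every order must reference a known customer
        customer = order.get("customer_id")
        if customer not in known_customers:
            errors.append(f"row {i}: unknown customer_id {customer!r}")
    return errors

batch = [
    {"order_id": "o-1", "customer_id": "c-1", "amount_cents": 2500},
    {"order_id": "o-1", "customer_id": "c-7", "amount_cents": -10},
]
for err in validate_orders(batch, known_customers={"c-1"}):
    print(err)
```

Failing a batch on any error, rather than silently dropping bad rows, keeps the problem visible in logs and alerts instead of turning it into quiet data loss.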

Final Thoughts

In conclusion, the successful aggregation of data from multiple microservices is paramount for unlocking the full potential of a microservices architecture. By understanding the inherent challenges, embracing appropriate strategies, and leveraging the right technologies, organizations can transform scattered data into actionable insights. From ETL processes and real-time versus batch processing to API gateways and data meshes, the approaches discussed provide a comprehensive roadmap for building robust and scalable data aggregation pipelines.

Consistent monitoring and diligent troubleshooting are essential for maintaining the integrity and performance of these critical systems, ultimately empowering data-driven decision-making and fostering business success in a microservices environment.

Essential FAQs

What are the primary challenges in aggregating data from microservices?

The primary challenges include data scattering across various databases and formats, data heterogeneity, ensuring data consistency, handling real-time data streams, and building scalable and fault-tolerant aggregation pipelines.

What is the role of an API Gateway in data aggregation?

An API Gateway can act as a central point for aggregating data from multiple microservices. It can orchestrate requests to different services, transform the data, and return a consolidated response to the client, simplifying data access and improving performance.

How do you choose between real-time and batch data aggregation?

The choice depends on the use case. Real-time aggregation is suitable for scenarios requiring up-to-the-minute insights (e.g., fraud detection). Batch processing is better for large datasets and less time-sensitive reporting (e.g., monthly sales analysis).

What is a Data Mesh, and how does it relate to data aggregation?

A Data Mesh is a decentralized approach to data management where data is treated as a product, owned by the teams that produce it. In a microservices environment, it promotes data discoverability, accessibility, and interoperability, facilitating effective data aggregation across different domains.

How do you ensure data consistency during aggregation?

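Data consistency can be maintained through techniques such as distributed transaction management (e.g., two-phase commit, or the Saga pattern, in which a sequence of local transactions is paired with compensating actions that undo completed steps if a later step fails), data normalization, and careful data transformation during the ETL phase. Eventual consistency models can also be used in scenarios that do not require strict consistency.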
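The Saga pattern can be sketched as an orchestrator that runs a sequence of local steps and, if one fails, runs the compensating actions of the already-completed steps in reverse order. The step names and the in-memory `log` are hypothetical. In a real system each action would be a call to a separate service, and compensations would need to be idempotent and retried on failure. The result is eventual, not atomic, consistency.

```python
from typing import Callable

# (name, action, compensation): each action is a local transaction in one service.
Step = tuple[str, Callable[[], None], Callable[[], None]]

def run_saga(steps: list[Step]) -> bool:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    completed: list[Step] = []
    for step in steps:
        _, action, _ = step
        try:
            action()
        except Exception:
            for _, _, compensate in reversed(completed):
                compensate()
            return False
        completed.append(step)
    return True

log: list[str] = []

def charge_card() -> None:
    raise RuntimeError("payment declined")  # simulated failure

ok = run_saga([
    ("reserve_stock", lambda: log.append("reserve"), lambda: log.append("release")),
    ("charge_card", charge_card, lambda: log.append("refund")),
    ("create_order", lambda: log.append("order"), lambda: log.append("cancel")),
])
print(ok, log)  # False ['reserve', 'release']
```

Only the stock reservation is compensated: the card charge never completed, so there is nothing to refund, and the order step never ran.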