Understanding the financial implications of your software workload is crucial for effective resource management and budget planning. Analyzing the cost of a specific workload involves a deep dive into its components, resource consumption, and the pricing models of the services it utilizes. This process enables you to identify potential cost-saving opportunities and optimize your infrastructure for efficiency.

This guide provides a structured approach to dissecting the expenses associated with a particular workload. We’ll explore key aspects such as defining the workload, identifying cost drivers, measuring resource consumption, and implementing cost optimization strategies. By following this comprehensive framework, you can gain valuable insights into your workload’s financial footprint and make informed decisions to reduce expenses without compromising performance.

Defining the Workload

Understanding and precisely defining the workload is the cornerstone of any effective cost analysis. A poorly defined workload will inevitably lead to inaccurate cost estimations and flawed optimization strategies. This initial step sets the stage for the entire analysis, influencing every subsequent decision and the overall success of the cost management effort.

Scope and Components

Establishing the boundaries of the workload is critical. This involves clearly specifying what is included and, equally important, what is excluded from the analysis. It prevents scope creep and ensures that the cost analysis remains focused and relevant. This clarity also aids in accurately allocating costs and identifying potential areas for optimization.

A software workload typically comprises a variety of interconnected components. Understanding these components is essential for a comprehensive cost analysis.

- Compute Resources: These encompass the virtual machines (VMs), containers, or physical servers that execute the application code. Cost factors include instance type, size, and utilization. For example, a web application might run on a fleet of EC2 instances (AWS) or VMs in Azure or Google Cloud. The choice of instance type (e.g., general purpose, compute optimized) significantly impacts cost.

- Storage: This component includes the various storage solutions used to store data, such as object storage (e.g., S3, Azure Blob Storage), block storage (e.g., EBS, Azure Disks), and database storage. Costs depend on storage capacity, performance tier, and data access patterns. Consider a database storing user profiles; the storage cost will vary depending on the chosen database service and storage capacity.

- Networking: This includes all network-related costs, such as data transfer, bandwidth, and inter-region traffic. Costs can fluctuate based on the volume of data transferred and the geographical locations involved. A content delivery network (CDN) can significantly impact networking costs.

- Databases: Databases store and manage the application’s data. Costs depend on the database type (e.g., relational, NoSQL), instance size, storage, and read/write operations. For example, a large e-commerce platform will likely use a database to manage product catalogs, user accounts, and order information.

- Application Services: These are the services that provide specific functionalities for the application, such as message queues, caching services, and monitoring tools. Costs depend on the service used and the volume of data processed. For instance, a message queue like RabbitMQ or AWS SQS will incur costs based on the number of messages sent and received.

- Monitoring and Logging: These components are essential for observing the workload’s behavior and performance. Costs are related to the volume of logs generated and the frequency of monitoring data.

- Operating System (OS): The operating system is the foundation upon which the workload runs. Costs associated with the OS can include licensing fees, support, and maintenance.

- Security Services: These encompass services like firewalls, intrusion detection systems, and identity and access management (IAM) solutions. Security costs depend on the chosen security services and the level of protection required.

Identifying Workload Boundaries

Clearly defining the boundaries of the workload is critical to avoid inaccurate cost estimations. This involves determining what resources and services are directly associated with the workload and excluding those that are not.

- Application Scope: Define the specific application or set of applications the analysis will cover. Consider a web application serving user requests. The scope would include all the services required to handle those requests.

- Resource Allocation: Identify all the resources used by the application, including compute instances, storage, networking, and databases.

- Dependencies: Document any dependencies on external services or components. If the application relies on a third-party API, factor in the cost of using that API.

- Data Flow: Map the data flow within the application to understand how data moves between different components and services.

- Service Level Agreements (SLAs): Review any SLAs associated with the workload, as they may influence the choice of resources and services, thus affecting costs.

- Cost Allocation Tags: Utilize cost allocation tags to categorize and track the costs associated with the workload. This allows for granular cost analysis and optimization. For example, tag resources with application names or environment identifiers (e.g., “production,” “staging”).

- Example: Consider an e-commerce platform. The workload boundaries would include the web servers, database servers, caching services, and CDN used to deliver the platform’s content and handle user transactions. The analysis would exclude any internal infrastructure or services not directly involved in the platform’s operation.
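As a rough illustration of how cost allocation tags support boundary decisions, the sketch below sums costs per tag value. The line items, field names, and tag keys are hypothetical and do not follow any provider's billing export format.

```python
from collections import defaultdict

# Hypothetical billing line items; field names and values are illustrative only.
line_items = [
    {"resource": "web-1", "cost": 120.0, "tags": {"app": "shop", "env": "production"}},
    {"resource": "web-2", "cost": 120.0, "tags": {"app": "shop", "env": "production"}},
    {"resource": "db-1", "cost": 300.0, "tags": {"app": "shop", "env": "production"}},
    {"resource": "web-stg", "cost": 40.0, "tags": {"app": "shop", "env": "staging"}},
    {"resource": "build-agent", "cost": 75.0, "tags": {}},  # untagged resource
]


def cost_by_tag(items, key):
    """Sum cost per value of one tag key; items without the tag go to 'untagged'."""
    totals = defaultdict(float)
    for item in items:
        totals[item["tags"].get(key, "untagged")] += item["cost"]
    return dict(totals)


env_totals = cost_by_tag(line_items, "env")
for env, total in sorted(env_totals.items()):
    print(f"{env}: ${total:.2f}")
```

A large "untagged" bucket is usually a sign that the workload boundary is not yet fully captured by the tagging scheme.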

Identifying Cost Drivers

Understanding the factors that contribute to the cost of a workload is crucial for effective cost management. This involves identifying the specific resources consumed and how their utilization translates into financial expenses. A thorough analysis of cost drivers allows for informed decisions regarding resource allocation, optimization strategies, and ultimately, cost reduction. This section will detail the primary cost drivers and how to categorize them effectively.

Resource Utilization and Cost Implications

Resource utilization directly impacts the cost of a workload. Different resources contribute to the overall expense, and understanding their individual cost implications is essential.

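- CPU Utilization: Central Processing Unit (CPU) usage is a significant cost driver, although usually an indirect one. Most cloud instances are billed for the time they are provisioned, at a rate set by the instance type, regardless of how busy the CPU is. A virtual machine running at 20% CPU utilization for an entire month typically costs the same as an identical one running at 80%, so low utilization represents wasted spend, while sustained high utilization pushes you toward larger or additional instances. Utilization affects the bill more directly on consumption-based services (for example, serverless functions) and on burstable instances that charge for surplus CPU credits.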

- Memory Utilization: The amount of Random Access Memory (RAM) allocated and utilized is another cost factor. Insufficient memory can lead to performance degradation and increased CPU usage (due to swapping), indirectly increasing costs. Over-provisioning memory, on the other hand, leads to wasted resources and unnecessary expenses.

- Storage Utilization: Storage costs are determined by the volume of data stored and the type of storage used (e.g., SSD, HDD, object storage). Factors like data access frequency and data transfer rates can also influence costs. Regularly monitoring storage capacity and data access patterns is important to optimize storage costs. For example, archiving infrequently accessed data to a cheaper storage tier can significantly reduce expenses.

- Network Utilization: Network costs arise from data transfer in and out of the workload’s environment. This includes bandwidth charges and data transfer fees. The volume of data transferred and the location of the workload (e.g., data transfer across regions) affect network costs. Monitoring network traffic patterns and optimizing data transfer strategies can minimize these expenses.

Categorizing Cost Drivers

To effectively analyze and manage costs, it’s helpful to categorize cost drivers. This structured approach enables a more organized and insightful assessment of the workload’s expenses.

One effective categorization structure involves the following groups:

- Compute Costs: This category includes all expenses related to the processing power used by the workload.

  - Instance Costs: Costs associated with the virtual machines or other compute instances used. This includes the instance type, size, and duration of use.

  - CPU Usage: Direct costs related to the amount of CPU time consumed.

  - Memory Usage: Costs linked to the amount of memory allocated and used.

- Storage Costs: This category encompasses all expenses related to data storage.

  - Storage Volume: Costs related to the amount of storage space used.

  - Storage Type: Costs are influenced by the storage type selected (e.g., SSD, HDD, object storage), each with a different cost per gigabyte.

  - Data Transfer Costs: Expenses associated with moving data in and out of storage.

- Network Costs: This category covers expenses related to network traffic.

  - Data Transfer Ingress: Costs for data entering the workload’s environment.

  - Data Transfer Egress: Costs for data leaving the workload’s environment.

  - Network Bandwidth: Costs related to the amount of network bandwidth used.

- Operational Costs: This category includes expenses associated with managing and operating the workload.

  - Monitoring and Logging: Costs for tools and services used for monitoring and logging.

  - Automation Tools: Expenses associated with automation tools used for infrastructure management.

  - Support and Maintenance: Costs related to technical support and system maintenance.

By using this categorization, organizations can gain a clearer understanding of their spending and identify areas for optimization. For instance, if compute costs are high, analyzing instance types and CPU utilization can reveal opportunities for downsizing or optimization. Similarly, if storage costs are excessive, reviewing storage tiering strategies and data retention policies can provide savings.

Resource Consumption Measurement

Understanding how your workload utilizes resources is critical for accurate cost analysis. Measuring resource consumption provides the data needed to correlate workload activity with associated costs. This section details the methods for effectively tracking and quantifying resource usage.

Methods for Tracking Resource Consumption

There are several methods for tracking resource consumption, each with its strengths and weaknesses. Choosing the appropriate method depends on the specific workload, the infrastructure, and the desired level of detail.

- Native Operating System Tools: Operating systems provide built-in tools for monitoring resource usage. These tools often offer real-time metrics and historical data. Examples include `top`, `htop`, `perfmon` (Windows), and `vmstat`. The advantage is that they are readily available and provide basic information. A disadvantage is that they may not provide the granular detail needed for complex workloads or cloud environments.

- Application Performance Monitoring (APM) Tools: APM tools offer deep visibility into application performance and resource consumption. They monitor code-level performance, database queries, and network interactions. They can often identify bottlenecks and correlate resource usage with specific application components. Examples include Dynatrace, New Relic, and AppDynamics. These are particularly useful for understanding how applications are consuming resources and how to optimize them.

- Cloud Provider Monitoring Services: Cloud providers offer their own monitoring services that provide detailed resource consumption metrics for their services. These services typically integrate seamlessly with the cloud platform and offer advanced features like alerting and dashboards. Examples include Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring. The advantage is that they are specifically designed for the cloud environment and provide comprehensive data.

- Infrastructure Monitoring Tools: Infrastructure monitoring tools focus on monitoring the underlying infrastructure, such as servers, networks, and storage. They collect metrics on resource usage, performance, and availability. Examples include Prometheus, Nagios, and Zabbix. They are suitable for monitoring on-premises or hybrid environments.

- Log Analysis: Analyzing application and system logs can provide valuable insights into resource consumption. Logs often contain information about resource usage, errors, and performance issues. Tools like the ELK stack (Elasticsearch, Logstash, Kibana) and Splunk are used for log analysis. This method is useful for understanding the sequence of events that lead to resource consumption.

Procedure for Collecting Data on CPU Usage, Memory Allocation, and I/O Operations

A structured procedure ensures consistent and reliable data collection for CPU usage, memory allocation, and I/O operations. This procedure should be repeatable and adaptable to different workloads and environments.

- Define Metrics: Precisely define the metrics to be collected.

  - CPU Usage: Percentage of CPU utilization, CPU time spent in user mode, and CPU time spent in system mode.

  - Memory Allocation: Total memory used, memory used by specific processes, and memory used for caching.

  - I/O Operations: Disk read/write operations per second, data transferred (bytes), and latency.

- Select Monitoring Tools: Choose appropriate monitoring tools based on the environment and the level of detail required. Cloud provider monitoring services, APM tools, or infrastructure monitoring tools are suitable options.

- Configure Monitoring Agents: Install and configure monitoring agents on the relevant systems. Configure the agents to collect the defined metrics at a specific interval. Ensure the agents have sufficient permissions to access the required data.

- Data Collection Interval: Determine the appropriate data collection interval. Shorter intervals provide more granular data but can increase overhead. Longer intervals reduce overhead but may miss short-lived performance spikes. A typical interval is between 1 minute and 5 minutes.

- Data Storage: Choose a suitable data storage solution for the collected metrics. Time-series databases, such as Prometheus, are well-suited for storing and querying time-series data. Cloud provider monitoring services also offer storage capabilities.

- Data Validation: Regularly validate the collected data to ensure its accuracy. Verify that the metrics are being collected correctly and that the data is consistent. Compare the collected data with other sources of information, such as system logs.

- Data Analysis: Analyze the collected data to identify trends, patterns, and anomalies in resource consumption. Use dashboards and reports to visualize the data and identify areas for optimization.
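Once samples have been collected, the analysis step often starts with a few summary statistics. The following is a minimal sketch, assuming the samples are already available as a plain list of percentages; the sample values and the `summarize` helper are made up for illustration.

```python
import statistics


def summarize(samples):
    """Return average, peak, and nearest-rank 95th percentile of metric samples."""
    ordered = sorted(samples)
    # Nearest-rank percentile: ceil(0.95 * n), computed with integer arithmetic.
    rank = (95 * len(ordered) + 99) // 100
    return {
        "avg": statistics.fmean(ordered),
        "peak": ordered[-1],
        "p95": ordered[rank - 1],
    }


# Hypothetical CPU utilization samples (percent), e.g. one per 5-minute interval.
cpu_samples = [10] * 10 + [20] * 9 + [95]
summary = summarize(cpu_samples)
print(summary)
```

Comparing the 95th percentile with the peak helps separate a single short-lived spike from sustained load, which matters when choosing the collection interval and when sizing resources.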

Using Monitoring Tools to Gather Resource Consumption Metrics

Monitoring tools are essential for collecting and visualizing resource consumption metrics. The specific steps vary depending on the tool used, but the general principles remain the same. The following example demonstrates how to use Amazon CloudWatch to gather metrics.

- Access the CloudWatch Console: Log in to the AWS Management Console and navigate to the CloudWatch service.

- Select the Resource to Monitor: Choose the AWS resource you want to monitor, such as an EC2 instance, an RDS database, or an S3 bucket.

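- View Predefined Metrics: CloudWatch provides a set of predefined metrics for each AWS service. For EC2 instances, these include CPU utilization, disk I/O, and network traffic. Memory utilization and disk space usage are not reported by default; collecting them requires installing the CloudWatch agent on the instance.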

- Create Custom Metrics (Optional): If the predefined metrics do not meet your needs, you can create custom metrics. This involves writing code to send custom metrics to CloudWatch.

- Create Dashboards: Create dashboards to visualize the collected metrics. Dashboards allow you to track resource consumption over time and identify trends.

- Set up Alarms: Configure alarms to be notified when resource consumption exceeds predefined thresholds. Alarms can trigger automated actions, such as scaling up resources or sending notifications.

For example, consider an EC2 instance running a web server. Using CloudWatch, you can monitor CPU utilization and network traffic out of the box, and memory utilization once the CloudWatch agent is installed. If the CPU utilization consistently exceeds 80%, you can set up an alarm to notify you. This helps you understand if the server is overloaded and needs to be scaled. You could also monitor disk I/O to determine if the storage is a bottleneck.

This allows you to identify and address potential performance issues and optimize resource usage. CloudWatch provides valuable data for understanding the workload’s resource consumption and its impact on costs.
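To make the idea of "consistently exceeds 80%" concrete, here is a small local simulation of threshold evaluation over consecutive datapoints. It is a sketch of the general concept only, not CloudWatch's API or its exact evaluation logic; the function name and datapoints are assumptions.

```python
def breaches_threshold(datapoints, threshold, periods):
    """Return True if the most recent `periods` datapoints all exceed `threshold`."""
    if periods <= 0 or len(datapoints) < periods:
        return False
    return all(value > threshold for value in datapoints[-periods:])


# Hypothetical CPU utilization datapoints (percent), oldest first.
cpu = [55, 62, 85, 91, 88]

print(breaches_threshold(cpu, threshold=80, periods=3))
print(breaches_threshold(cpu, threshold=80, periods=4))
```

Requiring several consecutive breaches, rather than a single one, is a common way to avoid being notified about brief spikes.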

Pricing Models and Cost Calculation

Understanding the pricing models employed by cloud providers and other service vendors is crucial for accurately analyzing the cost of a specific workload. These models dictate how resources are charged, impacting the overall expenses. This section will delve into the various pricing structures, provide practical examples of cost calculations, and explore the influence of discounts and pricing tiers on workload costs.

Cloud Provider Pricing Models

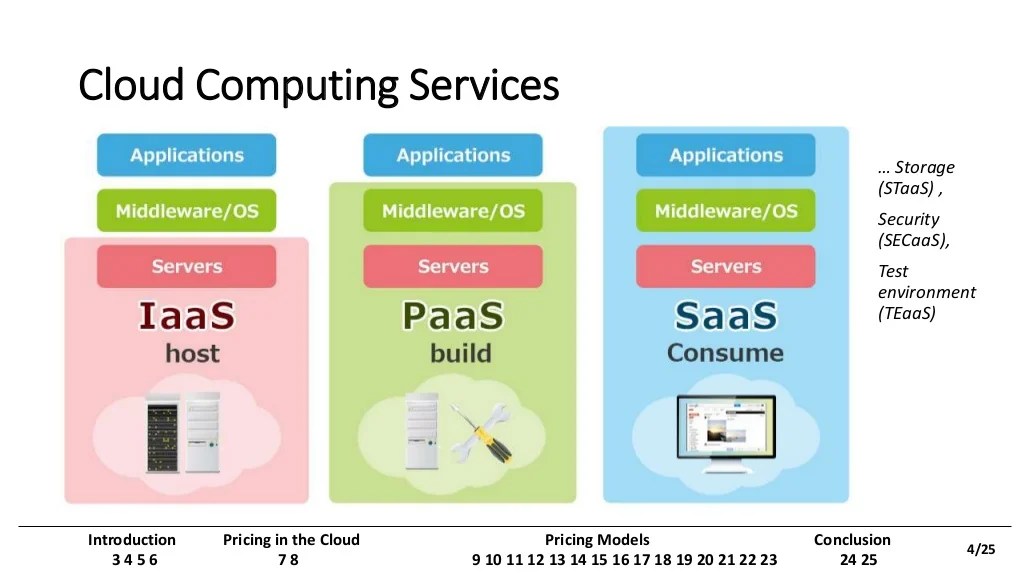

Cloud providers offer a variety of pricing models to cater to different usage patterns and requirements. Each model has its own advantages and disadvantages, influencing the cost-effectiveness of a workload.

- Pay-as-you-go (On-Demand): This model charges for resources consumed on an hourly or per-second basis, without any upfront commitment. It’s suitable for unpredictable workloads and testing environments.

- Reserved Instances/Committed Use Discounts: These models involve committing to using a specific amount of resources for a defined period (e.g., one or three years) in exchange for significant discounts compared to on-demand pricing. This is beneficial for steady-state workloads.

- Spot Instances/Preemptible Instances: Cloud providers offer spare compute capacity at significantly reduced prices. However, these instances can be terminated with short notice if the provider needs the capacity back. This model is best suited for fault-tolerant and interruptible workloads.

- Subscription-Based Pricing: Certain services are priced based on a monthly or annual subscription fee, providing access to a specific set of features or resources. This is common for software-as-a-service (SaaS) offerings.

- Tiered Pricing: Some services use tiered pricing, where the cost per unit decreases as usage volume increases. This incentivizes higher usage and is common for storage and data transfer services.

- Hybrid Pricing: Some providers offer hybrid pricing models, combining different pricing strategies to provide flexibility and cost optimization. For instance, a provider might offer a combination of on-demand and reserved instances.

Cost Calculation Examples

Calculating the cost of a workload requires understanding the pricing model of each resource used. Let’s examine some examples:

- Compute Instance Cost: Suppose you’re running a virtual machine (VM) instance with the following specifications:

  - Instance Type: `t2.medium` (2 vCPUs, 4 GB RAM)

  - Region: US East (N. Virginia)

  - Pricing Model: On-Demand

  - Hourly Rate: $0.0464

  - Monthly Usage: 720 hours (assuming a full month)

  The monthly cost would be calculated as:

  Monthly Cost = Hourly Rate × Monthly Usage = $0.0464/hour × 720 hours = $33.41

- Storage Cost: Consider using Amazon S3 for object storage.

  - Storage Type: Standard

  - Region: US East (N. Virginia)

  - Price per GB: $0.023 per GB/month

  - Storage Used: 100 GB

  The monthly cost is:

  Monthly Cost = Storage Used × Price per GB = 100 GB × $0.023/GB = $2.30

- Data Transfer Cost: Data transfer costs often apply when moving data out of a cloud provider’s network. Let’s say you transfer 100 GB of data out of Amazon S3 in a given month, billed under the following illustrative tiers (actual tier sizes and rates vary by provider and change over time):

  - Region: US East (N. Virginia)

  - First 1 GB/month: Free

  - Next 9.999 GB/month: $0.09 per GB

  - Next 39.999 GB/month: $0.085 per GB

  - Remaining data: $0.08 per GB

  The free allowance and the next two tiers cover 50.998 GB, leaving 49.002 GB billed at the last rate. The data transfer cost calculation would be:

  Cost = (9.999 GB × $0.09) + (39.999 GB × $0.085) + (49.002 GB × $0.08) ≈ $0.90 + $3.40 + $3.92 = $8.22
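The three calculations above can be sketched in a few lines. The rates and tier sizes are the illustrative figures from the examples, not current list prices, and `tiered_cost` is a hypothetical helper that fills each tier in order and bills only the remainder at the final rate.

```python
def tiered_cost(quantity, tiers):
    """Price `quantity` units across ordered tiers.

    `tiers` is a list of (tier_size, unit_price); a tier_size of None means
    the tier is unbounded and absorbs whatever quantity is left.
    """
    remaining = quantity
    total = 0.0
    for size, price in tiers:
        if remaining <= 0:
            break
        portion = remaining if size is None else min(remaining, size)
        total += portion * price
        remaining -= portion
    return total


# Compute: hourly rate times hours in the month.
compute_cost = 0.0464 * 720

# Storage: GB stored times price per GB-month.
storage_cost = 100 * 0.023

# Data transfer out: 100 GB priced across the illustrative tiers.
transfer_tiers = [(1, 0.0), (9.999, 0.09), (39.999, 0.085), (None, 0.08)]
transfer_cost = tiered_cost(100, transfer_tiers)

total = compute_cost + storage_cost + transfer_cost
print(f"compute={compute_cost:.2f} storage={storage_cost:.2f} "
      f"transfer={transfer_cost:.2f} total={total:.2f}")
```

Keeping the tier table as data rather than hard-coding it makes it easy to swap in the provider's current rates.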

Impact of Pricing Tiers and Discounts

Pricing tiers and discounts can significantly affect the overall cost of a workload. Understanding these aspects is crucial for cost optimization.

- Pricing Tiers: As usage increases, tiered pricing can result in lower per-unit costs. For example, a storage service might charge $0.03 per GB for the first 1 TB, $0.02 per GB for the next 4 TB, and $0.01 per GB for anything beyond that. This incentivizes higher storage usage by reducing the average cost per GB.

- Reserved Instances/Committed Use Discounts: These discounts can lead to substantial savings compared to on-demand pricing. However, they require upfront commitments and are most effective for workloads with predictable resource needs. For instance, a reserved instance can reduce the cost of a compute instance by 30-70% compared to on-demand pricing, depending on the term length and instance type.

- Volume Discounts: Cloud providers often offer discounts based on the volume of resources used. This is particularly common for storage, data transfer, and certain compute services.

- Promotional Credits and Free Tiers: Cloud providers often offer promotional credits or free tiers for new customers, which can significantly reduce the initial costs of a workload. Free tiers usually include a limited amount of free usage for certain services.

- Negotiated Pricing: Large organizations can sometimes negotiate custom pricing agreements with cloud providers, potentially achieving further cost reductions based on their specific needs and usage patterns.
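Whether a commitment pays off depends on how many hours the resource actually runs. The sketch below compares on-demand and committed spend for one month; the 40% discount is an assumed value inside the range quoted above, and the function name and 720-hour month are illustrative.

```python
def compare_commitment(on_demand_hourly, discount, expected_hours, period_hours=720):
    """Return (on_demand_cost, committed_cost, break_even_hours) for one period.

    Assumes the commitment is paid for every hour of the period, whether or not
    the resource is running, at the discounted hourly rate.
    """
    on_demand_cost = on_demand_hourly * expected_hours
    committed_cost = on_demand_hourly * (1 - discount) * period_hours
    break_even_hours = (1 - discount) * period_hours
    return on_demand_cost, committed_cost, break_even_hours


# Steady workload running all month vs. a workload that runs 300 hours.
steady = compare_commitment(0.0464, discount=0.40, expected_hours=720)
bursty = compare_commitment(0.0464, discount=0.40, expected_hours=300)

for label, (on_demand, committed, break_even) in (("steady", steady), ("bursty", bursty)):
    print(f"{label}: on-demand ${on_demand:.2f}, committed ${committed:.2f}, "
          f"break-even at {break_even:.0f} h")
```

Under these assumptions the commitment only wins when expected usage exceeds the break-even hours, which is why it suits predictable, steady-state workloads.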

Cost Optimization Strategies

Optimizing the cost of a workload is an ongoing process that involves identifying areas for improvement and implementing strategies to reduce expenses without sacrificing performance. This section details various approaches to achieve cost efficiency, focusing on right-sizing, resource utilization, and other proactive measures. The goal is to ensure the workload operates at the lowest possible cost while maintaining its required level of service.

Right-Sizing Resources

Right-sizing involves matching the resources allocated to a workload with its actual demand. This prevents over-provisioning, which leads to unnecessary costs, and under-provisioning, which can negatively impact performance. Regularly monitoring resource utilization is crucial for identifying opportunities to right-size.

- Analyzing CPU Utilization: Observe CPU usage metrics, such as average and peak utilization, to determine if virtual machines (VMs) or instances are over-provisioned. For example, if a VM consistently runs at 20% CPU utilization, it can likely be downsized to a smaller instance type.

- Evaluating Memory Usage: Monitor memory consumption to identify memory bottlenecks or underutilized memory. If a server consistently uses a small percentage of its allocated memory, consider reducing the memory allocation to save costs.

- Assessing Storage Requirements: Analyze storage usage patterns to optimize storage costs. Identify infrequently accessed data that can be moved to cheaper storage tiers (e.g., from SSD to HDD or object storage).

- Network Throughput Analysis: Monitor network traffic to ensure that network resources are appropriately sized. Identify instances where network bandwidth is underutilized and potentially downsize the network configuration.

- Example: A web application server running on an instance with 8 vCPUs and 32GB of RAM consistently shows average CPU utilization of 15% and memory utilization of 40%. Right-sizing would involve migrating the application to an instance with fewer vCPUs and less RAM, resulting in cost savings without affecting performance.
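A first-pass right-sizing check can be expressed as a projection of current peaks onto a smaller instance. This sketch assumes utilization scales linearly with instance size, which is a crude simplification, and uses hypothetical peak values and an 85% headroom limit chosen only for illustration.

```python
def fits_after_downsize(peak_cpu_pct, peak_mem_pct, scale=0.5, limit_pct=85.0):
    """Project peak utilization onto an instance `scale` times the current size.

    Returns (projected_cpu_pct, projected_mem_pct, fits), where `fits` is True
    when both projections stay at or below `limit_pct`.
    """
    projected_cpu = peak_cpu_pct / scale
    projected_mem = peak_mem_pct / scale
    fits = projected_cpu <= limit_pct and projected_mem <= limit_pct
    return projected_cpu, projected_mem, fits


# Hypothetical peaks for an 8 vCPU / 32 GB server; candidate is 4 vCPU / 16 GB.
print(fits_after_downsize(peak_cpu_pct=30.0, peak_mem_pct=40.0))
print(fits_after_downsize(peak_cpu_pct=30.0, peak_mem_pct=60.0))
```

Using peaks rather than averages keeps the check conservative; a candidate that passes should still be validated under real load before the change is made permanent.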

Implementing Cost-Saving Measures

Implementing cost-saving measures requires a strategic approach that balances cost reduction with performance and availability. This involves choosing the right instance types, leveraging reserved instances or commitments, and utilizing automation.

- Selecting Cost-Effective Instance Types: Choose instance types that are optimized for the workload’s requirements. For example, use burstable instances for workloads with variable resource needs or spot instances for fault-tolerant, non-critical workloads. Consider the trade-offs between cost and performance for each instance type.

- Leveraging Reserved Instances and Commitments: Take advantage of reserved instances, committed use discounts, or savings plans to reduce costs for stable workloads. These options provide significant discounts in exchange for a commitment to use resources for a specific period.

- Automating Resource Management: Automate resource scaling to dynamically adjust resources based on demand. This includes auto-scaling, which automatically adds or removes instances based on pre-defined metrics (e.g., CPU utilization, network traffic), and scheduled scaling, which adjusts resources based on predictable patterns (e.g., peak hours).

- Optimizing Data Storage: Employ data lifecycle management to automatically move data to lower-cost storage tiers based on access frequency. This ensures that frequently accessed data resides on high-performance storage while less frequently accessed data is stored on cheaper options.

- Implementing Cost Allocation and Tagging: Utilize cost allocation tags to track and categorize costs by department, project, or application. This allows for better cost visibility and accountability.

- Example: A company uses auto-scaling to automatically increase the number of web servers during peak hours and decrease them during off-peak hours. This ensures the application can handle traffic spikes without over-provisioning resources, thereby reducing costs.
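The scaling decision itself can be sketched as a proportional rule: scale the instance count by the ratio of the observed metric to its target, then clamp to configured bounds. This is one common approach, shown here under assumed names and limits; real auto-scaling services add cooldowns and other safeguards.

```python
import math


def desired_instances(current, metric_value, target_value, minimum=1, maximum=10):
    """Proportional scaling: keep the per-instance metric near `target_value`."""
    wanted = math.ceil(current * metric_value / target_value)
    return max(minimum, min(maximum, wanted))


# Four servers averaging 90% CPU against a 60% target: scale out.
print(desired_instances(4, metric_value=90, target_value=60, minimum=2, maximum=10))
# Four servers averaging 30% CPU against a 60% target: scale in.
print(desired_instances(4, metric_value=30, target_value=60, minimum=2, maximum=10))
```

The minimum bound protects availability during quiet periods, while the maximum bound caps spend if the metric misbehaves.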

Monitoring and Continuous Improvement

Continuous monitoring and improvement are essential for sustaining cost optimization efforts. Regularly reviewing resource utilization, analyzing cost trends, and making adjustments as needed ensure that the workload remains cost-efficient over time.

- Establishing Monitoring Metrics: Define key performance indicators (KPIs) to track the effectiveness of cost optimization strategies. These metrics include cost per transaction, resource utilization rates, and overall cost savings.

- Utilizing Cost Management Tools: Employ cloud provider-specific or third-party cost management tools to monitor spending, identify cost anomalies, and receive recommendations for optimization. These tools provide dashboards, reports, and alerts to help manage costs proactively.

- Regular Cost Reviews: Conduct regular cost reviews to analyze spending patterns, identify areas for improvement, and validate the effectiveness of implemented cost-saving measures. These reviews should involve cross-functional teams, including engineering, finance, and operations.

- Iterative Optimization: Approach cost optimization as an iterative process. Continuously experiment with different optimization strategies, monitor the results, and refine the approach based on the findings. This ensures that the workload adapts to changing demands and technologies.

- Example: A company implements a monthly cost review process. During the review, they identify that a specific application’s storage costs have increased significantly. After investigation, they discover that infrequently accessed data is not being moved to a cheaper storage tier. They then implement a data lifecycle management policy to automate this process, resulting in cost savings in the following months.
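A unit-cost KPI such as cost per transaction is straightforward to compute during a review. The figures below are invented for illustration; the point is that total cost and unit cost can move in opposite directions.

```python
def cost_per_thousand(cost, transactions):
    """Cost per 1,000 transactions; rejects non-positive transaction counts."""
    if transactions <= 0:
        raise ValueError("transactions must be positive")
    return cost / transactions * 1000


# Hypothetical monthly totals.
months = [
    {"month": "January", "cost": 2500.0, "transactions": 1_000_000},
    {"month": "February", "cost": 2600.0, "transactions": 1_300_000},
]

unit_costs = {m["month"]: cost_per_thousand(m["cost"], m["transactions"]) for m in months}
for month, value in unit_costs.items():
    print(f"{month}: ${value:.2f} per 1,000 transactions")
```

In this made-up data, spending rises from January to February while the cost per 1,000 transactions falls, which a review based on total cost alone would miss.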

Cost Reporting and Visualization

Effectively presenting and visualizing workload cost data is crucial for understanding spending patterns, identifying inefficiencies, and making informed decisions about resource allocation. This section details how to design a clear and actionable reporting format and leverage visualization techniques to drive cost optimization.

Designing an Easy-to-Understand Reporting Format

A well-designed report should be accessible to both technical and non-technical stakeholders. It should provide a concise overview of costs, highlighting key trends and areas of concern. The primary goal is to ensure that the cost data is readily interpretable and actionable.

To achieve this, consider the following:

- Use a clear and consistent layout: The report should follow a logical structure, with consistent headings, formatting, and terminology.

- Prioritize key metrics: Focus on the most important cost drivers and performance indicators, such as total cost, cost per unit of work, and cost trends over time.

- Provide context: Include explanations of any significant changes in cost, along with the reasons behind them.

- Use clear and concise language: Avoid technical jargon and use plain language that is easy to understand.

- Include actionable insights: The report should provide recommendations for cost optimization based on the data analysis.

A key element of a clear report is a well-structured table. The following four-column table shows expenses over time, broken down by workload component. Each percentage is the component’s share of that month’s total, rounded to the nearest whole number.

| Month | Workload Component | Cost ($) | Percentage of Total Cost (%) |
|---|---|---|---|
| January | Compute | 1000 | 40 |
| January | Storage | 500 | 20 |
| January | Network | 250 | 10 |
| January | Other | 750 | 30 |
| February | Compute | 1100 | 42 |
| February | Storage | 550 | 21 |
| February | Network | 275 | 11 |
| February | Other | 675 | 26 |
| March | Compute | 1200 | 48 |
| March | Storage | 600 | 24 |
| March | Network | 300 | 12 |
| March | Other | 400 | 16 |

This table is structured to be easily readable and provides a breakdown of costs by month and workload component. The “Percentage of Total Cost” column allows for quick identification of the most significant cost drivers.
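A percentage-of-total column like this is best derived from the cost figures rather than typed by hand, so that each month's shares add up. A minimal sketch, using sample monthly costs per component and a hypothetical `shares` helper:

```python
# Sample monthly costs per workload component, in dollars.
costs = {
    "January": {"Compute": 1000, "Storage": 500, "Network": 250, "Other": 750},
    "February": {"Compute": 1100, "Storage": 550, "Network": 275, "Other": 675},
    "March": {"Compute": 1200, "Storage": 600, "Network": 300, "Other": 400},
}


def shares(components):
    """Return each component's share of the month's total, in percent (1 decimal)."""
    total = sum(components.values())
    return {name: round(100 * cost / total, 1) for name, cost in components.items()}


report = {month: shares(components) for month, components in costs.items()}

for month, month_shares in report.items():
    for name, pct in month_shares.items():
        print(f"{month:<9} {name:<8} {costs[month][name]:>6} {pct:>6.1f}%")
```

Because every share is computed against that month's own total, the percentages stay consistent even when the total changes from month to month.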

Visualizing Cost Trends and Identifying Areas for Improvement

Visualizations are essential for identifying trends, outliers, and areas where costs can be optimized. They make complex data more accessible and quicker to understand. Consider the following visualization techniques:

- Line Charts: Ideal for showing cost trends over time. Plotting total cost, or the cost of individual components, can reveal patterns such as seasonal fluctuations or the impact of scaling events.

- Bar Charts: Useful for comparing costs across different categories or periods. For instance, a bar chart can compare the cost of different workload components within a single month or compare the total cost for each month of the year.

- Pie Charts: Effective for illustrating the proportion of costs allocated to different components within a specific period. They can highlight the relative contribution of each element to the total cost.

- Scatter Plots: Can be used to identify correlations between cost and other variables, such as the number of users or the volume of data processed.

Consider the following example. A line chart shows the monthly cost of compute resources for a web application. The chart reveals a steady increase in cost over the first six months, followed by a significant spike in month seven, and then a stabilization.

- Analysis: The initial increase could be attributed to organic growth. The spike in month seven might indicate an unexpected increase in traffic or a misconfiguration causing resource over-provisioning. The stabilization period suggests that optimization efforts were successful.

- Actionable Insights: Investigate the cause of the spike in month seven. Review resource utilization during the stabilization period to ensure continued efficiency. Consider implementing auto-scaling to handle traffic fluctuations more efficiently.

Another example involves a pie chart showing the distribution of cloud spending for a specific month. The chart shows that storage accounts for 40% of the total cost, compute 30%, and networking 20%, with the remaining 10% attributed to other services.

- Analysis: Storage costs are the largest component, suggesting an area for potential optimization.

- Actionable Insights: Analyze storage usage patterns to identify opportunities for data tiering, compression, or deletion of unused data. Evaluate the cost of different storage classes to ensure the most cost-effective solution is being used.
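A spike like the one in the line chart example can also be flagged programmatically before anyone looks at a chart. The sketch below assumes a simple month-over-month rule; the 25% threshold and the cost series are made-up values chosen to mirror the scenario, not real billing data.

```python
def find_spikes(monthly_costs, threshold=0.25):
    """Return 1-based month numbers whose cost rose by more than
    `threshold` (as a fraction) compared with the previous month."""
    spikes = []
    for i in range(1, len(monthly_costs)):
        prev, cur = monthly_costs[i - 1], monthly_costs[i]
        if prev > 0 and (cur - prev) / prev > threshold:
            spikes.append(i + 1)
    return spikes


# Hypothetical compute costs: steady growth, a jump in month 7, then stabilization.
compute_costs = [1000, 1050, 1100, 1160, 1220, 1280, 1900, 1400, 1390, 1400]
spike_months = find_spikes(compute_costs)
print(spike_months)
```

A fixed percentage threshold is the simplest possible rule. Workloads with strong seasonality may need a comparison against the same period in a previous cycle instead.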

Workload Profiling and Benchmarking

Understanding a workload’s behavior and resource requirements is critical for effective cost management. Profiling and benchmarking provide the necessary insights to optimize resource allocation and predict future expenses. This section explores the value of these techniques and offers a practical guide to implementing them.

Understanding Workload Profiling

Workload profiling involves analyzing a workload’s characteristics to identify its resource consumption patterns. This understanding is essential for making informed decisions about resource allocation and cost optimization. Profiling provides a detailed view of how the workload uses resources such as CPU, memory, storage, and network bandwidth over time. This information helps pinpoint bottlenecks, inefficiencies, and areas where costs can be reduced.

Profiling is an ongoing process, as workload behavior can change over time due to factors like increased user activity, code updates, or changes in data volume.

Establishing a Baseline Performance and Cost Benchmark

Establishing a baseline benchmark is a fundamental step in workload cost analysis. This benchmark serves as a reference point against which future performance and cost changes can be measured. To establish a baseline, follow these steps:

- Define the Scope: Clearly define the workload you are benchmarking. Specify the applications, services, and data involved. Ensure you have a clear understanding of the workload’s purpose and function.

- Choose Metrics: Select key performance indicators (KPIs) and cost metrics to track. KPIs might include transaction processing rate, latency, and error rates. Cost metrics include resource consumption costs (e.g., compute, storage, network) and operational costs (e.g., monitoring, support).

- Gather Data: Collect data on resource usage and costs over a representative period. This period should be long enough to capture typical workload patterns, such as peak and off-peak hours. Use monitoring tools to gather detailed data on resource consumption.

- Establish a Baseline: Calculate average, minimum, and maximum values for each KPI and cost metric during the baseline period. Document the baseline values, including the date and time the data was collected, and the specific environment configuration.

- Document the Environment: Thoroughly document the environment in which the benchmark was performed. This includes hardware specifications, software versions, configuration settings, and any relevant dependencies.

For example, consider a web application. The baseline period could be one week. The chosen KPIs might include the number of page views per minute, average page load time, and the number of database queries per second. Cost metrics would include the cost of compute instances, storage used for the application and its data, and network bandwidth costs. The baseline data, including the date and time of the data collection and specific environment configuration, would then be documented for future reference.
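The "Establish a Baseline" step boils down to summarizing each metric over the baseline period. Here is a minimal sketch, assuming one reading per day for a one-week period; the metric names and values are hypothetical.

```python
from statistics import mean


def build_baseline(samples):
    """Summarize each metric as min / avg / max over the baseline period."""
    return {
        metric: {"min": min(values), "avg": mean(values), "max": max(values)}
        for metric, values in samples.items()
    }


# Hypothetical one-week sample, one reading per day.
week = {
    "page_load_ms": [420, 450, 610, 480, 430, 400, 390],
    "daily_compute_cost": [38.0, 39.5, 47.0, 40.0, 38.5, 31.0, 30.0],
}
baseline = build_baseline(week)

for metric, stats in baseline.items():
    print(f"{metric}: min={stats['min']} avg={stats['avg']:.1f} max={stats['max']}")
```

In practice the summary would be stored alongside the collection dates and the environment description so later comparisons are made against a known configuration.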

Using Benchmark Results to Make Informed Decisions

Benchmark results are powerful tools for driving cost optimization and performance improvements. By comparing current performance and costs to the baseline, you can identify trends, detect anomalies, and evaluate the impact of changes. Here’s how to use benchmark results effectively:

- Monitor Regularly: Continuously monitor KPIs and cost metrics. Compare the current data with the baseline to identify any deviations. Set up alerts to notify you of significant changes.

- Analyze Trends: Analyze the data over time to identify trends. For example, is the cost increasing steadily? Is the application slowing down during peak hours? Understanding these trends helps anticipate future needs and proactively manage resources.

- Evaluate Changes: Before implementing a change to the workload, confirm that the baseline reflects the current configuration. After the change, run the benchmark again under the new configuration and compare its performance and cost metrics with the baseline.

- Optimize Resources: Use the data to optimize resource allocation. For instance, if the workload consistently uses less CPU during off-peak hours, you might consider scaling down the compute instances to save costs.

- Predict Future Costs: Use the benchmark data to predict future costs. If the workload is growing, you can use the trends to estimate future resource needs and associated costs. This helps with budgeting and capacity planning.

For instance, suppose the baseline benchmark showed that the web application consistently experienced high latency during peak hours, and the team wanted to assess the effect of upgrading to a larger instance type. The results after the upgrade would be compared with the baseline to see whether the upgrade reduced latency and at what cost. If latency dropped significantly without a disproportionate increase in cost, the upgrade could be considered a successful optimization.
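The comparison in this example can be expressed as a small check. The sketch below is illustrative only: the latency and cost figures are invented, and the 20% ceiling on cost increase is an assumed policy standing in for whatever "disproportionate" means for a given team.

```python
def percent_change(baseline_value, current_value):
    """Signed percentage change relative to the baseline value."""
    return 100 * (current_value - baseline_value) / baseline_value


def evaluate_change(baseline, candidate, max_cost_increase_pct=20.0):
    """Compare a candidate configuration with the baseline.

    The change counts as worthwhile when latency improves and the cost
    increase stays within `max_cost_increase_pct` (an assumed policy).
    """
    latency_delta = percent_change(baseline["latency_ms"], candidate["latency_ms"])
    cost_delta = percent_change(baseline["monthly_cost"], candidate["monthly_cost"])
    return {
        "latency_change_pct": round(latency_delta, 1),
        "cost_change_pct": round(cost_delta, 1),
        "worthwhile": latency_delta < 0 and cost_delta <= max_cost_increase_pct,
    }


# Hypothetical peak-hour figures before and after moving to a larger instance type.
before = {"latency_ms": 800, "monthly_cost": 1000}
after = {"latency_ms": 500, "monthly_cost": 1150}
result = evaluate_change(before, after)
print(result)
```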

Comparative Analysis of Alternatives

Evaluating different deployment options is crucial for making informed decisions about your workload’s infrastructure. This involves comparing the cost implications of various technologies, service providers, and deployment models. Understanding these differences allows you to select the most cost-effective solution that aligns with your performance requirements and business goals.

Deployment Options: On-Premise vs. Cloud

Choosing between on-premise and cloud deployment significantly impacts costs. On-premise solutions involve purchasing and maintaining hardware, software, and infrastructure, including staffing costs for IT personnel. Cloud deployments, on the other hand, offer a pay-as-you-go model, potentially reducing upfront capital expenditures. However, cloud costs can fluctuate based on resource consumption and chosen services. The following table provides a comparative analysis of the costs associated with on-premise and cloud deployments, highlighting key considerations:

| Cost Factor | On-Premise | Cloud |
|---|---|---|
| Hardware Costs | Significant upfront investment for servers, storage, and networking equipment. | No upfront hardware costs; pay for resources consumed (e.g., compute, storage). |
| Software Licensing | Purchase of perpetual or subscription licenses; maintenance fees. | Often pay-as-you-go or subscription-based licensing models, included in service costs. |
| Infrastructure Costs | Data center costs (power, cooling, space), network infrastructure. | Included in service fees; provider manages infrastructure. |
| IT Staffing | Salaries for IT personnel (system administrators, network engineers, etc.). | Reduced need for on-site IT staff; provider handles infrastructure management. |
| Maintenance & Upgrades | Ongoing maintenance, hardware replacements, software upgrades. | Provider handles maintenance and upgrades; often transparent to the user. |
| Scalability | Limited scalability; requires purchasing additional hardware. | Highly scalable; resources can be provisioned or de-provisioned dynamically. |
| Disaster Recovery | Requires investments in backup and disaster recovery solutions. | Built-in disaster recovery options and redundancy provided by the cloud provider. |

For example, a small e-commerce business might find that cloud deployment is more cost-effective initially, due to the lack of significant upfront capital expenditure for hardware and infrastructure. As the business grows, cloud scalability can be advantageous. Conversely, a large enterprise with substantial existing infrastructure and specialized requirements might find on-premise solutions more cost-effective, particularly if they have already invested in their own data centers and have a large IT staff.
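The trade-off between upfront investment and recurring fees can be made concrete with a break-even calculation. This is a rough sketch with invented figures; a real comparison would also account for hardware refresh cycles, staffing, and discounts, which are omitted here.

```python
def cumulative_cost(upfront, monthly, months):
    """Total spend after `months`, given a one-time and a recurring cost."""
    return upfront + monthly * months


def break_even_month(onprem_upfront, onprem_monthly, cloud_monthly, horizon=120):
    """First month at which cumulative on-premise cost is no higher than
    cumulative cloud cost, or None if that never happens within `horizon`."""
    for month in range(1, horizon + 1):
        onprem = cumulative_cost(onprem_upfront, onprem_monthly, month)
        cloud = cumulative_cost(0, cloud_monthly, month)
        if onprem <= cloud:
            return month
    return None


# Hypothetical figures: 60,000 upfront plus 1,500/month on-premise vs. 4,000/month in the cloud.
month = break_even_month(60000, 1500, 4000)
print(month)
```

A short break-even period favors on-premise for stable, long-lived workloads, while a result of `None` or a very distant month favors the cloud.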

Cost Implications of Technologies and Service Providers

The choice of technologies and service providers significantly impacts the overall cost structure of your workload. Different technologies have varying pricing models and resource consumption characteristics. Service providers offer diverse pricing strategies, service level agreements (SLAs), and support options. The following points discuss the cost implications of various technologies and service providers:

- Compute Instances: Different instance types (e.g., general-purpose, compute-optimized, memory-optimized) have varying costs based on their resource allocation (CPU, memory, storage). Selecting the appropriate instance type for your workload’s needs can significantly affect costs. For instance, a compute-optimized instance is often more efficient and cost-effective for a CPU-intensive task than a general-purpose instance, because less of what you pay for sits unused.

- Storage Options: Storage costs vary based on storage class (e.g., hot, cold, archive) and data access frequency. Choosing the right storage class based on data access patterns helps optimize costs. For example, storing infrequently accessed data in a cheaper archive storage tier will reduce storage expenses.

- Database Services: Managed database services offer different pricing models (e.g., pay-per-use, reserved instances). The selection of the appropriate database service and pricing model depends on the workload’s resource requirements and expected usage patterns. Reserved instances often provide significant cost savings for predictable database workloads.

- Service Provider Pricing: Different cloud providers (e.g., AWS, Azure, Google Cloud) offer varying pricing structures for similar services. Comparing pricing across providers is essential to identify the most cost-effective solution. For instance, the pricing for compute instances, storage, and data transfer can vary significantly between different cloud providers.

- Technology Choices: The choice of programming languages, frameworks, and libraries impacts resource consumption and, consequently, costs. Using optimized code and efficient algorithms can minimize resource usage and reduce costs. For example, choosing a memory-efficient programming language can lower the memory footprint of an application.

Consider the following scenario: A company developing a web application has the choice of using AWS, Azure, or Google Cloud. AWS might offer the lowest prices for compute instances in a particular region, while Azure might provide more cost-effective storage solutions. Google Cloud might offer specialized services that are particularly suited to the application’s needs. The best choice will depend on a detailed comparison of costs, performance, and service offerings from each provider.
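A comparison like this usually starts by pricing the same usage profile against each provider's rates. The sketch below uses placeholder provider names and made-up unit prices; actual rates vary by region and change over time, so they should come from each provider's published pricing.

```python
def monthly_estimate(unit_prices, usage):
    """Multiply each usage quantity by its unit price and sum the results."""
    return sum(unit_prices[item] * quantity for item, quantity in usage.items())


# Hypothetical usage profile for one month.
usage = {"compute_hours": 720, "storage_gb": 500, "egress_gb": 200}

# Placeholder unit prices, NOT real provider rates.
providers = {
    "provider_a": {"compute_hours": 0.10, "storage_gb": 0.025, "egress_gb": 0.09},
    "provider_b": {"compute_hours": 0.11, "storage_gb": 0.020, "egress_gb": 0.08},
}

estimates = {name: round(monthly_estimate(prices, usage), 2)
             for name, prices in providers.items()}
cheapest = min(estimates, key=estimates.get)
print(estimates, cheapest)
```

Here the provider with cheaper storage and egress still loses overall because compute dominates the bill, which is why the comparison should be driven by the workload's actual usage mix rather than by any single line item.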

Automation for Cost Management

Automating workload cost management is crucial for achieving efficiency and controlling expenses in cloud environments. It streamlines processes, reduces manual effort, and enables proactive cost optimization. By implementing automation, organizations can respond rapidly to changes in resource demand, ensure optimal resource utilization, and minimize unnecessary spending.

Streamlining Workload Cost Management with Automation

Automation significantly streamlines workload cost management by eliminating manual tasks and providing real-time insights. This leads to quicker identification of cost-saving opportunities and more efficient resource allocation.

- Automated Resource Provisioning: Automating the process of deploying and configuring resources based on workload needs ensures resources are available when needed, avoiding over-provisioning and associated costs.

- Automated Monitoring and Alerting: Automation tools monitor resource usage, identify anomalies, and trigger alerts when predefined thresholds are exceeded. This allows for immediate intervention to prevent unexpected costs.

- Automated Reporting and Analysis: Automating the generation of cost reports and the analysis of spending patterns provides visibility into cost trends, enabling data-driven decision-making.

- Automated Cost Optimization: Automating tasks such as rightsizing instances, scheduling resources, and identifying idle resources enables proactive cost optimization.

- Policy Enforcement: Automation can enforce cost management policies consistently across the entire infrastructure, ensuring adherence to budget constraints and best practices.
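Identifying idle resources is one of the easier optimization tasks to automate. The following sketch assumes utilization samples have already been exported from a monitoring tool into plain dictionaries; the resource names, the sample values, and the 5% threshold are all hypothetical.

```python
def find_idle_resources(resources, cpu_threshold=5.0):
    """Return names of resources whose peak CPU % stayed below the threshold.

    Resources with no samples are skipped rather than reported as idle.
    """
    idle = []
    for resource in resources:
        samples = resource["cpu_samples"]
        if samples and max(samples) < cpu_threshold:
            idle.append(resource["name"])
    return idle


# Hypothetical fleet with CPU utilization samples (percent).
fleet = [
    {"name": "web-1", "cpu_samples": [35.0, 60.2, 48.9]},
    {"name": "batch-old", "cpu_samples": [0.4, 1.1, 0.8]},
    {"name": "staging-db", "cpu_samples": [2.0, 3.5, 4.9]},
]
idle = find_idle_resources(fleet)
print(idle)
```

Using the peak rather than the average avoids flagging resources that are mostly quiet but occasionally busy. Flagged resources are candidates for review, not automatic deletion.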

Automating Resource Scaling to Reduce Expenses

Automating resource scaling is a powerful strategy for cost reduction. By dynamically adjusting resources based on demand, organizations can avoid paying for idle capacity and ensure optimal performance. The steps involved in automating resource scaling to reduce expenses are:

1. Define Scaling Policies: Establish clear rules for scaling resources. These policies should be based on metrics such as CPU utilization, memory usage, network traffic, and queue depth. For example, a policy might state that if CPU utilization exceeds 70% for more than 5 minutes, a new instance should be launched.

2. Implement Auto-Scaling Groups: Utilize auto-scaling groups provided by cloud providers (e.g., AWS Auto Scaling, Azure Virtual Machine Scale Sets, Google Cloud Managed Instance Groups). These groups automatically manage the number of instances based on the defined scaling policies.

3. Configure Monitoring and Alerting: Set up monitoring tools to collect metrics and trigger alerts when scaling thresholds are met. These alerts notify the auto-scaling groups to initiate scaling actions.

4. Test and Refine: Regularly test the scaling policies and adjust them based on performance and cost data. Monitor the impact of scaling actions on application performance and cost.

For example, consider an e-commerce website that experiences peak traffic during holiday seasons. With automated scaling, the system can automatically launch additional instances to handle the increased load during these periods. Once the peak traffic subsides, the system can automatically scale down the number of instances, reducing costs.
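The decision logic behind a scaling policy such as "CPU above 70% for 5 minutes" can be sketched in a few lines. This is an illustration of the rule only: in practice the cloud provider's auto-scaling service evaluates the policy, and the scale-in threshold, the size limits, and the one-sample-per-minute assumption here are hypothetical.

```python
def desired_instances(cpu_samples, current, min_size=1, max_size=10,
                      scale_out_at=70.0, scale_in_at=30.0, window=5):
    """Decide the target instance count from recent CPU readings.

    `cpu_samples` holds one average CPU % reading per minute, oldest first.
    Scale out by one if every reading in the window exceeds `scale_out_at`;
    scale in by one if every reading is below `scale_in_at`.
    """
    recent = cpu_samples[-window:]
    if len(recent) < window:
        return current  # not enough data to make a decision
    if all(sample > scale_out_at for sample in recent):
        return min(current + 1, max_size)
    if all(sample < scale_in_at for sample in recent):
        return max(current - 1, min_size)
    return current


print(desired_instances([75, 80, 82, 90, 78], current=2))  # sustained high load
print(desired_instances([20, 25, 10, 15, 22], current=2))  # sustained low load
print(desired_instances([75, 80, 40, 90, 78], current=2))  # load not sustained
```

Requiring the whole window to breach the threshold prevents a single noisy reading from triggering a scaling action, and the size limits cap both cost and the risk of scaling to zero.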

Automation Tools and Their Benefits in Cost Management

A variety of automation tools are available to streamline cost management. These tools offer different functionalities and benefits.

- Cloud Provider Native Tools:
  - Benefits: Tight integration with cloud services, ease of use, and cost-effectiveness.
  - Examples: AWS Cost Explorer, Azure Cost Management + Billing, Google Cloud Billing.

- Third-Party Cost Management Platforms:
  - Benefits: Advanced analytics, multi-cloud support, and custom reporting.
  - Examples: CloudHealth by VMware, Apptio Cloudability, and Flexera.

- Infrastructure as Code (IaC) Tools:
  - Benefits: Automated resource provisioning, version control, and infrastructure consistency.
  - Examples: Terraform, AWS CloudFormation, and Azure Resource Manager.

- Configuration Management Tools:
  - Benefits: Automated configuration of resources, compliance enforcement, and reduced operational overhead.
  - Examples: Ansible, Chef, and Puppet.

- Scheduling Tools:
  - Benefits: Automated resource scheduling to reduce costs during off-peak hours.
  - Examples: Cloud provider-specific scheduling tools, third-party scheduling platforms.

- Custom Scripting and Automation:
  - Benefits: Flexibility to automate specific tasks and integrate with other tools.
  - Examples: Python scripts using cloud provider APIs, shell scripts.
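As a small example of custom scripting, the potential saving from scheduling non-production resources can be estimated before any scheduling tool is configured. The hourly rate, instance count, and schedule below are assumptions for illustration, and the calculation ignores costs that continue while instances are stopped, such as attached storage.

```python
HOURS_PER_WEEK = 24 * 7


def schedule_savings(hourly_rate, instances, hours_on_per_week):
    """Compare the weekly cost of always-on instances with a scheduled run."""
    always_on = hourly_rate * instances * HOURS_PER_WEEK
    scheduled = hourly_rate * instances * hours_on_per_week
    return {
        "always_on": round(always_on, 2),
        "scheduled": round(scheduled, 2),
        "savings_pct": round(100 * (1 - hours_on_per_week / HOURS_PER_WEEK), 1),
    }


# Hypothetical: 4 development instances at 0.20 per hour, running 12 hours on 5 weekdays.
report = schedule_savings(hourly_rate=0.20, instances=4, hours_on_per_week=12 * 5)
print(report)
```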

Final Thoughts

In conclusion, effectively analyzing the cost of a specific workload requires a methodical approach that encompasses all facets, from initial definition to ongoing monitoring and optimization. By understanding the various cost drivers, leveraging monitoring tools, and implementing cost-saving strategies, you can gain control over your expenses and ensure that your workload operates in a financially sustainable manner. Continuous analysis and adaptation are key to long-term cost efficiency.

FAQ Corner

What is the difference between “right-sizing” and “auto-scaling”?

Right-sizing involves adjusting the resources allocated to a workload (for example, the instance type or size) to match its actual needs, based on historical data and anticipated future demands. Auto-scaling, on the other hand, automatically adjusts capacity (typically the number of running instances) in response to real-time demand, scaling up during peak periods and down during periods of low activity. Both strategies contribute to cost optimization, but they operate on different time scales: right-sizing is a periodic review, while auto-scaling reacts within minutes.
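A right-sizing review can be sketched as picking the smallest size that covers observed peak demand plus some headroom. The size catalog, the usage samples, the 95th percentile, and the 20% headroom below are all assumptions for illustration; real instance families and sensible percentiles depend on the provider and the workload.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def right_size(cpu_cores_used, sizes, headroom=1.2):
    """Pick the smallest size whose vCPU count covers p95 usage plus headroom.

    Returns None when no size in the catalog is large enough.
    """
    needed = percentile(cpu_cores_used, 95) * headroom
    for name, vcpus in sorted(sizes.items(), key=lambda item: item[1]):
        if vcpus >= needed:
            return name
    return None


# Hypothetical size catalog (name -> vCPUs) and historical usage in cores.
sizes = {"small": 2, "medium": 4, "large": 8}
history = [1.1, 1.3, 0.9, 2.4, 1.8, 1.2, 2.9, 1.0, 1.4, 1.6]
recommended = right_size(history, sizes)
print(recommended)
```

If this workload currently runs on the "large" size, the recommendation points to a possible downsizing, which auto-scaling alone would not surface.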

How often should I review my workload costs?

Regular review is essential. The frequency depends on the workload’s volatility and the size of your budget. Ideally, review your costs at least monthly, but more frequent reviews (weekly or even daily) may be beneficial for high-traffic workloads or those with fluctuating resource needs. Set up alerts to notify you of significant cost changes.

What are some free or open-source tools for cost analysis?

Several free or open-source tools can assist with cost analysis. Open-source options include Prometheus for metrics collection and Grafana for visualization. Cloud providers also supply their own cost monitoring tools, which often offer free tiers for basic usage. These tools allow you to track resource usage, identify cost drivers, and create custom dashboards.

How can I estimate the cost of a new workload before deployment?

Estimating the cost of a new workload involves several steps. First, define the workload’s anticipated resource requirements (CPU, memory, storage, network). Then, research the pricing models of your chosen cloud provider or service vendors. Use cost calculators provided by these vendors to estimate the monthly expenses based on your projected resource usage. Consider including a buffer for unexpected growth.