Embarking on a journey into the heart of distributed systems, we encounter a fundamental challenge: how to choose between availability and consistency. This decision, framed by the CAP theorem, shapes the architecture of our applications and influences their behavior in the face of network partitions. Understanding this trade-off is crucial for designing resilient and efficient systems.

This exploration delves into the intricacies of availability (keeping data accessible) and consistency (keeping data correct and in agreement across nodes). We will examine various strategies, from replication and failover to strong and eventual consistency models. Furthermore, we will analyze real-world scenarios where one characteristic takes precedence over the other, considering the impact on user experience, system resilience, and the overall success of your application.

Understanding the CAP Theorem

The CAP theorem is a fundamental concept in distributed computing, highlighting the inherent trade-offs when designing and implementing distributed systems. It is commonly summarized as saying that a distributed system can guarantee at most two of three desirable properties: Consistency, Availability, and Partition Tolerance. Because network partitions cannot be ruled out in a real distributed system, the practical reading is narrower: while a partition is in effect, the system must give up either consistency or availability. This forces architects to make informed decisions about which characteristic matters more for their specific application and its requirements.

Understanding the CAP theorem is critical for making the right design choices for distributed databases, cloud services, and other distributed applications.

The Core Dilemma

The CAP theorem’s core dilemma arises from the realities of distributed systems, where network partitions (temporary or permanent failures that isolate parts of the system) are a common occurrence. The theorem describes what a system can still guarantee while such a partition is in effect.

Availability, Consistency, and Partition Tolerance Breakdown

The three properties of the CAP theorem are defined as follows:

- Consistency: All nodes in the system see the same data at the same time. Once a write completes, every subsequent read, on any node, must return that value or a newer one; a node that cannot guarantee this must refuse the request rather than return stale data. This can be achieved through techniques like synchronous replication, where the write operation is not considered complete until all replicas have acknowledged the change.

- Availability: Every request received by a non-failing node eventually receives a non-error response, though not necessarily one that reflects the most recent write. The system remains operational even if some nodes, or some parts of it, are experiencing failures. For example, a web server can continue to serve content even if one of its database servers is down.

- Partition Tolerance: The system continues to operate despite the loss of communication between some nodes. This is essential in distributed systems, where network failures are inevitable. The system must be designed to handle these failures gracefully, maintaining its ability to function and provide data.

The CAP theorem dictates that in the presence of a network partition, a system must choose between consistency and availability. If a system prioritizes consistency, it might temporarily become unavailable to ensure data integrity. If it prioritizes availability, it might return potentially stale data to maintain responsiveness.
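To make the partition-time choice concrete, here is a minimal Python sketch of two in-memory replicas running under an assumed "CP" or "AP" policy. The class `Replica`, the `Unavailable` exception, and the helpers `make_pair` and `partition` are hypothetical names for this illustration, and the network is modeled as a single flag rather than real messages. With only two replicas, neither side of the partition holds a majority, so the CP policy refuses requests on both sides.

```python
from dataclasses import dataclass, field
from typing import Optional


class Unavailable(Exception):
    """Raised when a CP replica refuses a request it cannot serve consistently."""


@dataclass
class Replica:
    name: str
    mode: str  # hypothetical policy flag: "CP" or "AP"
    data: dict = field(default_factory=dict)
    peer: Optional["Replica"] = None
    partitioned: bool = False  # True while the link to the peer is down

    def write(self, key: str, value: str) -> None:
        if self.partitioned:
            if self.mode == "CP":
                # Cannot replicate, so refuse instead of letting replicas diverge.
                raise Unavailable(f"{self.name}: peer unreachable, write refused")
            # AP: accept locally; the two replicas now disagree until repair.
            self.data[key] = value
            return
        # Link is up: apply locally and copy to the peer before acknowledging.
        self.data[key] = value
        self.peer.data[key] = value

    def read(self, key: str) -> Optional[str]:
        if self.partitioned and self.mode == "CP":
            # Cannot confirm this copy is current, so refuse the read.
            raise Unavailable(f"{self.name}: cannot confirm latest value")
        return self.data.get(key)  # under AP this may be a stale value


def make_pair(mode: str) -> tuple[Replica, Replica]:
    a, b = Replica("a", mode), Replica("b", mode)
    a.peer, b.peer = b, a
    return a, b


def partition(a: Replica, b: Replica) -> None:
    a.partitioned = b.partitioned = True


if __name__ == "__main__":
    for mode in ("CP", "AP"):
        a, b = make_pair(mode)
        a.write("x", "1")
        partition(a, b)
        try:
            a.write("x", "2")
            print(mode, "a =", a.read("x"), "b =", b.read("x"))
        except Unavailable as err:
            print(mode, "refused:", err)
```

Run as a script, the CP pair refuses the second write, while the AP pair accepts it and ends with `a` holding `2` and `b` holding `1`, which is exactly the divergence an AP system must later repair.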

Real-World System Examples

Real-world systems often prioritize one characteristic over another depending on their specific use cases.

- CP (Consistency and Partition Tolerance): Systems such as relational databases configured with synchronous replication, and coordination services like Apache ZooKeeper and etcd, prioritize consistency and partition tolerance. When a partition occurs, these systems might become unavailable (at least on one side of the partition) to ensure that data is not corrupted. For example, consider a financial transaction system. During a network partition, it is more important to ensure that transactions are accurately recorded, even if it means temporarily halting new transactions.

- AP (Availability and Partition Tolerance): Systems like content delivery networks (CDNs) and some NoSQL databases, such as Apache Cassandra in its common configurations, prioritize availability and partition tolerance. They are designed to remain available even if some nodes are unreachable. Users can keep reading and writing data, even if it is not perfectly consistent across all nodes. Consider a social media platform where users can still view their feeds and post updates, even if some of the data might be slightly out of sync temporarily.

- CA (Consistency and Availability): Providing both consistency and availability while a network partition is in effect is impossible. The CA label therefore only fits systems that cannot partition, such as a single-server database, which is not truly distributed and does not face the same trade-off. If a nominally CA system is deployed across a network and a partition does occur, it must in effect give up one of the two properties, typically availability.

The choice between CP and AP depends on the application’s requirements. For example, a system handling financial transactions would typically prioritize consistency (CP) to prevent data corruption, while a social media platform might prioritize availability (AP) to ensure users can always access content, even if some updates are slightly delayed. The selection of the right approach requires a thorough understanding of the application’s needs and the potential trade-offs involved.

Defining Availability

Availability, in the realm of data systems, represents the guarantee that data remains accessible and operational when needed. It’s a crucial aspect of system design, directly impacting user experience and the overall success of any application or service. Achieving high availability means minimizing downtime and ensuring users can interact with the system without interruption, regardless of internal failures or external factors.

Meaning of Availability in Data Systems

Availability is defined as the percentage of time a system is operational and accessible to users. A system with high availability aims to minimize downtime, which is the period when the system is unavailable. This is often quantified using “nines,” such as “five nines” (99.999% availability), which permits only about 5.26 minutes of downtime per year. This metric is crucial for systems where constant access is critical, such as online banking, e-commerce platforms, and communication services.

The focus is on providing continuous access to data, allowing users to read, write, and modify data as needed, even in the face of potential system failures.
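The relationship between a number of nines and the permitted downtime is simple arithmetic: an availability of 1 - 10^-n leaves a fraction 10^-n of the year for downtime. The short Python sketch below computes that budget, assuming an average year of 365.25 days; the function names are illustrative.

```python
# Average Gregorian year (365.25 days) in minutes.
MINUTES_PER_YEAR = 365.25 * 24 * 60


def availability_for_nines(nines: int) -> float:
    """Availability as a fraction: 3 nines -> 0.999, 5 nines -> 0.99999."""
    return 1 - 10 ** (-nines)


def downtime_budget_minutes(nines: int) -> float:
    """Maximum downtime per year allowed by an availability target of `nines` nines."""
    # The unavailable fraction is exactly 10**-nines; using it directly avoids
    # the rounding error of computing 1 - availability_for_nines(nines).
    return 10 ** (-nines) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for n in range(2, 6):
        pct = availability_for_nines(n) * 100
        print(f"{pct:.{n - 2}f}% availability: {downtime_budget_minutes(n):.2f} minutes/year")
```

By this calculation, three nines allow roughly 8.8 hours of downtime per year, while five nines allow about 5.26 minutes.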

Strategies for Achieving High Availability

Several strategies are employed to achieve high availability in data systems. These techniques are designed to mitigate the impact of failures and ensure continued operation.

- Replication: This involves creating multiple copies of data across different servers or data centers. If one server fails, the system can automatically switch to a replica, ensuring data accessibility. There are different replication strategies, including synchronous and asynchronous replication.

  - Synchronous replication guarantees that all data is written to multiple servers before acknowledging the write operation, ensuring data consistency but potentially increasing latency.

  - Asynchronous replication writes data to the primary server and then replicates it to other servers in the background, offering lower write latency. However, writes that the primary has acknowledged but not yet replicated can be lost if the primary fails, and replicas may briefly serve stale data.

- Failover: This is an automated process that switches to a backup system or server when the primary system fails. Failover mechanisms can be designed to detect failures quickly and seamlessly redirect traffic to the backup, minimizing downtime. The failover process often involves monitoring the health of the primary system and automatically initiating a switch when a failure is detected.

- Load Balancing: Distributes incoming network traffic across multiple servers to prevent any single server from becoming overloaded. This ensures that resources are used efficiently and that no individual server is a single point of failure (the load balancer itself must also be made redundant). Load balancers can distribute traffic based on various criteria, such as server load, geographical location, or user session affinity.

- Redundancy: This involves having multiple components (servers, network connections, etc.) that can take over if one fails. Redundancy is a fundamental principle in high-availability design, providing a backup for every critical component. This is often achieved by using multiple servers in a cluster, with each server capable of handling the workload.
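The difference between synchronous and asynchronous replication shows up most clearly at failover time. The following Python sketch is a toy, single-process model under stated assumptions: `ReplicatedStore`, `flush`, and `fail_over` are hypothetical names, replication is a direct dictionary copy, and failure detection is reduced to an explicit call. It shows that a write acknowledged under asynchronous replication can vanish if the primary fails before the background copy runs.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    data: dict = field(default_factory=dict)


class ReplicatedStore:
    """Toy primary/replica store, in memory and single process (hypothetical)."""

    def __init__(self, replicas: int, synchronous: bool):
        self.primary = Node("primary")
        self.replicas = [Node(f"replica-{i}") for i in range(replicas)]
        self.synchronous = synchronous
        self.backlog: list[tuple[str, object]] = []  # acknowledged, not yet shipped

    def write(self, key: str, value: object) -> None:
        self.primary.data[key] = value
        if self.synchronous:
            # Acknowledge only after every replica has applied the write.
            for replica in self.replicas:
                replica.data[key] = value
        else:
            # Acknowledge immediately; ship to replicas later (see flush()).
            self.backlog.append((key, value))

    def flush(self) -> None:
        """Background replication step for asynchronous mode."""
        for key, value in self.backlog:
            for replica in self.replicas:
                replica.data[key] = value
        self.backlog.clear()

    def fail_over(self) -> None:
        """Primary crashes: promote the first replica (assumes one exists).

        Writes still in the backlog lived only on the old primary, so they are lost.
        """
        self.primary = self.replicas.pop(0)
        self.backlog.clear()

    def read(self, key: str):
        return self.primary.data.get(key)


if __name__ == "__main__":
    for sync in (True, False):
        store = ReplicatedStore(replicas=2, synchronous=sync)
        store.write("balance", 100)
        store.fail_over()  # primary dies before any background replication
        print("sync" if sync else "async", store.read("balance"))
```

Running the script prints `sync 100` and then `async None`: the synchronous store survives the failover with the write intact, while the asynchronous store promoted a replica that never received it. Calling `flush()` before `fail_over()` would have preserved the write, which is why replication lag matters.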

Impact of Availability on User Experience and System Resilience

High availability directly impacts user experience and system resilience. A system with high availability ensures that users can access the data and services they need whenever they need them, without experiencing interruptions.

- Improved User Experience: Users are more likely to trust and rely on a system that is consistently available. Reduced downtime translates to a better user experience, leading to increased user satisfaction and loyalty. Consider an e-commerce platform: if it is unavailable during a peak shopping period, it could lead to lost sales and damage to the business’s reputation.

- Enhanced System Resilience: Systems designed for high availability are inherently more resilient to failures. They can withstand hardware failures, software bugs, and even natural disasters without significant disruption. This resilience translates to greater business continuity and reduced risk of data loss or corruption.

- Increased Business Value: High availability contributes to increased business value by ensuring that critical services are always accessible. This can lead to higher revenues, improved operational efficiency, and a stronger competitive advantage. For example, financial institutions depend heavily on high availability to process transactions and provide services to their customers. Any downtime can have significant financial implications.

Defining Consistency

In the realm of distributed systems and data management, consistency represents the cornerstone of data integrity. It ensures that all data copies across a system remain synchronized and accurate, reflecting the latest updates. This is critical for maintaining data reliability and preventing conflicting information. Understanding consistency is vital for building systems that provide a consistent view of data to all users, regardless of their location or the number of concurrent operations.

Maintaining Data Integrity

Consistency, in essence, is the guarantee that all nodes in a distributed system agree on the same data value at a given point in time. This agreement can be achieved through various strategies, each offering different trade-offs in terms of performance and availability. The goal is to prevent conflicting information and ensure that data modifications are reflected uniformly across the system.

- Definition of Consistency: Consistency, in the context of distributed systems, means that every read returns the value of the most recent completed write. It implies that data is always up to date and identical across all nodes.

- Significance of Data Integrity: Maintaining data integrity is paramount for several reasons. It ensures that users and applications operate on accurate information, prevents data corruption, and facilitates reliable decision-making. In financial systems, for example, consistent data is crucial for processing transactions and maintaining account balances. In e-commerce, consistency ensures that product inventory and order details are accurate.

- Impact of Inconsistency: Inconsistent data can lead to a variety of problems. Users might see different versions of the same information, leading to confusion and frustration. Applications might make incorrect decisions based on outdated or conflicting data. Data corruption and loss can occur if updates are not properly synchronized.

Strong and Eventual Consistency Models

Consistency models define the guarantees that a distributed system provides regarding data consistency. Different models offer varying levels of consistency, impacting the trade-off between data accuracy and system availability.

- Strong Consistency: Strong consistency, also known as immediate consistency, guarantees that all nodes see the same data at the same time: once a write is acknowledged, every subsequent read returns it. It is typically achieved through synchronous replication, where every write must be acknowledged by the replicas before the operation is considered complete, or through consensus protocols such as Raft and Paxos, which require acknowledgment from a majority of replicas.

For example, imagine a bank account. With strong consistency, if you deposit money, all views of your account balance (on your phone, at the ATM, and in the bank’s database) will instantly reflect the new balance.

- Eventual Consistency: Eventual consistency allows for temporary inconsistencies. After a write operation, it may take some time for all nodes to receive and apply the update. Eventually, all nodes will converge to the same data value, but there may be a period where different nodes have different versions of the data. This model prioritizes availability over immediate consistency. It’s common in systems that require high availability and can tolerate some degree of data lag.

Eventual consistency is often implemented using asynchronous replication.

An example is a social media platform. When you update your profile picture, it might take a few moments for the new picture to appear on all your friends’ feeds. During this time, some users might see the old picture while others see the new one.

- Other Consistency Models: Beyond strong and eventual consistency, there are various other consistency models, including:

  - Read-after-write consistency (also called read-your-writes): Ensures that a user sees their own writes immediately.

  - Session consistency: Guarantees consistency within a user’s session.

  - Monotonic read consistency: Ensures that if a user reads a value, subsequent reads will return the same or a more recent value.

  - Monotonic write consistency: Ensures that writes from the same client are applied in the order that client issued them.
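Eventual consistency and the session guarantees listed above can be illustrated with a small model of a leader and lagging followers. In this Python sketch (all names hypothetical), each write bumps a per-key version on the leader, followers catch up only when `replicate()` is called, and a `Session` remembers the highest version it has written or read. If a follower is behind that floor, the session falls back to the leader, which yields read-your-writes and monotonic reads for that session; real systems may instead wait for the follower or try another replica.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    # key -> (version, value); the version increases with every write to that key
    store: dict = field(default_factory=dict)


class Cluster:
    """A leader plus followers that catch up only when replicate() is called."""

    def __init__(self, followers: int):
        self.leader = Replica()
        self.followers = [Replica() for _ in range(followers)]

    def write(self, key: str, value: str) -> int:
        version = self.leader.store.get(key, (0, None))[0] + 1
        self.leader.store[key] = (version, value)
        return version

    def replicate(self, i: int) -> None:
        """Bring follower i fully up to date (models asynchronous propagation)."""
        self.followers[i].store.update(self.leader.store)


class Session:
    """Per-client session enforcing read-your-writes and monotonic reads."""

    def __init__(self, cluster: Cluster):
        self.cluster = cluster
        self.floor: dict[str, int] = {}  # key -> lowest version this session may see

    def write(self, key: str, value: str) -> None:
        self.floor[key] = self.cluster.write(key, value)

    def read(self, key: str, follower: int):
        version, value = self.cluster.followers[follower].store.get(key, (0, None))
        if version < self.floor.get(key, 0):
            # This follower is behind what the session has already seen:
            # fall back to the leader instead of going back in time.
            version, value = self.cluster.leader.store[key]
        self.floor[key] = max(self.floor.get(key, 0), version)
        return value


if __name__ == "__main__":
    cluster = Cluster(followers=2)
    alice, bob = Session(cluster), Session(cluster)
    alice.write("avatar", "new.png")
    print(bob.read("avatar", follower=0))    # follower 0 has not caught up yet
    print(alice.read("avatar", follower=0))  # read-your-writes: served by the leader
    cluster.replicate(0)
    print(bob.read("avatar", follower=0))    # converged on follower 0
    print(bob.read("avatar", follower=1))    # monotonic reads: follower 1 is stale
```

Bob's first read returns `None` because follower 0 has not caught up, which is ordinary eventual consistency. Alice, who made the write, still sees it, and once Bob has observed the new value he never sees the older one again, even when he reads from the still-stale follower 1.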

Trade-offs in Consistency Models

Choosing a consistency model involves careful consideration of trade-offs between consistency, availability, and partition tolerance (the CAP theorem). Different models offer varying levels of these properties, and the optimal choice depends on the specific requirements of the application.

- Strong Consistency Trade-offs: Strong consistency typically prioritizes data accuracy. However, it can impact availability, especially during network partitions. If a node is unavailable, write operations might need to be blocked until the node recovers to maintain consistency.

For example, in a database with strong consistency, if the primary database server goes down, the system might become unavailable for writes until a new primary is elected and the data is synchronized.

- Eventual Consistency Trade-offs: Eventual consistency prioritizes availability and partition tolerance. The system remains available even during network partitions. However, it can introduce data inconsistencies, as different nodes might have different versions of the data for a period. This can lead to challenges in certain applications.

For example, in a distributed cache, eventual consistency might allow for quick reads, but it could also mean that the latest data is not always immediately available.

- Choosing the Right Model: The choice of consistency model depends on the application’s specific needs. For applications where data accuracy is paramount, strong consistency might be preferred, even if it means sacrificing some availability. For applications where high availability is critical, eventual consistency might be a better choice, even if it introduces some data inconsistencies.

For instance, a financial system dealing with account balances might need strong consistency, while a social media platform might be able to tolerate eventual consistency for displaying user profiles.

Partition Tolerance

In the realm of distributed systems, the ability to withstand network disruptions is paramount. Partition tolerance, the “P” in the CAP theorem, directly addresses this critical aspect. A system that is partition-tolerant continues to operate despite network failures that isolate parts of the system. This resilience is not optional; it is a fundamental requirement for any distributed system operating in a real-world environment where network hiccups are inevitable.

Handling Network Disruptions and Data Inconsistencies

Network partitions occur when a network fails, splitting a distributed system into isolated segments. This can happen due to various reasons, including hardware failures, software bugs, or network congestion. During a partition, nodes in different segments can no longer communicate with each other. The challenge lies in how a system manages data consistency and availability in the face of these disruptions.

The choice between availability and consistency becomes critical during these periods. A system can prioritize either the availability of data (potentially allowing inconsistent reads) or the consistency of data (potentially making some data unavailable).

To illustrate the complexities, consider a distributed e-commerce platform. A network partition might occur between the database servers responsible for managing inventory and order processing. During this partition, the system must decide whether to allow customers to continue placing orders (prioritizing availability) even if the inventory information might be temporarily inconsistent across the isolated segments, or to prevent new orders until the partition is resolved (prioritizing consistency), which would affect availability.

Different strategies can be employed to handle network failures and data inconsistencies. These strategies represent trade-offs between availability and consistency, reflecting the core tenets of the CAP theorem. Here is a comparison of partition handling strategies:

| Strategy | Description | Implications for Availability | Implications for Consistency |
|---|---|---|---|
| Prioritize Availability (AP) | The system continues to process requests during a partition, potentially serving stale or inconsistent data. Writes accepted on one side of the partition may not reach the other side until the partition heals. | High availability. Users can generally continue to access the system and perform operations. | Data consistency is compromised. Data on different sides of the partition may diverge temporarily; eventual consistency is a common approach. |
| Prioritize Consistency (CP) | The system sacrifices availability to maintain data consistency. During a partition, nodes that cannot guarantee consistency block writes or return errors. | Low availability during a partition. Users on the affected side may see errors or timeouts. | Strong consistency. Because nodes refuse requests they cannot serve safely, replicas do not diverge. |
| Eventual Consistency | Data changes are propagated asynchronously. Reads might return stale data initially, but all nodes converge to the same state once updates stop and the partition heals. | High availability. The system remains operational even during a partition. | Consistency is achieved eventually, not immediately; there is a window of potential inconsistency. |
| Quorum-Based Systems | Reads and writes must each be acknowledged by a quorum of nodes (often a majority). When the read quorum size R and write quorum size W satisfy R + W > N for N replicas, every read quorum overlaps every write quorum. | Reduced: requests fail on any side of a partition that cannot assemble a quorum. | Stronger than eventual consistency: a read contacts at least one replica that holds the latest acknowledged write. |
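The overlap property behind quorum-based systems can be checked directly. Using the common notation of N replicas, read quorum size R, and write quorum size W, the following Python sketch (function names are illustrative) confirms by brute-force enumeration that every read quorum intersects every write quorum exactly when R + W > N, and shows why majority quorums keep the larger side of a partition working while the smaller side must refuse requests. Overlap is only part of the story: a real system also needs per-replica versions to tell which of the contacted replicas holds the newest value.

```python
from itertools import combinations


def quorums_overlap(n: int, r: int, w: int) -> bool:
    """R + W > N: every read quorum shares at least one node with every write quorum."""
    return r + w > n


def overlap_by_enumeration(n: int, r: int, w: int) -> bool:
    """Brute-force check of the same property for small clusters."""
    nodes = range(n)
    return all(
        set(read_q) & set(write_q)  # a non-empty intersection is truthy
        for read_q in combinations(nodes, r)
        for write_q in combinations(nodes, w)
    )


def side_can_serve(side_size: int, r: int, w: int) -> tuple[bool, bool]:
    """(can_read, can_write) for the nodes on one side of a partition."""
    return side_size >= r, side_size >= w


if __name__ == "__main__":
    n, r, w = 5, 3, 3  # majority quorums for five replicas
    print("overlap:", quorums_overlap(n, r, w), overlap_by_enumeration(n, r, w))
    print("3-node side:", side_can_serve(3, r, w))  # majority side keeps working
    print("2-node side:", side_can_serve(2, r, w))  # minority side refuses requests
```

With five replicas split 3/2, the three-node side can still read and write, while the two-node side can do neither, which is the reduced availability noted in the table.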

Scenarios Where Availability Takes Priority

In certain situations, the unwavering availability of a system outweighs the need for absolute consistency. This means that the system will always attempt to respond to requests, even if the data might not be perfectly up-to-date across all nodes. The primary goal is to prevent service disruptions, ensuring that users can continue to interact with the system, even if the information they see is slightly stale.

This trade-off is a deliberate design choice, made to prioritize user experience and business continuity.

Applications Prioritizing Availability

Several types of applications prioritize availability over strict consistency. The following examples highlight common scenarios and the reasoning behind this prioritization:

- E-commerce Platforms: E-commerce websites frequently prioritize availability. Users need to be able to browse products, add items to their cart, and place orders without interruption. Even if there’s a brief delay in reflecting the latest inventory updates across all servers, the ability to complete a purchase is paramount. Imagine a customer attempting to buy a limited-edition item. A consistent system might refuse the purchase if inventory data is temporarily inconsistent across nodes, losing the sale. Prioritizing availability ensures the transaction can proceed, even if the inventory count is updated later.

- Social Media Feeds: Social media platforms prioritize availability to ensure a constant stream of content. Users expect to see their feeds, post updates, and interact with others without significant delays. A temporary inconsistency in showing the most recent likes or comments is less critical than the entire feed becoming unavailable. Consider a user scrolling through their feed. A consistent system might delay loading new posts if there’s a brief network partition, potentially causing a frustrating user experience. Prioritizing availability keeps information flowing.

- Online Gaming: Online multiplayer games heavily rely on availability to maintain a smooth gameplay experience. Players expect to be able to join games, interact with other players, and have their actions reflected in real time. A slight delay in updating the scores or player positions across all servers is preferable to a complete game outage. Consider a fast-paced shooter game. A consistent system might freeze a player’s actions if it struggles to synchronize with the game server, leading to frustration. Prioritizing availability ensures continuous gameplay, even with potential minor discrepancies.

- DNS (Domain Name System) Services: DNS servers must be highly available to resolve domain names to IP addresses. If DNS resolution fails, users cannot reach websites. Because resolvers cache records until their time-to-live (TTL) expires, updates reach different servers with a delay, but the primary goal is to respond to DNS queries. A temporary inconsistency is acceptable, but unavailability is not. Think of a scenario where a company’s website is unreachable because of a DNS failure. Availability is essential to maintain online presence.

- Real-time Streaming Services: Streaming services, such as video and audio platforms, emphasize availability. Users expect continuous playback without interruptions. While there might be minor buffering or slight inconsistencies in recommendations, the core function is to stream content. Imagine a user watching a live sporting event. A consistent system might pause the stream if it struggles to retrieve data. Prioritizing availability keeps playback continuous, even if there are occasional quality hiccups.
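The DNS example relies on a simple availability-first policy: answer from cache, and if the upstream source cannot be reached, keep answering with the last known value even after it expires. Some DNS resolvers implement a version of this as "serve-stale" (RFC 8767). The Python sketch below is a generic illustration of the idea, not a DNS resolver; `StaleTolerantCache` is a hypothetical class, and `resolve` is a caller-supplied lookup assumed to raise `OSError` when the upstream is unreachable.

```python
import time
from typing import Callable, Optional


class StaleTolerantCache:
    """TTL cache that falls back to an expired entry when the upstream lookup fails."""

    def __init__(self, resolve: Callable[[str], str], ttl: float,
                 clock: Callable[[], float] = time.monotonic):
        self.resolve = resolve  # hypothetical upstream lookup supplied by the caller
        self.ttl = ttl
        self.clock = clock
        self.entries: dict[str, tuple[str, float]] = {}  # name -> (answer, expires_at)

    def lookup(self, name: str) -> str:
        now = self.clock()
        cached: Optional[tuple[str, float]] = self.entries.get(name)
        if cached is not None and cached[1] > now:
            return cached[0]  # fresh hit: no upstream call needed
        try:
            answer = self.resolve(name)
        except OSError:
            if cached is not None:
                return cached[0]  # upstream down: a stale answer beats no answer
            raise  # nothing cached, so the failure reaches the caller
        self.entries[name] = (answer, now + self.ttl)
        return answer
```

Passing a fake `clock` makes the expiry logic easy to test. The design choice is explicit: when the upstream is down, an expired answer is returned instead of an error, trading freshness for availability, while a name that was never cached still fails.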

Consequences of Sacrificing Consistency

The decision to prioritize availability has potential consequences, particularly concerning data consistency.

- Stale Data: Users might see outdated information. For example, in an e-commerce platform, a user might add an item to their cart that has already been purchased by another user. This could lead to frustration and potential issues with order fulfillment.

- Data Conflicts: Concurrent updates to the same data can result in conflicts. Consider a scenario where two users try to update the same profile information simultaneously. Without proper conflict resolution mechanisms, one user’s changes might be overwritten. This situation requires careful design considerations, such as “last write wins” or more sophisticated merging strategies.

- Lost Updates: In certain cases, updates might be lost. If a system is designed with eventual consistency, there’s a small chance that an update might be overwritten before it can be propagated to all nodes. This risk must be carefully weighed against the benefits of increased availability.

- Complexity in Data Management: Handling eventual consistency and resolving conflicts adds complexity. Developers need to implement mechanisms to detect and resolve conflicts, manage data replication, and ensure data integrity. These mechanisms add to the overall complexity of the system, increasing development and operational costs.

- Potential for Incorrect Decisions: Users or systems may make decisions based on inaccurate information. If a user is viewing outdated inventory data, they might make a purchase that cannot be fulfilled. This can lead to customer dissatisfaction and potentially damage the business’s reputation.
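The difference between “last write wins” and a conflict-detecting strategy can be shown in a few lines. In this Python sketch, each stored `Version` carries a wall-clock timestamp and a vector clock with one counter per replica. Vector clocks are one of the more sophisticated options alluded to above and are used by some Dynamo-style stores, but the names here are hypothetical and the replicas are only simulated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Version:
    value: str
    timestamp: float         # writer's wall-clock time, used only by LWW
    clock: tuple[int, ...]   # vector clock: one counter per replica


def lww_merge(a: Version, b: Version) -> Version:
    """Last write wins: keep the later timestamp and silently drop the other write."""
    return a if a.timestamp >= b.timestamp else b


def happened_before(x: tuple[int, ...], y: tuple[int, ...]) -> bool:
    """True if clock x causally precedes clock y."""
    return x != y and all(i <= j for i, j in zip(x, y))


def vector_merge(a: Version, b: Version) -> list[Version]:
    """Keep the causally newer write; return both siblings if the writes are concurrent."""
    if a.clock == b.clock or happened_before(b.clock, a.clock):
        return [a]
    if happened_before(a.clock, b.clock):
        return [b]
    return [a, b]  # concurrent: the application must merge or ask the user


if __name__ == "__main__":
    base = Version("Ada", 1.0, (1, 0))
    edit_0 = Version("Ada Lovelace", 2.0, (2, 0))  # written on replica 0
    edit_1 = Version("A. Lovelace", 2.5, (1, 1))   # written on replica 1, concurrently
    print(lww_merge(edit_0, edit_1).value)                  # replica 0's edit is lost
    print([v.value for v in vector_merge(edit_0, edit_1)])  # both edits kept as siblings
    print([v.value for v in vector_merge(base, edit_0)])    # edit_0 supersedes base
```

With last write wins, the edit with the later timestamp silently replaces the one made on replica 0, which is exactly the lost-update risk described above; it also depends on replica clocks being reasonably synchronized. The vector-clock merge instead recognizes that neither write causally precedes the other and returns both, leaving the application to merge them or ask the user.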

Scenarios Where Consistency Takes Priority

In certain applications, maintaining data consistency is more critical than ensuring constant availability. This means that the system might sacrifice the ability to respond to every request immediately to guarantee that the data remains accurate and reliable. The choice between availability and consistency depends heavily on the specific requirements of the application and the potential consequences of data inconsistencies. Prioritizing consistency ensures that users always see the correct and up-to-date information, which is crucial for many business operations.

Applications Prioritizing Consistency

A variety of applications require strict data consistency to function correctly and maintain user trust. These applications often deal with financial transactions, critical infrastructure, or sensitive personal data. The following list provides examples of applications where consistency is paramount:

- Financial Transactions: Applications like online banking, stock trading platforms, and payment processing systems prioritize consistency. The accuracy of financial data is non-negotiable.

  - Reason: Even minor inconsistencies, such as incorrect balances or duplicated transactions, can lead to significant financial losses, legal issues, and a loss of customer trust. For example, a double-charged transaction or an incorrect account balance can cause significant distress to a customer and require extensive reconciliation efforts.

- E-commerce Platforms: E-commerce platforms, especially those managing inventory and order fulfillment, benefit from strong consistency. Accurate inventory counts and order processing are essential.

  - Reason: Inconsistent data can lead to overselling products (promising items that are out of stock), incorrect order fulfillment, and ultimately, dissatisfied customers. Imagine a customer ordering an item only to find out it is unavailable after the payment has been processed.

- Healthcare Systems: Systems that store patient medical records, diagnostic results, and treatment plans require strong consistency to ensure patient safety and effective care.

  - Reason: Inconsistent data, such as incorrect medication dosages or inaccurate diagnoses, can have severe consequences for patient health. A doctor relying on outdated or incorrect medical information could make a critical error in treatment.

- Database Management Systems (DBMS): Traditional relational databases (e.g., PostgreSQL, MySQL) prioritize consistency through features like ACID (Atomicity, Consistency, Isolation, Durability) properties.

  - Reason: ACID properties guarantee that database transactions are reliable and consistent, which is vital for data integrity and for recovering from system failures. Note that the “C” in ACID means that every transaction preserves the database’s integrity constraints; this is related to, but distinct from, the “C” in CAP, which concerns replicas agreeing on the latest value.

- Government and Legal Systems: Applications used for maintaining legal records, tax information, and citizen data require consistency to ensure accuracy and compliance with legal regulations.

  - Reason: Inconsistencies in these systems can lead to legal disputes, incorrect tax assessments, and other serious consequences. For example, incorrect property records can cause issues with ownership and property rights.
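Single-node ACID transactions are the simplest way to see what these consistency-first applications rely on. The following Python sketch uses the standard-library `sqlite3` module and an in-memory database; the `accounts` table, its `CHECK` constraint, and the helpers `open_db`, `transfer`, and `balances` are hypothetical. The transfer credits the destination before debiting the source, so if the debit would violate the non-negative balance constraint, the whole transaction is rolled back and neither account changes. This illustrates atomicity on one node only; keeping replicas consistent across a network requires replication and consensus techniques on top.

```python
import sqlite3


def open_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        " id TEXT PRIMARY KEY,"
        " balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 0)])
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move `amount` from src to dst atomically; return False if it was rolled back."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            # If this debit breaks the CHECK constraint, the credit above is undone too.
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False


def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


if __name__ == "__main__":
    conn = open_db()
    print(transfer(conn, "alice", "bob", 30), balances(conn))
    print(transfer(conn, "alice", "bob", 500), balances(conn))
```

The first transfer succeeds and leaves `alice` at 70 and `bob` at 30; the second exceeds Alice's balance, so `transfer` returns `False` and both balances stay exactly as they were.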

Risks of Compromising Consistency

Compromising data consistency in applications where it’s crucial can lead to several significant risks. These risks often outweigh the benefits of increased availability. Understanding these potential problems helps to justify the decision to prioritize consistency in specific contexts.

- Data Corruption: Inconsistent data can lead to corrupted data, where the information stored is inaccurate or incomplete. This corruption can spread throughout the system, making it difficult to trust any data.

- Financial Losses: Inconsistent financial data can directly lead to financial losses through incorrect transactions, fraud, and legal penalties. For example, if a stock trading platform experiences data inconsistencies, trades might be executed incorrectly, causing significant financial harm to both the platform and its users.

- Legal and Regulatory Violations: Inconsistent data in regulated industries, such as healthcare and finance, can lead to violations of legal and regulatory requirements. This can result in fines, lawsuits, and damage to reputation.

- Loss of Trust: Users and customers will lose trust in a system that frequently displays inconsistent or incorrect information. This loss of trust can be difficult to recover and can lead to a decline in usage and revenue.

- Operational Disruptions: Inconsistent data can disrupt business operations by causing incorrect inventory levels, order fulfillment errors, and other operational inefficiencies. For example, an e-commerce platform with inconsistent inventory data might oversell products, leading to delayed shipments and dissatisfied customers.

The Role of Data Models in the Choice

The selection between prioritizing availability and consistency is significantly influenced by the chosen data model. Different data models are designed with inherent trade-offs, often leaning towards either availability or consistency based on their underlying structure and operational characteristics. Understanding these biases is crucial when designing a system that meets specific requirements for data integrity and responsiveness.

Data Models and Their Bias

The data model employed in a system profoundly affects its ability to balance availability and consistency. Certain data models are inherently better suited for applications that demand high availability, while others prioritize strong consistency. The choice of data model therefore predetermines, to a large extent, the trade-offs a system will make in the face of network partitions.

- Relational Databases (RDBMS): Relational databases, with their ACID (Atomicity, Consistency, Isolation, Durability) properties, are strongly biased towards consistency. They ensure data integrity through strict adherence to constraints and transactions. However, this can lead to reduced availability during network partitions, as transactions may need to be aborted or delayed to maintain consistency.

- NoSQL Databases: NoSQL databases offer a variety of data models, each with different trade-offs.

  - Key-Value Stores: Key-value stores, known for their simplicity, typically prioritize availability. They can often handle partitions by replicating data across multiple nodes and allowing reads and writes to continue even if some nodes are unavailable. Replicas are often only eventually consistent, meaning that data changes propagate across the system over time.

  - Document Databases: Document databases often offer a balance between consistency and availability. They can support both strong consistency and eventual consistency, depending on the configuration. The ability to store complex, semi-structured data can also contribute to flexibility in handling various consistency requirements.

  - Column-Family Databases: Many column-family databases, such as Apache Cassandra, are designed for high availability and scalability. They are optimized for writes and can often tolerate partitions by allowing writes to continue even if some nodes are down. Consistency is typically eventual, although Cassandra lets clients tune the consistency level per request; HBase, another column-family store, instead favors consistency.

  - Graph Databases: Graph databases, designed to model relationships between data, often prioritize consistency, especially when dealing with critical relationships. However, some graph database implementations can be configured to favor availability over strict consistency, depending on the application’s needs.

- Data Warehouses: Data warehouses, designed for analytical processing, often focus on consistency within the warehouse itself, even if the source data is eventually consistent. They typically prioritize data integrity for accurate reporting and analysis.
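The relational-database entry notes that transactions may be aborted or delayed during a partition. When a transaction's data spans several nodes, one classic way to commit it atomically is two-phase commit (2PC). The following is a simplified, in-memory sketch. The `Participant` class and its `reachable` and `can_commit` flags are hypothetical, and the sketch omits the durable logging, timeouts, and abort messages a real coordinator needs. It shows why a single unreachable node forces the whole transaction to abort.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """Hypothetical resource manager, e.g. one database shard."""
    name: str
    reachable: bool = True    # False simulates a node cut off by a partition
    can_commit: bool = True   # False simulates a local constraint violation
    committed: list = field(default_factory=list)

    def prepare(self, txn_id: str) -> bool:
        # Phase 1 vote; an unreachable node cannot vote at all.
        if not self.reachable:
            raise ConnectionError(f"{self.name} unreachable")
        return self.can_commit

    def commit(self, txn_id: str) -> None:
        self.committed.append(txn_id)


def two_phase_commit(txn_id: str, participants: list[Participant]) -> str:
    """Coordinator: commit only if every participant votes yes in phase 1."""
    for p in participants:
        try:
            if not p.prepare(txn_id):
                return "aborted"
        except ConnectionError:
            # Consistency over availability: refuse the write rather than
            # let the partitioned node diverge from the others.
            return "aborted"
    # Phase 2: all votes were yes, so every participant applies the write.
    for p in participants:
        p.commit(txn_id)
    return "committed"


nodes = [Participant("shard-a"), Participant("shard-b")]
print(two_phase_commit("t1", nodes))  # committed
nodes[1].reachable = False            # simulate a network partition
print(two_phase_commit("t2", nodes))  # aborted
```

The coordinator waits for every vote before anything is applied. That wait is where the added latency comes from, and any missing vote turns into unavailability for writes.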

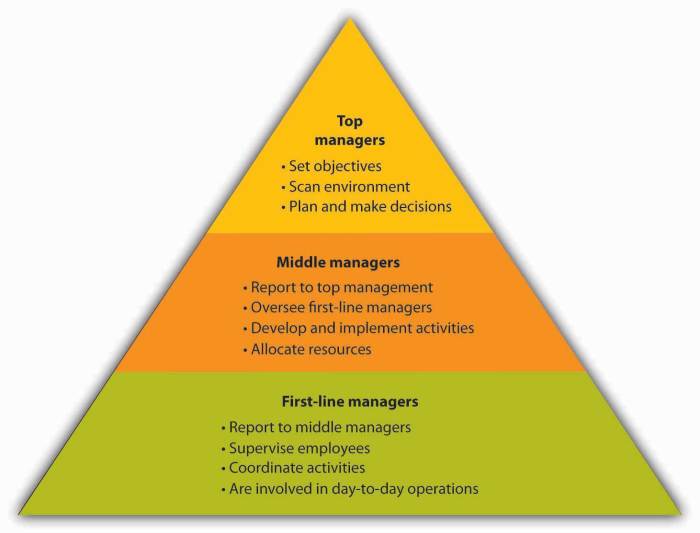

Visual Representation of Data Model Trade-offs

A visual representation can effectively illustrate the trade-offs inherent in different data models.

Imagine a two-dimensional chart. The x-axis represents Availability, increasing from left to right, and the y-axis represents Consistency, increasing from bottom to top.

- Relational Databases: Represented as a point in the upper-left quadrant. They score high on consistency but lower on availability.

- Key-Value Stores: Represented as a point in the lower-right quadrant. They score high on availability but lower on consistency.

- Document Databases: Represented as a point near the center, reflecting a balance between availability and consistency. Configuration can move the point upward (toward consistency) or to the right (toward availability).

- Column-Family Databases: Positioned in the lower-right quadrant, similar to key-value stores, but with a slightly higher emphasis on availability.

- Graph Databases: Positioned in the upper-middle section, demonstrating a stronger focus on consistency compared to document databases, with varying degrees of availability depending on the implementation.

The chart would also include a line drawn diagonally across it to represent the CAP trade-off itself. During a partition, no system can reach the top-right corner, where consistency and availability are both perfect. A data model's position on the chart shows how strongly it leans one way: the farther right a point sits, the more it favors availability, and the higher it sits, the more it favors consistency.

This visualization makes the consistency and availability implications of choosing a specific data model easier to see.

Practical Strategies for Choosing the Right Approach

Choosing between availability and consistency is a crucial decision in distributed systems design. The optimal choice hinges on a thorough understanding of the system's requirements and a careful evaluation of the trade-offs involved. This section outlines practical strategies to guide this decision-making process, ensuring that the chosen approach aligns with the application's performance, scalability, and data integrity needs.

Evaluating Trade-offs Between Availability and Consistency

The decision between availability and consistency requires a balanced evaluation of their respective impacts. Consider the following points to weigh the trade-offs effectively.

- Identify Critical Data: Determine which data is most crucial for the application’s core functionality. For data that is highly sensitive or essential for accurate decision-making (e.g., financial transactions), consistency might be prioritized. For less critical data, or where eventual consistency is acceptable, availability might take precedence.

- Assess Tolerance for Data Staleness: Evaluate how long the system can tolerate potentially stale data. In scenarios where near real-time data is essential (e.g., stock prices), consistency is often preferred. If a slight delay in data propagation is acceptable (e.g., social media feeds), availability may be a more viable option.

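- Analyze Read/Write Ratio: Consider the balance between read and write operations. Systems with a high read-to-write ratio often benefit from prioritizing availability, because reads can be served from any replica even during network partitions, at the risk of occasionally returning stale data. Systems with a high write-to-read ratio may benefit from prioritizing consistency to ensure data integrity during updates. Keep in mind, though, that under strong consistency every write pays a coordination cost, so that cost grows with write volume.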

- Consider the Cost of Inconsistency: Evaluate the potential consequences of data inconsistency. If inconsistent data could lead to significant errors, financial losses, or reputational damage, prioritize consistency. If the impact of minor inconsistencies is minimal, availability can be favored.

- Evaluate Network Reliability: Assess the reliability of the network infrastructure. In unreliable networks, prioritizing availability might be more practical, as it allows the system to continue operating even during network disruptions. In reliable networks, the focus can shift towards consistency.
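The five criteria can be turned into a rough checklist. The following is a minimal sketch, assuming a hypothetical `DataProfile` record per category of data. Each criterion casts one equal-weight vote for consistency or availability. The thresholds (`STALENESS_THRESHOLD_S`, `READ_HEAVY_RATIO`) are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass

# Illustrative thresholds; real values depend on the application.
STALENESS_THRESHOLD_S = 1.0   # below this, stale data is not acceptable
READ_HEAVY_RATIO = 10.0       # reads per write that count as "read-heavy"


@dataclass
class DataProfile:
    """Hypothetical description of one category of data."""
    name: str
    critical: bool              # essential to core functionality?
    max_staleness_s: float      # how long stale reads are tolerable
    read_write_ratio: float     # reads per write
    costly_inconsistency: bool  # losses or damage if data diverges?
    unreliable_network: bool    # frequent disruptions expected?


def choose_priority(p: DataProfile) -> str:
    """Each criterion votes for consistency (+1) or availability (-1)."""
    votes = [
        1 if p.critical else -1,
        1 if p.max_staleness_s < STALENESS_THRESHOLD_S else -1,
        -1 if p.read_write_ratio >= READ_HEAVY_RATIO else 1,
        1 if p.costly_inconsistency else -1,
        -1 if p.unreliable_network else 1,
    ]
    # Five votes of +/-1 always sum to an odd number, so there is no tie.
    return "consistency" if sum(votes) > 0 else "availability"


payments = DataProfile("payments", critical=True, max_staleness_s=0.0,
                       read_write_ratio=2.0, costly_inconsistency=True,
                       unreliable_network=False)
feed = DataProfile("social feed", critical=False, max_staleness_s=30.0,
                   read_write_ratio=100.0, costly_inconsistency=False,
                   unreliable_network=True)
print(choose_priority(payments))  # consistency
print(choose_priority(feed))      # availability
```

Equal weights keep the sketch simple. A real evaluation would probably let a high cost of inconsistency override the other factors.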

Step-by-Step Procedure for Selecting the Appropriate Approach

A structured approach simplifies the selection process, ensuring a well-informed decision.

- Define System Requirements: Clearly define the application’s functional and non-functional requirements. This includes understanding the types of data handled, the expected read/write patterns, the acceptable latency, and the tolerance for data loss or inconsistency.

- Analyze Data Characteristics: Examine the nature of the data being managed. Identify data dependencies, the criticality of data integrity, and the frequency of updates. Categorize data based on its sensitivity and the impact of potential inconsistencies.

- Evaluate CAP Theorem Implications: Apply the CAP Theorem to understand the inherent trade-offs. Recognize that in the presence of a network partition, the system must choose between consistency and availability.

- Prioritize Based on Requirements: Based on the analysis, prioritize either availability or consistency. Consider the following:

  - If data integrity is paramount, prioritize consistency.

  - If continuous operation is essential, prioritize availability.

- Select Data Model and Technologies: Choose data models and technologies that align with the chosen priority.

  - For consistency, consider using ACID-compliant databases or distributed consensus algorithms.

  - For availability, consider using eventual consistency models, caching, and geographically distributed data centers.

- Design for Fault Tolerance: Implement mechanisms to handle network partitions and failures. This may include using replication, redundancy, and failover strategies.

- Implement Monitoring and Alerting: Set up monitoring and alerting systems to track system performance, data consistency, and availability. This allows for proactive identification and resolution of issues.

- Test and Iterate: Rigorously test the system under various conditions, including network partitions and high load. Continuously monitor and iterate on the design based on performance and feedback.
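One concrete form of the monitoring step is to alert on replication lag. The following is a minimal sketch with several assumptions: each replica reports the commit timestamp of the last write it has applied, clocks are roughly synchronized, and the function name, input format, and 5-second default are all hypothetical. Systems that expose replication-log positions instead of timestamps could compare those in the same way.

```python
def replication_lag_alerts(primary_commit_ts: float,
                           replica_applied_ts: dict[str, float],
                           max_lag_s: float = 5.0) -> dict[str, float]:
    """Return the replicas whose lag behind the primary exceeds max_lag_s.

    primary_commit_ts: timestamp of the latest write committed on the primary.
    replica_applied_ts: replica name -> timestamp of the latest applied write.
    """
    lagging = {}
    for replica, applied_ts in replica_applied_ts.items():
        lag = primary_commit_ts - applied_ts
        if lag > max_lag_s:
            lagging[replica] = lag  # seconds of potentially stale data
    return lagging


print(replication_lag_alerts(100.0, {"eu-1": 99.0, "us-1": 90.0}))
# {'us-1': 10.0}
```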

Assessing the Potential Impact of Each Choice on Performance and Scalability

The chosen approach significantly impacts system performance and scalability. Understanding these impacts is crucial for making an informed decision.

- Consistency-Focused Systems:

  - Performance: Consistency often comes at the cost of performance. Strong consistency is typically enforced with coordination protocols such as two-phase commit (2PC) or consensus algorithms such as Paxos. These add latency because a write cannot complete until other nodes have acknowledged it.

  - Scalability: Scaling consistent systems can be challenging. Distributed consensus algorithms require a majority of nodes to agree on each update, and adding nodes enlarges that majority, which can limit scalability.

  - Example: A financial transaction system prioritizes consistency to ensure the accuracy of account balances. While this approach guarantees data integrity, it may experience higher latency during transactions compared to an eventually consistent system.

- Availability-Focused Systems:

  - Performance: Availability-focused systems generally offer lower latency, because data is often cached and served from multiple locations close to users.

  - Scalability: Availability-focused systems are often highly scalable. Data can be replicated across multiple nodes and data centers, enabling the system to handle a large number of requests.

  - Example: A social media platform prioritizes availability to ensure users can access their feeds even during network disruptions. This approach may tolerate minor data inconsistencies, such as temporary delays in the propagation of updates.

- Hybrid Approaches:

  - Performance and Scalability: Hybrid approaches attempt to balance consistency and availability. These systems may use different consistency models for different data sets or transactions, optimizing for both performance and scalability.

  - Example: An e-commerce platform might prioritize consistency for critical operations like order processing and payment, while using eventual consistency for less critical data like product recommendations or user reviews.

- Impact of Data Models: The choice of data model can influence the trade-offs.

  - ACID-compliant databases: Prioritize consistency, potentially impacting performance and scalability.

  - NoSQL databases: Often prioritize availability and scalability, potentially trading off consistency.
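A little arithmetic makes the majority requirement concrete. The following sketch computes the majority size and the number of failures a cluster tolerates. It also adds the read/write quorum overlap rule used by Dynamo-style stores: a read from R replicas is guaranteed to overlap a write acknowledged by W of N replicas when R + W > N. Choosing R and W per operation is one way hybrid systems tune consistency. The function names are hypothetical, and the overlap rule assumes strict quorums over a fixed replica set.

```python
def majority(n: int) -> int:
    """Smallest number of nodes that forms a majority of n."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """Nodes that can fail while a majority is still reachable."""
    return n - majority(n)


def read_sees_latest_write(n: int, r: int, w: int) -> bool:
    """True if every read quorum (r) overlaps every write quorum (w)."""
    return r + w > n


print(majority(5), tolerated_failures(5))    # 3 2
print(majority(4), tolerated_failures(4))    # 3 1
print(read_sees_latest_write(3, r=2, w=2))   # True: consistency-leaning
print(read_sees_latest_write(3, r=1, w=1))   # False: availability-leaning
```

Four nodes tolerate only one failure, the same as three. Growing a cluster raises the number of nodes every write must reach without always improving fault tolerance.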

Techniques for Mitigating the Trade-Offs

The CAP theorem presents a fundamental trade-off, but it doesn’t mean we’re entirely helpless in the face of it. Several techniques exist to minimize the negative impacts of choosing between availability and consistency. These strategies aim to provide a balance, offering a system that is both responsive and reliable, even in the presence of network partitions.

Eventual Consistency with Conflict Resolution

Eventual consistency allows for high availability by accepting writes on multiple nodes, even if some nodes are temporarily unavailable or partitioned. Updates then propagate to all nodes, so that once writes stop, every replica converges to the same value. However, because different nodes can accept different writes to the same item, this approach introduces the possibility of conflicts, which need to be addressed.

The key to making eventual consistency effective is conflict resolution: strategies for handling situations where different nodes hold different versions of the same data.

These strategies can be implemented at the application level or within the database system itself. Some common approaches include:

- Last Write Wins (LWW): The write with the latest timestamp is kept as the definitive version. This is simple, but when two updates are concurrent, one of them is silently discarded. Clock skew between nodes can even let an older write win.

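- Version Vectors: Each data item carries a version vector, which counts the updates made by each node. Comparing two vectors shows whether one version causally precedes the other, in which case it can simply be replaced. Otherwise, the two versions are concurrent and must be resolved.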

- Operational Transforms (OT): Used primarily in collaborative editing systems, OT transforms concurrent operations to ensure they converge to a consistent state.

- Application-Specific Conflict Resolution: The application logic defines how to resolve conflicts based on the specific data and use case. This offers the most flexibility but requires careful design.
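To make the version-vector idea concrete, the following is a minimal sketch. It represents a vector as a dictionary from node ID to update counter, an assumed encoding (real systems differ), and treats a missing entry as zero. `compare` detects whether one version supersedes the other or whether they are concurrent. `merge` takes the element-wise maximum, which gives the vector for a version that supersedes both.

```python
def compare(a: dict[str, int], b: dict[str, int]) -> str:
    """Return "equal", "before" (a precedes b), "after", or "concurrent"."""
    nodes = a.keys() | b.keys()
    a_le_b = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    b_le_a = all(b.get(n, 0) <= a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"       # b already includes everything a has seen
    if b_le_a:
        return "after"
    return "concurrent"       # neither dominates: a real conflict


def merge(a: dict[str, int], b: dict[str, int]) -> dict[str, int]:
    """Element-wise maximum of two version vectors."""
    return {n: max(a.get(n, 0), b.get(n, 0)) for n in sorted(a.keys() | b.keys())}


print(compare({"A": 1}, {"A": 2}))          # before
print(compare({"A": 2}, {"A": 1, "B": 1}))  # concurrent
print(merge({"A": 2}, {"A": 1, "B": 1}))    # {'A': 2, 'B': 1}
```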

Here’s an example of a simple conflict resolution strategy, where the application prioritizes the most recent modification timestamp:

```python
from dataclasses import dataclass


@dataclass
class Version:
    """One replica's copy of a data item (illustrative structure)."""
    value: str
    timestamp: float  # modification time, e.g. seconds since the epoch
    node_id: int      # ID of the node that produced this version


def resolve_conflict(local: Version, remote: Version) -> Version:
    if local.timestamp > remote.timestamp:
        return local   # local data is more recent
    if remote.timestamp > local.timestamp:
        return remote  # remote data is more recent
    # Timestamp collision: use a secondary tie-breaker (the node ID)
    return local if local.node_id > remote.node_id else remote
```

In this example, the `resolve_conflict` function takes two versions of a data item and compares their timestamps. The `Version` record holding the value, timestamp, and node ID is an illustrative structure, not part of any particular database. The version with the later timestamp is chosen as the definitive version. If the timestamps are equal, the node ID serves as a secondary tie-breaker. Because that rule is deterministic, every replica comparing the same pair of versions picks the same winner. This approach, while simple, illustrates the fundamental principle of conflict resolution: defining a strategy to merge or choose between conflicting versions of data.

This allows the system to maintain availability while eventually achieving consistency.
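Last write wins discards one of two concurrent updates. For comparison, the following is a minimal sketch of application-specific resolution. It assumes a hypothetical shopping cart stored as a map from item ID to quantity. The application rule, also an assumption, keeps every item from both versions with the larger quantity, so a concurrent "add to cart" is never lost.

```python
def merge_carts(local: dict[str, int], remote: dict[str, int]) -> dict[str, int]:
    """Merge two conflicting cart versions (item ID -> quantity)."""
    merged = dict(local)
    for item, qty in remote.items():
        # Keep the item if either side has it, with the larger quantity.
        merged[item] = max(merged.get(item, 0), qty)
    return merged


print(merge_carts({"book": 1}, {"book": 2, "pen": 1}))  # {'book': 2, 'pen': 1}
```

The trade-off runs the other way from last write wins. No addition is lost, but an item removed on one replica can reappear after the merge. Whether that is acceptable is exactly the kind of decision the application logic has to make.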

Future Trends and Considerations

The landscape of distributed systems is constantly evolving, driven by advancements in technology and the increasing demands of modern applications. Understanding these emerging trends and their implications for the availability-consistency trade-off is crucial for making informed design decisions. This section explores these trends, including the impact of cloud and edge computing, and offers insights into the future of data management.

Emerging Trends in Distributed Systems and the Availability-Consistency Dilemma

Several trends are reshaping how distributed systems are designed and operated, each presenting new challenges and opportunities regarding the CAP theorem. These trends influence the choice between prioritizing availability or consistency.

- Microservices Architecture: The shift towards microservices, where applications are broken down into smaller, independently deployable services, promotes greater availability. Each service can be scaled and updated independently, reducing the impact of failures. However, this architecture also increases the complexity of maintaining consistency across multiple services, often necessitating eventual consistency models. For example, consider an e-commerce platform. A user's shopping cart (a microservice) might be highly available, allowing users to continue adding items even if the inventory service (another microservice) is temporarily unavailable. This could lead to temporary inconsistencies in stock levels, which are eventually resolved through background processes.

- Serverless Computing: Serverless architectures abstract away the underlying infrastructure, allowing developers to focus on code. This model promotes high availability by automatically scaling resources based on demand. The inherent elasticity of serverless environments often favors availability over strict consistency, as data consistency can be challenging to manage in these highly distributed and ephemeral systems.

- Eventual Consistency: The rise of systems that embrace eventual consistency is a significant trend. This approach prioritizes availability and partition tolerance, accepting that data may not be immediately consistent across all nodes. Techniques like conflict resolution and reconciliation are crucial in these systems. Cassandra and DynamoDB are well-known examples built around eventual consistency. Both also let clients request stronger consistency for individual operations: Cassandra through tunable consistency levels, DynamoDB through strongly consistent reads.

- Multi-Cloud and Hybrid Cloud: Organizations are increasingly adopting multi-cloud and hybrid cloud strategies to improve resilience and avoid vendor lock-in. This approach, however, introduces new complexities in data consistency, as data needs to be synchronized across different cloud providers or between on-premises and cloud environments. Choosing between availability and consistency becomes even more critical in these scenarios, as the network latency and potential for partitions increase.
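The shopping-cart example from the microservices trend can be sketched in a few lines. In this minimal illustration, all class names are hypothetical: `InMemoryInventory` stands in for a remote inventory service and uses an `online` flag to simulate an outage. The cart accepts the item locally, queues the stock reservation when the inventory service is unreachable, and a background `reconcile` job replays the queue later.

```python
from collections import deque


class InventoryUnavailable(Exception):
    """Raised when the inventory service cannot be reached."""


class InMemoryInventory:
    """Stand-in for a remote inventory service; `online` simulates outages."""

    def __init__(self) -> None:
        self.online = True
        self.reserved: dict[str, int] = {}

    def reserve(self, item: str, qty: int) -> None:
        if not self.online:
            raise InventoryUnavailable(item)
        self.reserved[item] = self.reserved.get(item, 0) + qty


class CartService:
    """Cart that stays writable while the inventory service is down."""

    def __init__(self, inventory: InMemoryInventory) -> None:
        self.inventory = inventory
        self.items: dict[str, int] = {}
        self.pending = deque()  # (item, qty) reservations still to send

    def add_item(self, item: str, qty: int) -> None:
        # Accept the write locally first: the cart stays available.
        self.items[item] = self.items.get(item, 0) + qty
        try:
            self.inventory.reserve(item, qty)
        except InventoryUnavailable:
            # Stock levels are now temporarily inconsistent with the cart.
            self.pending.append((item, qty))

    def reconcile(self) -> None:
        """Background job: replay queued reservations once inventory is back."""
        while self.pending:
            item, qty = self.pending[0]
            try:
                self.inventory.reserve(item, qty)
            except InventoryUnavailable:
                return  # still unreachable; retry on the next run
            self.pending.popleft()


inventory = InMemoryInventory()
cart = CartService(inventory)
inventory.online = False
cart.add_item("book", 1)   # succeeds even though inventory is down
inventory.online = True
cart.reconcile()           # the background process catches up
```

The sketch ignores the harder case where stock has run out by the time reconciliation runs. A real system would need a compensating action for that, such as notifying the customer.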

Cloud Computing and Edge Computing’s Influence

Cloud computing and edge computing are fundamentally altering how applications are deployed and data is managed, directly impacting the choices between availability and consistency.

- Cloud Computing: Cloud platforms provide scalable and resilient infrastructure, enabling organizations to build highly available systems. However, the distributed nature of cloud environments can also introduce challenges in maintaining data consistency. The inherent flexibility of cloud services allows developers to tailor their approach to the CAP theorem based on their specific needs. For instance, a content delivery network (CDN) prioritizes availability by caching content at edge locations, even if the content is not immediately consistent with the origin server.

- Edge Computing: Edge computing brings processing closer to the data source, reducing latency and enabling real-time applications. Edge deployments often prioritize availability and partition tolerance due to the inherent challenges of maintaining constant connectivity with a central data center. Data is frequently cached at the edge, and local processing is favored, even if it means accepting eventual consistency. Examples include autonomous vehicles that need to process data locally for immediate decisions, even when connectivity to a central server is intermittent.

- Data Locality and Proximity: Both cloud and edge computing emphasize data locality. Placing data closer to the users or the processing units improves performance and reduces latency. However, this can also complicate consistency management, particularly in edge environments where network conditions are unpredictable.
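The CDN and edge-caching behavior described above can be sketched as a cache that does three things. It serves entries within a time-to-live (TTL), refetches from the origin after the TTL expires, and falls back to a stale copy when the origin is unreachable. The following is a minimal sketch, not how any particular CDN works. The `fetch_origin` callable, the injectable `clock`, and the use of `ConnectionError` to signal an unreachable origin are all assumptions.

```python
import time
from typing import Callable


class EdgeCache:
    """Edge cache that prefers availability over freshness."""

    def __init__(self, fetch_origin: Callable[[str], str], ttl_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch_origin = fetch_origin  # hypothetical origin fetch function
        self.ttl_s = ttl_s
        self.clock = clock
        self.entries: dict[str, tuple[str, float]] = {}  # key -> (body, fetched_at)

    def get(self, key: str) -> tuple[str, bool]:
        """Return (body, is_stale)."""
        cached = self.entries.get(key)
        now = self.clock()
        if cached and now - cached[1] < self.ttl_s:
            return cached[0], False        # fresh hit
        try:
            body = self.fetch_origin(key)
        except ConnectionError:
            if cached:
                return cached[0], True     # origin unreachable: serve stale copy
            raise                          # nothing cached: cannot answer
        self.entries[key] = (body, now)
        return body, False
```

Within the TTL, a cache hit can return content that no longer matches the origin. After the TTL, an unreachable origin still yields an answer, flagged as stale.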

The Future of Data Management and System Design

The future of data management will likely involve a hybrid approach, combining the benefits of both strong consistency and high availability. This requires innovative solutions and a deeper understanding of the trade-offs involved.

- Converging Consistency Models: Future systems will likely incorporate a spectrum of consistency models, allowing developers to choose the appropriate model for each specific use case. This could involve using strong consistency for critical transactions and eventual consistency for less critical data.

- Intelligent Data Management: Artificial intelligence (AI) and machine learning (ML) will play a greater role in managing data consistency. These technologies can be used to predict and resolve conflicts, optimize data replication, and automatically adjust consistency levels based on system conditions. For example, an AI system could detect network congestion and temporarily relax consistency requirements to maintain availability.

- Data Governance and Observability: Data governance frameworks and enhanced observability tools will be essential for managing complex distributed systems. These tools will provide insights into data consistency, performance, and system behavior, allowing developers to proactively address potential issues. This includes monitoring data replication lag, detecting inconsistencies, and providing tools for data reconciliation.

- Specialized Databases: The emergence of specialized databases, optimized for specific workloads, will continue. These databases will be designed with particular consistency and availability requirements in mind, allowing developers to choose the best tool for the job. For example, time-series databases are optimized for high-volume data ingestion and are often designed with eventual consistency in mind.
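The intelligent-data-management idea, relaxing consistency when the network is congested, can be illustrated without any machine learning. In the following minimal sketch, a fixed latency threshold stands in for a learned policy. The function name, the `Consistency` levels, and the 200 ms budget are hypothetical, and critical operations are never relaxed.

```python
from enum import Enum


class Consistency(Enum):
    STRONG = "strong"      # e.g., wait for a majority of replicas
    EVENTUAL = "eventual"  # e.g., acknowledge after a single replica


def pick_consistency(p99_latency_ms: float, critical: bool,
                     latency_budget_ms: float = 200.0) -> Consistency:
    """Relax consistency for non-critical requests when the network is slow."""
    if critical:
        return Consistency.STRONG    # never relax critical operations
    if p99_latency_ms > latency_budget_ms:
        return Consistency.EVENTUAL  # congestion: keep serving requests
    return Consistency.STRONG


print(pick_consistency(p99_latency_ms=500, critical=False).value)  # eventual
print(pick_consistency(p99_latency_ms=500, critical=True).value)   # strong
```

A learned model would replace the threshold with a prediction. The surrounding structure, observing conditions and then choosing a level per request, would stay the same.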

End of Discussion

In conclusion, the decision between availability and consistency is not a one-size-fits-all solution. It demands a thorough understanding of system requirements, data models, and the potential consequences of each choice. By carefully evaluating these factors and employing techniques to mitigate the trade-offs, we can design robust and scalable systems that meet the demands of modern applications. The future of data management hinges on our ability to navigate this complex landscape with informed decisions and innovative approaches.

Essential FAQs

What is the CAP Theorem?

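The CAP Theorem states that in a distributed system, you can only guarantee two out of three properties: Consistency, Availability, and Partition tolerance. In practice, network partitions cannot be ruled out, so a system must choose between consistency and availability while a partition is in progress. This theorem helps guide the design choices in distributed systems.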

What is the difference between strong and eventual consistency?

Strong consistency ensures that every read returns the most recent completed write, so all users see the same data at the same time. Eventual consistency allows for temporary inconsistencies, but guarantees that once updates stop, all nodes will converge to the same data.
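The difference shows up in a tiny simulation of one primary and one asynchronously updated follower. The `ReplicatedStore` class is hypothetical. Reading from the primary gives strong consistency here only because the sketch has no failover; real systems need more machinery to keep that guarantee.

```python
class ReplicatedStore:
    """One primary plus one follower that is updated asynchronously."""

    def __init__(self) -> None:
        self.primary: dict[str, str] = {}
        self.follower: dict[str, str] = {}
        self.backlog: list[tuple[str, str]] = []  # writes not yet replicated

    def write(self, key: str, value: str) -> None:
        self.primary[key] = value
        self.backlog.append((key, value))  # shipped to the follower later

    def read_strong(self, key: str):
        return self.primary.get(key)       # always sees the latest write

    def read_eventual(self, key: str):
        return self.follower.get(key)      # may return stale or missing data

    def replicate(self) -> None:
        """Apply queued writes to the follower in order."""
        for key, value in self.backlog:
            self.follower[key] = value
        self.backlog.clear()


store = ReplicatedStore()
store.write("x", "1")
print(store.read_strong("x"))    # 1
print(store.read_eventual("x"))  # None: the follower has not caught up
store.replicate()
print(store.read_eventual("x"))  # 1: the replicas have converged
```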

How does data modeling influence the choice between availability and consistency?

Different data models have inherent biases. For example, relational databases often prioritize consistency, while NoSQL databases might favor availability. The choice of data model significantly impacts how you approach the CAP theorem trade-off.

What are some techniques for mitigating the availability-consistency trade-off?

Techniques like eventual consistency with conflict resolution, optimistic locking, and careful design of data replication strategies can help minimize the negative impacts of the trade-off. These approaches aim to balance the need for data integrity with the need for system responsiveness.
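Optimistic locking lets writers proceed without holding locks and detects conflicts at write time by checking a version number. The following is a minimal single-process sketch. The `Store` and `Record` classes are hypothetical stand-ins for a database that performs the version check and the write as one atomic step.

```python
from dataclasses import dataclass


class VersionConflict(Exception):
    """Raised when a record changed since the caller last read it."""


@dataclass
class Record:
    value: str
    version: int = 0


class Store:
    def __init__(self) -> None:
        self.records: dict[str, Record] = {}

    def read(self, key: str) -> Record:
        r = self.records[key]
        return Record(r.value, r.version)  # a copy, as a client would see it

    def write(self, key: str, value: str, expected_version: int) -> None:
        """Compare-and-set: fail if someone else updated the record first."""
        current = self.records.get(key)
        current_version = current.version if current else 0
        if current_version != expected_version:
            raise VersionConflict(
                f"{key}: expected v{expected_version}, found v{current_version}")
        self.records[key] = Record(value, current_version + 1)


store = Store()
store.write("cart", "[]", expected_version=0)
a = store.read("cart")                  # two clients read version 1
b = store.read("cart")
store.write("cart", "[book]", a.version)
try:
    store.write("cart", "[pen]", b.version)  # stale version: rejected
except VersionConflict as err:
    print("conflict:", err)             # conflict: cart: expected v1, found v2
```

The rejected client would typically re-read the record, reapply its change, and retry. No locks are held between the read and the write.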