Measuring DevOps performance with DORA metrics is a journey into the heart of software delivery excellence. In today’s fast-paced tech landscape, understanding and optimizing your DevOps practices is no longer optional; it’s essential for staying competitive. This guide covers the practical application of DORA (DevOps Research and Assessment) metrics and offers a roadmap for teams that want to improve their software delivery performance in measurable ways.

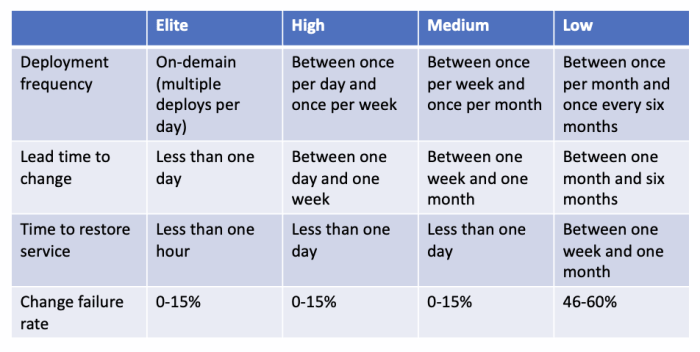

We will explore the core DORA metrics – Deployment Frequency, Lead Time for Changes, Change Failure Rate, and Time to Restore Service – providing actionable insights and practical strategies for measurement and improvement. From setting up your measurement system to analyzing data and fostering a culture of continuous improvement, this resource equips you with the knowledge and tools to transform your DevOps practices and drive impactful results.

Introduction to DevOps Performance Measurement

Measuring DevOps performance is crucial for understanding the effectiveness of your DevOps practices and driving continuous improvement. It provides insights into how quickly and reliably you can deliver software, allowing you to identify bottlenecks, optimize processes, and ultimately achieve better business outcomes. Without data-driven measurement, improvements are often based on assumptions, leading to wasted effort and missed opportunities. Data-driven insights empower teams to make informed decisions, track progress, and demonstrate the value of their work.

By regularly analyzing key metrics, organizations can identify areas for improvement, validate the impact of changes, and foster a culture of continuous learning. This approach shifts the focus from subjective opinions to objective evidence, enabling data-backed decisions.

General Principles of DevOps Performance Evaluation

The evaluation of DevOps performance is guided by several key principles, which ensure a consistent and effective approach to measurement and improvement. These principles focus on data-driven insights, continuous feedback, and a culture of learning.

- Focus on Outcomes: DevOps performance evaluation should focus on the outcomes that matter most to the business. This includes metrics related to software delivery speed, stability, and customer satisfaction. The ultimate goal is to improve the business value delivered by the software.

- Use Data-Driven Insights: Rely on data to inform decisions. Collect and analyze metrics to understand current performance, identify areas for improvement, and track the impact of changes. This data-driven approach reduces reliance on guesswork and subjective opinions.

- Establish Baselines and Set Targets: Before implementing changes, establish a baseline performance. This provides a point of reference for measuring improvement. Set realistic and measurable targets to drive progress and track success.

- Promote Continuous Feedback: Implement a feedback loop that allows teams to regularly review performance data and make adjustments. This iterative approach enables teams to quickly adapt to changing requirements and optimize their processes.

- Foster a Culture of Learning: Encourage a culture of experimentation and learning. View failures as opportunities to learn and improve. Promote collaboration and knowledge sharing across teams.

- Automate Measurement: Automate the collection and analysis of metrics to reduce manual effort and ensure data accuracy. Automating the process ensures metrics are readily available for decision-making.

Understanding DORA Metrics

Deployment Frequency is a critical DORA metric that reflects how often an organization successfully releases new code to production. It provides a direct measure of the speed at which a team can deliver value to its users. Improving Deployment Frequency is a key goal for teams aiming to achieve faster software delivery cycles and a more agile development process.

Deployment Frequency: Definition and Significance

Deployment Frequency measures the number of code deployments to production per unit of time. This metric is a direct indicator of a team’s ability to release changes quickly and reliably. High Deployment Frequency, when coupled with other DORA metrics like Lead Time for Changes, often signifies a high-performing DevOps team. It allows for faster feedback loops, quicker bug fixes, and the ability to respond rapidly to market changes.

The significance of this metric lies in its ability to accelerate the delivery of value to end-users and improve the overall responsiveness of the organization.

Tracking and Calculating Deployment Frequency

To effectively track Deployment Frequency, a robust system for capturing deployment events is essential. This involves integrating tools and processes that automatically log each deployment. To calculate Deployment Frequency, use the following formula:

Deployment Frequency = (Number of Deployments) / (Time Period)

For example, if a team deploys 10 times in a week, the Deployment Frequency is 10 deployments/week.

Here’s a method for tracking and calculating Deployment Frequency:

- Implement Automated Deployment Pipelines: Utilize CI/CD pipelines that automatically deploy code to production upon successful completion of tests and code reviews. These pipelines should log each deployment.

- Integrate with Version Control Systems: Connect the deployment pipeline to your version control system (e.g., Git) to track which code changes are included in each deployment.

- Use Deployment Tracking Tools: Employ tools specifically designed for tracking deployments, such as monitoring tools, CI/CD platforms (e.g., Jenkins, GitLab CI, CircleCI), or custom scripts that log deployment events.

- Define a Time Period: Choose a consistent time period for measurement (e.g., weekly, bi-weekly, or monthly).

- Collect Deployment Data: Gather data from the deployment tracking tools for the chosen time period.

- Calculate the Metric: Use the formula above to calculate the Deployment Frequency.

- Visualize and Report: Use dashboards to visualize Deployment Frequency trends over time, allowing for easy monitoring and identification of areas for improvement.
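As a minimal sketch of the calculation step, the Python below assumes you can export the timestamps of successful production deployments from your CI/CD tool as `datetime` objects; the function names are illustrative, not part of any tool’s API. It computes the average rate over a window and a per-ISO-week breakdown suitable for a trend chart.

```python
from collections import Counter
from datetime import datetime, timedelta


def deployment_frequency(deploy_times, start, end, period=timedelta(weeks=1)):
    """Average number of deployments per `period` in the window [start, end)."""
    in_window = [t for t in deploy_times if start <= t < end]
    # timedelta / timedelta yields a float, e.g. a 14-day window is 2.0 weeks.
    return len(in_window) / ((end - start) / period)


def deployments_per_iso_week(deploy_times):
    """Deployment counts keyed by (ISO year, ISO week), sorted chronologically."""
    counts = Counter(tuple(t.isocalendar())[:2] for t in deploy_times)
    return dict(sorted(counts.items()))


# Usage: ten deployments spread over the week starting Monday 4 March 2024.
start = datetime(2024, 3, 4)
times = [start + timedelta(hours=9 + 12 * i) for i in range(10)]
print(deployment_frequency(times, start, start + timedelta(weeks=1)))  # 10.0
print(deployments_per_iso_week(times))  # {(2024, 10): 10}
```

Weeks with no deployments do not appear in the per-week breakdown, so a dashboard built on it should fill those weeks with zero rather than skip them.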

Improving Deployment Frequency: An Example

Improving Deployment Frequency requires a focus on streamlining the software delivery pipeline. This includes automating processes, reducing bottlenecks, and improving team collaboration. Consider a scenario where a team deploys once a month. To improve this, the team can implement the following steps:

- Automate Testing: Implement automated unit, integration, and end-to-end tests to ensure code quality and reduce manual testing time. This helps to ensure that more code changes are ready for deployment.

- Implement Continuous Integration (CI): Set up a CI system that automatically builds, tests, and integrates code changes frequently. This helps to catch integration issues early.

- Implement Continuous Delivery (CD): Automate the deployment process, so code can be deployed to production with a single click or automatically after successful testing.

- Optimize the Deployment Process: Review and optimize the deployment pipeline for efficiency. This includes reducing the time it takes to build, test, and deploy code. Identify and address bottlenecks in the pipeline.

- Use Feature Flags: Implement feature flags to decouple code releases from feature releases. This allows the team to deploy code frequently without necessarily making new features immediately available to users.

- Improve Team Collaboration: Foster a culture of collaboration between development, operations, and QA teams. This includes using communication tools, shared documentation, and regular meetings.

- Implement Infrastructure as Code (IaC): Automate the provisioning and management of infrastructure using IaC tools. This enables faster and more consistent infrastructure deployments, which supports quicker application deployments.
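To illustrate the feature-flag step, here is a deliberately minimal Python sketch. The `FeatureFlags` class and the `new_checkout` flag are hypothetical; in practice flags usually live in configuration or a dedicated flag service. The point is that the new code path is deployed to production but stays dark until the flag is switched on.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureFlags:
    """Hypothetical in-memory flag store, used only for illustration."""
    enabled: set = field(default_factory=set)

    def is_enabled(self, name: str) -> bool:
        return name in self.enabled


def render_checkout(flags: FeatureFlags) -> str:
    # Both code paths are deployed; the flag decides which one users see.
    if flags.is_enabled("new_checkout"):
        return "new checkout flow"
    return "existing checkout flow"


flags = FeatureFlags()
print(render_checkout(flags))      # existing checkout flow
flags.enabled.add("new_checkout")  # release the feature without a new deployment
print(render_checkout(flags))      # new checkout flow
```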


In the realm of DevOps, measuring performance is crucial for continuous improvement. The DORA (DevOps Research and Assessment) metrics provide a standardized framework for evaluating the effectiveness of DevOps practices. This section delves into one of the core DORA metrics: Lead Time for Changes, outlining its definition, measurement, and impact on software delivery performance.

Lead Time for Changes

Lead Time for Changes is a pivotal metric in DevOps, representing the time elapsed from when a code change is committed to the code repository to the moment it is successfully running in production. It provides a direct measure of the speed at which an organization can deliver value to its users. Lead Time for Changes offers insights into the efficiency of the entire software delivery pipeline.

The formula is: Lead Time for Changes = Time of Deployment – Time of Code Commit.

This metric is a key indicator of a team’s ability to rapidly respond to market demands, fix bugs, and deliver new features. Shorter lead times are generally associated with more efficient processes and a higher performing DevOps team. Long lead times, conversely, can indicate bottlenecks, inefficient processes, and a slower pace of innovation. To measure Lead Time for Changes effectively, several steps must be followed.

These steps involve tracking the lifecycle of a code change from its inception to its deployment in production.

- Establish Clear Version Control: Implement a robust version control system (e.g., Git) to track all code changes. This is the starting point for measuring Lead Time.

- Define a Code Commit: Decide precisely which event marks the start of the Lead Time measurement (for example, the first commit of a change or its merge into the main branch), and apply that definition consistently across all teams.

- Monitor Deployment: Implement automated deployment processes and logging to track the exact time when a code change is deployed to production. This marks the end of the Lead Time.

- Use Automation: Automate as much of the software delivery pipeline as possible, including build, test, and deployment processes, to minimize manual intervention and streamline the process.

- Utilize Monitoring Tools: Employ tools that can automatically collect and analyze data related to code commits and deployments. Many CI/CD (Continuous Integration/Continuous Delivery) platforms provide built-in analytics for this purpose.

- Calculate and Analyze: Calculate the Lead Time for each change using the timestamps of the commit and deployment. Analyze the data to identify trends, bottlenecks, and areas for improvement.
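The calculation step can be sketched in Python as follows, assuming you have already joined each change’s commit timestamp (from version control) with the timestamp at which it started running in production (from deployment logs). The function names are illustrative. The sketch reports the median rather than the mean, which is our own choice, because a few long-running changes can skew an average considerably.

```python
from datetime import datetime, timedelta
from statistics import median


def lead_times(changes):
    """Lead time per change: deployment time minus commit time."""
    result = []
    for commit_time, deploy_time in changes:
        if deploy_time < commit_time:
            raise ValueError("deployment recorded before commit; check the data")
        result.append(deploy_time - commit_time)
    return result


def median_lead_time_hours(changes):
    """Median lead time across all changes, in hours."""
    return median(lt / timedelta(hours=1) for lt in lead_times(changes))


# Usage: three changes with lead times of 2, 5 and 30 hours.
c = datetime(2024, 3, 4, 9, 0)
changes = [(c, c + timedelta(hours=h)) for h in (2, 5, 30)]
print(median_lead_time_hours(changes))  # 5.0 (the mean would be about 12.3)
```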

Analyzing the Lead Time for Changes across different teams within an organization can reveal valuable insights into their respective performance levels. The following table compares hypothetical Lead Time data for four teams:

| Team | Average Lead Time (Hours) | Deployment Frequency | Key Contributing Factors | Improvement Areas |
|---|---|---|---|---|
| Team Alpha | 2 | Multiple times per day | Automated CI/CD pipeline, strong testing, effective code reviews | N/A – already high-performing |
| Team Beta | 8 | Once per day | Automated CI/CD, manual testing, some code review delays | Optimize testing, streamline code review process |
| Team Gamma | 24 | Once per week | Partially automated pipeline, manual testing, complex deployments | Automate deployments, improve testing, address deployment complexities |
| Team Delta | 72 | Once per month | Manual processes, infrequent testing, significant deployment challenges | Automate processes, improve testing, address deployment challenges, increase collaboration |

This table provides a snapshot of the Lead Time for Changes for each team. Team Alpha, with the shortest lead time, likely has the most mature DevOps practices. Team Delta, with the longest lead time, faces significant challenges in their software delivery process. By comparing these metrics, organizations can identify best practices, areas for improvement, and opportunities to foster a culture of continuous learning and improvement.


Change Failure Rate is a crucial DORA metric that helps organizations understand the reliability of their software releases. It provides insights into how frequently changes introduced into production lead to incidents. By monitoring this metric, teams can identify areas for improvement in their development and deployment processes.

Change Failure Rate

Change Failure Rate (CFR) measures the percentage of deployments that result in a service outage or require remediation (e.g., a hotfix, rollback, or a fix-forward). A high CFR indicates that deployments are frequently causing problems, impacting service availability and potentially affecting user experience. A low CFR suggests a more stable and reliable deployment pipeline. To calculate Change Failure Rate, follow this procedure:

1. Define a Failure

Establish a clear definition of what constitutes a failed change. This could include incidents like:

- Service outages

- Significant performance degradation

- Rollbacks to a previous version

- Hotfixes required within a specific timeframe (e.g., within 24 hours)

2. Track Deployments

Maintain a record of all deployments to production. This should include the date, time, and a description of the change.

3. Monitor Incidents

Implement a system to track incidents that are directly related to deployments. This might involve using an incident management system, monitoring tools, or a post-incident review process.

4. Calculate the Rate

Divide the number of failed changes by the total number of deployments during a specific period (e.g., weekly, monthly, or quarterly). Multiply the result by 100 to express it as a percentage.

Change Failure Rate = (Number of Failed Changes / Total Number of Deployments) × 100
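A minimal Python sketch of steps 1 to 4 follows. The `Deployment` record and its fields are hypothetical stand-ins for data you would join from your deployment log and incident tracker, and the 24-hour hotfix window is the example value from the failure definition in step 1, not a standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

HOTFIX_WINDOW = timedelta(hours=24)  # example window; adjust to your own definition


@dataclass
class Deployment:
    deployed_at: datetime
    caused_outage: bool = False
    degraded_performance: bool = False
    rolled_back: bool = False
    hotfix_at: Optional[datetime] = None  # time of a follow-up hotfix, if any


def is_failed(d: Deployment) -> bool:
    """Apply the failure definition from step 1."""
    needed_hotfix = d.hotfix_at is not None and d.hotfix_at - d.deployed_at <= HOTFIX_WINDOW
    return d.caused_outage or d.degraded_performance or d.rolled_back or needed_hotfix


def change_failure_rate(deployments) -> float:
    """Step 4: failed changes divided by total deployments, as a percentage."""
    if not deployments:
        raise ValueError("no deployments in the period")
    failed = sum(1 for d in deployments if is_failed(d))
    return 100 * failed / len(deployments)


t = datetime(2024, 3, 1)
batch = ([Deployment(t) for _ in range(95)]
         + [Deployment(t, rolled_back=True) for _ in range(3)]
         + [Deployment(t, hotfix_at=t + timedelta(hours=3)) for _ in range(2)])
print(change_failure_rate(batch))  # 5.0
```

A hotfix that lands outside the window (say, 48 hours later) is not counted as a failure under this definition, which is exactly the kind of rule that should be written down and shared.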

For example, if a team had 100 deployments in a month and 5 of them resulted in failures, the CFR would be 5%.

A high Change Failure Rate can be caused by several factors. Here are some common scenarios and possible solutions:

- Poor Testing Practices: Inadequate testing, including insufficient unit tests, integration tests, or end-to-end tests, can lead to bugs and issues that are only discovered in production.

- Solution: Implement comprehensive automated testing at all levels, including unit, integration, and end-to-end tests. Increase test coverage to ensure a higher percentage of code is tested. Introduce test environments that closely resemble production to catch environment-specific issues.

- Insufficient Code Reviews: Code reviews help identify potential problems, security vulnerabilities, and areas for improvement before code is merged and deployed.

- Solution: Enforce mandatory code reviews for all changes. Establish clear code review guidelines and checklists. Train developers on effective code review techniques. Implement tools to automate code review processes.

- Complex or Risky Changes: Large, complex changes or changes involving risky technologies are more likely to introduce errors.

- Solution: Break down large changes into smaller, more manageable chunks. Implement feature flags to enable and disable features without deploying new code. Prioritize changes based on their potential impact and risk. Consider using techniques like canary deployments to test changes on a small subset of users before a full rollout.

- Environment Differences: Differences between development, testing, and production environments can lead to unexpected issues.

- Solution: Adopt infrastructure as code (IaC) to ensure consistency across environments. Use containerization technologies like Docker to package applications and their dependencies. Implement environment parity, making environments as similar as possible.

- Lack of Monitoring and Observability: Without adequate monitoring and observability, teams may not detect issues quickly or have the data needed to diagnose and resolve them.

- Solution: Implement robust monitoring and alerting systems to detect anomalies and performance degradation. Utilize logging, tracing, and metrics to gain insights into application behavior. Establish a centralized logging and monitoring platform to correlate events across different services.


In the realm of DevOps, measuring performance is crucial for continuous improvement. The DORA (DevOps Research and Assessment) metrics provide a standardized framework for evaluating a team’s DevOps capabilities. This section delves into the specifics of one of these vital metrics: Time to Restore Service.

Time to Restore Service

Time to Restore Service (Mean Time to Recovery or MTTR) is a critical DORA metric that measures the average time it takes for an organization to recover from an incident or service disruption. It is a direct indicator of the effectiveness of incident management processes and the overall resilience of the system. A shorter MTTR signifies a more robust and reliable system, minimizing the impact of outages on users and the business.

This metric is often expressed in minutes or hours. Measuring Time to Restore Service involves several key processes. Accurately tracking MTTR requires a structured approach to incident management and robust monitoring tools.

- Incident Detection and Reporting: The process begins with detecting an incident, which can be triggered by automated monitoring systems, user reports, or other alerts. Accurate and timely reporting is crucial.

- Incident Classification and Prioritization: Once an incident is reported, it must be classified based on its severity and impact. Prioritization ensures that critical incidents are addressed first.

- Incident Investigation and Diagnosis: This involves identifying the root cause of the incident. Tools such as log analysis, monitoring dashboards, and debugging tools are essential.

- Remediation and Resolution: This stage involves implementing the fix to restore the service. This could involve rolling back a deployment, applying a hotfix, or restarting a service.

- Verification and Validation: After the fix is implemented, the service must be verified to ensure that it is fully restored and functioning correctly.

- Post-Incident Review: A post-incident review is conducted to analyze the incident, identify areas for improvement, and prevent similar incidents from occurring in the future. This includes analyzing the time spent in each stage.
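For the post-incident review, the time spent in each stage can be derived from the timestamps at which each stage ended. The sketch below assumes those milestones are recorded in order in your incident tracker; the stage names are hypothetical labels for the stages listed above.

```python
from datetime import datetime


def stage_durations(milestones):
    """Minutes spent in each stage, given ordered (stage_name, completed_at) pairs.

    The first pair marks when the disruption began; each later pair marks
    when the named stage was completed.
    """
    durations = {}
    for (_, previous), (stage, completed) in zip(milestones, milestones[1:]):
        if completed < previous:
            raise ValueError(f"milestones out of order at {stage!r}")
        durations[stage] = (completed - previous).total_seconds() / 60
    return durations


incident = [
    ("disruption began", datetime(2024, 3, 4, 10, 0)),
    ("detection", datetime(2024, 3, 4, 10, 5)),
    ("diagnosis", datetime(2024, 3, 4, 10, 25)),
    ("remediation", datetime(2024, 3, 4, 10, 40)),
    ("verification", datetime(2024, 3, 4, 10, 45)),
]
print(stage_durations(incident))
# {'detection': 5.0, 'diagnosis': 20.0, 'remediation': 15.0, 'verification': 5.0}
```

In this hypothetical incident, diagnosis takes the longest, which would point the review toward better runbooks or observability rather than faster rollbacks.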

To minimize Time to Restore Service, a well-defined incident response workflow is essential. The workflow should be designed to streamline the process and reduce the time spent on each stage.

- Automated Monitoring and Alerting: Implement robust monitoring tools that automatically detect and alert the team to potential incidents. The system should generate clear and concise alerts.

- Clear Incident Escalation Procedures: Define clear escalation paths to ensure that incidents are routed to the appropriate teams or individuals promptly. Include contact information and escalation timelines.

- Pre-Defined Runbooks and Playbooks: Create pre-defined runbooks and playbooks for common incidents. These provide step-by-step instructions for resolving known issues, reducing the time spent on diagnosis and remediation. These runbooks should be readily accessible.

- Automated Rollback Capabilities: Implement automated rollback capabilities to quickly revert to a previous stable state if a deployment causes an outage. This can significantly reduce downtime.

- Effective Communication Channels: Establish clear communication channels to keep stakeholders informed about the incident’s status and progress. Use a centralized communication platform.

- Blameless Post-Mortems: Conduct blameless post-mortems to analyze incidents and identify areas for improvement. The focus should be on learning from the incident rather than assigning blame.

- Continuous Improvement: Regularly review and refine the incident response workflow based on the insights gained from post-incident reviews. Continuously improve the monitoring, alerting, and automation processes.

The formula for calculating MTTR is:

MTTR = (Total Downtime) / (Number of Incidents)

For example, consider a service that experienced three incidents in a month. The first incident caused a downtime of 30 minutes, the second 15 minutes, and the third 45 minutes.

MTTR = (30 + 15 + 45) / 3 = 30 minutes

In this case, the Time to Restore Service is 30 minutes. A team with a low MTTR demonstrates a high degree of operational efficiency and system resilience.
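The same calculation in Python, as a sketch that assumes each incident is recorded as a pair of timestamps (when service was disrupted and when it was restored), for example exported from your incident management system:

```python
from datetime import datetime, timedelta


def mttr_minutes(incidents):
    """Mean time to restore, in minutes, from (disrupted_at, restored_at) pairs."""
    if not incidents:
        raise ValueError("no incidents in the period")
    total_downtime = sum((restored - disrupted for disrupted, restored in incidents), timedelta())
    return total_downtime / timedelta(minutes=1) / len(incidents)


# The worked example: outages of 30, 15 and 45 minutes.
start = datetime(2024, 3, 1, 12, 0)
incidents = [(start, start + timedelta(minutes=m)) for m in (30, 15, 45)]
print(mttr_minutes(incidents))  # 30.0
```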

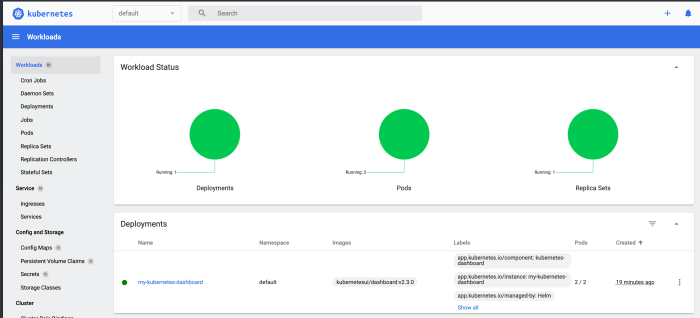

Setting Up a Measurement System

Setting up a robust measurement system is crucial for effectively tracking and improving DevOps performance. This involves choosing the right tools, establishing a data collection pipeline, and visualizing the data in a clear and actionable manner. The goal is to create a system that provides insights into your team’s performance and helps you identify areas for improvement.

Tools and Technologies for Collecting DevOps Metrics

The selection of tools for collecting DevOps metrics depends on your existing infrastructure and the specific needs of your team. A well-chosen toolset will automate data collection and provide a centralized location for your DevOps performance data.

- Version Control Systems: Systems like Git are fundamental. They provide the basis for measuring Lead Time for Changes and Deployment Frequency. These systems track every code change, allowing for precise calculation of the time it takes for a change to be merged and deployed.

- Continuous Integration/Continuous Delivery (CI/CD) Pipelines: Tools such as Jenkins, GitLab CI, CircleCI, and Azure DevOps are essential. They record every deployment, which supplies the data for Deployment Frequency and the end point for Lead Time for Changes, and their records of failed deployments and rollbacks feed into Change Failure Rate and Time to Restore Service. They also provide insights into build times, test results, and deployment success/failure rates.

- Monitoring and Alerting Systems: Tools like Prometheus, Grafana, Datadog, and New Relic are used to monitor application performance and infrastructure health. These systems are critical for tracking Time to Restore Service, as they can detect incidents and provide data on how quickly the system recovers. They also provide insight into Change Failure Rate by alerting on failed deployments.

- Issue Tracking Systems: Jira, Azure DevOps, and similar tools provide data related to the Change Failure Rate and Time to Restore Service. They capture information about incidents, bugs, and the time taken to resolve them.

- Log Aggregation and Analysis Tools: Solutions like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk are invaluable for analyzing logs and identifying patterns related to deployment failures, application errors, and other performance issues. They support tracking Change Failure Rate and Time to Restore Service.

- Cloud Provider Services: Cloud platforms like AWS, Azure, and Google Cloud offer their own monitoring and logging services (e.g., CloudWatch, Azure Monitor, Google Cloud Monitoring). These services often integrate seamlessly with other DevOps tools and provide valuable data for DORA metrics.

- Data Warehouses: For long-term trend analysis and advanced reporting, a data warehouse (e.g., Snowflake, BigQuery, Amazon Redshift) can be used to store and process large volumes of data from various sources. This allows for comprehensive analysis of all DORA metrics over time.

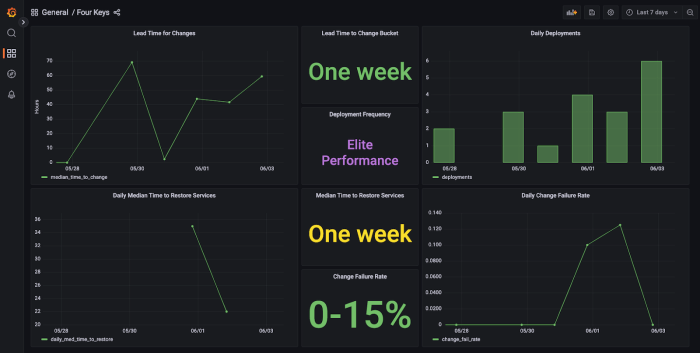

Setting Up a Basic Dashboard to Visualize DORA Metrics

Creating a dashboard to visualize DORA metrics provides a centralized view of your team’s performance. This helps to identify trends, pinpoint areas for improvement, and track progress over time. A well-designed dashboard should be easy to understand and provide actionable insights.

Here’s a step-by-step guide to setting up a basic dashboard:

- Choose a Dashboarding Tool: Select a tool that can connect to your data sources and visualize the metrics. Popular options include Grafana, Kibana, and Datadog. Grafana is often favored for its flexibility and support for a wide range of data sources.

- Connect to Data Sources: Configure the dashboarding tool to connect to the data sources where your metrics are stored. This might involve setting up connections to your CI/CD pipelines, monitoring systems, and issue tracking systems.

- Define Metrics and Calculations: Determine how each DORA metric will be calculated based on the data available in your data sources. For example, to calculate Deployment Frequency, you’ll need to count the number of deployments within a specific time period.

- Create Visualizations: Design visualizations for each metric. This could include line graphs for tracking trends over time, bar charts for comparing different deployments, or single-value displays for showing the current values of key metrics.

- Organize the Dashboard: Arrange the visualizations in a logical layout. Group related metrics together and provide clear labels and descriptions for each metric.

- Set Up Alerts: Configure alerts to notify you when metrics fall outside of acceptable ranges. For example, you might set an alert if the Change Failure Rate increases above a certain threshold.

- Regularly Review and Refine: Continuously review the dashboard and refine it based on your team’s needs. Add new metrics, adjust visualizations, and update alerts as necessary.

Sample Dashboard Layout

A sample dashboard layout can serve as a starting point for visualizing DORA metrics. The specific layout and visualizations will vary depending on your team’s needs and the tools you are using. However, the following is a general framework for a basic dashboard:

| Metric | Visualization | Description | Data Source | Target/Threshold |
|---|---|---|---|---|
| Deployment Frequency | Line Graph | The frequency at which code is successfully released to production. Shows the number of deployments per day/week/month. | CI/CD Pipeline (e.g., Jenkins, GitLab CI) | At least once per day (for high-performing teams) |
| Lead Time for Changes | Line Graph | The time it takes from code commit to code successfully running in production. | Version Control System (e.g., Git), CI/CD Pipeline | Less than one day (for high-performing teams) |
| Change Failure Rate | Bar Chart | The percentage of deployments that result in a failure in production. | CI/CD Pipeline, Monitoring System, Issue Tracking System | Less than 15% (for high-performing teams) |
| Time to Restore Service | Line Graph | The time it takes to restore service after an incident. | Monitoring System, Issue Tracking System | Less than one hour (for high-performing teams) |
| Mean Time to Recovery (MTTR) | Single Value | Average time to recover from an incident; the same measure as Time to Restore Service, shown as a single current value. | Issue Tracking System, Monitoring System | Less than one hour (for high-performing teams) |
| Number of Production Incidents | Bar Chart | The total count of incidents affecting production. | Issue Tracking System | Keep this as low as possible |
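The Target/Threshold column can be expressed as simple checks, which is essentially the logic an alert rule would apply. The Python below is a sketch: the thresholds are copied from the sample table, and the input values are assumed to come from calculations like the ones described earlier (deployments per day, hours, percent).

```python
def check_targets(deploys_per_day, lead_time_hours, change_failure_pct, restore_hours):
    """Return {metric: True if the sample target is met}."""
    return {
        "Deployment Frequency": deploys_per_day >= 1,    # at least once per day
        "Lead Time for Changes": lead_time_hours < 24,   # less than one day
        "Change Failure Rate": change_failure_pct < 15,  # less than 15%
        "Time to Restore Service": restore_hours < 1,    # less than one hour
    }


def metrics_to_alert_on(results):
    """Names of the metrics that currently miss their target."""
    return [metric for metric, met in results.items() if not met]


snapshot = check_targets(deploys_per_day=2.0, lead_time_hours=6.0,
                         change_failure_pct=20.0, restore_hours=0.5)
print(metrics_to_alert_on(snapshot))  # ['Change Failure Rate']
```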

Example of Dashboard Implementation with Grafana:

Imagine using Grafana as your dashboarding tool. You’d connect Grafana to your CI/CD pipeline (e.g., Jenkins), monitoring system (e.g., Prometheus), and issue tracking system (e.g., Jira). You would then create panels within Grafana, each displaying a specific DORA metric. For instance, for Deployment Frequency, you might create a line graph that shows the number of deployments over time. You’d use the query language of each data source (e.g., PromQL for Prometheus) to extract the necessary data and calculate the metrics.

You’d set up alerts in Grafana to notify you when metrics exceed certain thresholds, such as a sudden increase in Change Failure Rate. The Grafana dashboard provides a centralized, real-time view of your team’s performance, enabling quick identification of issues and opportunities for improvement.

Best Practices for Data Collection and Analysis

Effective data collection and analysis are crucial for accurately measuring and improving DevOps performance. This section outlines the best practices for ensuring the integrity of your data and deriving meaningful insights from DORA metrics. Implementing these practices will allow you to track progress, identify bottlenecks, and ultimately, accelerate your software delivery lifecycle.

Ensuring Data Accuracy and Reliability

Data accuracy and reliability are paramount to the validity of any performance analysis. Inaccurate or incomplete data can lead to flawed conclusions and ineffective improvement strategies. Several measures can be taken to enhance the trustworthiness of your DevOps metrics.

- Automate Data Collection: Automate the collection of metrics whenever possible. Manual data entry is prone to human error and can be time-consuming. Utilize tools that integrate with your CI/CD pipelines, version control systems, and monitoring platforms to automatically gather data related to deployment frequency, lead time for changes, change failure rate, and time to restore service.

- Implement Data Validation: Implement validation checks to ensure the accuracy of the collected data. This includes verifying data types, ranges, and formats. For instance, validate that deployment timestamps are within a reasonable timeframe and that the change failure rate percentages do not exceed 100%.

- Establish Clear Definitions: Define clear and consistent definitions for each metric. Ambiguity in definitions can lead to misinterpretations and inconsistencies in data collection. Document these definitions and make them accessible to all team members involved in the process.

- Regular Audits and Reviews: Regularly audit your data collection processes and review the collected data for anomalies or inconsistencies. This can involve spot checks, comparing data from different sources, and reviewing the data against known patterns or trends. Schedule regular reviews to identify and address data quality issues promptly.

- Use Version Control: Use version control for all data collection scripts, configurations, and dashboards. This allows you to track changes, revert to previous versions if necessary, and collaborate effectively on improvements.

- Consider Data Sources: Understand the origin and limitations of your data sources. Data from different tools and systems may have varying levels of accuracy and granularity. Be aware of these differences and adjust your analysis accordingly. For example, a monitoring tool may provide more granular data on deployment failures than a CI/CD pipeline.
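The validation checks described above might look like the following Python sketch. The record layout (`commit_time`, `deploy_time`) and the one-year window used as the "reasonable timeframe" are assumptions for illustration; substitute your own schema and limits.

```python
from datetime import datetime, timedelta

MAX_AGE = timedelta(days=365)  # assumed "reasonable timeframe"; tune to your needs


def validate_deployment(record, now):
    """Return a list of problems with one deployment record (empty means it passed)."""
    commit, deploy = record.get("commit_time"), record.get("deploy_time")
    if not isinstance(commit, datetime) or not isinstance(deploy, datetime):
        return ["commit_time and deploy_time must both be datetimes"]
    problems = []
    if deploy > now:
        problems.append("deploy_time is in the future")
    if deploy < now - MAX_AGE:
        problems.append("deploy_time is older than the accepted window")
    if deploy < commit:
        problems.append("deploy_time precedes commit_time")
    return problems


def is_valid_percentage(value):
    """Rates such as Change Failure Rate must lie between 0 and 100."""
    return 0 <= value <= 100


now = datetime(2024, 3, 10, 12, 0)
ok = {"commit_time": datetime(2024, 3, 9, 9, 0), "deploy_time": datetime(2024, 3, 9, 12, 0)}
swapped = {"commit_time": datetime(2024, 3, 9, 12, 0), "deploy_time": datetime(2024, 3, 9, 9, 0)}
print(validate_deployment(ok, now))       # []
print(validate_deployment(swapped, now))  # ['deploy_time precedes commit_time']
print(is_valid_percentage(105))           # False
```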

Analyzing DORA Metrics for Improvement

Analyzing DORA metrics goes beyond simply tracking numbers; it involves identifying patterns, understanding root causes, and driving actionable improvements. The following techniques can help you extract meaningful insights from your data.

- Trend Analysis: Track the trends of your DORA metrics over time. Is your lead time for changes decreasing? Is your deployment frequency increasing? Identify areas where metrics are improving, stagnating, or deteriorating.

- Correlation Analysis: Analyze the relationships between different DORA metrics. For example, does a higher deployment frequency correlate with a lower lead time for changes? Understanding these correlations can help you identify the levers that have the greatest impact on your performance.

- Root Cause Analysis: When a metric deviates from its baseline or target, perform a root cause analysis to understand why. Use techniques like the “Five Whys” or fishbone diagrams to drill down to the underlying causes of issues such as high change failure rates or long lead times.

- Segmentation and Grouping: Segment your data by different factors, such as application, team, or environment. This can reveal variations in performance and help you identify specific areas that need attention. Grouping deployments by size can highlight whether larger deployments contribute disproportionately to lead time.

- Benchmarking: Compare your performance against industry benchmarks or your own past performance. This can provide context for your metrics and help you assess your progress.

- Visualization: Use data visualization tools to present your DORA metrics in a clear and understandable format. Dashboards, charts, and graphs can make it easier to identify trends, patterns, and outliers. Consider creating a dashboard that tracks all four DORA metrics in one place.

- Prioritize Based on Impact: Focus on improving the metrics that have the greatest impact on your overall goals. For example, if your goal is to increase the speed of software delivery, prioritize improvements that will reduce lead time for changes.
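Trend analysis and segmentation need little more than medians. This is a minimal sketch in plain Python; the three-point window, the 5% threshold for calling a trend, and the record fields are illustrative assumptions, not standard values. Medians are used rather than means because a single unusually slow change can distort an average.

```python
from statistics import median


def rolling_median(values, window):
    """Median of each trailing window of `window` points; smooths week-to-week noise."""
    if window > len(values):
        raise ValueError("window is longer than the series")
    return [median(values[i - window + 1 : i + 1]) for i in range(window - 1, len(values))]


def trend_direction(values, window=3, threshold=0.05):
    """Classify a positive-valued series as "decreasing", "increasing", or "flat".

    Compares the first and last smoothed values; a relative change within
    `threshold` (as a fraction of the first value) counts as flat.
    """
    smoothed = rolling_median(values, window)
    first, last = smoothed[0], smoothed[-1]
    change = (last - first) / first
    if change < -threshold:
        return "decreasing"
    if change > threshold:
        return "increasing"
    return "flat"


def median_by_group(records, key, value):
    """Segment records by `key` (e.g. team or deployment size); return the median `value` per group."""
    groups = {}
    for record in records:
        groups.setdefault(record[key], []).append(record[value])
    return {group: median(vals) for group, vals in groups.items()}


# Hypothetical data: weekly median lead time in hours, and per-deployment records.
weekly_lead_time_h = [50, 46, 48, 40, 38, 35]
deployments = [
    {"size": "small", "lead_time_h": 4},
    {"size": "small", "lead_time_h": 6},
    {"size": "large", "lead_time_h": 30},
    {"size": "large", "lead_time_h": 50},
]
print(trend_direction(weekly_lead_time_h))
print(median_by_group(deployments, "size", "lead_time_h"))
```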

Establishing Baselines and Setting Targets for DORA Metrics

Establishing baselines and setting targets are essential steps in measuring and improving DevOps performance. Baselines provide a starting point for measuring progress, while targets provide a goal to strive for. The process should be iterative, involving ongoing monitoring and adjustments.

- Establish a Baseline: Gather historical data to establish a baseline for each DORA metric. The baseline should reflect your performance before you implement any changes, giving you a starting point for measuring progress. As a rule of thumb, use at least three months of historical data so that the baseline is not skewed by short-term fluctuations.

- Set SMART Targets: Set SMART (Specific, Measurable, Achievable, Relevant, Time-bound) targets for each metric. Targets should be ambitious but realistic. For example, instead of saying “Improve deployment frequency,” set a target like “Increase deployment frequency to twice per day within the next quarter.”

- Consider Industry Benchmarks: Research industry benchmarks for your type of organization and technology stack. These benchmarks can provide a reference point for setting your targets. For instance, if the industry average lead time for changes is one day, you might aim to reduce your lead time to less than one day.

- Prioritize Metrics: Not all metrics are equally important. Prioritize the metrics that are most closely aligned with your business goals. For example, if your goal is to reduce time to market, prioritize lead time for changes and deployment frequency.

- Regularly Review and Adjust Targets: Review your targets regularly (e.g., quarterly) and adjust them based on your progress and changing business needs. If you consistently exceed your targets, consider raising the bar. If you are consistently missing your targets, analyze the reasons why and adjust your strategy or targets accordingly.

- Implement Continuous Monitoring: Continuously monitor your DORA metrics to track progress towards your targets. Use dashboards and alerts to proactively identify deviations from your baselines and targets.

Example: Setting Targets and Tracking Progress

Suppose a company has a current lead time for changes of 5 days and wants to reduce it to 2 days within six months. The target is “Reduce lead time for changes to 2 days within six months.” The company tracks progress weekly, identifies bottlenecks in the change process, and implements improvements to reduce lead time. After three months, lead time has fallen to 3 days; to close the remaining gap, the company adjusts its strategy and focuses on automation and streamlining. This iterative approach ensures continuous improvement.
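Assuming lead times are recorded in days, the arithmetic behind this example can be sketched as follows. Using the median for the baseline and checking progress against a linear schedule are illustrative choices, not part of the DORA definitions; the function names are hypothetical.

```python
from statistics import median


def baseline(lead_times_days):
    """Baseline as the median of historical lead times (robust to a few outliers)."""
    return median(lead_times_days)


def progress_toward_target(baseline_value, target, current):
    """Fraction of the baseline-to-target gap closed so far (1.0 means the target is met)."""
    gap = baseline_value - target
    if gap == 0:
        return 1.0
    return (baseline_value - current) / gap


def on_schedule(progress, months_elapsed, months_total):
    """True if progress is at least proportional to elapsed time (assumes linear pacing)."""
    return progress >= months_elapsed / months_total


# Figures from the example: baseline 5 days, target 2 days, 3 days after 3 of 6 months.
p = progress_toward_target(5, 2, 3)
print(f"{p:.0%} of the gap closed; on schedule: {on_schedule(p, 3, 6)}")
```

With these figures, two thirds of the gap is closed at the halfway point, so the company is ahead of a linear schedule.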

Using DORA Metrics for Continuous Improvement

DORA metrics provide a powerful framework for not only measuring DevOps performance but also for driving continuous improvement. By regularly analyzing these metrics, teams can pinpoint areas of weakness in their software delivery pipeline and implement targeted improvements to enhance efficiency, stability, and speed. This iterative process, driven by data and focused on continuous feedback, is at the heart of successful DevOps transformations.

Identifying Bottlenecks with DORA Metrics

DORA metrics serve as diagnostic tools, revealing specific points in the software delivery pipeline that are slowing down the process. Understanding how to interpret the metrics allows teams to focus their efforts effectively. Analyzing the four key DORA metrics can uncover bottlenecks:

- Deployment Frequency: Low deployment frequency can indicate issues with build processes, testing, or release management. Frequent deployments, on the other hand, often signal a more streamlined pipeline.

- Lead Time for Changes: Long lead times suggest inefficiencies in the development, testing, or deployment stages. Short lead times are a hallmark of efficient and agile teams.

- Change Failure Rate: A high change failure rate points to problems with code quality, testing, or deployment procedures. Lower failure rates demonstrate a more robust and reliable pipeline.

- Time to Restore Service (often reported as Mean Time to Recover, MTTR): A high MTTR indicates difficulty resolving incidents quickly. Rapid recovery is crucial for minimizing downtime and maintaining user satisfaction.
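The four metrics can be computed from a simple list of deployment records. The sketch below assumes a hypothetical `Deployment` record with commit, deploy, and restore timestamps; real pipelines will need to map their own data onto it. For simplicity it measures time to restore from the failed deployment, whereas many teams measure from when the incident was detected.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    # Hypothetical record shape; map your pipeline's data onto these fields.
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool = False                      # did it cause a production failure?
    restored_time: Optional[datetime] = None  # when service was restored, if it failed


def dora_summary(deployments: List[Deployment], period_days: float) -> dict:
    """Compute the four DORA metrics for the deployments in one reporting period."""
    n = len(deployments)
    lead_times = [d.deploy_time - d.commit_time for d in deployments]
    failures = [d for d in deployments if d.failed]
    # Simplification: restore time is measured from the failed deployment, not incident detection.
    restore_times = [d.restored_time - d.deploy_time for d in failures if d.restored_time]
    return {
        "deployments_per_day": n / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        "change_failure_rate": len(failures) / n if n else 0.0,
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Hypothetical sample: four deployments over two days, one of which failed.
t0 = datetime(2024, 5, 1, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0, t0 + timedelta(hours=8), failed=True, restored_time=t0 + timedelta(hours=9)),
    Deployment(t0, t0 + timedelta(hours=12)),
    Deployment(t0, t0 + timedelta(hours=2)),
]
summary = dora_summary(deploys, period_days=2)
print(summary)
```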

Actionable Improvements Based on DORA Metric Findings

The findings from DORA metric analysis should be directly translated into actionable improvements. The specific actions will vary depending on the team and the identified bottlenecks, but the general approach remains consistent: analyze the data, identify the problem, implement a solution, and then re-measure the metrics to assess the impact of the change. Examples of actionable improvements based on specific metric findings:

- Low Deployment Frequency: If deployment frequency is low, investigate the build process. Are builds failing frequently? Are there long manual steps in the deployment process? Automating these steps can significantly increase deployment frequency. For example, implementing a Continuous Integration/Continuous Delivery (CI/CD) pipeline, using tools like Jenkins, GitLab CI, or CircleCI, can automate builds, tests, and deployments, leading to more frequent releases.

- Long Lead Time for Changes: If lead time for changes is excessive, examine the development and testing phases. Are code reviews taking too long? Are tests slow or unreliable? Implementing practices like pair programming, automated testing (unit, integration, and end-to-end tests), and feature flagging can reduce lead times. For instance, a team might implement automated unit tests that run within minutes, providing immediate feedback on code changes and reducing the time spent on manual testing.

- High Change Failure Rate: If the change failure rate is high, review the testing and deployment processes. Are tests comprehensive enough? Are deployments properly validated? Implementing robust testing strategies, including automated testing and canary deployments (releasing to a small subset of users first), can reduce failure rates. Consider adding automated rollback capabilities to quickly revert failed deployments.

- High Mean Time to Recover (MTTR): If MTTR is high, focus on incident response and monitoring. Are there clear incident response procedures? Are monitoring tools providing sufficient visibility into the system? Implementing robust monitoring and alerting systems, and establishing clear incident response protocols, can help reduce MTTR. For example, tools like Prometheus and Grafana can provide real-time monitoring of system health and alert teams to issues as they occur.
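Canary analysis with automated rollback can be reduced to a comparison of error rates. The function below is a hypothetical policy, not the behavior of any specific tool: it waits for a minimum amount of canary traffic, then rolls back if the canary's error rate exceeds the baseline's by more than a chosen ratio. The thresholds are placeholder values to tune per service.

```python
def canary_verdict(baseline_errors, baseline_requests, canary_errors, canary_requests,
                   max_ratio=1.5, min_error_rate=0.001, min_requests=500):
    """Hypothetical canary policy: return "wait", "promote", or "rollback".

    The canary may exceed the baseline error rate by up to `max_ratio`, with a
    floor of `min_error_rate` so that a near-zero baseline does not trigger a
    rollback on a single error. All thresholds are placeholders.
    """
    if canary_requests < min_requests:
        return "wait"  # not enough canary traffic to judge yet
    baseline_rate = baseline_errors / baseline_requests if baseline_requests else 0.0
    canary_rate = canary_errors / canary_requests
    allowed_rate = max(baseline_rate * max_ratio, min_error_rate)
    return "rollback" if canary_rate > allowed_rate else "promote"


# Hypothetical traffic counts: baseline at 0.1% errors, canary at 0.2%.
print(canary_verdict(10, 10_000, 2, 1_000))
```

Progressive-delivery tools offer automated analysis of this kind; the value of writing the rule down, even as a sketch, is that the rollback criterion becomes explicit and reviewable.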

Improvement Strategies for Reducing Lead Time for Changes (Example)

The following example outlines specific improvement strategies for a fictional software development team, focusing on reducing Lead Time for Changes, based on the findings from their DORA metrics.

The “VelocityCoders” team has a high Lead Time for Changes. Analysis revealed that the main contributors to this long lead time are lengthy code review processes and slow integration testing. Improvement Strategies:

- Optimize Code Reviews: Implement a more efficient code review process. This includes:

  - Setting clear guidelines for code reviews (e.g., maximum change size, specific review criteria).

  - Using code review tools that integrate directly with the team’s version control system (e.g., GitHub, GitLab, Bitbucket).

  - Establishing a process for quickly resolving review comments.

- Accelerate Integration Testing: Improve the speed and reliability of integration tests. This includes:

  - Refactoring slow tests to run faster.

  - Automating the execution of integration tests as part of the CI/CD pipeline.

  - Implementing parallel test execution where possible.

- Implement Feature Flags: Use feature flags to enable continuous integration and deployment. This allows developers to merge code frequently and release features incrementally, reducing the impact of large changes.

- Improve Communication: Encourage better communication between developers and testers to resolve issues quickly. Implement daily stand-up meetings to discuss progress, blockers, and any issues arising during the development and testing phases.

By implementing these strategies, the VelocityCoders team aims to significantly reduce their Lead Time for Changes and improve their overall software delivery performance. They will continuously monitor their DORA metrics to measure the effectiveness of these improvements.
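To find where lead time goes, as in the VelocityCoders analysis, the total can be broken down into stages. This sketch assumes each change carries timestamps for a hypothetical set of stages (commit, review requested, approved, merged, integration tests passed, deployed) and reports the median hours spent in each, largest first. The sample data is invented for illustration.

```python
from datetime import datetime
from statistics import median

# Hypothetical pipeline stages, in order; each change records a timestamp per stage.
STAGES = ["committed", "review_requested", "approved", "merged", "tests_passed", "deployed"]


def stage_durations(change):
    """Hours spent between each consecutive pair of stages for one change."""
    return {
        f"{start} -> {end}": (change[end] - change[start]).total_seconds() / 3600
        for start, end in zip(STAGES, STAGES[1:])
    }


def median_stage_hours(changes):
    """Median hours per stage across all changes, sorted from largest to smallest."""
    per_stage = {}
    for change in changes:
        for stage, hours in stage_durations(change).items():
            per_stage.setdefault(stage, []).append(hours)
    medians = {stage: median(hours) for stage, hours in per_stage.items()}
    return dict(sorted(medians.items(), key=lambda item: item[1], reverse=True))


def ts(day, hour):
    """Shorthand for a timestamp in May 2024 (sample data only)."""
    return datetime(2024, 5, day, hour)


# Invented sample in which review and integration testing dominate.
changes = [
    {"committed": ts(1, 9), "review_requested": ts(1, 10), "approved": ts(2, 16),
     "merged": ts(2, 17), "tests_passed": ts(3, 5), "deployed": ts(3, 6)},
    {"committed": ts(6, 9), "review_requested": ts(6, 11), "approved": ts(7, 15),
     "merged": ts(7, 16), "tests_passed": ts(8, 2), "deployed": ts(8, 4)},
]
for stage, hours in median_stage_hours(changes).items():
    print(f"{stage}: {hours:.1f} h")
```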

Integrating DORA Metrics with Other Performance Indicators

Integrating DORA metrics with other performance indicators is crucial for gaining a holistic understanding of DevOps performance and its impact on the business. This added context supports data-driven decisions that align with overall organizational goals and keeps improvement efforts focused on better business outcomes.

Comparing DORA Metrics with Other Relevant Performance Indicators

DORA metrics provide a valuable lens through which to view software delivery performance. However, they don’t tell the entire story. Comparing them with other relevant performance indicators provides a more complete picture of DevOps’ impact. This comparison reveals correlations, identifies areas for improvement, and helps justify investments in DevOps practices.

- Business Outcomes: Directly link DORA metrics to business outcomes such as revenue, profit, and market share. For example, faster lead time (a DORA metric) can translate to quicker time-to-market, potentially increasing revenue. Higher deployment frequency can enable faster responses to market changes, giving a competitive edge.

- Team Performance: Measure team morale, employee satisfaction, and skill development. A high-performing DevOps team is likely to exhibit good DORA metric scores. Correlating these indicators can highlight the impact of DevOps practices on team well-being and productivity.

- Operational Efficiency: Analyze infrastructure costs, resource utilization, and incident resolution times. While DORA focuses on software delivery, operational efficiency complements it by highlighting how efficiently the delivery process is run.

- Security and Compliance: Evaluate security vulnerabilities, compliance violations, and the time to remediate security issues. Integrating security metrics with DORA metrics emphasizes the importance of secure and compliant software delivery practices.

Correlating DORA Metrics with Customer Satisfaction and Revenue

Understanding the relationship between DORA metrics and customer satisfaction and revenue is vital for demonstrating the business value of DevOps. Faster delivery, lower failure rates, and quicker time-to-market, as measured by DORA, can directly impact customer satisfaction and, consequently, revenue. This correlation provides compelling evidence for investing in DevOps practices.

- Customer Satisfaction (CSAT): Higher deployment frequency and lower change failure rates can lead to more stable and reliable software, directly improving customer satisfaction. Regularly survey customers and correlate CSAT scores with DORA metrics.

- Net Promoter Score (NPS): NPS measures customer loyalty and willingness to recommend a product or service. Faster time-to-market, a result of improved lead time, can lead to increased NPS as customers experience new features and updates more quickly.

- Revenue Growth: Faster lead time and deployment frequency allow businesses to respond more quickly to market demands and customer feedback, potentially increasing revenue. Analyze revenue growth trends in relation to improvements in DORA metrics. For example, a 10% reduction in lead time might correlate with a 5% increase in revenue.

- Customer Acquisition Cost (CAC): Improved deployment frequency and stability, driven by good DORA performance, can reduce the number of bugs and incidents, potentially lowering CAC by improving customer retention and positive word-of-mouth.
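Correlating a DORA metric with a business indicator is a short calculation once both are aggregated to the same period. The sketch below computes a Pearson coefficient for hypothetical monthly change failure rates and CSAT scores. A strong coefficient indicates association, not causation: other changes in the same months may explain part of the movement.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length, at least 2 points each")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Hypothetical monthly figures: change failure rate (%) and average CSAT score.
cfr_percent = [18, 15, 12, 10, 8, 7]
csat = [3.9, 4.0, 4.1, 4.3, 4.4, 4.4]
r = pearson(cfr_percent, csat)
print(f"r = {r:.2f}")
```

A coefficient near -1 here means months with fewer failed changes tended to have higher satisfaction; with only a handful of data points, treat it as a prompt for further investigation rather than proof.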

Using DORA Metrics in Conjunction with Other Metrics to Tell a Comprehensive Story About DevOps Performance

Combining DORA metrics with other relevant indicators allows for a comprehensive understanding of DevOps performance and its impact on the business. This combined approach enables data-driven decision-making and facilitates continuous improvement efforts. It provides a more compelling narrative to stakeholders.

Example:

Consider a scenario where a company observes a significant improvement in their lead time (a DORA metric) after implementing CI/CD practices. To tell a comprehensive story, they can combine this data with other metrics:

- Lead Time (DORA): Decreased from 4 weeks to 1 week.

- Deployment Frequency (DORA): Increased from once a month to several times a week.

- Customer Satisfaction (CSAT): Increased by 15% as a result of faster feature releases and bug fixes.

- Revenue: Increased by 10% due to faster time-to-market and improved customer satisfaction.

- Team Morale: Increased by 20% as the team experienced less stress and improved collaboration.

This combination of metrics paints a clear picture of the positive impact of DevOps practices. The improvements in DORA metrics correlate directly with positive business outcomes, increased customer satisfaction, and improved team morale. This integrated view allows the company to justify further investments in DevOps and demonstrate its value to the organization.

Common Challenges and Solutions in Measuring DevOps Performance

Implementing DORA metrics, while beneficial, often presents significant hurdles for teams. These challenges can stem from technical limitations, organizational structures, or even cultural resistance. Addressing these obstacles proactively is crucial for a successful DevOps performance measurement journey. This section outlines common challenges and provides actionable strategies to overcome them.

Data Collection and Integration Challenges

Collecting and integrating data from diverse tools and systems is a primary hurdle. The DevOps landscape often involves a multitude of tools for version control, CI/CD, monitoring, and more. This fragmentation makes it difficult to gather the necessary data for calculating DORA metrics. To address this, consider the following:

- Automated Data Pipelines: Implement automated data pipelines to extract, transform, and load (ETL) data from various sources. This reduces manual effort and improves data accuracy.

- API Integration: Leverage APIs provided by the tools in your DevOps toolchain to programmatically access data. This is often a more reliable and efficient method than manual data extraction.

- Centralized Data Storage: Store the collected data in a centralized data warehouse or data lake. This facilitates easier analysis and reporting.

- Standardized Data Formats: Normalize data from different tools into a standard format, with common field names, units, and time zones. This simplifies data integration and ensures consistency.

- Tool Selection: When selecting new tools, prioritize those that offer robust API integrations and data export capabilities. This future-proofs your data collection process.
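As an illustration of standardized data formats, the sketch below maps two hypothetical tool exports onto one common record type. The payload shapes and field names are assumptions, not the formats of any real product; the point is that every source is converted to the same fields and to UTC timestamps before analysis.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DeploymentEvent:
    """Common schema that every source is normalized into (hypothetical)."""
    service: str
    commit_sha: str
    deployed_at: datetime  # always timezone-aware UTC
    succeeded: bool


def from_tool_a(raw):
    # Hypothetical shape: {"app": str, "sha": str, "finished": epoch seconds, "status": "success" | "failed"}
    return DeploymentEvent(
        service=raw["app"],
        commit_sha=raw["sha"],
        deployed_at=datetime.fromtimestamp(raw["finished"], tz=timezone.utc),
        succeeded=raw["status"] == "success",
    )


def from_tool_b(raw):
    # Hypothetical shape: {"service": {"name": str}, "revision": str, "timestamp": ISO-8601 with offset, "ok": bool}
    return DeploymentEvent(
        service=raw["service"]["name"],
        commit_sha=raw["revision"],
        deployed_at=datetime.fromisoformat(raw["timestamp"]).astimezone(timezone.utc),
        succeeded=bool(raw["ok"]),
    )


# The same deployment as reported by two hypothetical tools.
a = from_tool_a({"app": "checkout", "sha": "abc123", "finished": 1714554000, "status": "success"})
b = from_tool_b({"service": {"name": "checkout"}, "revision": "abc123",
                 "timestamp": "2024-05-01T11:00:00+02:00", "ok": True})
print(a == b)
```

Here one tool reports epoch seconds and the other a local time with a UTC offset, yet both normalize to identical records, so duplicates can be detected and metrics computed without per-tool special cases.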

Lack of Tooling and Automation

Many organizations lack the necessary tooling and automation to effectively measure DORA metrics. Without automated processes, the manual effort required to calculate and track these metrics can be overwhelming, leading to inaccuracies and delays. Solutions to this challenge include:

- Implementing Automation: Automate data collection, calculation, and reporting. Tools like custom scripts, dedicated monitoring solutions (e.g., Prometheus, Grafana), and CI/CD pipelines can significantly streamline these processes.

- Utilizing Dashboards: Create dashboards that visualize DORA metrics in real-time. This provides instant insights into performance and enables quick identification of bottlenecks.

- Investing in DevOps Tooling: Explore and invest in DevOps-specific tools that offer built-in DORA metric tracking capabilities. Examples include tools that integrate with CI/CD pipelines to automatically capture and report on key metrics.

- Developing Internal Tools: Consider developing custom tools or scripts if off-the-shelf solutions don’t fully meet your needs. This allows for greater customization and control.

Resistance to Change and Lack of Buy-in

Cultural resistance and a lack of buy-in from team members can significantly hinder the successful implementation of DORA metrics. People may resist changes to existing workflows or be skeptical of the value of performance measurement. To foster a culture of acceptance and collaboration:

- Communicate the Value: Clearly communicate the benefits of using DORA metrics, such as improved software delivery performance, reduced risk, and increased team efficiency.

- Involve the Team: Involve team members in the implementation process. Gather their input and feedback to ensure that the chosen metrics and measurement methods are relevant and practical.

- Provide Training and Education: Offer training and education on DORA metrics, DevOps principles, and the tools being used. This helps team members understand the concepts and how they can contribute to the measurement process.

- Lead by Example: Demonstrate the importance of DORA metrics by actively tracking and using them to inform decisions.

- Celebrate Successes: Acknowledge and celebrate successes achieved through improvements based on DORA metrics. This reinforces the value of the measurement process and motivates team members.

Organizational Silos and Collaboration Issues

Silos between different teams (e.g., development, operations, security) can create communication barriers and hinder collaboration, making it difficult to gather and analyze data across the entire software delivery lifecycle. Overcoming this requires:

- Promoting Cross-Functional Collaboration: Encourage cross-functional teams to work together. Establish clear communication channels and processes for sharing information.

- Implementing DevOps Practices: Adopt DevOps practices such as continuous integration and continuous delivery (CI/CD) to break down silos and foster collaboration.

- Establishing Shared Goals: Define shared goals and metrics that align the interests of different teams. This helps to create a sense of shared responsibility for software delivery performance.

- Using Collaboration Tools: Utilize collaboration tools, such as Slack, Microsoft Teams, or dedicated project management software, to facilitate communication and information sharing.

Inaccurate or Incomplete Data

Inaccurate or incomplete data can lead to misleading insights and incorrect conclusions about DevOps performance. This can undermine trust in the metrics and lead to poor decision-making. To ensure data accuracy and completeness:

- Validate Data Sources: Regularly validate the data sources used for calculating DORA metrics. Ensure that the data is accurate, reliable, and up-to-date.

- Implement Data Quality Checks: Implement data quality checks to identify and correct errors in the data. This might involve automated validation rules or manual reviews.

- Monitor Data Pipelines: Monitor the data pipelines used for collecting and processing data. Identify and address any issues that may be affecting data quality.

- Document Data Sources and Processes: Document the data sources, data definitions, and data processing steps used for calculating DORA metrics. This makes it easier to understand and maintain the measurement process.
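Automated validation rules can catch many data problems before they reach a dashboard. This is a minimal sketch assuming deployment records are dictionaries with hypothetical `id`, `commit_time`, and `deploy_time` fields holding naive datetimes; extend the rules to match your own data.

```python
from datetime import datetime


def validate(record, now=None):
    """Return a list of data-quality problems for one deployment record (empty if clean).

    Assumes hypothetical fields `id`, `commit_time`, and `deploy_time` holding naive datetimes.
    """
    now = now or datetime.now()
    problems = [f"missing {field}" for field in ("id", "commit_time", "deploy_time")
                if record.get(field) is None]
    commit, deploy = record.get("commit_time"), record.get("deploy_time")
    if commit is not None and deploy is not None and deploy < commit:
        problems.append("deploy_time precedes commit_time")
    if deploy is not None and deploy > now:
        problems.append("deploy_time is in the future")
    return problems


def find_duplicates(records):
    """Return IDs that appear more than once (for example, a webhook delivered twice)."""
    seen, duplicates = set(), set()
    for record in records:
        record_id = record.get("id")
        if record_id is None:
            continue  # missing IDs are reported by validate()
        if record_id in seen:
            duplicates.add(record_id)
        seen.add(record_id)
    return duplicates


# Hypothetical records: one clean, one with inverted timestamps, one missing a field.
good = {"id": "d1", "commit_time": datetime(2024, 5, 1, 9), "deploy_time": datetime(2024, 5, 1, 12)}
inverted = {"id": "d2", "commit_time": datetime(2024, 5, 2, 12), "deploy_time": datetime(2024, 5, 2, 9)}
incomplete = {"id": "d3", "commit_time": None, "deploy_time": datetime(2024, 5, 3, 9)}
for rec in (good, inverted, incomplete):
    print(rec["id"], validate(rec))
print("duplicates:", find_duplicates([good, inverted, good]))
```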

Cultural Change and Its Importance

Successful DevOps performance measurement necessitates a shift in organizational culture. It requires a move away from a blame culture to one focused on continuous learning and improvement. Here are some key aspects of this cultural transformation:

- Embracing a Growth Mindset: Encourage a growth mindset, where team members view challenges as opportunities for learning and improvement.

- Focusing on Outcomes: Shift the focus from individual tasks to overall outcomes, such as faster delivery, increased stability, and improved customer satisfaction.

- Promoting Blameless Postmortems: Implement blameless postmortems to analyze incidents and identify areas for improvement without assigning blame. This encourages open communication and learning from mistakes. For example, after a major production outage, the team can analyze the root causes, identify preventive measures, and update the system to avoid future occurrences.

- Fostering Psychological Safety: Create a psychologically safe environment where team members feel comfortable taking risks, experimenting, and sharing feedback without fear of reprisal. Google’s Project Aristotle, for example, highlighted psychological safety as a key factor in high-performing teams.

- Continuous Improvement: Emphasize the importance of continuous improvement. Regularly review DORA metrics, identify areas for improvement, and implement changes to optimize performance. An example would be a team consistently working to decrease their lead time for changes, using DORA metrics to track progress and adapt their processes.

Conclusive Thoughts

In conclusion, measuring DevOps performance with DORA metrics is not just about tracking numbers; it’s about fostering a culture of continuous improvement and driving business value. By understanding and acting upon the insights provided by these key metrics, teams can identify bottlenecks, optimize their processes, and ultimately deliver software faster, more reliably, and with greater impact. Embrace the journey, and watch your DevOps practices evolve towards peak performance and success.

FAQ Insights

What are the key benefits of using DORA metrics?

DORA metrics provide a data-driven approach to understanding and improving software delivery performance. They help teams identify bottlenecks, reduce risk, and ultimately deliver value faster and more reliably.

How do I get started with measuring DORA metrics?

Start by identifying the tools and systems you use for your software delivery pipeline. Then, determine how you can collect the necessary data for each DORA metric (Deployment Frequency, Lead Time for Changes, Change Failure Rate, and Time to Restore Service). Consider using monitoring and automation tools to streamline data collection.

What tools can I use to track DORA metrics?

Various tools can be used, including CI/CD platforms (e.g., Jenkins, GitLab CI), monitoring tools (e.g., Prometheus, Datadog), and data visualization dashboards (e.g., Grafana, Tableau). The choice depends on your existing infrastructure and specific needs.

How often should I review my DORA metrics?

Regular reviews, such as weekly or bi-weekly, are recommended to track progress and identify trends. This allows for timely adjustments and improvements to your DevOps practices.

What if my DORA metrics are not ideal?

Analyze the data to pinpoint the root causes of the issues. Then, implement targeted improvements. For example, if Lead Time for Changes is high, focus on automating testing or streamlining the deployment process. Continuous improvement is key.