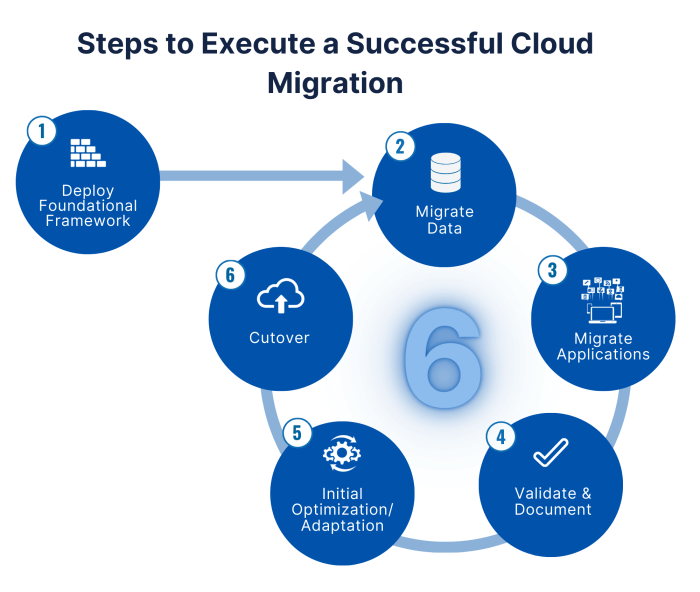

Migrating to the cloud is often viewed as a transformative step, promising scalability, agility, and reduced infrastructure management overhead. However, the journey doesn’t end with the completion of the migration. A critical, and often overlooked, phase involves actively managing and optimizing cloud costs to realize the full potential of your investment. This guide delves into the essential strategies and techniques required to effectively control and minimize cloud spending after your migration is complete, ensuring sustainable cost efficiency.

This document provides a structured approach, covering key areas such as monitoring and visibility, resource right-sizing, leveraging savings plans, cost allocation, storage optimization, automation, application refactoring, and network cost management. Each section offers practical guidance, from setting up monitoring dashboards to implementing automation workflows, enabling you to proactively manage and reduce cloud expenses. By implementing these strategies, organizations can transform cloud cost management from a reactive task into a proactive, data-driven process, leading to significant and sustained cost savings.

Monitoring and Visibility Post-Migration

Effective cloud cost optimization after migration hinges on robust monitoring and visibility into cloud spending patterns. This requires a proactive approach to track key metrics, establish centralized dashboards, and implement alerting mechanisms to identify and address cost anomalies promptly. Such measures are crucial for maintaining control over cloud expenditures and realizing the full financial benefits of cloud adoption.

Essential Metrics for Cloud Cost Optimization

Understanding and tracking the right metrics is paramount for effective cost management. This section details key metrics that should be monitored post-migration.

- Total Cloud Spend: The overarching metric representing the cumulative cost incurred for all cloud resources. Monitoring this provides a baseline understanding of overall spending trends.

- Resource Utilization: Tracking resource utilization (CPU, memory, storage, network) helps identify underutilized resources, which can be scaled down or eliminated to reduce costs. For example, a virtual machine consistently using only 10% of its CPU capacity represents a potential area for optimization.

- Cost per Resource: Analyzing the cost of individual resources (e.g., compute instances, storage buckets, databases) provides granular insights into where spending is concentrated. This allows for targeted optimization efforts.

- Cost per Application/Service: Allocating costs to specific applications or services helps understand the financial impact of each workload. This is especially important in a multi-application environment.

- Data Transfer Costs: Monitoring data transfer costs, particularly egress charges, is crucial, as these can significantly impact overall cloud expenses. Understanding data flow patterns helps in optimizing data transfer strategies.

- Storage Costs: Tracking storage costs across different storage tiers (e.g., standard, infrequent access, archive) enables optimization based on data access patterns. For instance, moving infrequently accessed data to cheaper storage tiers can lead to significant savings.

- Reserved Instance/Commitment Utilization: Monitoring the utilization of reserved instances or committed use discounts is critical to ensure that the discounts are fully leveraged. Low utilization indicates a missed opportunity for cost savings.

- Cost per Unit of Business Value: This metric ties cloud costs to business outcomes, such as cost per transaction or cost per customer. This provides a more holistic view of cloud cost efficiency.
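As a small illustration of the last metric, the sketch below divides a month's spend by a business volume figure. The function name `cost_per_unit` and the sample numbers are hypothetical, chosen only to show the arithmetic.

```python
def cost_per_unit(total_cost: float, units: int) -> float:
    """Return cloud cost per business unit (for example, per transaction)."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_cost / units


# Hypothetical month: $12,000 of cloud spend serving 400,000 transactions.
print(f"${cost_per_unit(12_000, 400_000):.4f} per transaction")
```

Tracking this figure month over month shows whether spend is growing faster or slower than the business activity it supports.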

Setting Up a Centralized Monitoring Dashboard

A centralized monitoring dashboard provides a single pane of glass for visualizing cloud spending and resource utilization. This section outlines the steps involved in setting up such a dashboard.

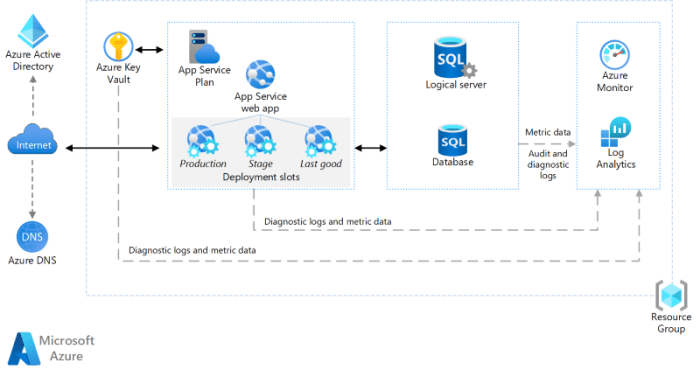

- Choose a Monitoring Tool: Select a cloud monitoring tool that supports the chosen cloud provider(s). Popular options include cloud provider native tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring), third-party solutions (e.g., Datadog, New Relic, Dynatrace), and open-source alternatives (e.g., Prometheus, Grafana). The choice should consider features, scalability, and integration capabilities.

- Collect Data: Configure the monitoring tool to collect relevant metrics from all cloud resources. This involves enabling monitoring services and configuring data collection agents or integrations.

- Create Dashboards: Design and build dashboards to visualize the collected metrics. These dashboards should include charts, graphs, and tables that provide clear insights into spending patterns, resource utilization, and cost drivers.

- Customize Views: Create different dashboard views tailored to different stakeholders (e.g., finance, engineering, operations). This allows each team to focus on the metrics most relevant to their roles.

- Establish Baselines: Establish baseline metrics to compare current performance against historical trends. This helps identify anomalies and potential cost optimization opportunities. For example, track the average CPU utilization of a particular instance type over a period to establish a baseline, and then monitor for deviations.

- Automate Reporting: Configure automated reporting to regularly share dashboard data with relevant stakeholders. This ensures that everyone stays informed about cloud spending and performance.

Configuring Alerts for Cost Anomalies and Spikes

Implementing alerts is crucial for proactive cost management. This section details how to configure alerts to identify and respond to unexpected spending.

- Define Alerting Thresholds: Set up thresholds for key metrics based on historical data, budget targets, and expected spending patterns. For example, an alert can be triggered if the total cloud spend exceeds a predefined budget or if the cost of a specific service increases by a certain percentage within a given period.

- Implement Anomaly Detection: Utilize anomaly detection features offered by the monitoring tool to automatically identify unusual spending patterns. Anomaly detection algorithms can learn from historical data and flag deviations from the norm.

- Configure Alerting Rules: Define alerting rules that specify when and how alerts should be triggered. These rules should consider factors such as metric values, time windows, and resource types.

- Integrate with Notification Channels: Integrate the monitoring tool with notification channels, such as email, Slack, or ticketing systems, to ensure timely notification of alerts.

- Define Escalation Procedures: Establish escalation procedures to ensure that alerts are addressed promptly. This may involve routing alerts to specific teams or individuals based on their area of responsibility.

- Automate Remediation: Explore options for automating remediation actions in response to alerts. For example, an alert indicating high CPU utilization on a compute instance could trigger an automated scale-up action.
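The threshold and anomaly-detection steps above can be sketched in a few lines. This is a minimal illustration, not how any particular monitoring tool works: it assumes daily spend figures are already available as a list, and the function name, the 7-day trailing window, and the z-score cutoff of 3 are all illustrative choices.

```python
from statistics import mean, stdev


def find_spend_alerts(daily_spend, daily_budget, z_threshold=3.0, window=7):
    """Return (day_index, reason) pairs for days that exceed the budget or rise
    more than z_threshold standard deviations above the trailing-window mean."""
    alerts = []
    for i, amount in enumerate(daily_spend):
        # Static threshold: a fixed daily budget.
        if amount > daily_budget:
            alerts.append((i, "over_budget"))
        # Simple anomaly check against recent history (needs at least 3 days).
        history = daily_spend[max(0, i - window):i]
        if len(history) >= 3:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and (amount - mu) / sigma > z_threshold:
                alerts.append((i, "anomaly"))
    return alerts


# Hypothetical daily spend in USD; day 6 contains a spike.
spend = [100, 102, 98, 101, 99, 100, 180, 101]
print(find_spend_alerts(spend, daily_budget=150))
```

In practice the returned alerts would be forwarded to a notification channel; the built-in anomaly detection of a monitoring tool is usually more robust than a plain z-score.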

Right-Sizing Compute Resources

Optimizing cloud costs post-migration necessitates a proactive approach to resource management. Right-sizing compute resources, specifically virtual machines (VMs) or instances, is a crucial step in this process. This involves continuously evaluating resource utilization and adjusting instance sizes to match actual workload demands, thereby minimizing waste and maximizing efficiency.

Regular Review and Adjustment of Virtual Machine Sizes

The process of right-sizing compute resources is not a one-time activity; it requires a continuous monitoring and adjustment cycle. This involves establishing a consistent review schedule, analyzing performance metrics, and implementing changes based on observed trends.

- Establish a Review Schedule: Define a regular cadence for assessing instance performance. This could be weekly, bi-weekly, or monthly, depending on the volatility of the workload and the granularity of monitoring data. The schedule should align with the business cycles and any planned application changes.

- Collect and Analyze Performance Metrics: Implement robust monitoring to gather key performance indicators (KPIs). These include CPU utilization, memory usage, disk I/O, and network throughput. Use cloud provider-specific tools or third-party monitoring solutions to collect and analyze this data.

- Identify Utilization Patterns: Analyze the collected data to identify trends and patterns in resource usage. Look for instances consistently exceeding or falling below optimal utilization thresholds. For example, an instance consistently running at less than 20% CPU utilization may be a candidate for downsizing.

- Determine the Optimal Instance Size: Based on the analysis, determine the appropriate instance size for each VM. Consider the workload’s peak and average resource demands. If the workload has predictable peaks, the instance size should accommodate those peaks without excessive over-provisioning during off-peak hours.

- Implement Changes and Validate: Implement the recommended changes, such as downsizing or upgrading instances. After making changes, monitor the instance’s performance to ensure the new size is appropriate. Continue monitoring the instance for a period, such as a week or two, to validate that the changes did not negatively impact performance.

- Automate Where Possible: Consider automating the right-sizing process. Many cloud providers offer recommendation services that suggest instance sizes from observed utilization, as well as auto-scaling features that add or remove instances based on predefined rules and metrics. Both can significantly reduce the manual effort required for right-sizing.
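One way to picture the "determine the optimal instance size" step is a lookup against a size catalog with some headroom. The sketch below is an assumption-laden illustration: the catalog is a hand-written list (the vCPU and memory figures are the commonly published values for these AWS m5 sizes, but verify them for your provider), and the 80% target utilization and the function name are hypothetical.

```python
# Illustrative catalog: (name, vCPUs, memory in GiB), smallest first.
M5_SIZES = [
    ("m5.large", 2, 8),
    ("m5.xlarge", 4, 16),
    ("m5.2xlarge", 8, 32),
    ("m5.4xlarge", 16, 64),
]


def smallest_fitting_size(peak_vcpus, peak_mem_gib, target_utilization=0.8,
                          catalog=M5_SIZES):
    """Return the smallest size whose capacity, scaled by the target
    utilization, covers the observed peak CPU and memory demand."""
    for name, vcpus, mem_gib in catalog:
        fits_cpu = peak_vcpus <= vcpus * target_utilization
        fits_mem = peak_mem_gib <= mem_gib * target_utilization
        if fits_cpu and fits_mem:
            return name
    return None  # nothing in the catalog is large enough


print(smallest_fitting_size(peak_vcpus=1.2, peak_mem_gib=5.0))  # m5.large
print(smallest_fitting_size(peak_vcpus=1.2, peak_mem_gib=9.6))  # m5.xlarge
```

Note that the second call lands on a larger size purely because of memory: sizing on CPU alone can recommend an instance that the workload's memory footprint does not fit.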

Identifying and Addressing Underutilized Instances

Underutilized instances represent a significant source of wasted cloud spending. Identifying and addressing these instances is crucial for cost optimization. This involves a systematic approach to pinpointing instances with low resource utilization and taking appropriate action.

- Define Utilization Thresholds: Establish clear thresholds for determining underutilization. For example, an instance consistently utilizing less than 10-20% CPU or memory may be considered underutilized. These thresholds should be tailored to the specific workloads.

- Utilize Monitoring Tools: Leverage monitoring tools to track instance performance metrics. These tools provide dashboards and alerts that can help identify instances that are consistently below the defined thresholds.

- Analyze Historical Data: Review historical performance data to identify instances with a pattern of low utilization over an extended period. This can help distinguish between temporary fluctuations and chronic underutilization.

- Investigate the Root Cause: Once an underutilized instance is identified, investigate the reason for the low utilization. This may involve analyzing the application’s behavior, the instance’s configuration, or the workload itself.

- Downsize Instances: If the instance is underutilized, the most common solution is to downsize it to a smaller instance type. This can significantly reduce costs while maintaining adequate performance.

- Terminate Unused Instances: If an instance is consistently idle or not needed, terminate it. This frees up resources and eliminates unnecessary costs.

- Optimize Application Code: In some cases, the underutilization may be due to inefficient application code. Optimize the code to improve resource utilization.

- Consider Auto-Scaling: Implement auto-scaling to automatically adjust capacity, typically the number of running instances, based on demand. This can prevent over-provisioning and keep capacity matched to the workload.
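The threshold-based triage described above might look like the following sketch. The 20% "low" cutoff comes from the range mentioned in the list; the 5% "idle" cutoff, the label strings, and the rule that both CPU and memory must be below a threshold are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class InstanceStats:
    instance_id: str
    avg_cpu_pct: float  # long-run average CPU utilization, in percent
    avg_mem_pct: float  # long-run average memory utilization, in percent


def classify(stats, idle_pct=5.0, low_pct=20.0):
    """Label an instance from its average CPU and memory utilization."""
    busiest = max(stats.avg_cpu_pct, stats.avg_mem_pct)
    if busiest < idle_pct:
        return "terminate-candidate"
    if busiest < low_pct:
        return "downsize-candidate"
    return "keep"


# Hypothetical fleet with made-up identifiers and utilization figures.
fleet = [
    InstanceStats("web-1", 55.0, 60.0),
    InstanceStats("batch-7", 12.0, 18.0),
    InstanceStats("legacy-2", 1.5, 3.0),
]
report = {s.instance_id: classify(s) for s in fleet}
print(report)
```

The labels are candidates only; the root-cause investigation step still applies before anything is resized or terminated.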

Impact of Right-Sizing: Example Table

The following table demonstrates the potential impact of right-sizing on cloud costs. This is a hypothetical example to illustrate the savings potential. The actual savings will vary depending on the instance types, utilization levels, and pricing models.

| Instance Type | Current Utilization | Recommended Size | Estimated Savings (Monthly) |
|---|---|---|---|
| `m5.2xlarge` | 15% CPU, 30% Memory | `m5.large` | $250 |
| `c5.4xlarge` | 20% CPU, 40% Memory | `c5.xlarge` | $400 |
| `r5.8xlarge` | 10% CPU, 25% Memory | Terminate Instance | $800 |

The example illustrates the potential savings from right-sizing. The first row shows an `m5.2xlarge` instance with low utilization that is right-sized to a smaller `m5.large` instance. The second row shows a similar case for a `c5.4xlarge` instance. The third row shows an instance that is terminated outright, which removes its cost entirely.

Leveraging Reserved Instances and Savings Plans

Optimizing cloud costs post-migration necessitates a proactive approach to long-term resource allocation. Reserved Instances (RIs) and Savings Plans offer significant cost reductions compared to on-demand pricing by committing to consistent resource usage over a defined period. Strategic implementation of these tools requires careful analysis of workload patterns and a deep understanding of the available options.

Benefits of Using Reserved Instances and Savings Plans

The primary benefit of RIs and Savings Plans is the substantial reduction in cloud computing expenses. This reduction stems from a commitment to a certain level of resource usage, allowing cloud providers to offer discounted rates.

- Cost Reduction: The most immediate benefit is the significant reduction in hourly compute costs compared to on-demand pricing. Discounts can range from 30% to over 70%, depending on the cloud provider, instance type, commitment duration, and payment options.

- Predictable Costs: RIs and Savings Plans provide a predictable cost structure, making budgeting and forecasting easier. This is particularly valuable for organizations with consistent workloads.

- Capacity Reservation (for some RIs): Some RIs guarantee capacity, meaning the compute resources are reserved for the customer’s use, regardless of actual utilization. This is crucial for applications that require consistent performance and availability.

- Flexibility (for some Savings Plans): Savings Plans offer flexibility in terms of instance family and region, allowing for changes in resource allocation without losing the benefits of the discount.

Comparison of Reserved Instances and Savings Plans Offered by Major Cloud Providers

Cloud providers offer a variety of RIs and Savings Plans, each with its own characteristics. Understanding these differences is crucial for selecting the most appropriate option.

- Amazon Web Services (AWS): AWS offers several options:

  - Reserved Instances (RIs): Provide discounts in exchange for a commitment to a specific instance type, region, and operating system for a 1- or 3-year term. Payment options include All Upfront, Partial Upfront, and No Upfront. RIs can be Standard or Convertible. Standard RIs offer the highest discounts but are less flexible. Convertible RIs allow for changes to instance families, operating systems, and payment options.

  - Savings Plans: AWS Savings Plans offer flexible pricing models that provide savings on compute usage in exchange for a commitment to a consistent amount of usage (measured in USD per hour) for a 1- or 3-year term. Two types of Savings Plans are available: Compute Savings Plans and EC2 Instance Savings Plans. Compute Savings Plans apply to a broader range of compute usage, including EC2 instances, Lambda functions, and Fargate. EC2 Instance Savings Plans provide the highest savings for EC2 instance usage, offering comparable discounts to Standard RIs.

- Microsoft Azure: Azure offers Reserved Virtual Machine Instances (RIs).

  - Reserved Virtual Machine Instances (RIs): Provide discounts on virtual machines (VMs) in exchange for a 1- or 3-year commitment. RIs are available for various VM sizes, operating systems, and regions. Azure offers a flexible RI scope, allowing for the application of discounts across multiple subscriptions.

- Google Cloud Platform (GCP): GCP offers Committed Use Discounts (CUDs).

  - Committed Use Discounts (CUDs): Provide discounts on compute engine instances in exchange for a commitment to use a specific amount of resources for a 1- or 3-year term. CUDs can be zone-specific or regional. GCP offers flexible CUDs, allowing for the application of discounts across different instance families and regions within the same project.

Procedure for Calculating the Optimal Commitment Duration and Instance Type for Reserved Instances

Determining the optimal commitment duration and instance type requires a data-driven approach. This involves analyzing historical usage patterns, forecasting future resource needs, and evaluating the trade-offs between flexibility and cost savings.

- Analyze Historical Usage:

  - Gather historical data on compute resource utilization, including instance types, sizes, operating systems, and regions. Utilize cloud provider cost management tools to collect this data.

  - Identify periods of peak and average usage.

  - Determine the stability of workloads; stable workloads are better candidates for RIs.

- Forecast Future Resource Needs:

  - Project future resource requirements based on anticipated growth, application changes, and business plans. Consider seasonality and other factors that might influence resource demand.

  - Utilize capacity planning tools and forecasts from stakeholders to refine the projections.

- Evaluate RI/Savings Plan Options:

  - Compare the cost savings offered by different RI and Savings Plan options, including different commitment durations (1-year vs. 3-year) and payment options (All Upfront, Partial Upfront, No Upfront).

  - Consider the flexibility offered by Savings Plans and Convertible RIs to accommodate future changes in resource needs.

  - Use cloud provider cost calculators to model different scenarios and determine the optimal combination of RIs/Savings Plans.

- Calculate the Break-Even Point:

  - Determine the break-even point for each RI/Savings Plan option. This is the point at which the cost savings from the discount outweigh the cost of the commitment.

  - Consider the risk of over-provisioning or under-provisioning resources when selecting the commitment duration and instance type.

- Implement and Monitor:

  - Purchase the selected RIs/Savings Plans through the cloud provider’s console or API.

  - Continuously monitor resource utilization and costs to ensure that the RIs/Savings Plans are being utilized effectively. Adjust the resource allocation as needed to maximize cost savings.

  - Regularly review the RI/Savings Plan portfolio and make adjustments based on changing business needs and usage patterns.

Example: A company runs an EC2 instance 24/7 in the us-east-1 region at an on-demand cost of $100/month. After analyzing its usage, the company chooses a 3-year Standard RI with All Upfront payment. If that brings the effective cost down to $30/month, the commitment saves $70/month, a 70% reduction.
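The break-even step can be worked through with the numbers from the example above ($100/month on-demand versus an effective $30/month on a 3-year All Upfront commitment). The sketch below is illustrative: the function name is hypothetical, and "break-even" is taken here to mean the first month by which the cumulative on-demand bill would have reached the upfront payment.

```python
import math


def commitment_summary(on_demand_monthly, effective_monthly, term_months):
    """Compare an All Upfront commitment with paying on-demand for the same term."""
    upfront = effective_monthly * term_months
    on_demand_total = on_demand_monthly * term_months
    savings = on_demand_total - upfront
    return {
        "upfront_payment": upfront,
        "total_savings": savings,
        "savings_pct": 100 * savings / on_demand_total,
        # First month by which on-demand spend would have covered the upfront cost.
        "break_even_month": math.ceil(upfront / on_demand_monthly),
    }


print(commitment_summary(on_demand_monthly=100, effective_monthly=30, term_months=36))
```

With these inputs the upfront payment is $1,080, recovered in month 11 of 36. If the workload is likely to be retired or re-architected before that point, the commitment would cost more than staying on-demand.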

Implementing Cost Allocation and Tagging

After a successful cloud migration, effectively managing and optimizing cloud costs requires granular visibility into spending patterns. Implementing a robust cost allocation and tagging strategy is paramount for understanding where your cloud resources are being utilized and how much they are costing. This allows for informed decision-making, budget control, and the identification of areas for potential cost savings.

Organizing Tagging for Accurate Cost Allocation

The cornerstone of effective cost allocation is a well-defined and consistently applied tagging strategy. Tags are key-value pairs that are assigned to cloud resources, providing metadata that enables categorization and grouping. This allows for the allocation of costs to specific departments, projects, applications, or any other logical grouping relevant to your organization.

To achieve accurate cost allocation, consider the following:

- Define a Standardized Tagging Schema: Establish a consistent naming convention for tags across all cloud resources. This ensures uniformity and simplifies reporting. Consider using a combination of static and dynamic tags. Static tags are manually assigned and typically represent organizational aspects like department or project. Dynamic tags are often automatically applied by cloud services based on resource characteristics, such as instance type or region.

- Identify Key Tag Categories: Determine the categories that are most important for cost allocation within your organization. Common categories include:

  - Department: The business unit responsible for the resource (e.g., “Marketing”, “Engineering”, “Finance”).

  - Project: The specific project the resource supports (e.g., “WebsiteRedesign”, “DataWarehouse”, “MobileApp”).

  - Application: The application or service the resource is associated with (e.g., “CRM”, “E-commercePlatform”, “InternalTool”).

  - Environment: The environment the resource belongs to (e.g., “Production”, “Staging”, “Development”).

  - Owner: The individual or team responsible for the resource.

- Enforce Tagging Policies: Implement policies to ensure that all newly provisioned resources are tagged correctly. This can be achieved through automated processes, such as infrastructure-as-code (IaC) tools or cloud-native tagging enforcement mechanisms.

- Regularly Review and Update Tags: Periodically review the tagging strategy to ensure its continued relevance and accuracy. Update tags as needed to reflect changes in organizational structure, projects, or applications. This is crucial to avoid outdated or inaccurate cost allocations.
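A tagging policy of this kind can be checked mechanically. The sketch below validates a resource's tags against the categories listed above; the set of required keys and allowed environment values mirrors the examples in this section, while the function name and the choice to treat every category as mandatory are assumptions.

```python
REQUIRED_TAGS = {"Department", "Project", "Application", "Environment", "Owner"}
ALLOWED_ENVIRONMENTS = {"Production", "Staging", "Development"}


def tag_violations(tags):
    """Return a list of human-readable problems with a resource's tags."""
    # Missing required keys, reported in alphabetical order.
    problems = [f"missing tag: {key}" for key in sorted(REQUIRED_TAGS - set(tags))]
    # Environment must use one of the agreed values.
    env = tags.get("Environment")
    if env is not None and env not in ALLOWED_ENVIRONMENTS:
        problems.append(f"invalid Environment: {env}")
    return problems


print(tag_violations({"Project": "WebsiteRedesign", "Environment": "Prod"}))
```

A check like this could run in a provisioning pipeline or a periodic audit job, rejecting or flagging resources for which the returned list is not empty.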

Using Cost Allocation Tags for Reporting and Understanding Spending Patterns

Cost allocation tags are instrumental in generating insightful reports and gaining a comprehensive understanding of cloud spending. By leveraging these tags, you can analyze costs from various perspectives, identify cost drivers, and pinpoint areas for optimization.

To effectively utilize cost allocation tags for reporting:

- Utilize Cloud Provider’s Cost Management Tools: Cloud providers offer built-in cost management tools that allow you to filter and group costs based on tags. These tools provide dashboards, reports, and visualizations to help you analyze spending patterns.

- Generate Custom Reports: Beyond the built-in tools, create custom reports using the cloud provider’s APIs or third-party cost management platforms. This allows you to tailor reports to your specific needs and gain deeper insights. For example, you can create a report that shows the cost of each project, broken down by service and region.

- Analyze Spending Trends: Track spending trends over time to identify anomalies or unexpected increases in costs. This can help you proactively address potential issues and prevent cost overruns.

- Identify Cost Drivers: Determine the factors that are contributing the most to your cloud costs. This might include specific services, instance types, or regions. Understanding cost drivers allows you to focus your optimization efforts on the areas that will have the greatest impact.

- Establish Budget Alerts: Set up budget alerts to notify you when spending exceeds predefined thresholds. This helps you stay within budget and avoid unexpected costs.
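As a rough illustration of a custom tag-based report, the sketch below groups billing line items by one tag and sums their cost. The line-item structure (a dict with `cost` and `tags` keys) is a hypothetical simplification; real billing exports have provider-specific schemas.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key, untagged_label="(untagged)"):
    """Sum the cost of billing line items grouped by the value of one tag,
    returning the groups ordered from most to least expensive."""
    totals = defaultdict(float)
    for item in line_items:
        group = item.get("tags", {}).get(tag_key, untagged_label)
        totals[group] += item["cost"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical line items for one billing period.
line_items = [
    {"service": "compute", "cost": 420.0, "tags": {"Project": "WebsiteRedesign"}},
    {"service": "storage", "cost": 80.0, "tags": {"Project": "DataWarehouse"}},
    {"service": "compute", "cost": 300.0, "tags": {"Project": "DataWarehouse"}},
    {"service": "network", "cost": 55.0, "tags": {}},
]
print(cost_by_tag(line_items, "Project"))
```

Keeping an explicit "(untagged)" bucket makes gaps in tag coverage visible in the same report as the allocated costs.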

Best Practices for Creating and Maintaining a Consistent Tagging Strategy

Implementing and maintaining a consistent tagging strategy requires a proactive and disciplined approach. Following these best practices will help ensure the accuracy and effectiveness of your cost allocation efforts.

- Document Your Tagging Strategy: Create a comprehensive document that outlines your tagging schema, tag categories, naming conventions, and enforcement policies. This document should be easily accessible to all stakeholders.

- Provide Training and Education: Educate your team on the importance of tagging and how to apply tags correctly. This will help ensure that tags are consistently applied across all resources.

- Automate Tagging Where Possible: Automate the application of tags using IaC tools, cloud-native services, or custom scripts. This reduces the risk of human error and ensures consistency.

- Regularly Audit Your Tags: Conduct periodic audits to verify the accuracy and completeness of your tags. Identify and correct any inconsistencies or missing tags.

- Integrate Tagging with Other Processes: Integrate tagging with other processes, such as resource provisioning, change management, and incident management. This will help ensure that tags are automatically updated as resources change.

- Iterate and Improve: The tagging strategy is not static. Regularly review and update the strategy based on your evolving needs and experiences. This is an iterative process, so be prepared to make adjustments as needed.

Optimizing Storage Costs

Following a successful cloud migration, effectively managing storage costs becomes paramount. Inefficient storage practices can quickly erode the cost savings achieved through migration. This section explores strategies for optimizing storage expenses, ensuring that data is stored cost-effectively while maintaining accessibility and performance.

Strategies for Optimizing Storage Costs

A multi-faceted approach is necessary for optimizing storage costs. This involves leveraging various storage tiers, implementing data lifecycle management, and employing data compression techniques. The goal is to minimize expenses without compromising data availability or the ability to meet business requirements.

- Tiered Storage: This strategy involves classifying data based on its access frequency and importance, and storing it in the appropriate storage tier. This typically involves hot, warm, and cold tiers, each with different cost and performance characteristics.

- Data Lifecycle Management (DLM): DLM automates the movement of data between storage tiers based on predefined rules. This helps to ensure that infrequently accessed data is automatically moved to cheaper storage options, optimizing costs without manual intervention.

- Data Compression: Compressing data reduces the storage space required, thereby lowering storage costs. Compression can be implemented at the application level or by utilizing storage features that automatically compress data.

Moving Infrequently Accessed Data to Cheaper Storage Tiers

The process of moving infrequently accessed data to cheaper storage tiers is a critical component of cost optimization. This process typically involves identifying data based on access patterns, defining policies for data movement, and automating the transfer process.

- Identifying Data Access Patterns: Analyzing data access patterns is the first step. This involves monitoring data access logs to determine how frequently different data objects are accessed. Tools and services provided by cloud providers can help in this analysis.

- Defining Data Lifecycle Policies: Based on the analysis, data lifecycle policies are defined. These policies specify criteria for moving data between tiers, such as the age of the data or the last access time. For instance, data that hasn’t been accessed in 90 days might be moved to a colder storage tier.

- Automating Data Transfer: Once the policies are defined, the cloud provider’s services or third-party tools are used to automate the data transfer process. This ensures that data is moved to the appropriate tier automatically, based on the defined policies.

- Monitoring and Optimization: Continuous monitoring of data access patterns and storage costs is essential. This helps to refine data lifecycle policies and optimize storage costs over time. The performance of applications that access data in cheaper storage tiers must also be monitored to ensure that performance is acceptable.
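A lifecycle policy based on last access time reduces to a small rule table. In the sketch below, the 90-day cutoff follows the example above; the 365-day cutoff for the coldest tier, the tier names, and the function name are assumptions for illustration. Cloud providers express such rules in their own lifecycle configuration formats rather than in application code.

```python
from datetime import date

# Hypothetical policy: (minimum days since last access, tier), coldest first.
LIFECYCLE_RULES = [(365, "cold"), (90, "warm"), (0, "hot")]


def target_tier(last_accessed, today, rules=LIFECYCLE_RULES):
    """Return the storage tier an object should live in, given its last access date."""
    age_days = (today - last_accessed).days
    for min_days, tier in rules:
        if age_days >= min_days:
            return tier
    return rules[-1][1]  # fall back to the hottest tier (e.g. clock skew)


today = date(2024, 6, 30)
print(target_tier(date(2024, 6, 1), today))  # accessed 29 days ago
print(target_tier(date(2024, 3, 1), today))  # accessed 121 days ago
print(target_tier(date(2023, 1, 1), today))  # accessed more than a year ago
```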

Storage Options Comparison Table

The following table illustrates the different storage options available, along with their associated costs, access frequencies, and use cases. Costs are illustrative and may vary based on the cloud provider and region.

| Storage Type | Cost per GB (Monthly) | Access Frequency | Use Case |
|---|---|---|---|
| Hot Storage (e.g., Standard/Frequent Access) | $0.02 – $0.03 | Frequent | Active data, frequently accessed applications, databases, content delivery. |
| Warm Storage (e.g., Infrequent Access) | $0.01 – $0.02 | Infrequent | Backups, older application data, infrequently accessed archives. |
| Cold Storage (e.g., Glacier, Deep Archive) | $0.004 – $0.01 | Rare | Long-term archives, regulatory compliance data, disaster recovery data. |
| Object Storage (e.g., S3, Azure Blob Storage) | Varies (based on tier and access) | Variable | Data lakes, media files, static website hosting, backups. |
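To see what tiering can mean in dollars, the sketch below prices a dataset using the midpoint of each illustrative range in the table ($0.025, $0.015, and $0.007 per GB-month). The 10,000 GB dataset and its split across tiers are made up, and the calculation deliberately ignores retrieval, request, and minimum-duration charges, which colder tiers commonly carry.

```python
# Midpoints of the illustrative per-GB monthly price ranges in the table above.
PRICE_PER_GB = {"hot": 0.025, "warm": 0.015, "cold": 0.007}


def monthly_storage_cost(gb_by_tier, prices=PRICE_PER_GB):
    """Return the monthly storage cost in USD for a {tier: gigabytes} mapping."""
    return sum(gb * prices[tier] for tier, gb in gb_by_tier.items())


# Hypothetical 10,000 GB dataset, before and after tiering.
all_hot = {"hot": 10_000}
tiered = {"hot": 2_000, "warm": 3_000, "cold": 5_000}

before = monthly_storage_cost(all_hot)
after = monthly_storage_cost(tiered)
print(f"before: ${before:.2f}, after: ${after:.2f}, saved: ${before - after:.2f}")
```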

Automating Cost Optimization Processes

Automating cost optimization is crucial for maintaining efficiency and realizing sustained savings post-migration. Manually managing cloud resources is time-consuming and prone to human error. Automation ensures consistent application of cost-saving strategies, proactive detection of inefficiencies, and rapid response to changing resource demands. This approach allows organizations to shift their focus from reactive cost management to strategic resource allocation and innovation.

Designing a Workflow for Automating Cost Optimization

Designing an effective workflow for automating cost optimization involves several key steps. This process should be iterative and continuously refined based on performance data and evolving business needs.

- Resource Discovery and Inventory: Begin by establishing a comprehensive inventory of all cloud resources. This involves identifying instances, storage volumes, databases, and other services. This inventory forms the baseline for subsequent optimization efforts. Tools such as AWS Config, Azure Resource Graph, and Google Cloud Asset Inventory can be utilized for this purpose.

- Data Collection and Analysis: Implement data collection mechanisms to gather metrics related to resource utilization, performance, and cost. These metrics should be stored in a centralized repository for analysis. Tools like Prometheus, Grafana, and cloud provider-specific monitoring services are invaluable.

- Policy Definition: Define clear policies and thresholds for cost optimization. These policies should specify acceptable levels of resource utilization, idle time, and cost anomalies. For example, a policy might define an instance as “idle” if CPU utilization remains below 5% for more than a week.

- Automated Right-Sizing: Develop automation scripts to analyze resource utilization data and recommend or automatically implement right-sizing actions. This includes scaling instances up or down based on demand, terminating idle resources, and selecting more cost-effective instance types.

- Idle Resource Detection: Implement automation to identify and terminate idle resources. This process should involve regularly monitoring resource usage and triggering actions based on pre-defined thresholds.

- Reporting and Alerting: Configure automated reporting and alerting mechanisms to provide visibility into cost optimization efforts. These reports should track key metrics, such as cost savings, resource utilization, and policy compliance. Alerts should be triggered when anomalies or violations of defined policies are detected.

- Continuous Improvement: Regularly review and refine the automated workflow based on performance data and evolving business requirements. This includes adjusting policies, optimizing scripts, and integrating new cost optimization strategies.
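The example policy above (an instance is "idle" if CPU utilization stays below 5% for more than a week) can be expressed as a check over daily averages. This is a minimal sketch: it assumes one average CPU value per day, ordered oldest to newest, and the function name is hypothetical.

```python
def is_idle(daily_avg_cpu_pct, threshold_pct=5.0, min_days=7):
    """Return True if the most recent unbroken run of days below the
    threshold is longer than min_days."""
    streak = 0
    # Walk backwards from the most recent day until a busy day is found.
    for value in reversed(daily_avg_cpu_pct):
        if value >= threshold_pct:
            break
        streak += 1
    return streak > min_days


print(is_idle([40.0] + [2.0] * 8))  # quiet for the last 8 days
print(is_idle([2.0] * 8 + [40.0]))  # busy yesterday, so the streak is broken
```

Counting only the most recent streak avoids flagging an instance that was quiet in the past but has since returned to use.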

Examples of Tools and Scripts for Automation

Several tools and scripts can be employed to automate cost optimization efforts. The choice of tools depends on the specific cloud provider, existing infrastructure, and the complexity of the optimization tasks.

- Cloud Provider Native Tools: AWS, Azure, and Google Cloud provide native tools that offer automation capabilities. These tools often integrate with other services and provide a streamlined approach to managing resources. For instance:

- AWS: AWS Compute Optimizer analyzes resource utilization and recommends right-sizing actions for EC2 instances. AWS Cost Explorer provides detailed cost analysis and allows the creation of custom reports. AWS Lambda can be used to automate tasks based on events, such as automatically shutting down idle instances.

- Azure: Azure Advisor provides recommendations for optimizing cost, performance, security, and reliability. Azure Automation enables the creation and scheduling of runbooks to automate tasks. Azure Monitor collects and analyzes metrics, providing insights into resource utilization.

- Google Cloud: Recommender provides cost optimization recommendations based on resource usage. Cloud Deployment Manager enables the automation of infrastructure deployment and management. Cloud Monitoring collects and analyzes metrics, allowing for proactive detection of issues.

- Open-Source Tools: Open-source tools offer flexibility and customization options for automating cost optimization.

- Terraform: Terraform is an infrastructure-as-code tool. It automates the provisioning and management of cloud resources, making it easier to right-size instances and optimize configurations through version-controlled definitions.

- Ansible: Ansible is a configuration management and automation tool that can automate tasks such as installing software, configuring systems, and managing cloud resources. It can be used to automate the deployment and configuration of cost optimization tools.

- Python Scripts with Cloud SDKs: Python scripts, combined with cloud provider SDKs (e.g., Boto3 for AWS, Azure SDK for Python, Google Cloud Client Libraries), offer a flexible way to automate custom cost optimization tasks. These scripts can be used to analyze resource utilization data, identify idle resources, and implement right-sizing actions.

- Third-Party Tools: Third-party tools provide pre-built solutions for cost optimization, often offering advanced features and integrations.

- CloudHealth by VMware: CloudHealth provides comprehensive cost management and optimization capabilities, including automated recommendations and policy enforcement.

- CloudZero: CloudZero focuses on cost intelligence and allows for granular cost tracking and analysis.
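As an illustration of the custom Python scripts mentioned above, the following sketch turns utilization samples into a right-sizing recommendation. Everything in it is an assumption made for the example: the `SIZE_LADDER` names, the 40%/80% thresholds, and the use of the 95th percentile are placeholders, not provider defaults. A real script would fetch metrics through the provider SDK and map sizes to actual instance types.

```python
import math
from typing import List

# Hypothetical size ladder; real instance families have provider-specific names.
SIZE_LADDER = ["small", "medium", "large", "xlarge"]


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_size(
    current: str,
    p95_cpu: float,
    p95_mem: float,
    low: float = 40.0,
    high: float = 80.0,
) -> str:
    """Step one size down if both metrics are low, one size up if either is high."""
    idx = SIZE_LADDER.index(current)
    peak = max(p95_cpu, p95_mem)
    if peak > high:
        idx = min(idx + 1, len(SIZE_LADDER) - 1)
    elif peak < low:
        idx = max(idx - 1, 0)
    return SIZE_LADDER[idx]
```

Moving only one step at a time is a deliberately conservative choice: it limits the blast radius of a bad recommendation and lets the next review cycle confirm the effect before resizing further.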

Integrating Cost Optimization Automation with DevOps Practices

Integrating cost optimization automation with existing DevOps practices ensures efficiency and consistency. This integration allows cost optimization to be a seamless part of the development lifecycle.

- Infrastructure as Code (IaC): Incorporate cost optimization configurations into IaC templates (e.g., Terraform, CloudFormation). This ensures that cost-effective resource configurations are applied from the outset.

- Continuous Integration/Continuous Deployment (CI/CD): Integrate cost optimization checks into CI/CD pipelines. This includes validating resource configurations for cost efficiency before deployment. For instance, use a Terraform cost estimation tool such as Infracost to estimate the cost of infrastructure changes before they are merged.

- Monitoring and Alerting: Integrate cost optimization monitoring and alerting with existing monitoring systems. Configure alerts to notify teams of cost anomalies, resource utilization issues, and policy violations.

- Collaboration and Communication: Foster collaboration between DevOps, FinOps, and other relevant teams. Establish clear communication channels to share cost optimization insights and recommendations. Use tools like Slack or Microsoft Teams for real-time updates and notifications.

- Automated Testing: Implement automated testing to validate the effectiveness of cost optimization automation. This includes testing right-sizing scripts, idle resource detection, and cost reporting.

- Feedback Loops: Establish feedback loops to continuously improve cost optimization automation. Analyze performance data, identify areas for improvement, and refine automation scripts and policies.
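A cost check in a CI/CD pipeline, as described above, often boils down to comparing an estimated monthly cost against a baseline and a budget. The sketch below shows one possible shape for such a gate. The function name, the `CostGateResult` type, and the 10% default threshold are assumptions for illustration; the cost figures themselves would come from whichever estimation tool the pipeline uses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostGateResult:
    passed: bool
    reason: str


def check_cost_gate(
    baseline_monthly: float,
    proposed_monthly: float,
    max_increase_pct: float = 10.0,
    monthly_budget: Optional[float] = None,
) -> CostGateResult:
    """Fail the pipeline step if a change exceeds the budget or grows cost too fast."""
    if monthly_budget is not None and proposed_monthly > monthly_budget:
        return CostGateResult(
            False,
            f"estimated {proposed_monthly:.2f} exceeds budget {monthly_budget:.2f}",
        )
    if baseline_monthly > 0:
        increase_pct = (proposed_monthly - baseline_monthly) / baseline_monthly * 100
        if increase_pct > max_increase_pct:
            return CostGateResult(
                False,
                f"cost increase {increase_pct:.1f}% exceeds limit {max_increase_pct:.1f}%",
            )
    # With no baseline (a brand-new stack) only the budget check applies.
    return CostGateResult(True, "within cost policy")
```

A pipeline step could call `check_cost_gate` and exit with a non-zero status when `passed` is false, with the `reason` string posted to the team's chat channel or the pull request.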

Reviewing and Refactoring Applications for Cost Efficiency

Post-migration, optimizing cloud costs extends beyond infrastructure adjustments. A crucial element involves scrutinizing the application architecture itself. This review identifies inefficiencies inherent in the application’s design and implementation that impact resource consumption and, consequently, cloud spending. This phase often unlocks significant cost savings by refining how the application utilizes cloud resources.

Assessing Application Architecture Post-Migration

Evaluating the application architecture is vital to pinpointing areas where resource usage can be streamlined. The objective is to align application behavior with cost-effective cloud practices.

- Identifying Resource Bottlenecks: Analyzing application performance metrics (CPU utilization, memory usage, network I/O, database query times) reveals bottlenecks. These bottlenecks, often stemming from inefficient code or design flaws, drive up resource consumption and costs. For example, a database query consistently taking a long time to execute indicates potential for optimization.

- Evaluating Scalability and Elasticity: Check whether the application leverages the cloud’s inherent scalability and elasticity to their full potential. Applications should dynamically scale resources based on demand. An over-provisioned application wastes resources; conversely, an under-provisioned one suffers performance degradation and can still incur extra cost through slow responses and reactive scaling up.

- Analyzing Data Storage and Access Patterns: Data storage choices and access patterns have a significant cost impact. Frequent data access requires efficient storage tiers and optimized data retrieval strategies. An example is using a less expensive storage tier for infrequently accessed data.

- Reviewing Code for Inefficiencies: Code reviews are essential to identify inefficient algorithms, memory leaks, and other coding practices that contribute to excessive resource consumption. A memory leak, for instance, leads to ever-increasing memory usage, which necessitates more expensive instances.

Refactoring Techniques for Cost Reduction

Refactoring, the process of restructuring existing computer code, can dramatically reduce resource consumption and improve performance, leading to significant cost savings. This involves targeted improvements to application components.

- Optimizing Code for Resource Efficiency: Rewriting inefficient code segments, optimizing algorithms, and reducing unnecessary computations directly reduce resource usage. For instance, replacing a nested loop with a more efficient data structure lookup can dramatically reduce CPU usage.

- Implementing Caching Strategies: Caching frequently accessed data minimizes the need to retrieve it from the database or other external sources. This reduces database load, network I/O, and overall resource consumption. For example, caching frequently accessed product catalog data can significantly reduce database query costs.

- Refactoring Database Queries: Optimizing database queries improves their execution speed and reduces the load on the database server. This involves techniques such as indexing, query rewriting, and using appropriate data types. For instance, adding an index to a frequently filtered column can dramatically speed up query execution.

- Decomposing Monolithic Applications: Breaking down a monolithic application into smaller, independently deployable microservices can improve scalability, resilience, and resource utilization. Microservices allow for independent scaling of individual components, reducing the need to scale the entire application unnecessarily.

- Utilizing Serverless Computing: Migrating appropriate application components to serverless functions (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) can significantly reduce costs by paying only for actual compute time. Serverless architecture removes the need to provision and manage servers, leading to cost efficiencies.
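The caching strategy from the list above can be illustrated with Python's standard `functools.lru_cache`. This is a simplified sketch: `_query_product_from_db` is a hypothetical stand-in for a real database call, and `db_stats` exists only to make the saved round trips visible. An in-process cache like this never expires entries on its own, so a real catalog cache would also need invalidation or a time-to-live.

```python
from functools import lru_cache
from typing import NamedTuple

# Counts simulated database round trips (illustration only).
db_stats = {"calls": 0}


class Product(NamedTuple):
    product_id: int
    name: str


def _query_product_from_db(product_id: int) -> Product:
    """Hypothetical stand-in for an expensive database query."""
    db_stats["calls"] += 1
    return Product(product_id, f"Product {product_id}")


@lru_cache(maxsize=1024)
def get_product(product_id: int) -> Product:
    """Serve repeated lookups from memory; only cache misses reach the database."""
    return _query_product_from_db(product_id)
```

Calling `get_product(42)` several times triggers a single simulated query; `get_product.cache_info()` reports hits and misses, which is a convenient way to verify that the cache is actually absorbing load. Returning an immutable `NamedTuple` avoids callers accidentally mutating a shared cached object.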

Cost-Saving Architectural Patterns

Adopting specific architectural patterns can lead to significant cost savings by optimizing resource utilization and improving application performance.

- Event-Driven Architecture: This architecture uses asynchronous communication between components, reducing the need for constant polling and resource consumption. For example, using a message queue (e.g., AWS SQS, Azure Service Bus, Google Cloud Pub/Sub) to decouple components can reduce resource contention.

- Microservices Architecture: As mentioned above, microservices facilitate independent scaling and deployment, allowing for cost-effective resource allocation. Each microservice can be scaled independently based on its specific resource needs.

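- Stateless Applications: Stateless applications keep no session data on the individual server; any state lives in the client or in a shared external store. Because any instance can serve any request, they are easily scalable and resilient. This allows for efficient use of load balancing and auto-scaling and reduces the need for expensive per-instance persistent storage.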

- Content Delivery Network (CDN) Integration: CDNs cache static content (images, videos, etc.) closer to users, reducing the load on origin servers and minimizing data transfer costs. A CDN lowers the cost of serving content to a global audience by leveraging geographically distributed servers.

Managing Network Costs

Optimizing network costs is crucial after cloud migration to ensure overall cost efficiency and maintain application performance. Network expenses, often overlooked during initial migration, can accumulate significantly over time. Effective management involves strategic monitoring, optimization of data transfer, and leveraging content delivery networks (CDNs) to minimize costs and enhance user experience.

Data Transfer Optimization

Data transfer costs, particularly egress charges (data leaving the cloud provider’s network), can represent a substantial portion of network expenses. Minimizing these costs requires a multifaceted approach, focusing on reducing the volume of data transferred and choosing cost-effective transfer methods.

- Data Compression: Implementing data compression techniques, such as Gzip or Brotli, before transferring data can significantly reduce the amount of data transmitted. This is especially effective for text-based assets like HTML, CSS, and JavaScript files. For instance, a web application serving static content could reduce its egress costs for those files by something on the order of 30-50% by compressing them, although the actual saving depends on the content mix and on how much of it is already compressed (images and video usually are).

- Caching Strategies: Utilizing caching mechanisms, both client-side (browser caching) and server-side (caching proxies or content delivery networks), minimizes the need to re-download data repeatedly. This is particularly beneficial for frequently accessed static assets. Implementing proper caching headers (e.g., `Cache-Control: max-age`) allows browsers to store and reuse content, reducing data transfer.

- Choosing the Right Region: Selecting the cloud region closest to your users minimizes latency and, in some cases, can reduce data transfer costs. Data transfer within the same region is often cheaper or free compared to cross-region transfers. For example, if the majority of your users are in Europe, hosting your application in a European region will likely be more cost-effective.

- Optimizing Database Queries: Efficient database queries reduce the amount of data retrieved and transferred. Poorly optimized queries can result in retrieving excessive data, increasing both storage and network costs. Review and optimize database queries regularly to ensure only necessary data is retrieved.

- Batching Data Transfers: Consolidating multiple small data transfers into larger batches can reduce the overhead associated with each individual transfer. This is particularly relevant when interacting with storage services or databases. Instead of transferring numerous small files, combine them into a single archive before transfer.

- Using Private Network Connections: For high-volume data transfers between your on-premises infrastructure and the cloud, consider using private network connections like AWS Direct Connect or Azure ExpressRoute. These offer more predictable performance and often lower costs compared to transferring data over the public internet, especially for large datasets.
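To gauge how much the compression technique listed above would save for a given payload, it is easy to measure it directly. The sketch below uses Python's standard `gzip` module; the `compression_savings` helper and its result type are names introduced here for illustration, and the measured ratio will vary widely with the content.

```python
import gzip
from typing import NamedTuple


class CompressionResult(NamedTuple):
    original_bytes: int
    compressed_bytes: int
    saved_fraction: float  # share of bytes no longer transferred


def compression_savings(payload: bytes, level: int = 6) -> CompressionResult:
    """Compress a payload with gzip and report how many bytes were saved."""
    compressed = gzip.compress(payload, compresslevel=level)
    if not payload:
        # Avoid dividing by zero; an empty payload has nothing to save.
        return CompressionResult(0, len(compressed), 0.0)
    saved = 1 - len(compressed) / len(payload)
    return CompressionResult(len(payload), len(compressed), saved)


# Example: repetitive markup, similar to what templated HTML looks like.
sample_html = b"<div class='item'>hello world</div>\n" * 500
result = compression_savings(sample_html)
```

Running this against a representative sample of real responses gives a more trustworthy estimate than a generic percentage. Note that `saved_fraction` can be negative for tiny or already-compressed payloads, which is a signal to skip compression for that content type.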

Monitoring Network Traffic and Identifying Cost Savings Opportunities

Continuous monitoring of network traffic is essential to identify patterns, anomalies, and areas for cost optimization. This involves analyzing data transfer volumes, identifying top consumers of bandwidth, and understanding traffic patterns to make informed decisions about cost-saving measures.

- Utilizing Cloud Provider Tools: Leverage the monitoring and reporting tools provided by your cloud provider (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring). These tools provide detailed insights into network traffic, including data transfer volumes, bandwidth usage, and costs.

- Setting Up Alerts: Configure alerts to notify you of unusual network activity, such as spikes in data transfer or unexpected increases in costs. This allows for proactive identification and resolution of potential issues.

- Analyzing Data Transfer Patterns: Regularly analyze data transfer patterns to identify trends and anomalies. This involves examining traffic volumes over time, identifying peak usage periods, and determining the sources and destinations of data transfers.

- Identifying Top Bandwidth Consumers: Determine which services, applications, or users are consuming the most bandwidth. This helps prioritize optimization efforts and identify potential bottlenecks.

- Analyzing Egress Costs by Service: Break down egress costs by service to understand which services are contributing the most to network expenses. This information can be used to target specific services for optimization.

- Implementing Network Flow Logs: Enable network flow logs (e.g., AWS VPC Flow Logs, flow logs in Azure Network Watcher, Google Cloud VPC Flow Logs) to capture detailed information about network traffic. This data can be used for in-depth analysis and troubleshooting.

- Comparing Costs Over Time: Track network costs over time to identify trends and measure the effectiveness of optimization efforts. This allows you to quantify the impact of changes and make data-driven decisions.
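Identifying the top bandwidth consumers, as described above, is essentially an aggregation over traffic records. The following sketch assumes a simplified, hypothetical record shape (`service`, `direction`, `bytes`); real flow logs use provider-specific fields and would first need to be parsed and mapped to services.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def top_egress_consumers(
    flow_records: Iterable[Dict[str, object]],
    top_n: int = 3,
) -> List[Tuple[str, int]]:
    """Sum egress bytes per service and return the largest consumers first."""
    totals: Dict[str, int] = defaultdict(int)
    for record in flow_records:
        # Ingress is typically not billed, so only egress is counted here.
        if record["direction"] == "egress":
            totals[str(record["service"])] += int(record["bytes"])
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return ranked[:top_n]
```

Feeding the ranked output into a dashboard or a weekly report makes it straightforward to see which services should be targeted first for compression, caching, or CDN offload.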

Configuring a CDN to Reduce Data Transfer Costs and Improve Application Performance

Content Delivery Networks (CDNs) are distributed networks of servers that cache content closer to users, reducing latency and data transfer costs. Implementing a CDN is a crucial step in optimizing network costs and improving application performance, particularly for applications serving static content or media.

- Choosing a CDN Provider: Select a CDN provider based on your specific needs, including geographic coverage, pricing, features, and performance. Popular CDN providers include Amazon CloudFront, Cloudflare, and Google Cloud CDN.

- Configuring CDN for Static Content: Configure the CDN to cache static assets, such as images, videos, CSS, and JavaScript files. This involves pointing your domain or subdomains to the CDN and specifying the cache behavior (e.g., cache duration, content compression).

- Implementing Origin Shielding: Utilize origin shielding to reduce the load on your origin servers. Origin shielding involves caching content at a regional CDN node, which then serves as the origin for other CDN nodes in the region. This reduces the number of requests to your origin server.

- Using CDN for Dynamic Content (Advanced): Some CDNs offer features for caching and accelerating dynamic content. This involves using techniques like edge-side includes (ESI) and dynamic site acceleration (DSA) to improve performance.

- Monitoring CDN Performance: Monitor CDN performance metrics, such as cache hit ratio, latency, and data transfer costs. This helps you assess the effectiveness of the CDN and identify areas for improvement.

- Integrating CDN with Application: Integrate the CDN seamlessly with your application by updating your application’s configuration to serve static assets from the CDN. This can involve changing the URLs of static assets or using a CDN-aware reverse proxy.

- Regularly Reviewing and Optimizing CDN Configuration: Regularly review and optimize your CDN configuration to ensure it meets your evolving needs. This includes adjusting cache settings, updating origin server configurations, and monitoring performance metrics.
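The cache hit ratio mentioned above directly determines how much traffic still reaches the origin. The sketch below computes the ratio and a rough monthly transfer cost with a CDN in front. The pricing model and all per-GB prices are assumptions supplied by the caller, since actual rates and whether origin-to-CDN transfer is billed differ between providers.

```python
def cache_hit_ratio(hits: int, misses: int) -> float:
    """Fraction of requests served from the CDN cache."""
    total = hits + misses
    return hits / total if total else 0.0


def monthly_cost_with_cdn(
    total_gb: float,
    hit_ratio: float,
    cdn_price_per_gb: float,
    origin_to_cdn_price_per_gb: float,
) -> float:
    """Rough estimate: all user traffic is billed at the CDN rate,
    and only cache misses are additionally fetched from the origin."""
    miss_gb = total_gb * (1 - hit_ratio)
    return total_gb * cdn_price_per_gb + miss_gb * origin_to_cdn_price_per_gb


def monthly_cost_without_cdn(total_gb: float, origin_egress_price_per_gb: float) -> float:
    """Baseline: every byte leaves the origin at the origin egress rate."""
    return total_gb * origin_egress_price_per_gb
```

Comparing the two estimates for a few plausible hit ratios shows how sensitive the savings are to cache configuration, which helps justify the time spent tuning cache durations and cache keys.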

Concluding Thoughts

In conclusion, optimizing cloud costs post-migration is not a one-time event but a continuous process requiring diligence, analysis, and adaptation. By implementing the strategies outlined, including robust monitoring, resource optimization, and automated processes, organizations can achieve significant cost savings and maximize the return on their cloud investments. Continuous evaluation and refinement of these strategies, coupled with a proactive approach to cost management, are key to maintaining a lean and efficient cloud environment.

Ultimately, this approach fosters a more sustainable and cost-effective cloud strategy, enabling organizations to fully leverage the benefits of cloud computing.

FAQ Compilation

What is the most important metric to track immediately after migration?

Immediately after migration, focus on tracking overall spending, resource utilization (CPU, memory, storage), and data transfer costs to establish a baseline and identify immediate cost anomalies.

How often should I review my cloud resources for right-sizing?

Regularly review your resources, ideally weekly or monthly, depending on workload volatility. Use automation to identify underutilized resources continuously.

What are the primary differences between Reserved Instances and Savings Plans?

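Reserved Instances offer discounts for committing to a specific instance configuration (for example, instance type and region) for a set period, while Savings Plans offer discounts for committing to a consistent amount of hourly spend and, depending on the plan type, apply across instance families and regions, offering more adaptability.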

How can I accurately allocate cloud costs to different departments?

Implement a comprehensive tagging strategy, applying tags to all resources. Then, use cloud provider cost allocation tools to generate reports and allocate costs based on tags.

What are the key benefits of automating cost optimization processes?

Automation reduces manual effort, ensures consistent application of optimization strategies, and enables real-time responses to cost fluctuations, leading to greater efficiency and savings.