This guide covers Change Data Capture (CDC) and how Debezium, an open-source distributed platform, implements it to stream and synchronize database changes in real time. It walks through Debezium’s core components, its configuration, and its more advanced functionality.

This guide offers a step-by-step approach to setting up Debezium, configuring database connectors, and transforming data. You’ll learn how to publish change events to message brokers like Kafka, build robust data pipelines, and effectively monitor and troubleshoot Debezium deployments. Furthermore, we’ll discuss schema evolution, advanced configuration options, and real-world use cases, equipping you with the knowledge to leverage Debezium for auditing, data synchronization, and data warehousing.

Introduction to Debezium and Change Data Capture (CDC)

Change Data Capture (CDC) is a powerful paradigm for tracking and propagating database changes in real-time. It’s become a cornerstone of modern data architectures, enabling a variety of use cases from data warehousing and real-time analytics to event-driven architectures and database replication. By capturing and streaming changes as they occur, CDC minimizes latency and allows for immediate reactions to data modifications.

Change Data Capture (CDC) Concept and Benefits

CDC focuses on identifying and capturing changes made to data within a database. These changes, typically inserts, updates, and deletes (the write operations of CRUD), are then made available for consumption by downstream systems. This contrasts with batch processing, where data is extracted and processed periodically, leading to delays in data availability. CDC offers several benefits that make it attractive for data-driven applications.

- Real-time Data Availability: CDC provides near real-time data synchronization, reducing the latency between data modification and its availability in other systems. This is critical for applications requiring up-to-the-minute information, such as fraud detection and real-time dashboards.

- Reduced Impact on Source Systems: CDC typically employs mechanisms that minimize the load on the source database. This can be achieved by reading transaction logs, which are designed for efficient change tracking, rather than querying the database directly.

- Data Integration: CDC facilitates seamless data integration across various systems and platforms. Changes captured from a source database can be easily streamed to data warehouses, data lakes, or other applications.

- Event-Driven Architectures: CDC enables the creation of event-driven architectures, where changes in the database trigger specific actions or processes. This allows for building responsive and scalable applications.

- Improved Data Consistency: By capturing changes in a consistent manner, CDC helps maintain data consistency across different systems. This is particularly important for applications where data accuracy is critical.

Debezium Overview

Debezium is an open-source distributed platform for change data capture. It monitors databases and publishes the changes to Apache Kafka, making it an ideal solution for building real-time data pipelines. Debezium provides a robust and reliable way to capture database changes and stream them to various downstream consumers. It supports a wide range of databases and provides a flexible and scalable architecture.

Debezium Core Components

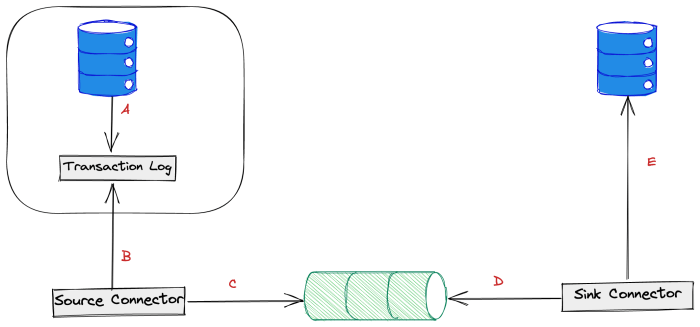

Debezium’s architecture is built around several key components that work together to capture and stream database changes. Understanding these components is crucial for effectively deploying and managing Debezium.

- Connectors: Connectors are the heart of Debezium. Each connector is specifically designed to monitor a particular database system (e.g., MySQL, PostgreSQL, MongoDB). The connector connects to the database, reads the transaction logs, and converts the changes into a standardized format.

- Kafka Connect: Debezium leverages Apache Kafka Connect, a framework for streaming data between various systems and Apache Kafka. Kafka Connect provides the infrastructure for running Debezium connectors, managing their lifecycle, and handling data transformation.

- Kafka: Apache Kafka serves as the central message broker for Debezium. The connectors publish the captured change events to Kafka topics. Downstream consumers can then subscribe to these topics to receive and process the changes.

- Change Events: Debezium transforms database changes into a standardized format called change events. These events contain information about the change, including the operation performed (insert, update, delete), the table affected, and the before and after values of the data. This standardized format simplifies the processing of changes by downstream consumers.
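To make the event format concrete, here is a minimal Python sketch that summarizes one change event as produced with the JSON converter. The field names `op`, `before`, `after`, and `source` follow Debezium’s event envelope; the sample payload and the `describe_change` helper are invented for illustration.

```python
import json

# Debezium's single-letter operation codes.
OPERATIONS = {"c": "insert", "u": "update", "d": "delete", "r": "snapshot read"}


def describe_change(raw_event: str) -> dict:
    """Summarize one change event (hypothetical helper, not part of Debezium)."""
    event = json.loads(raw_event)
    # When the JSON converter embeds schemas, the envelope sits under "payload".
    payload = event.get("payload", event)
    source = payload.get("source") or {}
    return {
        "operation": OPERATIONS.get(payload.get("op"), "unknown"),
        "table": source.get("table"),
        "before": payload.get("before"),
        "after": payload.get("after"),
    }


# Illustrative update event; all values are made up.
sample = json.dumps({
    "op": "u",
    "source": {"db": "inventory", "table": "products"},
    "before": {"id": 101, "name": "scooter"},
    "after": {"id": 101, "name": "electric scooter"},
})
summary = describe_change(sample)
print(summary["operation"], summary["table"])
```

A real event carries more metadata (timestamps, log positions, transaction details), which this sketch ignores.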

Setting Up the Environment for Debezium

To effectively utilize Debezium for Change Data Capture (CDC), establishing a robust environment is crucial. This involves installing the necessary software, configuring the connectors, and understanding the associated dependencies. This section provides a comprehensive guide to setting up your environment for Debezium.

Prerequisites for Deploying Debezium

Before deploying Debezium, ensure that your system meets the following prerequisites. Meeting these requirements is essential for the smooth operation of Debezium and its connectors.

- Java Runtime Environment (JRE) or Java Development Kit (JDK): Debezium is a Java-based project, so a compatible Java runtime is required. The recommended version is Java 11 or later. Verify the installation by running `java -version` in your terminal.

- Apache Kafka: Debezium relies heavily on Apache Kafka for storing and streaming change events. A running Kafka cluster is required: at least one broker, plus ZooKeeper if your Kafka version still depends on it. Kafka version 2.0 or later is generally recommended. You can verify Kafka’s installation and operational status by using the Kafka command-line tools, such as `kafka-topics.sh`.

- Kafka Connect: Kafka Connect is the framework that Debezium uses to connect to databases and stream changes. It ships with Apache Kafka and needs to be installed and configured to run against your Kafka cluster. Kafka Connect manages the connectors and their configurations.

- Database System: The database system from which you intend to capture changes must be accessible. Supported databases include MySQL, PostgreSQL, MongoDB, SQL Server, and others. The specific requirements depend on the database system. For example, for MySQL, you will need to enable the binary log.

- Database Driver: The appropriate JDBC driver for the database you’re capturing changes from must be available in Kafka Connect’s classpath. For example, the MySQL Connector/J is required for MySQL.

- Operating System: While Debezium is platform-independent, ensure your operating system has sufficient resources (CPU, memory, disk space) to handle the database load and the change data streaming.

Installing and Configuring Debezium Connectors

Installing and configuring Debezium connectors involves several steps. The process depends on the specific database you are working with. This guide outlines the general steps involved.

- Download the Connector Plugin: Download the appropriate Debezium connector plugin for your database from the Maven repository or the Debezium website. For instance, to use the MySQL connector, download the relevant JAR file.

- Install the Connector Plugin: Place the downloaded JAR file into the Kafka Connect plugin path. This path is typically defined in the Kafka Connect configuration (e.g., `plugin.path`).

- Configure Kafka Connect: Modify the Kafka Connect configuration file (e.g., `connect-standalone.properties` or `connect-distributed.properties`). Configure the following properties:

  - `plugin.path`: Specifies the directory where the connector plugins are located.

  - `bootstrap.servers`: Defines the Kafka brokers’ addresses.

  - `key.converter`: Sets the key converter. `org.apache.kafka.connect.json.JsonConverter` is often used.

  - `value.converter`: Sets the value converter. `org.apache.kafka.connect.json.JsonConverter` is commonly used.

- Configure the Connector: Create a configuration file (usually JSON format) for your Debezium connector. This file will specify the database connection details, the tables to capture changes from, and other relevant settings.

- Start Kafka Connect: Start Kafka Connect in standalone mode (for testing) or distributed mode (for production). Use the command-line tools provided with Kafka.

- Submit the Connector Configuration: Submit the connector configuration to Kafka Connect using the Kafka Connect REST API. This will start the connector and begin capturing changes from your database. You can use tools like `curl` to send the configuration.

- Verify Data Streaming: Monitor the Kafka topics to which the change data is being streamed. Use the Kafka command-line tools to consume messages from the topics and verify that the change events are being captured correctly.
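The submission step can also be scripted. The sketch below builds the `POST /connectors` request that the Kafka Connect REST API expects, using only the Python standard library. The worker address `http://localhost:8083` (Connect’s default REST port) and the `build_register_request` helper are assumptions for illustration; the call that actually sends the request is left commented out because it needs a running worker.

```python
import json
import urllib.request


def build_register_request(connect_url: str, name: str, config: dict) -> urllib.request.Request:
    """Build the HTTP request that registers a connector (hypothetical helper)."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        url=f"{connect_url.rstrip('/')}/connectors",
        data=body,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


request = build_register_request(
    "http://localhost:8083",  # assumed address of the Connect worker
    "mysql-connector",
    {"connector.class": "io.debezium.connector.mysql.MySqlConnector"},
)

# To submit it, uncomment the lines below once a Connect worker is running:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Keeping request construction separate from sending makes the payload easy to inspect before anything touches the cluster.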

Configuration Properties for a Database Source Connector

Configuring a database source connector requires defining various properties to specify how the connector interacts with the database. The specific properties will vary slightly depending on the database system, but there are common properties across all connectors.

- connector.class: Specifies the class of the Debezium connector to use. For example, for MySQL, it would be `io.debezium.connector.mysql.MySqlConnector`.

- database.hostname: The hostname or IP address of the database server.

- database.port: The port number of the database server.

- database.user: The username for connecting to the database.

- database.password: The password for connecting to the database.

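- database.server.id: A unique numeric ID that the connector uses when it joins the MySQL cluster as a replication client (MySQL connector only). It must differ from the `server-id` of every MySQL server and of any other replication client in that cluster.

- database.server.name: A logical name for the database server. This is used to generate the Kafka topic names. This is the property name before Debezium 2.0; later versions use topic.prefix instead.

- database.history.kafka.bootstrap.servers: The address of the Kafka brokers used for storing the database schema history (property name before Debezium 2.0).

- database.history.kafka.topic: The Kafka topic name for storing the database schema history (property name before Debezium 2.0).

- table.include.list: A comma-separated list of regular expressions that match the fully-qualified names of the tables to include in the change data capture.

- table.exclude.list: A comma-separated list of regular expressions that match the fully-qualified names of the tables to exclude from the change data capture. Set either the include list or the exclude list, not both.

- topic.prefix: A logical name for the server, used as the prefix of the Kafka topic names the connector writes to (Debezium 2.0 and later; replaces database.server.name).

- schema.history.internal.kafka.bootstrap.servers: The address of the Kafka brokers used for storing the schema history (Debezium 2.0 and later; replaces database.history.kafka.bootstrap.servers).

- schema.history.internal.kafka.topic: The Kafka topic name for storing the schema history (Debezium 2.0 and later; replaces database.history.kafka.topic).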
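Because the list above mixes older and newer property names, a small helper can translate a legacy configuration. This is a sketch only: the mapping reflects the renames introduced around Debezium 2.0 as described in its release notes, the `modernize` function is hypothetical, and the result should be checked against the documentation for the version you actually run.

```python
# Old property name -> new property name (assumed from the Debezium 2.0 renames).
RENAMED_KEYS = {
    "database.server.name": "topic.prefix",
    "database.whitelist": "database.include.list",
    "database.blacklist": "database.exclude.list",
    "table.whitelist": "table.include.list",
    "table.blacklist": "table.exclude.list",
}
# Whole families of properties that moved to a new prefix.
RENAMED_PREFIXES = {
    "database.history.": "schema.history.internal.",
}


def modernize(config: dict) -> dict:
    """Return a copy of `config` with legacy property names replaced."""
    updated = {}
    for key, value in config.items():
        if key in RENAMED_KEYS:
            key = RENAMED_KEYS[key]
        else:
            for old, new in RENAMED_PREFIXES.items():
                if key.startswith(old):
                    key = new + key[len(old):]
                    break
        updated[key] = value
    return updated


legacy = {
    "database.hostname": "mysql-server",
    "database.server.name": "mysql_db",
    "database.history.kafka.topic": "schema-changes",
}
print(modernize(legacy))
```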

Example: For a MySQL connector, a basic configuration using the Debezium 2.x property names might look like this (in JSON format):

    {
      "name": "mysql-connector",
      "config": {
        "connector.class": "io.debezium.connector.mysql.MySqlConnector",
        "database.hostname": "mysql-server",
        "database.port": "3306",
        "database.user": "debezium",
        "database.password": "dbz",
        "database.server.id": "18405",
        "topic.prefix": "mysql_db",
        "table.include.list": "db.table1,db.table2",
        "schema.history.internal.kafka.bootstrap.servers": "kafka:9092",
        "schema.history.internal.kafka.topic": "schema-changes"
      }
    }

Database Source Connectors

Debezium’s strength lies in its ability to capture changes from various database systems. This is achieved through source connectors, which are specialized components designed to interact with specific database types. Configuring these connectors is crucial for extracting and streaming change data.

Understanding the configuration process is vital for a successful Debezium implementation. Each connector requires specific parameters tailored to the target database, enabling it to connect, monitor, and capture changes effectively.

Configuring a Connector for a Specific Database System

Configuring a Debezium connector involves defining connection parameters, filtering options, and schema handling settings. The specific configuration options vary depending on the database system. However, the general process remains consistent: provide the necessary information for the connector to establish a connection and identify the data to be captured.

For each database system, the connector configuration requires different properties. These properties typically include connection details, database filtering, and data transformation configurations.

- Connection Details: This includes the hostname, port, username, and password for the database. These settings enable the connector to authenticate and connect to the database instance.

- Database Filtering: Specify which databases and tables the connector should monitor for changes. This is crucial for focusing on relevant data and minimizing the amount of data transferred.

- Data Transformation: Debezium allows you to transform data before it’s streamed. This can involve filtering specific fields, renaming columns, or adding computed values.

Defining Connection Parameters

Defining connection parameters is a fundamental step in configuring any Debezium source connector. These parameters provide the necessary information for the connector to connect to the target database instance. Incorrect or missing connection parameters will prevent the connector from establishing a connection and capturing change data.

Connection parameters typically include:

- `database.hostname`: The hostname or IP address of the database server. For example, `localhost` or `192.168.1.100`.

- `database.port`: The port number on which the database server is listening. For MySQL, the default port is 3306. For PostgreSQL, it’s 5432.

- `database.user`: The username used to authenticate with the database server. This user must have the necessary permissions to read the database logs and tables.

- `database.password`: The password associated with the specified username. This is used for authentication.

- `database.include.list` (formerly `database.whitelist`): A comma-separated list of regular expressions matching the names of the databases to capture changes from.

- `table.include.list` (formerly `table.whitelist`): A comma-separated list of regular expressions matching the fully-qualified names of the tables to capture changes from.

These parameters are crucial for establishing a secure and reliable connection to the database.

Configuration Example for a MySQL Connector

Here’s a configuration example for a MySQL connector, illustrating the settings required to capture changes from a specific database and table:

```json
{
  "name": "mysql-connector",
  "config": {
    "connector.class": "io.debezium.connector.mysql.MySqlConnector",
    "tasks.max": "1",
    "database.hostname": "mysql-server",
    "database.port": "3306",
    "database.user": "debezium",
    "database.password": "dbz",
    "database.server.id": "184054",
    "database.server.name": "my-mysql-server",
    "database.include.list": "inventory",
    "table.include.list": "inventory.products",
    "database.history.kafka.bootstrap.servers": "kafka:9092",
    "database.history.kafka.topic": "schema-changes.inventory"
  }
}
```

This configuration example showcases several key settings:

- `connector.class`: Specifies the connector class to be used, in this case, the MySQL connector.

- `database.hostname`: Defines the hostname of the MySQL server (`mysql-server`).

- `database.port`: Specifies the port number for the MySQL server (3306).

- `database.user`: Sets the username for database authentication (`debezium`).

- `database.password`: Sets the password for the database user (`dbz`).

- `database.server.id`: A unique numeric ID that the connector uses when it joins the MySQL cluster as a replication client. It must not collide with the `server-id` of any MySQL server or other replication client.

- `database.server.name`: The logical name of the MySQL server. This is used as the prefix for Kafka topics. It is the pre-2.0 property name; Debezium 2.x calls it `topic.prefix`.

- `database.include.list`: Specifies the database to capture changes from (`inventory`).

- `table.include.list`: Specifies the table within the database to capture changes from (`inventory.products`).

- `database.history.kafka.bootstrap.servers`: Defines the Kafka brokers for storing the database schema history (`schema.history.internal.kafka.bootstrap.servers` in Debezium 2.x).

- `database.history.kafka.topic`: Specifies the Kafka topic used to store the database schema history (`schema.history.internal.kafka.topic` in Debezium 2.x).

This configuration captures changes from the `products` table within the `inventory` database and streams them to Kafka for further processing. With the default topic naming, the events land in the topic `my-mysql-server.inventory.products`.

Data Transformation and Filtering with Debezium

Debezium provides powerful mechanisms for transforming and filtering change data events before they reach their destination. This functionality is crucial for tailoring the data stream to specific needs, such as masking sensitive information, enriching events with additional context, or filtering out irrelevant changes. Effective data transformation and filtering ensures that only the necessary and relevant data is processed downstream, optimizing resource utilization and improving data quality.

Filtering Events Based on Schema or Content

Filtering events allows you to control which data changes are propagated, reducing the volume of data processed and improving efficiency. This can be achieved using various methods, including filtering based on the schema of the data and filtering based on the content of the data.

Filtering based on schema is valuable when you want to exclude entire tables or specific columns from the change data stream. This is often used to remove sensitive information or reduce data volume.

- Using `table.include.list` and `table.exclude.list`: These connector configuration properties allow you to specify which tables to include or exclude from the capture. For example, setting `table.exclude.list=inventory.products_audit` would prevent changes to the `products_audit` table from being captured.

- Using `column.include.list` and `column.exclude.list`: These properties allow you to specify which columns within a table to include or exclude. For instance, `column.exclude.list=inventory.products.description,inventory.products.price` would exclude the `description` and `price` columns from the `products` table.
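The include and exclude lists are comma-separated regular expressions that must match the whole table identifier, not just a substring. The Python sketch below imitates that behaviour to show which tables a given setting would select. The `is_captured` helper is hypothetical, Python’s regex dialect differs slightly from the Java one Debezium uses, and the naive comma split would break on patterns that themselves contain commas.

```python
import re


def is_captured(table_id: str, include_list: str = "", exclude_list: str = "") -> bool:
    """Decide whether a fully-qualified table name passes the filters."""

    def matches(patterns: str) -> bool:
        # Each comma-separated entry must match the entire identifier.
        return any(
            re.fullmatch(pattern.strip(), table_id)
            for pattern in patterns.split(",")
            if pattern.strip()
        )

    if include_list:
        return matches(include_list)
    if exclude_list:
        return not matches(exclude_list)
    return True  # no filter configured: capture everything


print(is_captured("inventory.products", include_list="inventory.products"))
print(is_captured("inventory.products_audit", include_list="inventory.products"))
```

Because matching is anchored, including `inventory.products` does not also pull in `inventory.products_audit`.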

Filtering based on content is useful when you need to process only a subset of changes based on the data within the event itself. This allows for more granular control over the data stream.

- Using a filtering Single Message Transform (SMT): An SMT can evaluate a condition against each event and drop the events that do not match. Kafka Connect’s built-in predicates only look at the topic name, the headers, or whether a record is a tombstone, so conditions on field values usually rely on Debezium’s own `Filter` SMT (`io.debezium.transforms.Filter`), which evaluates a scripted expression. For example, an SMT could be configured to only forward updates where a product’s `status` is ‘active’.

- Example: Filtering based on a column value: Suppose you only want to capture updates to orders where the `order_status` is “shipped.” You could use an SMT that evaluates the `order_status` field and drops any messages where it’s not “shipped.”

Applying Transformations Using Single Message Transforms (SMTs)

Single Message Transforms (SMTs) are a powerful feature in Kafka Connect that allows you to modify individual messages as they pass through the connector. Debezium leverages SMTs to perform various transformations, including data masking, enrichment, and filtering.

- Masking Sensitive Data: SMTs can be used to redact sensitive information, such as personally identifiable information (PII), before it’s written to a downstream system. This is crucial for compliance with data privacy regulations.

  - Example: Masking email addresses: Kafka Connect’s built-in `MaskField` SMT can overwrite a field with a fixed value. It works on top-level fields, so with Debezium’s nested event envelope it is applied after the event has been unwrapped, here with Debezium’s `ExtractNewRecordState` SMT. The configuration might look like this:

```json
{
  "transforms": "unwrap,mask_email",
  "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
  "transforms.mask_email.type": "org.apache.kafka.connect.transforms.MaskField$Value",
  "transforms.mask_email.fields": "email",
  "transforms.mask_email.replacement": "***@masked.com"
}
```

This configuration replaces the value of the `email` field with `***@masked.com`.

- Enriching Data: SMTs can add additional context to events. Because an SMT runs inline for every record, lookups against external sources add latency and a failure point to the connector; such enrichment is often better placed in a downstream stream processor.

  - Example: Adding geographical location: You can enrich events with the geographical location of a customer by looking up the customer’s location based on their customer ID. This could involve making a call to a geolocation service or a database, and would require a custom SMT or a downstream processing step.

- Extracting Nested Data: SMTs can extract data from nested structures within the change event.

  - Example: Flattening nested data: The `Flatten` SMT flattens nested structures in a record by joining the field names with a delimiter, making the data easier to query and analyze. It does not parse JSON that the source database stores as a string in a column; such a value has to be parsed first.

- Using the `Filter` SMT: This SMT allows for filtering messages based on conditions. Kafka Connect’s `Filter` drops every record selected by an attached predicate, while Debezium’s `Filter` keeps the records for which a scripted condition is true.

  - Example: Filtering based on multiple conditions: You can configure a `Filter` SMT to drop messages where a specific field has a particular value *and* another field meets another condition. This provides fine-grained control over the data stream.
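The effect of chaining such transformations can be imitated in plain Python. The sketch below is not how Kafka Connect implements SMTs; it only mirrors the idea of unwrapping an event, filtering on two conditions, and masking a field. The field names `status`, `region`, and `email`, and all function names, are hypothetical.

```python
import copy
from typing import Optional


def unwrap(event: dict) -> Optional[dict]:
    """Keep only the row state after the change (None for deletes)."""
    return copy.deepcopy(event.get("after"))


def keep(row: dict) -> bool:
    """Hypothetical filter: the row must be active AND belong to the EU region."""
    return row.get("status") == "active" and row.get("region") == "EU"


def mask_field(row: dict, field: str, replacement: str) -> dict:
    """Overwrite a non-null field with a fixed replacement value."""
    masked = dict(row)
    if masked.get(field) is not None:
        masked[field] = replacement
    return masked


def apply_chain(event: dict) -> Optional[dict]:
    """Run the transformations in order; None means the record is dropped."""
    row = unwrap(event)
    if row is None or not keep(row):
        return None
    return mask_field(row, "email", "***@masked.com")


event = {
    "op": "u",
    "after": {"id": 1, "email": "jane@example.org", "status": "active", "region": "EU"},
}
print(apply_chain(event))
```

As in a real transform chain, order matters: masking after filtering avoids doing work on records that are dropped anyway.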

Strategies for Handling Data Types and Schema Evolution

Managing data types and schema evolution is crucial for ensuring data consistency and preventing errors. Debezium provides several strategies for handling these challenges.

- Schema Registry Integration: Using a schema registry, such as Confluent Schema Registry or Apicurio Registry, is highly recommended. A schema registry stores the schemas for your data and ensures that producers and consumers of the data agree on the data’s structure.

  - Benefits: Schema versions are tracked in one place. Schema compatibility checks prevent incompatible changes. Data serialization and deserialization are handled efficiently.

- Schema Evolution Strategies: When the schema of your source database changes, you need to decide how to handle the changes in your change data stream. Common cases include:

  - Adding new fields: Consumers that are unaware of the new fields will simply ignore them. This is a backward-compatible change.

  - Removing fields: This is a breaking change and should be handled carefully. Consumers might need to be updated to accommodate the change.

  - Changing data types: This is also a breaking change. Consumers will need to be updated to handle the new data type.

  - Delete events versus dropped columns: A delete event describes a deleted row, not a schema change. When a column is removed from a table, the events produced afterwards simply no longer contain that field, and the schema attached to the events changes accordingly.

- Data Type Conversion: Debezium can handle some data type conversions automatically, but you may need to configure custom conversions using SMTs or custom converters.

  - Example: Converting timestamps: You might need to convert timestamps from the source database’s time zone to UTC for consistency. Kafka Connect’s `TimestampConverter` SMT converts between timestamp representations (for example, epoch values and formatted strings); for time-zone shifts, recent Debezium releases provide a `TimezoneConverter` SMT, so check the documentation for your version.

- Handling Null Values: Be mindful of null values. Ensure your consumers handle null values correctly, or replace them with default values before they reach the consumer.

  - Example: Replacing null values with a default: The built-in `ReplaceField` SMT only renames, includes, or excludes fields; it does not substitute values. Replacing nulls in a specific field with a default such as “Unknown” or 0 therefore takes a custom SMT or a step in the consumer.

- Monitoring Schema Changes: Implement monitoring to detect schema changes in your source database. This allows you to proactively address potential compatibility issues.

  - Tools: Use monitoring tools to track schema changes and receive alerts when they occur. These tools can often integrate with your schema registry.
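On the consumer side, several of these concerns can be handled by reading rows defensively. The following Python sketch shows one way to do that: unknown fields are ignored, missing or null fields fall back to defaults, and types are converted explicitly. The `read_product` function and the column names `id`, `name`, and `weight` are hypothetical.

```python
from typing import Optional


def read_product(row: dict) -> dict:
    """Map a row image from a change event onto the fields this consumer needs."""
    weight: Optional[float] = None
    if row.get("weight") is not None:
        # Explicit conversion guards against a source type change (e.g. string to number).
        weight = float(row["weight"])
    return {
        "id": int(row["id"]),                    # required: fail loudly if absent
        "name": row.get("name") or "Unknown",    # null or missing -> default value
        "weight": weight,                        # optional: stays None when absent
    }


# Row with an extra column the consumer has never seen, and a null name.
print(read_product({"id": "101", "name": None, "weight": "3.14", "color": "red"}))
```

Only fields the consumer genuinely cannot do without are read with `row[...]`, so an added or dropped optional column does not break processing.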

Publishing Data to Message Brokers (e.g., Kafka)

After capturing changes from your databases using Debezium, the next crucial step is publishing these change events to a message broker. This enables downstream applications and services to react to data modifications in real-time, facilitating event-driven architectures and data synchronization. This section details how Debezium integrates with message brokers, particularly Kafka, to distribute change data effectively.

Publishing Change Events to Kafka

The process of publishing change events to a message broker, such as Kafka, involves several key steps, transforming database changes into a stream of events that other systems can consume.

- Event Generation: Debezium connectors, after capturing changes from the database, generate change events. These events contain details about the data modifications, including the type of operation (insert, update, delete), the affected table, and the data itself (both before and after images).

- Serialization: The change events are serialized into a specific format, typically JSON or Avro, to facilitate efficient transmission over the network. Avro is often preferred for its schema evolution capabilities, allowing for changes in the data structure over time without breaking compatibility.

- Publishing to Kafka: Kafka Connect, acting as a bridge, receives these serialized events from the Debezium connectors. Kafka Connect then publishes these events to designated Kafka topics. Each topic represents a specific table or a set of tables.

- Consumer Consumption: Consumers subscribe to the relevant Kafka topics and consume the change events. These consumers can be various applications or services that need to react to the data changes, such as data warehouses, search indexes, or other microservices.

Kafka Topic Configuration

Properly configuring Kafka topics is essential for efficient data streaming and consumption. The topic configuration should align with the structure and requirements of the change data.

- Topic Naming: A consistent naming convention is crucial. By default, Debezium names each topic after the captured table, in the form `<topic_prefix>.<database_name>.<table_name>` for MySQL or `<topic_prefix>.<schema_name>.<table_name>` for PostgreSQL. For instance, `mysql_db.inventory.products`. This allows for easy identification and routing of change events.

- Partitioning: Partitioning determines how the data is distributed across Kafka brokers. The choice of partitioning strategy depends on the data volume and the need for parallel processing. Common strategies include:

- Key-based Partitioning: Using a primary key or a unique identifier from the data as the partition key. This ensures that all events related to the same entity (e.g., a specific product) are routed to the same partition, maintaining data order.

- Round-Robin Partitioning: Distributing data evenly across all partitions. This is suitable when the order of events is not critical.

- Replication Factor: Setting a replication factor (e.g., 3) ensures data durability and availability. Kafka replicates data across multiple brokers, so if one broker fails, the data remains available on other brokers.

- Compression: Enabling compression (e.g., using GZIP, Snappy, or LZ4) can significantly reduce the size of the data transmitted and stored in Kafka, improving performance and reducing storage costs.
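Two of the points above, topic naming and key-based partitioning, are easy to illustrate in Python. The naming function follows Debezium’s default `<prefix>.<database>.<table>` pattern for MySQL. The partition function is illustrative only: it uses CRC32 to stay within the standard library, whereas Kafka’s default partitioner hashes keys with murmur2, so the partition numbers will not match a real cluster.

```python
import zlib


def topic_name(prefix: str, database: str, table: str) -> str:
    """Default-style topic name for a captured MySQL table."""
    return f"{prefix}.{database}.{table}"


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition (CRC32 stand-in for Kafka's murmur2)."""
    return zlib.crc32(key) % num_partitions


topic = topic_name("mysql_db", "inventory", "products")
key = b'{"id": 101}'  # change events are keyed by the row's primary key
print(topic, choose_partition(key, 6))
```

Whatever the hash function, the property that matters is determinism: every event for the same primary key lands in the same partition, which preserves per-row ordering.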

Kafka Connect and Debezium Integration

Kafka Connect is the cornerstone of Debezium’s integration with Kafka, providing a scalable and reliable framework for moving data between various systems and Kafka.

- Connector Configuration: Kafka Connect relies on connector configurations to define how data is ingested and published. For Debezium, these configurations specify:

- The Debezium connector to use (e.g., MySQL, PostgreSQL).

- The database connection details (host, port, username, password).

- The topic prefix (logical server name) from which the Kafka topic names for the change events are derived.

- The serialization format (e.g., JSON, Avro).

- Filtering and transformation rules (optional).

- Workers and Tasks: Kafka Connect operates using worker processes that manage the connectors. Each connector runs one or more tasks, which are responsible for reading data from the source system (database) and writing it to Kafka. How far the number of tasks can be scaled depends on the connector; the MySQL connector, for example, always uses a single task.

- Monitoring and Management: Kafka Connect provides APIs and tools for monitoring the performance and health of connectors. This includes metrics like data throughput, error rates, and lag, enabling proactive management and troubleshooting. Tools like Prometheus and Grafana can be used for advanced monitoring and alerting.

- Example Configuration Snippet (MySQL Debezium Connector):

      {
        "name": "inventory-connector",
        "config": {
          "connector.class": "io.debezium.connector.mysql.MySqlConnector",
          "tasks.max": "1",
          "database.hostname": "mysql",
          "database.port": "3306",
          "database.user": "debezium",
          "database.password": "dbz",
          "database.server.id": "184054",
          "database.server.name": "inventory",
          "database.include.list": "inventory",
          "database.history.kafka.bootstrap.servers": "kafka:9092",
          "database.history.kafka.topic": "schema-changes.inventory"
        }
      }

This configuration defines a MySQL connector named `inventory-connector` that captures changes from the `inventory` database and publishes them to Kafka. The `database.server.name` specifies the logical name for the database, which is used as the prefix of the Kafka topic names (Debezium 2.x renames this property to `topic.prefix`, and the `database.history.*` properties to `schema.history.internal.*`). The `database.history.kafka.topic` configures where the schema changes are stored. The example uses a single task; the MySQL connector always runs one task, so raising `tasks.max` has no effect for it.

Consuming Change Events and Data Integration

Change Data Capture (CDC) is only half the battle. The true value of Debezium lies in how you utilize the change events it captures. This section explores the methods for consuming these events from your message broker, building data pipelines, and integrating the captured data into various systems for analysis, reporting, and other crucial business functions. Efficient consumption and integration are critical to leveraging the real-time insights provided by CDC.

Methods for Consuming Change Events

Once Debezium has captured changes and published them to a message broker like Kafka, the next step is consuming these events. Several approaches exist, each with its advantages and disadvantages depending on your specific needs and the complexity of your data pipeline.

- Kafka Clients: The most fundamental method involves using standard Kafka client libraries (e.g., Kafka Java Client, Kafka Python Client). These clients allow you to subscribe to topics, receive messages, and process the change events. This provides the most flexibility and control over the consumption process.

- Kafka Streams: Kafka Streams is a stream processing library built on top of Kafka. It simplifies the processing of Kafka topics by providing abstractions for common stream processing tasks like filtering, aggregation, and joining. Kafka Streams offers a more declarative approach to building data pipelines, making it easier to reason about and maintain. It’s particularly well-suited for real-time transformations and aggregations of change events.

- ksqlDB: ksqlDB is a stream processing database built on top of Kafka. It provides a SQL-like interface for querying and processing Kafka topics. This allows developers to easily define streams and tables, perform joins, and execute complex aggregations without writing custom code. ksqlDB is a good choice for users who are familiar with SQL and want a straightforward way to build stream processing applications.

- Third-Party Connectors: Several third-party tools and connectors are designed specifically for consuming and processing Debezium events. These connectors often offer pre-built integrations with various data warehouses, databases, and other systems, simplifying the integration process. Examples include tools like Apache NiFi, which offers a visual interface for building data flows and integrates with Kafka and various data stores.
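Whichever consumption method you choose, the core logic is the same: read each message and apply it to some target. The Python sketch below keeps an in-memory copy of a table up to date from a sequence of change events. It deliberately omits the Kafka client wiring so it runs on its own; `apply_change` is a hypothetical handler you would call from your consumer loop with each message’s key and value.

```python
import json
from typing import Optional


def apply_change(replica: dict, key: str, value: Optional[bytes]) -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    if value is None:
        # Tombstone: a null-valued record that follows a delete event.
        replica.pop(key, None)
        return
    event = json.loads(value)
    payload = event.get("payload", event)  # envelope may be nested under "payload"
    if payload["op"] == "d":
        replica.pop(key, None)
    else:
        # "c" (create), "u" (update), and "r" (snapshot read) all carry the new row.
        replica[key] = payload["after"]


# Simulated message stream: (key, value) pairs as a consumer would deliver them.
messages = [
    ("101", json.dumps({"op": "c", "after": {"id": 101, "name": "scooter"}}).encode()),
    ("102", json.dumps({"op": "c", "after": {"id": 102, "name": "hammer"}}).encode()),
    ("101", json.dumps({"op": "u", "after": {"id": 101, "name": "e-scooter"}}).encode()),
    ("102", json.dumps({"op": "d", "before": {"id": 102}, "after": None}).encode()),
    ("102", None),
]
replica: dict = {}
for key, value in messages:
    apply_change(replica, key, value)
print(replica)
```

Handling the null-valued tombstone explicitly matters, because a consumer that blindly parses every value as JSON will fail on it.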

Building Data Pipelines with Change Events

Building effective data pipelines is essential for transforming raw change events into actionable insights. A well-designed pipeline ensures data is processed, transformed, and delivered to the target systems in a timely and reliable manner.

A typical data pipeline might involve the following stages:

- Consumption: This is the initial stage where change events are read from the Kafka topics. The consumer application subscribes to the relevant topics and receives the messages.

- Deserialization: Change events are typically serialized in formats like JSON or Avro. The deserialization stage converts these serialized messages into a usable format, such as Java objects or Python dictionaries.

- Transformation: This is where the core data processing logic happens. Transformations can include filtering irrelevant events, enriching data with information from other sources, performing aggregations, and transforming the data into a format suitable for the target system. For example, you might filter out ‘DELETE’ events from a specific table if you only need to track additions and updates.

- Loading: The transformed data is loaded into the target system, such as a data warehouse, database, or other application. This stage often involves writing the data in batches or streams, depending on the requirements of the target system.
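The stages above can be sketched as a chain of Python generators. This is an illustration only: the input list stands in for messages read from Kafka, the target is an in-memory list rather than a real warehouse, and the function names are hypothetical. The events are assumed to be JSON envelopes with schemas disabled.

```python
import json
from typing import Iterable, Iterator, Optional


def deserialize(raw_messages: Iterable[Optional[bytes]]) -> Iterator[dict]:
    """Deserialization stage: JSON bytes to envelope dictionaries."""
    for raw in raw_messages:
        if raw is None:  # tombstone that follows a delete; nothing to decode
            continue
        yield json.loads(raw)


def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Transformation stage: drop deletes and keep the new row state."""
    for event in events:
        if event["op"] == "d":
            continue
        row = dict(event["after"])
        row["_op"] = event["op"]  # keep the operation code as metadata
        yield row


def load(rows: Iterable[dict], target: list) -> int:
    """Loading stage: append rows to the target and return the count."""
    count = 0
    for row in rows:
        target.append(row)
        count += 1
    return count


# Hypothetical input standing in for the consumption stage.
raw_messages = [
    b'{"op": "c", "before": null, "after": {"id": 1, "qty": 5}}',
    b'{"op": "d", "before": {"id": 1, "qty": 5}, "after": null}',
    None,
    b'{"op": "u", "before": {"id": 2, "qty": 1}, "after": {"id": 2, "qty": 3}}',
]

warehouse_rows: list = []
loaded = load(transform(deserialize(raw_messages)), warehouse_rows)
print(loaded, warehouse_rows)
```

Because each stage is a generator, events flow through one at a time, which mirrors how a streaming consumer processes a topic.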

Example Data Pipeline:

Consider a scenario where you are capturing changes from a MySQL database and want to load them into a data warehouse like Snowflake. The pipeline might look like this:

- Debezium captures changes from the MySQL database and publishes them to Kafka topics.

- A Kafka consumer application (e.g., using Kafka Streams or a custom application) consumes the change events.

- The consumer application deserializes the events and transforms them. This might involve converting the data types, applying business rules, and enriching the data with information from other sources. For example, you might join the change events with data from a customer service database to add customer information.

- The transformed data is loaded into Snowflake using a Snowflake connector. The connector handles the details of writing the data in batches, ensuring efficient loading.

Common Patterns for Integrating CDC Data

Integrating CDC data into various systems often involves common patterns that can be applied across different use cases.

- Real-time Analytics: CDC data can be used to power real-time dashboards and analytics. By consuming change events and performing aggregations, you can track key performance indicators (KPIs) and identify trends as they happen.

- Data Warehousing: CDC is a valuable tool for populating data warehouses with near real-time data. This enables faster reporting and analytics compared to traditional batch-oriented ETL processes.

- Event-Driven Architectures: CDC events can trigger actions in other systems. For example, when a new order is created, a CDC event can trigger a notification to the fulfillment team or update the inventory system.

- Cache Invalidation: When data in the source database changes, CDC events can be used to invalidate and update caches in other systems, ensuring that the data remains consistent.

- Auditing and Compliance: CDC can be used to track all changes to data, providing a complete audit trail for compliance purposes. This is especially important in regulated industries.

Example: Real-time Inventory Tracking

A retail company uses Debezium to capture changes to its product inventory database. The change events are consumed by a Kafka Streams application that calculates the real-time inventory levels for each product. The results are then published to a separate Kafka topic, which is consumed by a dashboard application. The dashboard application displays the real-time inventory levels, allowing the company to monitor stock levels, identify potential shortages, and make informed decisions about ordering and fulfillment.

This real-time approach, powered by CDC, allows for much more agile and responsive inventory management than a system relying on batch updates.
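The core of such an application is a small piece of state that is updated by every change event. The sketch below shows that logic in plain Python rather than Kafka Streams. The `product_id` and `quantity` column names, the threshold, and the sample events are assumptions made for illustration.

```python
def apply_inventory_event(levels: dict, event: dict) -> None:
    """Update per-product stock levels from one change event."""
    if event["op"] == "d":
        # The row was deleted, so the product is no longer tracked.
        levels.pop(event["before"]["product_id"], None)
    else:
        # Inserts, updates and snapshot reads all carry the new row state.
        row = event["after"]
        levels[row["product_id"]] = row["quantity"]


def low_stock(levels: dict, threshold: int) -> list:
    """Return the products whose stock is below the threshold."""
    return sorted(pid for pid, qty in levels.items() if qty < threshold)


# Hypothetical change events for an `inventory` table.
events = [
    {"op": "r", "before": None, "after": {"product_id": "A1", "quantity": 40}},
    {"op": "c", "before": None, "after": {"product_id": "B2", "quantity": 3}},
    {"op": "u", "before": {"product_id": "A1", "quantity": 40},
     "after": {"product_id": "A1", "quantity": 8}},
    {"op": "d", "before": {"product_id": "B2", "quantity": 3}, "after": None},
]

levels: dict = {}
for change in events:
    apply_inventory_event(levels, change)

print(levels, low_stock(levels, threshold=10))
```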

Monitoring and Troubleshooting Debezium

Effectively monitoring and troubleshooting Debezium deployments is crucial for ensuring the reliability and performance of your change data capture pipelines. Regularly monitoring key metrics and having a robust troubleshooting strategy can help you quickly identify and resolve issues, minimizing downtime and data loss. This section details important aspects of monitoring and troubleshooting Debezium.

Identifying Key Metrics for Monitoring

Monitoring key metrics provides insights into the health and performance of Debezium connectors. These metrics should be tracked regularly to identify potential bottlenecks or issues.

- Connector Status: Monitoring the overall status of the connector is essential. This includes tracking whether the connector is running, paused, or failed. A failed connector indicates a critical issue that requires immediate attention.

- Offset Management: Debezium uses offsets to track its progress in reading data from the database. Monitoring offset lag, which is the difference between the current offset and the latest offset available in the database, helps determine if the connector is keeping up with the database changes. A growing offset lag suggests the connector is falling behind.

- Number of Records Produced: Tracking the number of records produced by the connector over time provides insights into the data volume being processed. Significant drops in the number of records produced might indicate issues with the source database, the connector configuration, or the message broker.

- Data Processing Latency: Measuring the time it takes for data changes to be captured, processed, and published can reveal performance bottlenecks. High latency can indicate issues with the connector, the message broker, or the consumer applications.

- Error Rates: Monitoring error rates, including connection errors, schema evolution errors, and data conversion errors, helps identify potential problems within the connector or the data pipeline. High error rates indicate issues that need to be addressed.

- CPU and Memory Usage: Tracking CPU and memory usage of the Debezium connectors and the Kafka Connect workers helps identify resource constraints. High resource utilization might indicate the need for scaling the resources.

- Source Database Metrics: Monitoring the source database’s performance metrics, such as replication lag (for databases using replication), transaction logs, and query performance, provides insights into potential database-related issues affecting the connector’s performance.
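One latency figure can be derived from the events themselves. Each event carries a `ts_ms` field with the time the connector processed it and a `source.ts_ms` field with the time the change was made in the database, so the difference approximates the capture lag. The following is a rough sketch. The helper names, the threshold, and the sample values are illustrative, and the result is only meaningful if the database and connector clocks are reasonably in sync.

```python
def capture_lag_ms(event: dict) -> int:
    """Milliseconds between the database change and Debezium processing it."""
    return event["ts_ms"] - event["source"]["ts_ms"]


def summarize_lag(events: list, threshold_ms: int) -> dict:
    """Aggregate capture lag over a batch of events."""
    lags = [capture_lag_ms(e) for e in events]
    return {
        "max_ms": max(lags),
        "avg_ms": sum(lags) / len(lags),
        "over_threshold": sum(1 for lag in lags if lag > threshold_ms),
    }


# Hypothetical events; only the timestamp fields matter here.
batch = [
    {"ts_ms": 1700000000120, "source": {"ts_ms": 1700000000000}},
    {"ts_ms": 1700000001080, "source": {"ts_ms": 1700000001000}},
    {"ts_ms": 1700000004500, "source": {"ts_ms": 1700000002000}},
]

summary = summarize_lag(batch, threshold_ms=1000)
print(summary)
```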

Troubleshooting Techniques for Resolving Issues

When issues arise, employing a systematic approach to troubleshooting can help quickly identify and resolve problems.

- Check Connector Logs: Connector logs are the primary source of information for diagnosing issues. These logs provide detailed information about errors, warnings, and informational messages. Examine the logs for error messages, stack traces, and other relevant details.

- Verify Connector Configuration: Ensure the connector configuration is correct, including database connection details, topics, and data transformation settings. Incorrect configurations are a common source of issues.

- Examine Kafka Connect Worker Logs: Kafka Connect worker logs provide insights into the overall health of the Kafka Connect cluster and any issues related to the connector deployment.

- Inspect Kafka Topics: Verify that the Kafka topics used by the connector exist and are receiving data. Use Kafka tools to inspect the messages and their content.

- Check Network Connectivity: Ensure that the Debezium connector can connect to the source database and the Kafka brokers. Network connectivity issues can prevent data capture and publishing.

- Review Database Permissions: Verify that the database user configured for the connector has the necessary permissions to read the data and access the transaction logs. Insufficient permissions can lead to errors.

- Monitor Resource Usage: Check CPU, memory, and disk I/O usage on the machines running the connector and the Kafka Connect workers. Resource constraints can impact performance.

- Isolate the Issue: If the problem is not immediately obvious, try isolating the issue by simplifying the setup. For example, create a test connector with a minimal configuration to see if the problem persists.

- Consult Documentation and Community Resources: Refer to the Debezium documentation and community forums for troubleshooting guidance and solutions to common issues. The Debezium community is active and helpful.

- Use Debugging Tools: For complex issues, consider using debugging tools, such as Java debuggers, to step through the connector’s code and identify the root cause of the problem.
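A quick first check is the status endpoint of the Kafka Connect REST API, `GET /connectors/<name>/status`, which reports the state of the connector and of each task. The sketch below fetches and inspects that response in Python. The base URL and connector name passed to `fetch_status` are up to you, and the sample payload is hypothetical: it only mimics the shape of a real response so the example runs without a cluster.

```python
import json
import urllib.request


def fetch_status(base_url: str, connector: str) -> dict:
    """Call GET /connectors/<name>/status on a Kafka Connect worker."""
    url = f"{base_url}/connectors/{connector}/status"
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def find_problems(status: dict) -> list:
    """List the connector and task states that are not RUNNING."""
    problems = []
    state = status["connector"]["state"]
    if state != "RUNNING":
        problems.append(f"connector is {state}")
    for task in status.get("tasks", []):
        if task["state"] != "RUNNING":
            # Failed tasks include a stack trace; its first line is usually enough.
            trace_lines = task.get("trace", "").splitlines()
            detail = trace_lines[0] if trace_lines else "no trace"
            problems.append(f"task {task['id']} is {task['state']}: {detail}")
    return problems


# Hypothetical response body for a connector with one failed task.
sample_status = {
    "name": "inventory-connector",
    "connector": {"state": "RUNNING", "worker_id": "10.0.0.5:8083"},
    "tasks": [{
        "id": 0,
        "state": "FAILED",
        "worker_id": "10.0.0.5:8083",
        "trace": "org.apache.kafka.connect.errors.ConnectException: connection refused\n\tat example",
    }],
    "type": "source",
}

print(find_problems(sample_status))
```

Note that a connector can report `RUNNING` while one of its tasks has failed, which is why the sketch checks both levels.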

Logging and Error Handling in Debezium Deployments

Effective logging and error handling are critical for maintaining a robust and reliable Debezium deployment. Implementing a well-defined logging strategy and error-handling mechanisms can significantly improve the ability to diagnose and resolve issues.

- Configure Logging Levels: Configure appropriate logging levels (e.g., INFO, WARN, ERROR, DEBUG) to control the amount of information logged. Start with INFO and WARN levels in production and increase the verbosity to DEBUG when troubleshooting.

- Use Structured Logging: Utilize structured logging formats, such as JSON, to make logs easier to parse and analyze. Structured logs allow for efficient searching and filtering of log data.

- Include Contextual Information: Include contextual information in log messages, such as the connector name, task ID, source database details, and timestamps, to provide a comprehensive view of the events.

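- Implement Error Handling: Implement error-handling mechanisms to manage errors gracefully, both in the connector configuration (for example, Kafka Connect’s `errors.tolerance` and `errors.retry.timeout` settings) and in downstream consumers. This includes retry mechanisms, dead-letter queues (DLQs), and error notifications.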

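- Use Dead-Letter Queues (DLQs): Configure DLQs to store messages that cannot be processed successfully. Kafka Connect’s built-in dead-letter queue applies only to sink connectors, so in a Debezium pipeline DLQs belong on the sink connectors and consumer applications downstream of the source connector. DLQs allow you to examine and reprocess failed messages.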

- Set up Alerting: Configure alerts based on key metrics and error conditions. Use monitoring tools to send notifications when specific thresholds are exceeded or when critical errors occur.

- Centralized Logging: Implement a centralized logging solution, such as the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk, to collect, aggregate, and analyze logs from all components of the Debezium deployment. This facilitates easier troubleshooting and monitoring.

- Regular Log Review: Regularly review the logs to identify recurring issues, performance bottlenecks, and potential security threats. Proactive log review helps prevent future problems.

- Documentation of Logging and Error Handling: Document the logging and error-handling strategy, including the logging configuration, error-handling mechanisms, and alerting rules. This ensures that the logging and error-handling practices are consistently applied.

- Test Error Handling: Regularly test the error-handling mechanisms to ensure they function as expected. Simulate error conditions to verify that the DLQs, retries, and alerts are working correctly.
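On the consuming side, retries and a dead-letter queue can be combined in a few lines. The following Python sketch is one possible shape, not a prescribed implementation: `process_with_retry`, the in-memory `dead_letters` list (a real deployment would publish to a dedicated Kafka topic), and the sample handler are all hypothetical.

```python
import time


def process_with_retry(event, handler, dead_letters, max_attempts=3, backoff_s=0.0):
    """Run handler(event); after max_attempts failures, park the event."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            handler(event)
            return True
        except Exception as exc:  # broad on purpose: any failure is retried
            last_error = exc
            if attempt < max_attempts:
                time.sleep(backoff_s * attempt)  # linear backoff between attempts
    # Keep the event and the reason so it can be inspected and replayed later.
    dead_letters.append({
        "event": event,
        "error": str(last_error),
        "attempts": max_attempts,
    })
    return False


def handler(event):
    """Hypothetical handler that rejects events without a new row state."""
    if event.get("after") is None:
        raise ValueError("event has no 'after' state")


dead_letters: list = []
ok = process_with_retry({"op": "c", "after": {"id": 1}}, handler, dead_letters)
failed = process_with_retry({"op": "c", "after": None}, handler, dead_letters)
print(ok, failed, dead_letters)
```

Simulating a failing event like this is also a simple way to test that the dead-letter path and any alerts attached to it behave as expected.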

Debezium and Schema Evolution

Debezium’s ability to handle schema evolution is a critical feature, allowing it to adapt to changes in the source database’s structure without requiring significant downtime or data loss. This capability is vital for maintaining data consistency and integrity as applications evolve and database schemas are updated. Debezium leverages schema registries to manage and track these changes, ensuring that consumers of the change data can understand and process the evolving data formats.

Handling Schema Changes in the Source Database

Debezium’s connectors are designed to detect and adapt to schema changes in the source database. When a schema change occurs, such as adding a new column, modifying a data type, or dropping a column, Debezium captures these modifications and propagates them to the downstream consumers. This process involves several key steps:

- Schema Detection: Debezium’s connectors monitor the database’s schema using mechanisms specific to each database system. For example, they might use database metadata tables, transaction logs, or other change data capture (CDC) mechanisms to identify schema modifications.

- Schema Evolution Events: When a schema change is detected, Debezium generates schema change events. These events contain information about the specific changes that have occurred, such as the table affected, the type of change (e.g., ADD COLUMN, ALTER COLUMN, DROP COLUMN), and the new schema definition.

- Schema Registry Integration: When configured with a registry-aware converter such as the Avro converter, Debezium integrates with a schema registry, for example Confluent Schema Registry or Apicurio Registry. The schema registry stores the history of schemas for each topic, allowing consumers to understand the evolving data formats. The converter uses the schema registry when serializing change events, ensuring compatibility between producers (Debezium) and consumers.

- Data Serialization: Debezium serializes the change data using a format like Apache Avro, which supports schema evolution. Avro allows consumers to read data written with an older schema if the newer schema is compatible. Compatibility rules, such as those defined by Avro, are crucial for ensuring that consumers can handle schema changes without breaking.

- Event Propagation: Debezium publishes the schema change events and the corresponding data change events to the message broker (e.g., Kafka). Consumers subscribe to these topics and receive both the data changes and the schema information.

The Role of the Schema Registry in Managing Evolving Schemas

The schema registry plays a central role in managing evolving schemas and ensuring data compatibility in a Debezium-based CDC system. It acts as a central repository for storing and versioning schemas, providing a single source of truth for the structure of the data.

- Schema Storage and Versioning: The schema registry stores the schemas for each topic and maintains a history of schema versions. This allows consumers to access the schema used to write the data and understand the data format at any given point in time.

- Schema Evolution Management: The schema registry provides tools for managing schema evolution, including features like schema compatibility checks. These checks ensure that new schemas are compatible with existing data and that consumers can safely read data written with different schema versions.

- Serialization and Deserialization: The schema registry provides the necessary information for serializing and deserializing data in formats like Avro. This ensures that producers and consumers can exchange data in a consistent and efficient manner.

- Consumer Compatibility: By using the schema registry, consumers can determine the schema of the data they are receiving and adapt their processing logic accordingly. This allows consumers to handle schema changes without requiring code modifications or downtime.
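To make the idea of a compatibility check concrete, here is a deliberately simplified Python sketch of a backward-compatibility test between two versions of a record schema. It is not the registry's actual algorithm: real Avro resolution also permits certain type promotions and handles nested types, and the dictionary format used for the schemas is an assumption for illustration.

```python
def backward_compatibility_problems(old_fields: dict, new_fields: dict) -> list:
    """Check whether a reader using new_fields can read data written with old_fields."""
    problems = []
    for name, spec in new_fields.items():
        if name not in old_fields:
            # Old data has no value for an added field, so the reader needs a default.
            if "default" not in spec:
                problems.append(f"added field '{name}' needs a default")
        elif old_fields[name]["type"] != spec["type"]:
            problems.append(
                f"field '{name}' changed type from "
                f"{old_fields[name]['type']} to {spec['type']}"
            )
    # Fields removed in the new schema are fine: the reader ignores them.
    return problems


v1 = {"id": {"type": "long"}, "email": {"type": "string"}}
v2_ok = {**v1, "phone": {"type": ["null", "string"], "default": None}}
v2_bad = {**v1, "phone": {"type": "string"}}

print(backward_compatibility_problems(v1, v2_ok))
print(backward_compatibility_problems(v1, v2_bad))
```

The practical takeaway is that a new column is safe for existing consumers when it is optional or has a default, which is why nullable columns are the least disruptive kind of schema change.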

Designing a Workflow for Managing Schema Changes and Data Compatibility

Managing schema changes effectively requires a well-defined workflow that addresses the different stages of the schema evolution process. This workflow ensures that changes are implemented safely and that data compatibility is maintained.

- Change Planning and Analysis: Before making any schema changes, carefully plan and analyze the impact of the changes. Consider the following:

- Impact on Consumers: Determine how the schema changes will affect the consumers of the change data. Identify any potential compatibility issues and plan for appropriate mitigation strategies.

- Compatibility Rules: Define the compatibility rules that will be used to ensure that the new schema is compatible with the existing data. For example, use Avro’s compatibility rules.

- Testing: Test the schema changes in a non-production environment to verify that they work as expected and that no data loss or corruption occurs.

- Schema Change Implementation: Implement the schema changes in the source database. Ensure that the changes are made in a controlled manner, such as by using database migration tools or scripts.

- Atomic Operations: Perform schema changes in atomic operations to minimize the risk of data inconsistencies.

- Backups: Create backups of the database before making any schema changes.

- Schema Registration: Register the new schema with the schema registry. The schema registry will then store the new schema version and manage its relationship with the previous versions.

- Schema Versioning: Ensure that the schema registry properly versions the new schema.

- Data Transformation and Adaptation: Adapt the consumers to the new schema. This may involve updating the consumer applications to handle the new data format or adding data transformation logic to map the old data format to the new format.

- Consumer Updates: Update consumer applications to read and process data based on the new schema.

- Data Migration: Migrate any existing data to the new schema, if necessary.

- Monitoring and Validation: Continuously monitor the system for any issues related to schema changes. Validate that the data is being processed correctly and that there are no data inconsistencies.

- Monitoring Tools: Use monitoring tools to track the performance of the Debezium connectors and the consumer applications.

- Data Validation: Implement data validation checks to ensure that the data is consistent and accurate.

Advanced Configuration Options

Debezium offers a rich set of advanced configuration options that allow for fine-tuning of connector performance, ensuring data consistency, and adapting to specific use cases. These options are crucial for optimizing Debezium deployments in production environments, where factors like data volume, network latency, and schema evolution can significantly impact performance and reliability. Understanding and leveraging these configurations is essential for building robust and scalable change data capture pipelines.

Connector Performance Tuning

Optimizing connector performance involves adjusting various parameters to handle the specific characteristics of the source database and the target message broker. This can involve adjusting batch sizes, polling intervals, and buffer sizes.

- Batch Size Configuration: The `snapshot.fetch.size` parameter controls the number of rows fetched in a single batch during the initial snapshot, and the `poll.interval.ms` controls the frequency with which the connector polls for changes in the database. Adjusting these parameters can influence the connector’s throughput and latency. For instance, increasing `snapshot.fetch.size` can speed up the initial snapshot, but might increase the memory consumption.

- Buffer and Cache Management: Debezium uses internal buffers and caches to manage data flow. The connector properties `max.batch.size` and `max.queue.size` are critical for managing the memory footprint, and `max.queue.size` should be larger than `max.batch.size`. Increasing `max.queue.size` allows the connector to buffer more events read from the database while earlier batches are still being written to Kafka, which smooths over temporary slowdowns, but it also increases memory usage.

- Network Optimization: The connector’s interaction with the database and the message broker can be optimized. Parameters related to connection pooling and network timeouts can be tuned to reduce latency and improve resilience.

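- Connector Tasks: In Kafka Connect, you can configure the maximum number of tasks for a connector with `tasks.max`. More tasks can increase throughput by parallelizing the work, but they also increase the resource consumption on the Kafka Connect worker nodes. Note that several Debezium connectors, including MySQL and PostgreSQL, always run a single task because they read one ordered transaction log, so raising `tasks.max` has no effect for them.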
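A tuning fragment for these properties might look like the following Python sketch, which also checks the one relationship the properties must satisfy: `max.queue.size` should be larger than `max.batch.size`. The numeric values are placeholders rather than recommendations, and `validate_tuning` is a hypothetical helper.

```python
def validate_tuning(config: dict) -> list:
    """Return warnings for tuning values that are inconsistent."""
    warnings = []
    batch = int(config["max.batch.size"])
    queue = int(config["max.queue.size"])
    if queue <= batch:
        warnings.append("max.queue.size should be larger than max.batch.size")
    if int(config["poll.interval.ms"]) <= 0:
        warnings.append("poll.interval.ms must be positive")
    return warnings


# Placeholder values; Kafka Connect passes connector properties as strings.
tuning = {
    "snapshot.fetch.size": "10000",
    "poll.interval.ms": "500",
    "max.batch.size": "4096",
    "max.queue.size": "16384",
}

print(validate_tuning(tuning))
print(validate_tuning({**tuning, "max.queue.size": "2048"}))
```

Changing one value at a time and watching throughput, lag, and heap usage is usually more informative than adjusting several settings together.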

Offset Management and Data Consistency

Offset management is fundamental to Debezium’s ability to guarantee data consistency and fault tolerance. The connector periodically persists its processing progress (offsets) to a dedicated topic in Kafka. This allows the connector to resume from where it left off in case of failures or restarts.

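- Offset Storage: In distributed mode, Kafka Connect stores source connector offsets in the Kafka topic named by the worker’s `offset.storage.topic` setting (often `connect-offsets`), not in the `__consumer_offsets` topic that Kafka uses for consumer groups. This topic is essential for tracking the connector’s progress. The frequency of offset commits can be controlled using the worker-level `offset.flush.interval.ms` configuration parameter.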

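- Data Loss Prevention: The frequency of offset commits determines how much work is repeated after a failure. Because offsets are recorded after events have been written to Kafka, a crash between commits typically causes events to be re-sent on restart rather than lost. A lower `offset.flush.interval.ms` value results in more frequent commits, shrinking the window of duplicated events but potentially impacting performance.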

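- Exactly-Once Semantics: Debezium delivers events at least once by default, so consumers can see duplicates after a failure or restart. Recent Kafka Connect releases add exactly-once support for source connectors, but whether it is available depends on the Kafka and Debezium versions in use. Either way, careful offset management, combined with idempotent operations on the consuming side, can achieve a high degree of data consistency.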

- Idempotent Consumers: Building idempotent consumers on the consuming side is a best practice. This means that processing the same event multiple times will have the same effect as processing it only once. This helps to ensure data consistency in the face of failures and retries.
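One common way to make a consumer idempotent is to remember the log position of the last event applied for each key and to ignore anything at or before it. The Python sketch below illustrates the idea. The `IdempotentTable` class is hypothetical, and `position` is assumed to be a monotonically increasing number that the caller derives from the event's source metadata (for example, a log sequence number).

```python
class IdempotentTable:
    """In-memory target that applies each change at most once per key."""

    def __init__(self):
        self.rows = {}
        self.last_position = {}

    def apply(self, key, position: int, event: dict) -> bool:
        """Apply the event unless it is a duplicate or older than what we hold."""
        if position <= self.last_position.get(key, -1):
            return False  # duplicate or stale delivery: safe to skip
        if event["op"] == "d":
            self.rows.pop(key, None)
        else:
            self.rows[key] = event["after"]
        self.last_position[key] = position
        return True


table = IdempotentTable()
update = {"op": "u", "after": {"id": 7, "status": "shipped"}}

first = table.apply(7, 1001, update)
second = table.apply(7, 1001, update)  # the same event delivered again
print(first, second, table.rows)
```

In a real target the row and its position would be written in the same transaction, so that a crash cannot leave them out of step.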

Custom Configuration Options for Specific Use Cases

Debezium provides several configuration options tailored to specific database systems and use cases. These options allow users to customize the behavior of the connectors to fit their unique requirements.

- MySQL Connector:

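- `database.server.id`: Sets a numeric ID for the connector, which joins the MySQL cluster as if it were another replica so that it can read the binlog. The ID must be unique among all servers and replication clients in the cluster.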

- `database.history.kafka.topic`: Configures the Kafka topic for storing the database schema history. In Debezium 2.x this property is named `schema.history.internal.kafka.topic`.

- PostgreSQL Connector:

- `plugin.name`: Specifies the logical decoding plugin (e.g., `pgoutput`, `decoderbufs`). Choosing the right plugin is critical for performance and compatibility.

- `slot.name`: Defines the replication slot used for logical decoding.

- SQL Server Connector:

- `database.history.kafka.topic`: Configures the Kafka topic for schema history. In Debezium 2.x this property is named `schema.history.internal.kafka.topic`.

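- `database.server.name`: Sets a logical name for the database server, which is used as the prefix for topic names. In Debezium 2.x this property is replaced by `topic.prefix`.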

- Custom Filters and Transformations: Debezium offers flexible mechanisms for filtering and transforming data.

- Transforms: Use SMT (Single Message Transforms) for simple transformations like masking sensitive data or adding metadata.

- Filters: Use filters to selectively include or exclude events based on criteria like table names or column values.
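Put together, a PostgreSQL connector registration might look like the following Python sketch, which builds the JSON body that would be sent to the Kafka Connect REST API (`POST /connectors`). The host, credentials, table names, and the `build_postgres_connector` helper are placeholders. The sketch assumes Debezium 2.x property names, where `topic.prefix` takes the place of `database.server.name`, and it adds the `ExtractNewRecordState` SMT to flatten events to the new row state.

```python
import json


def build_postgres_connector(name: str, host: str, dbname: str, tables: list) -> dict:
    """Build a request body for registering a Debezium PostgreSQL connector."""
    config = {
        "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
        "database.hostname": host,
        "database.port": "5432",
        "database.user": "debezium",       # placeholder credentials
        "database.password": "change-me",  # use a secrets provider in practice
        "database.dbname": dbname,
        "topic.prefix": name,              # Debezium 2.x; older releases use database.server.name
        "plugin.name": "pgoutput",
        "slot.name": f"{name}_slot",
        "table.include.list": ",".join(tables),
        # Single Message Transform that unwraps the envelope to the row state.
        "transforms": "unwrap",
        "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
    }
    return {"name": f"{name}-connector", "config": config}


body = build_postgres_connector(
    "inventory", "db.example.internal", "shop", ["public.orders", "public.customers"]
)
print(json.dumps(body, indent=2))
```

The `table.include.list` property acts as the filter here: tables not listed are never captured, which is cheaper than discarding their events downstream.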

Use Cases and Examples

Debezium’s capabilities extend to a wide array of applications, transforming how organizations manage and utilize their data. Its ability to capture changes in real-time makes it a valuable tool for various scenarios, ranging from simple auditing to complex data synchronization across multiple systems. This section explores practical use cases and provides concrete examples demonstrating Debezium’s versatility and effectiveness.

Auditing with Debezium

Auditing involves tracking and recording changes made to data over time. Debezium provides a robust solution for implementing auditing, capturing every insert, update, and delete operation performed on database tables. This allows for detailed tracking of data modifications, essential for compliance, security, and debugging purposes.

For instance, consider a financial institution that needs to maintain a complete audit trail of all transactions.

- Tracking Financial Transactions: Debezium can capture every change to the `transactions` table, including account numbers, amounts, and timestamps. This allows auditors to reconstruct the history of any transaction, identify potential fraud, and ensure regulatory compliance. The audit logs generated can be stored in a separate audit database or a secure data lake for long-term retention.

- Monitoring User Activity: By integrating Debezium with a user management system, organizations can monitor changes to user profiles, access rights, and sensitive data. For example, if a user’s role is changed, Debezium captures the before and after states of the user record, providing valuable insights for security audits.

- Data Integrity Checks: Debezium can be used to detect unauthorized modifications to critical data. By comparing the current state of a table with the historical records captured by Debezium, organizations can identify anomalies and potential data breaches. This proactive approach helps maintain data integrity and ensures the reliability of business operations.
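An audit entry can be derived directly from the `before` and `after` states in each event. The Python sketch below computes a column-level diff. The `audit_record` helper, the `users` table, and its columns are hypothetical, and whether `before` is fully populated depends on how the source database is configured.

```python
def audit_record(event: dict) -> dict:
    """Build an audit entry listing the columns whose values changed."""
    before = event.get("before") or {}
    after = event.get("after") or {}
    changes = {
        column: {"old": before.get(column), "new": after.get(column)}
        for column in sorted(set(before) | set(after))
        if before.get(column) != after.get(column)
    }
    return {
        "table": event["source"]["table"],
        "operation": event["op"],
        "changed_at_ms": event["source"]["ts_ms"],
        "changes": changes,
    }


# Hypothetical update event: a user's role is changed.
role_change = {
    "op": "u",
    "before": {"id": 42, "name": "dana", "role": "viewer"},
    "after": {"id": 42, "name": "dana", "role": "admin"},
    "source": {"table": "users", "ts_ms": 1700000000000},
}

print(audit_record(role_change))
```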

Data Synchronization with Debezium

Data synchronization involves keeping data consistent across multiple systems. Debezium facilitates real-time data synchronization by streaming database changes to various targets, such as other databases, data warehouses, or cloud storage. This ensures that all systems have access to the latest data, enabling efficient data sharing and eliminating data silos.

Consider an e-commerce platform needing to synchronize product information between its operational database and its data warehouse.

- Real-time Product Catalog Updates: When a product is added, updated, or removed in the operational database, Debezium captures these changes and streams them to the data warehouse. This ensures that the data warehouse always reflects the most current product information, enabling up-to-date reporting and analytics.

- Synchronizing Customer Data Across Microservices: In a microservices architecture, customer data might be stored in multiple services. Debezium can be used to propagate changes to customer records across these services, ensuring data consistency. For example, when a customer updates their address in one service, Debezium streams this change to other services that rely on customer data.

- Data Replication for Disaster Recovery: Debezium can replicate data from a primary database to a secondary database in real-time. In case of a primary database failure, the secondary database can take over, minimizing downtime and ensuring business continuity. This approach offers a cost-effective alternative to traditional database replication solutions.

Data Warehousing with Debezium

Data warehousing involves storing and processing large volumes of data for analytical purposes. Debezium simplifies the process of loading data into a data warehouse by capturing changes from source databases and streaming them to the warehouse in a near real-time manner. This enables up-to-date reporting, advanced analytics, and informed decision-making.

An example is a retail company wanting to build a data warehouse for sales analysis.

- Real-time Sales Data Ingestion: Debezium can capture changes from the sales transaction database and stream them to the data warehouse. This enables near real-time reporting on sales performance, allowing analysts to track sales trends, identify top-selling products, and optimize pricing strategies.

- Building a Historical View of Data: By capturing all changes to the source database, Debezium enables the creation of a complete historical view of data in the data warehouse. This allows analysts to perform time-series analysis, track changes over time, and gain valuable insights into business trends.

- Integrating Data from Multiple Sources: Debezium can capture data changes from multiple source databases, such as sales, inventory, and customer databases, and stream them to a single data warehouse. This enables the creation of a unified view of data, allowing for comprehensive analysis and cross-functional insights.

Comparative Table: Advantages and Disadvantages

The following table compares the advantages and disadvantages of using Debezium in different scenarios.

| Scenario | Advantages | Disadvantages |
|---|---|---|
| Auditing | | |
| Data Synchronization | | |
| Data Warehousing | | |

Best Practices and Optimization

Deploying and managing Debezium connectors effectively, optimizing for performance, and ensuring data consistency and reliability are crucial for a successful Change Data Capture (CDC) implementation. These practices span configuration, monitoring, and operational strategies. This section outlines key considerations for maximizing the value of Debezium.

Deployment and Management Best Practices

Proper deployment and ongoing management are fundamental to a stable and performant Debezium environment. This involves careful planning, resource allocation, and continuous monitoring.

- Connector Configuration: Carefully configure each connector based on its specific database source and target. Use appropriate configurations for connection parameters, offsets, and schema evolution. Incorrect settings can lead to data loss, performance bottlenecks, or integration failures.

- Resource Allocation: Provision sufficient resources (CPU, memory, storage) for Debezium connectors and the Kafka Connect workers. Under-provisioning can result in slow data capture, increased latency, and potential data loss. Monitor resource utilization regularly and scale as needed. Consider using tools like Prometheus and Grafana to monitor resource consumption.

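- Connector Scaling: Utilize Kafka Connect’s scalability features to handle increasing data volumes. Deploy multiple worker instances so that connectors and tasks can be distributed and rebalanced across them. Because several Debezium connectors, including MySQL and PostgreSQL, always run a single task, scaling a heavy source often means splitting its tables across several connectors rather than raising the task count.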

- Monitoring and Alerting: Implement comprehensive monitoring for Debezium connectors, Kafka Connect workers, and the Kafka cluster itself. Set up alerts for critical metrics, such as connector status, lag, error rates, and resource utilization. Tools like Prometheus and Grafana are useful for visualization and alerting.

- Offset Management: Understand and manage offset commits. Debezium uses offsets to track the position of change events within the database transaction log. Proper offset management ensures data consistency and prevents data loss or reprocessing. Regularly check the offset commit strategy.

- Error Handling and Recovery: Implement robust error handling mechanisms. Configure connectors to handle errors gracefully, such as retrying failed operations, and route events that sink connectors or consumers cannot process to a dead-letter queue (DLQ). Define recovery strategies for connector failures or data inconsistencies.

- Configuration Management: Employ a robust configuration management strategy. Use a system like Ansible, Puppet, or Terraform to manage connector configurations and deployments. This ensures consistency and simplifies updates and rollbacks.

- Testing and Validation: Thoroughly test connector configurations and data transformations before deploying them to production. Validate the data captured by Debezium to ensure it meets the requirements of the target systems. Utilize testing frameworks to simulate various scenarios and validate data integrity.

Performance Optimization for High-Volume Data Capture

Optimizing performance is critical for handling high-volume data capture scenarios. Several strategies can be employed to minimize latency and maximize throughput.

- Connector Tuning: Fine-tune connector settings to optimize performance. Adjust parameters like `poll.interval.ms`, `max.batch.size`, `max.queue.size`, and `snapshot.mode`. The optimal settings depend on the specific database and workload. Experiment with different values and monitor the impact on performance.

- Database Configuration: Optimize the source database for change data capture. Ensure that the database transaction logs are properly configured and that sufficient resources are allocated to the database server. Regularly review database performance metrics.

- Kafka Configuration: Optimize the Kafka cluster for high-throughput data ingestion. Configure Kafka brokers and topics for optimal performance. Use appropriate replication factors, partition counts, and compression settings.

- Schema Evolution: Carefully manage schema evolution to minimize performance impact. Avoid unnecessary schema changes and group related changes together where possible. Choose an appropriate schema compatibility mode in the schema registry (e.g., `BACKWARD`, `FORWARD`, or `FULL`).

- Filtering and Transformation: Apply filtering and transformation at the connector level to reduce the volume of data processed. Filter out unnecessary events and transform data to optimize it for the target systems. This can significantly improve performance and reduce resource consumption.

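- Batching: Batch events on the producer side. Kafka Connect writes to Kafka through a producer, so `batch.size` and `linger.ms` are producer settings: set them with the `producer.` prefix in the worker configuration, or per connector with the `producer.override.` prefix if the worker allows client overrides. Larger batches reduce the number of network calls and improve throughput at the cost of some added latency, so adjust both parameters to find the right balance.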

- Network Optimization: Ensure a high-bandwidth, low-latency network connection between the database, Kafka Connect workers, and the Kafka brokers. Network bottlenecks can significantly impact performance.

- Monitoring and Profiling: Continuously monitor performance metrics and profile connectors to identify bottlenecks. Use tools like JConsole or VisualVM to analyze connector performance and identify areas for optimization.
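As a rough sketch of the tuning points above, the following helper layers throughput-related settings on top of a base connector configuration and returns a dictionary suitable for submission to the Kafka Connect REST API. The helper name `tuned_connector_config` and all numeric values are illustrative assumptions, not recommendations. The property names `max.batch.size`, `max.queue.size`, and `poll.interval.ms` are Debezium connector properties; `producer.override.linger.ms` only takes effect if the Connect worker permits per-connector client overrides.

```python
import json
from typing import Dict


def tuned_connector_config(
    base: Dict[str, str],
    max_batch_size: int = 4096,
    max_queue_size: int = 16384,
    poll_interval_ms: int = 500,
    producer_linger_ms: int = 20,
) -> Dict[str, str]:
    """Return a copy of base with throughput-related settings applied.

    The numbers are placeholders for experimentation, not tuned values.
    """
    # The internal queue should be larger than a single batch; otherwise
    # the connector cannot buffer ahead of the producer.
    if max_queue_size <= max_batch_size:
        raise ValueError("max.queue.size must be larger than max.batch.size")

    config = dict(base)  # do not mutate the caller's dictionary
    config.update(
        {
            "max.batch.size": str(max_batch_size),
            "max.queue.size": str(max_queue_size),
            "poll.interval.ms": str(poll_interval_ms),
            "producer.override.linger.ms": str(producer_linger_ms),
        }
    )
    return config


if __name__ == "__main__":
    # "inventory-connector" is a hypothetical connector name.
    base = {"connector.class": "io.debezium.connector.mysql.MySqlConnector"}
    payload = {"name": "inventory-connector", "config": tuned_connector_config(base)}
    print(json.dumps(payload, indent=2))
```

Generating the configuration from code also makes it easy to keep variants for different environments under version control and to change one parameter at a time while measuring its effect.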

Strategies for Ensuring Data Consistency and Reliability

Data consistency and reliability are paramount for any CDC implementation. Several strategies help to guarantee data integrity and minimize the risk of data loss or inconsistencies.

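- Transactions and Exactly-Once Semantics: Debezium reads committed changes from the database transaction log and, by default, delivers them at least once, so duplicates are possible after a restart or failure. Where your versions support it, Kafka Connect's exactly-once support for source connectors and Kafka transactions on the consuming side can reduce duplication. Treat exactly-once as something to verify for your specific setup rather than assume.

- Offset Management and Consumer Groups: Consumer groups distribute partitions among consumers and track committed offsets, but they do not by themselves guarantee that each event is processed only once. Commit offsets only after an event has been fully processed: committing too early can lose events after a crash, while committing late causes reprocessing.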

- Schema Evolution Handling: Implement a robust schema evolution strategy to handle changes in the source database schema. If you use a schema registry, pick a compatibility mode that matches how producers and consumers are upgraded, and confirm that all consumers can read the new schema before the change reaches production.

- Dead-Letter Queues (DLQs): Configure DLQs to handle events that cannot be processed due to errors. DLQs allow you to isolate and analyze problematic events, preventing them from blocking the processing of other events.

- Idempotent Operations: Design downstream consumers to perform idempotent operations. This means that applying the same event multiple times has the same effect as applying it once. This helps to prevent data corruption in case of reprocessing.

- Data Validation and Reconciliation: Implement data validation and reconciliation mechanisms to verify the integrity of the captured data. Compare the data captured by Debezium with the source data to identify and correct any inconsistencies.

- Regular Backups and Disaster Recovery: Implement regular backups of the Kafka cluster and Debezium connector configurations. Develop a disaster recovery plan to ensure that data can be restored in case of a failure.

- Monitoring and Alerting: Monitor key metrics, such as connector status, lag, error rates, and data consistency. Set up alerts to proactively identify and address potential data consistency issues.

- Testing and Validation: Thoroughly test the entire CDC pipeline, including the Debezium connectors, Kafka brokers, and downstream consumers. Validate the data captured by Debezium to ensure that it meets the requirements of the target systems. Use comprehensive testing to identify and address potential issues before they impact production data.
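The idempotent-consumer idea above can be illustrated with a small sketch that applies change events to an in-memory table. It assumes each event is the payload of a Debezium change event with the fields `op`, `before`, and `after`, that rows carry a primary key named `id`, and that delete events include the key in `before`. The function name `apply_change` and the dictionary-backed table are hypothetical stand-ins for a real target store.

```python
from typing import Any, Dict

Row = Dict[str, Any]


def apply_change(table: Dict[Any, Row], event: Dict[str, Any], key_field: str = "id") -> None:
    """Apply one change event to table as an upsert or a delete.

    Both operations are idempotent: applying the same event again leaves
    the table in the same state as applying it once.
    """
    op = event["op"]
    if op in ("c", "r", "u"):
        # create, snapshot read, update: overwrite the row with its new state
        row = event["after"]
        table[row[key_field]] = dict(row)
    elif op == "d":
        # delete: removing an already-missing key is a no-op
        row = event["before"]
        table.pop(row[key_field], None)
    else:
        # other operation types (for example truncate) are not handled here
        raise ValueError(f"unsupported op: {op!r}")


if __name__ == "__main__":
    events = [
        {"op": "c", "before": None, "after": {"id": 1, "name": "Ada"}},
        {"op": "u", "before": {"id": 1, "name": "Ada"}, "after": {"id": 1, "name": "Ada L."}},
        {"op": "c", "before": None, "after": {"id": 2, "name": "Bob"}},
        {"op": "d", "before": {"id": 2, "name": "Bob"}, "after": None},
    ]
    table: Dict[Any, Row] = {}
    for _ in range(2):  # replay the whole batch to simulate redelivery
        for event in events:
            apply_change(table, event)
    print(table)
```

Replaying a batch in its original order is safe with this approach. Events for the same key that arrive out of order would need an additional guard, such as comparing a source position before overwriting a row.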

Epilogue

In conclusion, mastering Debezium for Change Data Capture unlocks significant advantages in modern data management. By understanding the core concepts, configuration options, and best practices outlined in this guide, you’re well-equipped to implement real-time data streaming solutions. This allows for efficient data integration, improved data consistency, and ultimately, a more responsive and informed business environment. Embrace the power of Debezium and transform your data strategy.

FAQ Section

What databases does Debezium support?

Debezium supports a wide array of databases, including MySQL, PostgreSQL, SQL Server, MongoDB, and more, with new connectors being developed continuously.

How does Debezium handle schema changes?

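Debezium connectors track the schema of the source tables, and each change event reflects the table structure in effect when the change was made. Some connectors, such as the MySQL connector, also publish DDL statements to a dedicated schema change topic. Whether downstream systems stay compatible still depends on how consumers, and any schema registry you use, handle the new schema.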

Can Debezium handle large datasets?

Yes, Debezium is designed to handle large datasets. Performance can be optimized through proper configuration, including connector settings, Kafka topic partitioning, and hardware resources.

What is the role of Kafka Connect in Debezium?

Kafka Connect provides the framework for running Debezium connectors. It manages the lifecycle of the connectors, handles data streaming, and integrates Debezium with Kafka.

How can I monitor the performance of Debezium connectors?

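Debezium connectors expose snapshot and streaming metrics over JMX, alongside the metrics that Kafka Connect itself provides, such as message processing rates and error counts. The Kafka Connect REST API reports the state of each connector and its tasks. Tools like Prometheus and Grafana can be used to collect and visualize these metrics.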
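For a basic health check, the Kafka Connect REST API exposes `GET /connectors/{name}/status`, which returns the connector state and a list of task states. The sketch below summarizes such a response. The helper names `failed_tasks`, `is_healthy`, and `fetch_status`, and the worker URL and connector name used with them, are assumptions for illustration.

```python
import json
from typing import Any, Dict, List
from urllib.request import urlopen


def failed_tasks(status: Dict[str, Any]) -> List[int]:
    """Return the ids of tasks whose state is FAILED."""
    return [t["id"] for t in status.get("tasks", []) if t.get("state") == "FAILED"]


def is_healthy(status: Dict[str, Any]) -> bool:
    """True when the connector is RUNNING and no task has failed."""
    connector_ok = status.get("connector", {}).get("state") == "RUNNING"
    return connector_ok and not failed_tasks(status)


def fetch_status(connect_url: str, name: str) -> Dict[str, Any]:
    """Fetch the status document for one connector from a Connect worker."""
    with urlopen(f"{connect_url}/connectors/{name}/status", timeout=10) as resp:
        return json.load(resp)
```

A scheduled job could call `fetch_status("http://localhost:8083", "inventory-connector")` (both values hypothetical) and raise an alert whenever `is_healthy` returns `False`.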