Embarking on the journey of serverless computing often begins with Azure Functions, a powerful and flexible platform for executing code without the complexities of managing infrastructure. This guide shows how to write your first Azure Function with C#, turning complex concepts into actionable steps. We will explore the core tenets of serverless architecture, its benefits, and the various trigger types that initiate function execution, with a focus on the advantages of C# for function development.

This exploration will traverse the landscape from setting up your development environment, including essential tools and configurations, to crafting a “Hello World” HTTP trigger function. We’ll delve into the intricacies of input and output bindings, enabling your functions to interact seamlessly with various Azure services. Furthermore, the guide will cover critical aspects like function parameters, HTTP request handling, logging, monitoring, authentication, authorization, and robust error management.

Finally, it covers additional triggers, deployment strategies, and function management techniques, rounding out what you need to write your first Azure Function with C#.

Introduction to Azure Functions and C#

Azure Functions provides a serverless compute service that allows developers to run event-triggered code without managing infrastructure. This approach significantly simplifies application development, deployment, and scaling, enabling a focus on code rather than server administration. The combination of Azure Functions with C# offers a powerful and versatile platform for building various applications, from simple automation tasks to complex API endpoints.

Core Concepts of Serverless Computing

Serverless computing abstracts away the underlying infrastructure, allowing developers to execute code without provisioning or managing servers. The cloud provider handles server management, scaling, and resource allocation automatically. This paradigm shift offers several key advantages.

- Automatic Scaling: The platform dynamically allocates resources based on demand. Functions automatically scale up or down in response to incoming requests or events. This eliminates the need for manual scaling and ensures optimal resource utilization.

- Pay-per-use Pricing: Users are charged only for the actual compute time their functions consume. This contrasts with traditional hosting models where resources are provisioned and paid for regardless of usage. This can lead to significant cost savings, especially for applications with variable workloads.

- Event-Driven Architecture: Serverless functions are typically triggered by events, such as HTTP requests, database updates, or messages in a queue. This event-driven approach promotes loose coupling and enables the creation of highly responsive and scalable applications.

- Reduced Operational Overhead: The cloud provider handles all server management tasks, including patching, security updates, and infrastructure maintenance. This frees up developers to focus on writing code and delivering value to the business.

Benefits of Using Azure Functions Over Traditional Hosting

Choosing Azure Functions over traditional hosting models presents compelling advantages, particularly concerning operational efficiency, scalability, and cost optimization.

- Simplified Deployment and Management: Azure Functions simplifies the deployment process. Developers can upload their code and configure triggers directly through the Azure portal, command-line interface (CLI), or integrated development environments (IDEs). The platform handles the underlying infrastructure management.

- Scalability and High Availability: Azure Functions automatically scales based on demand, ensuring optimal performance even during peak traffic periods. The platform provides built-in high availability, minimizing downtime and ensuring application resilience. For example, a website that experiences a sudden surge in traffic during a promotional event can rely on Azure Functions to scale automatically and handle the increased load without manual intervention.

- Cost Efficiency: The pay-per-use pricing model of Azure Functions can lead to significant cost savings compared to traditional hosting models, especially for applications with variable workloads. For instance, on the Consumption plan, a function that processes data once a day is billed only for the duration of that single execution (plus the storage account every function app uses), which is considerably more cost-effective than maintaining a server running 24/7.

- Rapid Development and Iteration: The ease of deployment and management allows developers to rapidly prototype, test, and iterate on their code. This accelerates the development lifecycle and enables faster time-to-market.

Different Trigger Types Available for Azure Functions

Azure Functions supports various trigger types that define how and when a function is executed. These triggers enable developers to build event-driven applications that respond to different types of events.

- HTTP Trigger: An HTTP trigger allows a function to be invoked by an HTTP request. This is commonly used to create REST APIs, webhooks, and other web-based endpoints. The function receives the HTTP request and can process the data, return responses, and interact with other services.

- Timer Trigger: A timer trigger allows a function to be executed on a scheduled basis. This is useful for tasks such as data cleanup, report generation, and periodic background processes. The schedule is defined using an NCRONTAB expression, a CRON variant with an extra leading field for seconds. For example, the expression `0 0 0 * * *` runs a function daily at midnight (UTC by default) to generate a daily report.

- Queue Trigger: A queue trigger allows a function to be triggered when a new message is added to an Azure Storage queue. This is useful for decoupling components of an application and enabling asynchronous processing. For example, a web application can add messages to a queue, and a separate function can process those messages asynchronously.

- Blob Trigger: A blob trigger allows a function to be triggered when a new blob (file) is added to Azure Blob Storage. This is useful for tasks such as image processing, file validation, and data transformation. When a new image is uploaded to a storage container, a function can automatically resize it and store it in a different container.

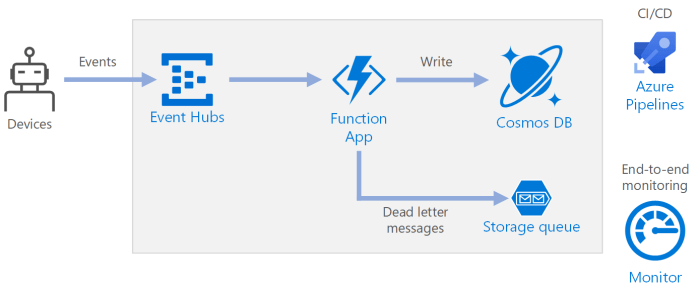

- Cosmos DB Trigger: A Cosmos DB trigger allows a function to be triggered when changes occur in a Cosmos DB database. This is useful for real-time data processing, event-driven architectures, and building reactive applications. When a new document is added to a Cosmos DB collection, a function can be triggered to process the data and update other systems.

- Event Hub Trigger: An Event Hub trigger allows a function to be triggered when events are sent to an Azure Event Hub. This is useful for processing high-volume event streams, such as telemetry data and application logs.
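The timer and queue triggers described above are declared with attributes in the same way as the HTTP trigger. The following minimal sketch assumes the in-process C# model (the `Microsoft.Azure.WebJobs` attributes, with the Storage queues extension installed for `QueueTrigger`); the `BackgroundFunctions` class, both function names, the `orders` queue, and the report logic are hypothetical placeholders.

```csharp
using System;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

namespace MyFunctionApp
{
    public static class BackgroundFunctions
    {
        // NCRONTAB fields: {second} {minute} {hour} {day} {month} {day-of-week}.
        // "0 0 0 * * *" fires once a day at 00:00:00 (UTC unless a time zone is configured).
        [FunctionName("DailyReport")]
        public static void RunDailyReport(
            [TimerTrigger("0 0 0 * * *")] TimerInfo timer,
            ILogger log)
        {
            // IsPastDue is true when this invocation starts later than scheduled.
            if (timer.IsPastDue)
            {
                log.LogWarning("Daily report is running late.");
            }

            log.LogInformation($"Generating daily report at {DateTime.UtcNow:O}");
        }

        // Runs once for each message added to the hypothetical "orders" queue.
        // "AzureWebJobsStorage" names the app setting holding the storage connection string.
        [FunctionName("ProcessOrderMessage")]
        public static void RunOrderMessage(
            [QueueTrigger("orders", Connection = "AzureWebJobsStorage")] string message,
            ILogger log)
        {
            log.LogInformation($"Processing order message: {message}");
        }
    }
}
```

Neither function is called directly by your code: the runtime invokes `DailyReport` on its schedule and `ProcessOrderMessage` whenever a message lands in the queue.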

Advantages of Using C# for Azure Function Development

C# offers several advantages for developing Azure Functions, making it a popular choice for many developers.

- Mature and Well-Supported Ecosystem: C# has a mature, well-established ecosystem with a large collection of frameworks, tools, and libraries. This rich ecosystem simplifies development and provides pre-built components for common tasks.

- Strong Typing and Compile-Time Safety: C# is a strongly-typed language, which helps catch errors early in the development process. Compile-time safety reduces the likelihood of runtime errors and improves code reliability.

- Performance and Efficiency: C# is a compiled language that offers excellent performance and efficiency. This is crucial for serverless functions, where execution time and resource consumption directly impact cost.

- Integration with the .NET Ecosystem: C# seamlessly integrates with the .NET ecosystem, providing access to a wide range of .NET libraries and frameworks. This allows developers to leverage their existing .NET skills and expertise.

- Cross-Platform Development: With .NET Core and .NET, C# supports cross-platform development, so you can build functions on Windows, Linux, or macOS and host them on Windows or Linux function apps.

Setting up the Development Environment

Establishing a robust development environment is paramount for efficiently creating and deploying Azure Functions. This section outlines the necessary steps to prepare your system for C# Azure Functions development, ensuring a streamlined and productive workflow. The process involves installing essential tools, configuring a development environment, and setting up the Azure infrastructure.

Installing and Configuring Azure Functions Core Tools

The Azure Functions Core Tools provide a command-line interface (CLI) for local development, testing, and deployment of Azure Functions. Installing these tools is a crucial first step in the development process. The following steps detail the installation process for different operating systems:

- Windows: The recommended method is to use the installer, available from the official Microsoft documentation. This installer sets up the necessary environment variables and dependencies. After downloading and running the installer, verify the installation by opening a command prompt or PowerShell and typing `func --version`. This command should display the installed version of the Core Tools.

- macOS: macOS users can install the Core Tools using Homebrew. Open a terminal and execute the command `brew tap azure/functions && brew install azure-functions-core-tools@4`. After installation, confirm the version as described above.

- Linux: Linux installations vary depending on the distribution. For Debian/Ubuntu-based systems, use the following command: `curl https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor > microsoft.gpg && sudo mv microsoft.gpg /etc/apt/trusted.gpg.d/microsoft.gpg && sudo sh -c 'echo "deb [arch=$(dpkg --print-architecture)] https://packages.microsoft.com/repos/azure-functions-release/ $(lsb_release -cs) main" > /etc/apt/sources.list.d/azure-functions.list' && sudo apt-get update && sudo apt-get install azure-functions-core-tools-4`. The `-4` package installs version 4.x of the Core Tools, the currently supported version. For other distributions, refer to the official Microsoft documentation for specific instructions.

Verify the installation with `func --version`.

After installation, some configuration is usually needed for local testing. Settings used during local development, such as connection strings, live in each project’s `local.settings.json` file. If you test against a local storage emulator such as Azurite, set `AzureWebJobsStorage` in that file to `UseDevelopmentStorage=true` so the tools use local storage instead of an Azure storage account.

Installing Visual Studio or Visual Studio Code with Necessary Extensions

Choosing an appropriate Integrated Development Environment (IDE) is essential for efficient code writing, debugging, and project management. Both Visual Studio and Visual Studio Code are popular choices, each offering distinct advantages. The steps for installing and configuring each IDE are outlined below:

- Visual Studio:

- Download the latest version of Visual Studio from the official Microsoft website.

- During installation, select the “Azure development” workload. This workload includes the tools and SDKs needed for C# development along with the Azure Functions tooling. Ensure that the latest .NET SDK is installed.

- In current Visual Studio versions, the Azure Functions tools (project templates, local debugging, and deployment capabilities) are installed with the Azure development workload, so no separate Marketplace extension is required.

- Configure the Azure sign-in to enable access to your Azure subscription from within Visual Studio.

- Visual Studio Code:

- Download and install Visual Studio Code from the official website.

- Install the following extensions from the Visual Studio Code Marketplace:

- C# for Visual Studio Code (powered by OmniSharp): Provides language support for C#, including syntax highlighting, code completion, and debugging.

- Azure Functions: Offers templates, debugging support, and deployment capabilities specifically tailored for Azure Functions.

- Azure Account: Enables sign-in to your Azure account directly from within Visual Studio Code.

- Configure the Azure sign-in to enable access to your Azure subscription.

The choice between Visual Studio and Visual Studio Code often depends on personal preference and project complexity. Visual Studio offers a more comprehensive set of features and is well-suited for large, complex projects. Visual Studio Code is a lightweight, cross-platform editor that is ideal for smaller projects and rapid development. Both IDEs provide excellent support for Azure Functions development through extensions.

Setting up an Azure Subscription and Resource Group

Before creating and deploying Azure Functions, it is necessary to have an active Azure subscription and a resource group to organize and manage the resources. The following steps outline the process:

- Obtaining an Azure Subscription:

- If you do not already have one, create an Azure account at the Azure website. New users may be eligible for a free trial, which provides a limited amount of resources for testing and development.

- After creating an account, choose a subscription offer that suits your needs, such as pay-as-you-go or an enterprise agreement.

- Creating a Resource Group:

- Navigate to the Azure portal (portal.azure.com) and log in with your Azure account.

- In the Azure portal, search for “Resource groups” and select the corresponding service.

- Click on “+ Create” to create a new resource group.

- Provide the following information:

- Subscription: Select your Azure subscription.

- Resource group name: Choose a unique name for your resource group (e.g., “my-function-app-rg”).

- Region: Select a region where you want to deploy your resources. Choose a region close to your users or where you want to host your application.

- Click “Review + create” and then “Create” to deploy the resource group.

A resource group acts as a logical container for your Azure resources, such as function apps, storage accounts, and other related services. Organizing resources into resource groups simplifies management, deployment, and cost tracking. For example, you can delete the entire resource group to remove all the associated resources in a single operation.

Creating a New Azure Functions Project in C#

After setting up the development environment and Azure infrastructure, the next step is to create a new Azure Functions project in C#. The steps for creating a new project are:

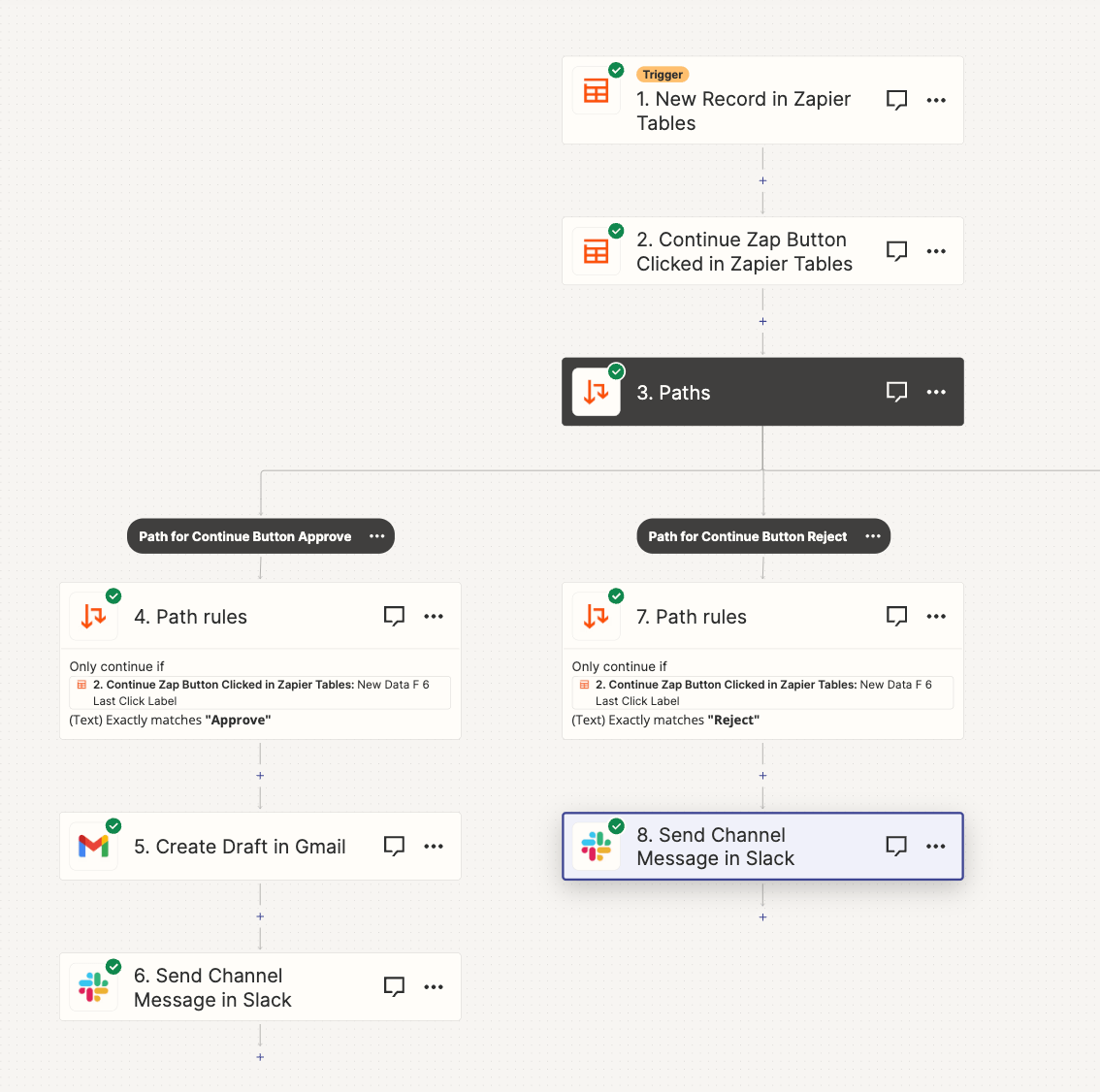

- Using Visual Studio:

- Open Visual Studio and select “Create a new project.”

- In the project template search box, type “Azure Functions” and select the “Azure Functions” template.

- Click “Next” and provide a project name and location.

- Click “Create.”

- In the “Create a new Azure Functions application” dialog, select the following options:

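- Runtime: Choose the in-process “.NET” option to match the examples in this guide, or an isolated worker option, which is the model Microsoft recommends for new projects. The exact labels vary between Visual Studio versions.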

- Trigger: Select a trigger type (e.g., “HTTP trigger”).

- Storage account: Select an existing storage account or create a new one.

- Authorization level: Choose an authorization level (e.g., “Anonymous” for public access).

- Click “Create” to generate the project. Visual Studio will create a project with a pre-configured function, including necessary dependencies and code.

- Using Visual Studio Code:

- Open Visual Studio Code and install the Azure Functions extension (if not already installed).

- Open the Command Palette (Ctrl+Shift+P or Cmd+Shift+P) and type “Azure Functions: Create New Project…”

- Select a project folder location.

- Select a language (C#).

- Select a template (e.g., “HTTP trigger”).

- Provide a function name.

- Select an authorization level (e.g., “Anonymous”).

- Select how you would like to open the project, either in the current window or in a new window.

- Visual Studio Code will generate a new project with a pre-configured function, including necessary dependencies and code.

- Using Azure Functions Core Tools (CLI):

- Open a terminal or command prompt.

- Navigate to the directory where you want to create the project.

- Run the following command to create a new project: `func init --dotnet`. This command creates a new function app project with the default settings in the current directory.

- If you also passed a project name to `func init`, it creates a folder with that name instead; navigate into that folder with `cd` before continuing.

- Create a new function using the command: `func new`.

- Select a trigger type (e.g., “HTTP trigger”).

- Provide a function name.

- The Core Tools will generate a new function with the selected trigger, including necessary dependencies and code.

After creating the project, you can start customizing the function code to implement the desired functionality. The initial project structure typically includes a `*.csproj` file, a `local.settings.json` file (for local configuration), and a function file (e.g., `HttpTriggerCSharp.cs`). The `local.settings.json` file stores settings used during local development, such as connection strings for storage accounts.

Creating a Simple HTTP Trigger Function

This section outlines the creation and deployment of a fundamental HTTP trigger Azure Function using C#. This function type is a core building block for many serverless applications, enabling responses to web requests. We will explore the essential components of an HTTP trigger, provide a “Hello World” example, and detail the steps for local testing and deployment to Azure.

Basic Structure of an HTTP Trigger Function in C#

The structure of an HTTP trigger function in C# is defined by attributes and parameters within a C# class. The core elements are:

- Function Definition: The function is typically defined as a static method within a C# class. This method is decorated with the `[FunctionName("FunctionName")]` attribute, which specifies the name of the function as it will be known in Azure. The name should be descriptive and unique within your function app.

- HTTP Trigger Attribute: The `[HttpTrigger]` attribute is applied to the function’s input parameter, typically of type `HttpRequest`. This attribute specifies the HTTP methods (e.g., GET, POST, PUT, DELETE) that the function responds to, as well as the route (URL path) for the function. The `AuthorizationLevel` argument controls access to the function, with options such as `Anonymous`, `Function`, and `Admin`.

- Input Parameter (`HttpRequest`): The function accepts an `HttpRequest` parameter, which provides access to the incoming request’s details, including headers, query parameters, body, and other relevant information.

- Output (`IActionResult`): The function returns an `IActionResult`, representing the HTTP response. This allows you to control the response status code (e.g., 200 OK, 400 Bad Request, 500 Internal Server Error), response headers, and response body (e.g., JSON, plain text, HTML).

Code Snippet for a “Hello World” HTTP Trigger Function

The following C# code demonstrates a simple “Hello World” HTTP trigger function. This example is a fundamental demonstration of how to create a function that responds to an HTTP request with a text message.

```csharp
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Extensions.Logging;

namespace MyFunctionApp
{
    public static class HelloWorldFunction
    {
        [FunctionName("HelloWorld")]
        public static IActionResult Run(
            [HttpTrigger(AuthorizationLevel.Anonymous, "get", "post", Route = null)] HttpRequest req,
            ILogger log)
        {
            log.LogInformation("C# HTTP trigger function processed a request.");

            // Read the optional "name" query-string parameter (null when absent).
            string name = req.Query["name"];

            string responseMessage = string.IsNullOrEmpty(name)
                ? "This HTTP triggered function executed successfully. Pass a name in the query string for a personalized response."
                : $"Hello, {name}. This HTTP triggered function executed successfully.";

            // OkObjectResult produces a 200 OK response with the message as its body.
            return new OkObjectResult(responseMessage);
        }
    }
}
```

The code defines a function named `HelloWorld` within the `MyFunctionApp` namespace. The `[HttpTrigger]` attribute accepts GET and POST requests and sets the authorization level to `Anonymous`, meaning anyone can call the function. The function reads the `name` query parameter and returns a personalized greeting, or a default message if no name is provided. `OkObjectResult` creates a 200 OK HTTP response with the message as the body, and the `ILogger` parameter, supplied by the runtime, writes log entries.

Testing the Function Locally Using the Azure Functions Core Tools

Testing Azure Functions locally is crucial for development and debugging before deploying to Azure. The Azure Functions Core Tools provide a command-line interface for local function execution.

- Installation: Ensure the Azure Functions Core Tools are installed. Besides the platform-specific installers, they can be installed with the npm package manager (`npm install -g azure-functions-core-tools`).

- Starting the Local Function Runtime: Navigate to the directory containing your function app’s project files (e.g., the directory with your `.csproj` file). Then run `func start` in the command line or terminal. This starts the local Azure Functions runtime.

- Testing the Function: The `func start` command displays the URLs of your HTTP trigger functions. For the “Hello World” example, it will likely show a URL similar to `http://localhost:7071/api/HelloWorld`. You can test the function by sending HTTP requests to this URL using a web browser, Postman, or `curl`. For example, to test with a name parameter, use `http://localhost:7071/api/HelloWorld?name=YourName`.

- Debugging: You can set breakpoints in your C# code and debug the function using a debugger (e.g., Visual Studio, Visual Studio Code). The local runtime integrates with these debuggers.

Process of Deploying the Function to Azure

Deploying an Azure Function to Azure involves several steps, which include creating a Function App in the Azure portal, configuring the deployment settings, and publishing the code.

- Create a Function App in the Azure Portal: In the Azure portal, create a new Function App resource. Provide a unique name, select a region, choose a runtime stack (e.g., .NET), and select a hosting plan (Consumption plan for pay-as-you-go or App Service plan for dedicated resources).

- Configure Deployment Settings: Within the Function App resource, configure your deployment settings. Several deployment methods are available, including:

- Zip Deploy: Upload a zip file containing your function app code.

- Continuous Deployment (e.g., GitHub Actions, Azure DevOps): Configure a continuous integration/continuous deployment (CI/CD) pipeline to automatically deploy code changes from a source control repository. This is the preferred approach for ongoing development.

- Local Git Deployment: Deploy from a local Git repository.

- Publish the Code: Based on the deployment method selected, publish your function app code.

- Zip Deploy: Use the Azure CLI or Azure portal to upload the zip file.

- Continuous Deployment: The CI/CD pipeline will automatically build and deploy your code when changes are pushed to the source control repository.

- Local Git Deployment: Push your local Git repository to the Function App’s Git repository.

- Testing the Deployed Function: After deployment, the Azure portal shows the URL of your deployed function. You can test the function by sending HTTP requests to this URL using a web browser, Postman, or `curl`. For the “Hello World” example, the URL has the form `https://yourfunctionappname.azurewebsites.net/api/HelloWorld` (replace `yourfunctionappname` with the actual name of your Function App).

Function Input and Output Bindings

Azure Functions leverages bindings to simplify the interaction with external resources, streamlining data flow and reducing boilerplate code. Input bindings provide a declarative way to retrieve data from various sources, such as storage accounts, queues, and databases, making the data readily available to the function. Output bindings, conversely, allow functions to write data to diverse destinations, including storage, queues, and databases, without the need for explicit SDK calls.

This declarative approach enhances code readability and maintainability, allowing developers to focus on the core business logic.

Using Input Bindings to Retrieve Data

Input bindings streamline data retrieval from external sources. In C#, they are declared as attributes on function parameters, and the Azure Functions runtime handles the rest: when the function executes, the runtime retrieves the data and passes it to the function code as a parameter value.

- Blob Storage: Input bindings can retrieve data from Azure Blob Storage. For example, a function can be triggered by a new blob being created, and the input binding can provide the content of the blob as a string or stream. This is particularly useful for processing images, documents, or any other type of file stored in blob storage.

- Queue Storage: Input bindings facilitate reading messages from Azure Queues. A function can be triggered by a new message in a queue, and the input binding can provide the message content as a string or a custom object. This enables asynchronous processing of tasks, where the function acts as a consumer of messages in a queue.

- Table Storage: Input bindings can retrieve data from Azure Table Storage. A function can read a specific entity from a table based on its partition key and row key. This is useful for retrieving configuration settings, user profiles, or other structured data stored in table storage.

- Cosmos DB: Input bindings provide the capability to retrieve data from Cosmos DB. Functions can read a specific document based on its ID. This allows for easy integration with document databases for data retrieval.
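A minimal sketch of an input binding: an HTTP trigger paired with a Blob input binding, so the runtime reads the blob named by the `{name}` route segment and passes its contents in as a string. It assumes the in-process C# model with the Storage blobs extension installed, an `AzureWebJobsStorage` connection setting, and a hypothetical `config` container; it also assumes the binding supplies `null` when the requested blob does not exist.

```csharp
using System.IO;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;

namespace MyFunctionApp
{
    public static class GetConfigFunction
    {
        [FunctionName("GetConfig")]
        public static IActionResult Run(
            [HttpTrigger(AuthorizationLevel.Function, "get", Route = "config/{name}")] HttpRequest req,
            // {name} is resolved from the route: GET /api/config/settings.json reads config/settings.json.
            [Blob("config/{name}", FileAccess.Read, Connection = "AzureWebJobsStorage")] string content)
        {
            // Assumption: the binding passes null when the blob does not exist.
            if (content is null)
            {
                return new NotFoundResult();
            }

            return new OkObjectResult(content);
        }
    }
}
```

The function body never touches the Storage SDK: the blob read happens before `Run` is invoked.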

Using Output Bindings to Write Data

Output bindings simplify writing data to various destinations. Similar to input bindings, output bindings are defined in the function’s configuration, and the Azure Functions runtime handles the actual writing of data. This abstraction allows developers to focus on the logic of the function without needing to manage the details of the data storage or service interactions.

- Blob Storage: Output bindings allow functions to write data to Azure Blob Storage. A function can, for example, generate a report and use an output binding to save the report as a blob in a storage account.

- Queue Storage: Output bindings enable functions to add messages to Azure Queues. A function can, for instance, process an order and then use an output binding to add a message to a queue to notify another service about the completed order.

- Table Storage: Output bindings facilitate writing data to Azure Table Storage. A function can, for example, update a user profile and use an output binding to write the updated profile data to a table.

- Cosmos DB: Output bindings allow functions to write data to Cosmos DB. Functions can create or update documents within a database. This is useful for storing results, updating database entries, or triggering other events based on function execution.

- HTTP Response: Output bindings allow functions to return data as an HTTP response. This enables functions to serve as APIs, returning JSON, XML, or other data formats to the caller.
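The order-notification case can be sketched with a Queue output binding. Declaring an `out string` parameter with the `[Queue]` attribute is enough: the value assigned to it is written to the queue as a message after the function returns. This assumes the in-process C# model with the Storage queues extension; the `CompleteOrder` function, its route, the `order-notifications` queue, and the message text are hypothetical.

```csharp
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;

namespace MyFunctionApp
{
    public static class CompleteOrderFunction
    {
        [FunctionName("CompleteOrder")]
        public static IActionResult Run(
            [HttpTrigger(AuthorizationLevel.Function, "post", Route = "orders/{orderId}/complete")] HttpRequest req,
            string orderId, // bound by name from the {orderId} route segment
            [Queue("order-notifications", Connection = "AzureWebJobsStorage")] out string notification)
        {
            // The runtime enqueues this value once the function returns.
            notification = $"Order {orderId} completed";

            // 202 Accepted: the follow-up work happens asynchronously via the queue.
            return new AcceptedResult();
        }
    }
}
```

Because `out` parameters cannot be used in `async` methods, asynchronous functions typically use an `IAsyncCollector<T>` parameter for the same purpose.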

Common Input and Output Binding Scenarios

Input and output bindings are often used together to create complex data processing pipelines. For example, a function might use an input binding to read a message from a queue, process the message, and then use an output binding to write the results to blob storage.

- Image Processing: A function is triggered by a new image uploaded to blob storage (input binding). The function processes the image (e.g., resizing, adding watermarks) and then saves the processed image back to blob storage (output binding).

- Order Processing: A function receives an order message from a queue (input binding). The function validates the order, updates the order status in a table (output binding), and sends a confirmation email (output binding).

- Data Transformation: A function reads data from a Cosmos DB collection (input binding), transforms the data, and writes the transformed data to another Cosmos DB collection (output binding).
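The image-processing scenario combines a Blob trigger with a Blob output binding. In this sketch the processing step is deliberately a plain byte copy, because real resizing or watermarking would need an imaging library; the `uploads` and `processed` containers and the function name are hypothetical, and the in-process C# model with the Storage blobs extension is assumed.

```csharp
using System.IO;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

namespace MyFunctionApp
{
    public static class ProcessImageFunction
    {
        [FunctionName("ProcessUploadedImage")]
        public static async Task Run(
            // Fires for new or updated blobs in "uploads"; {name} captures the blob name.
            [BlobTrigger("uploads/{name}", Connection = "AzureWebJobsStorage")] Stream input,
            string name,
            // Writes a blob with the same name into the separate "processed" container.
            [Blob("processed/{name}", FileAccess.Write, Connection = "AzureWebJobsStorage")] Stream output,
            ILogger log)
        {
            log.LogInformation($"Processing uploaded image {name}");

            // Placeholder for real work (resizing, watermarking): copy the bytes unchanged.
            await input.CopyToAsync(output);
        }
    }
}
```

Writing to a different container than the one being watched matters: if the output path matched the trigger path, each processed blob would trigger the function again.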

Binding Types, Purpose, and Examples

The following table summarizes different binding types, their purpose, and provides examples:

| Binding Type | Purpose | Example Input | Example Output |
|---|---|---|---|
| Blob Storage | Read/write data from/to Azure Blob Storage. | Read the content of a specific blob when a new blob is created. | Save a processed image to blob storage. |
| Queue Storage | Read/write messages from/to Azure Queue Storage. | Read a message from a queue when a new message arrives. | Add a message to a queue to trigger another function. |
| Table Storage | Read/write data from/to Azure Table Storage. | Read a specific entity from a table based on its partition key and row key. | Update the status of an order in a table. |
| Cosmos DB | Read/write data from/to Azure Cosmos DB. | Read a specific document from a collection. | Create a new document in a Cosmos DB collection. |
| HTTP | Trigger a function via HTTP and return an HTTP response. | Receive an HTTP request with a specific payload. | Return a JSON response containing the results of a calculation. |

Working with Function Parameters and HTTP Requests

Azure Functions, particularly those triggered by HTTP requests, offer a versatile mechanism for building web APIs and integrating with various services. Understanding how to effectively handle request parameters, different HTTP methods, response codes, and content types is crucial for developing robust and scalable function applications. This section will explore these aspects in detail, providing practical examples using C# to illustrate their implementation.

Accessing Request Parameters

HTTP requests carry data through various means, including query strings, route parameters, and request bodies. Azure Functions provide convenient access to these parameters within the function code.

- Query Strings: Query strings are appended to the URL after a question mark (?). They consist of key-value pairs separated by ampersands (&). For example, in the URL `https://example.com/api/myfunction?name=John&age=30`, `name` and `age` are query parameters. In C#, you can access these parameters using the `HttpRequest` object passed to your function. Its `Query` property is an `IQueryCollection`, which lets you retrieve parameter values by their key.

- Route Parameters: Route parameters are embedded within the URL path itself. They are defined using curly braces (`{}`) in the function’s route template. For instance, in the route `users/{id}` (served at `/api/users/{id}` under the default `api` prefix), `id` is a route parameter. In a C# function, the simplest way to read it is to declare a method parameter with the same name as the route parameter; the value is also exposed through the `RouteValues` property of the `HttpRequest` object (`req.RouteValues`), a dictionary-like structure keyed by the parameter names defined in the route.

- Request Body: The request body is used to send data in the request, typically with POST, PUT, and PATCH methods. The data can be in various formats, such as JSON or XML. To access the request body, you can read the body content from the `HttpRequest` object. The content is usually read as a string, which can then be deserialized into a suitable object using libraries like `Newtonsoft.Json` or the built-in `System.Text.Json`.
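The three sources of request data can be combined in one function. This minimal sketch assumes the in-process C# model; the `UpdateUser` function, the `users/{id}` route, the optional `notify` query parameter, and the `UserUpdate` payload class are hypothetical. The route value arrives through a method parameter whose name matches the `{id}` placeholder, and the body is parsed with the built-in `System.Text.Json`.

```csharp
using System;
using System.IO;
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;

namespace MyFunctionApp
{
    // Hypothetical shape of the JSON request body.
    public class UserUpdate
    {
        public string Name { get; set; }
        public int Age { get; set; }
    }

    public static class UpdateUserFunction
    {
        private static readonly JsonSerializerOptions JsonOptions =
            new JsonSerializerOptions { PropertyNameCaseInsensitive = true };

        [FunctionName("UpdateUser")]
        public static async Task<IActionResult> Run(
            [HttpTrigger(AuthorizationLevel.Function, "put", Route = "users/{id}")] HttpRequest req,
            string id) // route parameter, bound by name from {id}
        {
            // Query string: ?notify=true is optional; a missing key converts to null.
            string notifyText = req.Query["notify"];
            bool notify = string.Equals(notifyText, "true", StringComparison.OrdinalIgnoreCase);

            // Request body: read the raw text, then deserialize the JSON.
            string body = await new StreamReader(req.Body).ReadToEndAsync();
            UserUpdate update;
            try
            {
                update = JsonSerializer.Deserialize<UserUpdate>(body, JsonOptions);
            }
            catch (JsonException)
            {
                return new BadRequestObjectResult("Request body must be valid JSON.");
            }

            if (update is null || string.IsNullOrEmpty(update.Name))
            {
                return new BadRequestObjectResult("The body must include a \"name\" property.");
            }

            string suffix = notify ? " (notification requested)" : "";
            return new OkObjectResult($"User {id} updated to {update.Name}, age {update.Age}{suffix}");
        }
    }
}
```

For example, `PUT /api/users/7?notify=true` with the body `{"name":"Ada","age":36}` returns `User 7 updated to Ada, age 36 (notification requested)`, while malformed JSON or a missing `name` produces a 400 Bad Request.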

Handling HTTP Methods

Different HTTP methods (GET, POST, PUT, DELETE, etc.) are used to perform different actions on resources. Azure Functions allow you to handle these methods and implement the appropriate logic.

- GET: Used to retrieve data from a server. In an Azure Function, you might use GET to fetch data based on query parameters or route parameters.

- POST: Used to submit data to be processed to a server. POST requests often contain data in the request body, such as JSON payloads.

- PUT: Used to update an existing resource on the server. PUT requests typically include the entire representation of the resource being updated.

- DELETE: Used to delete a resource on the server. DELETE requests often specify the resource to be deleted using route parameters.

- PATCH: Used to partially update an existing resource. PATCH requests typically contain only the changes to be applied to the resource.

The `HttpTrigger` attribute (not `[FunctionName]`, which only names the function) specifies the HTTP methods that the function responds to. Its `methods` parameter is a `params string[]`, so you can list several methods after the authorization level. For example, `[HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] HttpRequest req` allows the function to be triggered by GET and POST requests. If no methods are listed, the function responds to all HTTP methods.
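When one function accepts several methods, its body typically branches on `req.Method`. The sketch below isolates that branching as a plain function that maps a method name to the status code a typical REST handler might return. The class name `MethodDispatchSketch` is hypothetical, and the mapping (for example, 201 for POST, 405 for anything unlisted) is an illustrative convention, not something the runtime enforces.

```csharp
using System;
using System.Net;

public static class MethodDispatchSketch
{
    // Maps an HTTP method (as found in req.Method) to the status a typical handler returns.
    public static HttpStatusCode Handle(string method)
    {
        // Method names are case-sensitive per the HTTP spec; normalizing is a defensive choice.
        switch (method.ToUpperInvariant())
        {
            case "GET": return HttpStatusCode.OK;             // read a resource
            case "POST": return HttpStatusCode.Created;       // create a resource
            case "PUT":                                       // full update
            case "PATCH": return HttpStatusCode.OK;           // partial update
            case "DELETE": return HttpStatusCode.NoContent;   // deleted, nothing to return
            default: return HttpStatusCode.MethodNotAllowed;  // 405 for anything else
        }
    }
}
```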

Returning HTTP Response Codes and Content Types

Returning appropriate HTTP response codes and content types is essential for building well-behaved APIs. Azure Functions provide mechanisms to control the response.

- HTTP Response Codes: HTTP response codes indicate the outcome of the request. Common codes include:

- 200 OK: Success. The request was successful.

- 201 Created: Success. A new resource was created.

- 204 No Content: Success. The request was successful, but there is no content to return.

- 400 Bad Request: Client error. The request was malformed.

- 401 Unauthorized: Client error. Authentication is required.

- 403 Forbidden: Client error. The server understood the request, but refuses to authorize it.

- 404 Not Found: Client error. The requested resource was not found.

- 500 Internal Server Error: Server error. An unexpected error occurred on the server.

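- Content Types: The `Content-Type` header specifies the format of the response body (e.g., `application/json`, `text/plain`). With an `HttpResponseMessage`, it is set on the content object, for example by passing a media type to the `StringContent` constructor.

In C#, you can return an `HttpResponseMessage` to control the response: pass the status code to its constructor (or set the `StatusCode` property) and assign the body to its `Content` property. In the in-process model, returning an `IActionResult` such as `OkObjectResult`, `StatusCodeResult`, or `ContentResult` (which has a `ContentType` property) is the more common alternative.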
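A minimal sketch of building responses with an explicit status code and `Content-Type`, using only `System.Net.Http`. The helper names `ResponseSketch.Created` and `ResponseSketch.NotFound` and the example `Location` URL are illustrative assumptions; a function would simply return the resulting `HttpResponseMessage`.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Text;

public static class ResponseSketch
{
    // 201 Created with a JSON body and a Location header pointing at the new resource.
    public static HttpResponseMessage Created(string json, Uri location)
    {
        var response = new HttpResponseMessage(HttpStatusCode.Created)
        {
            // StringContent sets Content-Type: application/json; charset=utf-8
            Content = new StringContent(json, Encoding.UTF8, "application/json")
        };
        response.Headers.Location = location;
        return response;
    }

    // 404 Not Found with a plain-text explanation.
    public static HttpResponseMessage NotFound(string message) =>
        new HttpResponseMessage(HttpStatusCode.NotFound)
        {
            Content = new StringContent(message, Encoding.UTF8, "text/plain")
        };
}
```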

Processing JSON Data

JSON (JavaScript Object Notation) is a widely used format for exchanging data over the web. Azure Functions can easily handle JSON data sent in the request body.

- Receiving JSON Data: The request body is typically read as a string. This string can then be deserialized into a C# object using libraries like `Newtonsoft.Json` or `System.Text.Json`.

- Returning JSON Data: You can serialize a C# object into JSON and return it as the response body. Set the `Content-Type` header to `application/json` to indicate the response format.

For example, using `Newtonsoft.Json`, you could deserialize the request body using:

    string requestBody = await new StreamReader(req.Body).ReadToEndAsync();
    dynamic data = JsonConvert.DeserializeObject(requestBody);

And serialize a C# object to JSON for the response:

    string jsonResponse = JsonConvert.SerializeObject(myObject);
    return new HttpResponseMessage(HttpStatusCode.OK)
    {
        Content = new StringContent(jsonResponse, Encoding.UTF8, "application/json")
    };
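As an alternative to `dynamic`, you can deserialize into typed classes with the built-in `System.Text.Json`. This sketch assumes a hypothetical request shape `{ "name": "..." }` and a hypothetical response type; `JsonSerializerDefaults.Web` (available from .NET 5) gives camelCase property names on output and case-insensitive matching on input.

```csharp
using System;
using System.Text.Json;

// Hypothetical request and response shapes for a greeting endpoint.
public record GreetingRequest(string Name);
public record GreetingResponse(string Name, string Message);

public static class JsonSketch
{
    // Web defaults: camelCase names when writing, case-insensitive matching when reading.
    private static readonly JsonSerializerOptions Options = new(JsonSerializerDefaults.Web);

    // Turns a request body into a JSON response body.
    // Throws JsonException for malformed JSON and ArgumentException for a missing name.
    public static string Handle(string requestBody)
    {
        GreetingRequest? request = JsonSerializer.Deserialize<GreetingRequest>(requestBody, Options);
        if (request is null || string.IsNullOrWhiteSpace(request.Name))
            throw new ArgumentException("Request body must contain a non-empty 'name'.");

        var response = new GreetingResponse(request.Name, $"Hello, {request.Name}");
        return JsonSerializer.Serialize(response, Options);
    }
}
```

Typed models catch misspelled property names at compile time, which `dynamic` cannot do; a function would map the exceptions above to a 400 Bad Request.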

Logging and Monitoring Azure Functions

Robust logging and comprehensive monitoring are crucial components of any production-grade application, and Azure Functions are no exception. They provide valuable insights into function execution, helping to identify and resolve issues, optimize performance, and ensure the overall health of your serverless applications. Effective logging enables developers to understand the flow of execution, track errors, and analyze function behavior. Monitoring, on the other hand, provides a broader view of function performance, resource utilization, and potential bottlenecks.

Importance of Logging in Azure Functions

Logging in Azure Functions serves multiple critical purposes, contributing significantly to the maintainability, debuggability, and operational efficiency of your applications. It allows for the capture of detailed information about function execution, enabling proactive issue identification and resolution.

- Debugging and Troubleshooting: Logs provide a detailed trail of events, allowing developers to trace the execution path, identify errors, and pinpoint the root cause of issues. This is particularly important in a distributed, serverless environment where traditional debugging methods may be less effective.

- Performance Analysis: By logging key metrics like execution time, resource consumption, and frequency of invocations, you can identify performance bottlenecks and optimize your functions for better efficiency and scalability.

- Error Detection and Alerting: Logging facilitates the detection of errors and exceptions, allowing for the implementation of alerting mechanisms. When critical errors occur, you can be notified immediately, enabling rapid response and minimizing downtime.

- Auditing and Compliance: Logs can be used to track function invocations, input parameters, and output results, which is essential for auditing purposes and ensuring compliance with regulatory requirements.

- Application Insights Integration: Logging integrates seamlessly with Azure Application Insights, providing advanced analytics, visualization, and alerting capabilities. This allows for comprehensive monitoring of function health and performance.

Using the ILogger Interface to Write Logs

The `ILogger` interface in Azure Functions is the primary mechanism for writing logs. It provides a simple and consistent way to record information at different severity levels, making it easy to manage and analyze logs.

To use `ILogger`, you first need to inject it into your function’s constructor or as a parameter in your function’s method signature. The `ILogger` interface offers several methods for writing logs at different levels of severity:

- LogTrace: Used for detailed diagnostic information that is typically only useful during development and troubleshooting.

- LogDebug: Used for information that is helpful during debugging.

- LogInformation: Used for general information about the function’s execution, such as successful completion or key events.

- LogWarning: Used for non-critical issues or potential problems that do not necessarily indicate an error.

- LogError: Used for errors that have occurred during function execution, such as exceptions or invalid input.

- LogCritical: Used for critical errors that may cause the function to fail or impact the overall application.

Here’s an example of how to use `ILogger` in a C# Azure Function:

```csharp
using System;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

public static class MyFunction
{
    [FunctionName("MyFunction")]
    public static void Run([TimerTrigger("0 */5 * * * *")] TimerInfo myTimer, ILogger log)
    {
        log.LogInformation($"C# Timer trigger function executed at: {DateTime.Now}");
        // ScheduleStatus can be null (for example, when schedule monitoring is off).
        log.LogInformation($"Next timer schedule at: {myTimer.ScheduleStatus?.Next}");

        try
        {
            // Simulate an operation that fails. A literal "10 / 0" would not compile
            // (error CS0020), so the divisor is held in a variable.
            int divisor = 0;
            int result = 10 / divisor; // throws DivideByZeroException at run time
            log.LogInformation("Result: {Result}", result);
        }
        catch (Exception ex)
        {
            log.LogError(ex, "An error occurred during the operation.");
        }
    }
}
```

In this example, the `ILogger` instance `log` is injected into the `Run` method. The function uses `LogInformation` to log informational messages and `LogError` to log any exceptions that occur. The `LogTrace` and `LogDebug` methods can be used for detailed debugging information during development; whether their output is recorded depends on the log-level settings in the `logging.logLevel` section of `host.json`.

The `LogWarning` method is suitable for events that require attention but do not necessarily halt the function’s execution. `LogCritical` is employed for severe errors that could jeopardize the application’s functionality.

Viewing Logs in the Azure Portal

The Azure portal provides a convenient way to view logs generated by your Azure Functions. Logs can be accessed through several methods, allowing for real-time monitoring and historical analysis.

- Function App Logs: Navigate to your Function App in the Azure portal, and then select the “Monitor” section. Here, you can view a stream of logs from your function invocations, including the messages written using the `ILogger` interface.

- Log Stream: The “Log stream” feature in the “Monitor” section provides a real-time view of the logs as they are generated. This is particularly useful for debugging and monitoring function behavior in real-time.

- Application Insights: If Application Insights is enabled for your Function App, you can access more detailed logs and analytics in the Application Insights portal. This includes the ability to query logs, create custom dashboards, and set up alerts.

- Kudu Console: The Kudu console (accessible through the “Advanced Tools” section of your Function App) allows you to access the file system and view the raw log files. This can be useful for troubleshooting and analyzing logs in more detail.

The Azure portal’s monitoring tools offer a spectrum of views, ranging from live streams for immediate inspection to advanced analytics within Application Insights, ensuring a comprehensive understanding of function behavior. This multifaceted approach to log viewing provides flexibility and depth in monitoring Azure Functions.

Setting up Application Insights for Monitoring Azure Functions

Application Insights is a powerful monitoring service provided by Azure that integrates seamlessly with Azure Functions. It provides a comprehensive set of features for monitoring the health, performance, and availability of your functions.

To set up Application Insights for monitoring your Azure Functions:

- Create an Application Insights resource: If you don’t already have one, create an Application Insights resource in the Azure portal. This resource will store the telemetry data generated by your functions.

- Enable Application Insights during function app creation: When creating a new Function App in the Azure portal, you can choose to enable Application Insights. The portal will then prompt you to select an existing Application Insights resource or create a new one. This step is often the easiest way to configure the integration.

- Enable Application Insights for an existing Function App: If you already have a Function App, you can enable Application Insights by navigating to the “Application Insights” section in the Function App’s settings. You can then select an existing Application Insights resource or create a new one.

- Configure the connection app setting: Current function apps store the Application Insights connection in the `APPLICATIONINSIGHTS_CONNECTION_STRING` app setting, which Microsoft recommends over the older `APPINSIGHTS_INSTRUMENTATIONKEY` setting. This setting tells your Function App where to send the telemetry data, and it is configured automatically when you enable Application Insights through the portal.

- Use the `ILogger` interface: Messages written through `ILogger` are sent to Application Insights automatically, subject to the log-level filters in `host.json`. No extra code is required.

- Access the Application Insights data: After enabling Application Insights and deploying your function, you can view the telemetry data in the Application Insights portal. This includes logs, performance metrics, dependency information, and more.

Once Application Insights is enabled, you can leverage its features to monitor your functions effectively. For instance, the “Performance” blade provides insights into function execution times and resource utilization, helping to identify performance bottlenecks. The “Failures” blade allows you to view detailed information about function errors, including stack traces and related telemetry, aiding in rapid issue resolution. The “Live Metrics” feature offers a real-time view of function performance, enabling immediate monitoring of function health.

Custom dashboards can be created to visualize key metrics and alerts can be set up to notify you of critical events. The integration with Application Insights provides a comprehensive monitoring solution that significantly enhances the observability and maintainability of Azure Functions.

Function Authentication and Authorization

Securing Azure Functions is crucial for protecting sensitive data and ensuring that only authorized users or applications can access them. Authentication verifies the identity of a user or application, while authorization determines what they are allowed to do. Azure Functions offers several built-in mechanisms and integrates seamlessly with various identity providers to provide robust security.

Available Authentication Methods for Azure Functions

Azure Functions supports multiple authentication methods to secure your endpoints, allowing flexibility based on your security requirements and existing infrastructure.

- API Keys: This is a simple method where a secret access key must accompany each HTTP request, either in the `x-functions-key` header or in the `code` query parameter. It's suitable for basic access control and is often used for internal services or testing.

- Azure Active Directory (Azure AD, now Microsoft Entra ID): Azure AD provides a more robust and scalable authentication solution. It allows users to sign in using their existing Azure AD credentials, leveraging features like multi-factor authentication and conditional access policies. This is recommended for applications requiring enterprise-grade security.

- Managed Identities: Azure Functions can use managed identities to access other Azure resources without storing credentials in the code. This simplifies secret management and enhances security, particularly when the function needs to interact with other Azure services like storage or databases.

- Function Access Keys: These are the keys behind the API-key method above, and they come in several scopes. Function keys apply to a single function, host keys apply to every function in the Function App, and the master host key (`_master`) additionally grants administrative access.

- Custom Authentication: For more complex scenarios, you can implement custom authentication mechanisms. This might involve integrating with third-party identity providers or creating your own authentication logic.

Securing an HTTP Trigger Function Using API Keys

Implementing API key authentication is a straightforward way to secure your HTTP trigger functions. The following steps outline the process.

- Generate an API Key: In the Azure portal, navigate to your function app and select your function. Under the “Functions” section, choose “Function Keys.” Create a new key by clicking “Add.” Provide a key name and the key value will be generated. This key will be used to authenticate requests to the function. Alternatively, you can use the “Host Keys” which apply to all functions in the Function App.

- Configure the Function to Require a Key: The authorization level of the HTTP trigger decides which key, if any, a caller must present. For C# class library functions, it is the first argument of the `HttpTrigger` attribute:

- `AuthorizationLevel.Function` (the default in the templates): a function key for this function or a host key is accepted. The key is passed in the `x-functions-key` header or as a query parameter named `code`.

- `AuthorizationLevel.Admin`: only the master host key (`_master`) is accepted.

- `AuthorizationLevel.Anonymous`: no key is required.

- Implement Key Validation in the Function Code (optional): The runtime checks the key before your code runs, so requests with a missing or invalid key never reach the function. Add checks in code only for extra rules that the authorization levels cannot express.

- Testing: Test your function by sending HTTP requests with and without the API key. Requests without the key should be rejected, and requests with the correct key should be successful.
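For the testing step, a client can supply the key either in the `x-functions-key` header or as the `code` query parameter. The sketch below only builds the requests (it makes no network call), so it runs anywhere; the class name `KeyedRequestSketch`, the function URL, and the key values are placeholders.

```csharp
using System;
using System.Net.Http;

public static class KeyedRequestSketch
{
    // Builds a GET request; when a key is supplied it goes in the x-functions-key header.
    public static HttpRequestMessage Build(Uri functionUrl, string? functionKey)
    {
        var request = new HttpRequestMessage(HttpMethod.Get, functionUrl);
        if (!string.IsNullOrEmpty(functionKey))
            request.Headers.Add("x-functions-key", functionKey);
        return request;
    }

    // Alternative: append the key as the "code" query parameter instead of a header.
    public static Uri WithCodeParameter(Uri functionUrl, string functionKey)
    {
        var builder = new UriBuilder(functionUrl);
        string code = "code=" + Uri.EscapeDataString(functionKey);
        string existing = builder.Query.TrimStart('?');
        builder.Query = existing.Length == 0 ? code : existing + "&" + code;
        return builder.Uri;
    }
}
```

Sending the request built without a key should yield 401 Unauthorized, while the keyed variants should succeed.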

Implementing Authorization Using Azure Active Directory

Azure Active Directory (Azure AD) offers a powerful way to authorize access to your Azure Functions, providing secure access control based on user identities and roles. The process involves configuring your function app to use Azure AD authentication and then implementing role-based access control (RBAC).

- Register Your Function App in Azure AD:

- In the Azure portal, navigate to Azure Active Directory.

- Select “App registrations” and click “+ New registration.”

- Provide a name for your application (e.g., “MyFunctionApp”).

- Choose the appropriate “Supported account types” based on your needs (e.g., “Accounts in this organizational directory only” or “Accounts in any organizational directory and personal Microsoft accounts”).

- Set the “Redirect URI” (platform “Web”) to your function app's authentication callback, for example `https://yourfunctionapp.azurewebsites.net/.auth/login/aad/callback`.

- Click “Register.”

- Take note of the “Application (client) ID” and “Directory (tenant) ID,” as you will need them later.

- Configure Authentication in Your Function App:

- Navigate to your function app in the Azure portal.

- Under “Settings,” select “Authentication.”

- Click “+ Add identity provider.”

- Choose “Microsoft” (which represents Azure AD).

- In the “App registration” section, select “Choose an app” and select the app you registered in the previous step. You can search for your app by name. The Application (client) ID will be automatically populated.

- Configure the redirect URL.

- Under “Restrict access,” choose “Require authentication” to enforce sign-in; unauthenticated requests are then rejected or redirected to the login page.

- Click “Add.”

- Implement Role-Based Access Control with App Roles:

- In the app registration, open “App roles” and create a role (for example, `Admin`) whose allowed member types include users and groups.

- In “Enterprise applications,” open the entry for the same app, select “Users and groups,” and assign users or groups to the role. The assigned role values appear in the `roles` claim for those users.

- Optionally, set “Assignment required?” to “Yes” on the enterprise application so that only assigned users can sign in.

- Note that assignments under “Access control (IAM)” on the function app are Azure RBAC roles such as Reader or Contributor. They govern who can view or manage the Azure resource, not who can call its HTTP endpoints. Apply the principle of least privilege there as well.

- Accessing User Information in Your Function Code:

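- The signed-in user's identity is forwarded to your code in request headers. `x-ms-client-principal-id` and `x-ms-client-principal-name` carry the user ID and name, and `x-ms-client-principal` carries a Base64-encoded JSON document listing the user's claims. This header is not a JSON Web Token (JWT): decode it from Base64 and parse the JSON rather than using a JWT library. In C# class library functions, the same claims are also exposed as a `ClaimsPrincipal` through `req.HttpContext.User`.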

- Example (C#):
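A minimal sketch, assuming App Service authentication is enabled so the platform injects `x-ms-client-principal`. It decodes the Base64 value and reads the `auth_typ`, `name_typ`, `role_typ`, and `claims` (`typ`/`val`) fields of that JSON document. The class names are illustrative, and treating claims of type `roles` as roles in addition to `role_typ` is an assumption worth checking against your app's `/.auth/me` output. In a function, you would pass `req.Headers["x-ms-client-principal"]` to `Decode`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Text.Json;
using System.Text.Json.Serialization;

// Only the fields used below; the header carries a Base64-encoded JSON object of this shape.
public sealed class ClientPrincipal
{
    [JsonPropertyName("auth_typ")] public string? AuthType { get; set; }
    [JsonPropertyName("name_typ")] public string? NameType { get; set; }
    [JsonPropertyName("role_typ")] public string? RoleType { get; set; }
    [JsonPropertyName("claims")] public List<ClientPrincipalClaim> Claims { get; set; } = new();
}

public sealed class ClientPrincipalClaim
{
    [JsonPropertyName("typ")] public string Type { get; set; } = "";
    [JsonPropertyName("val")] public string Value { get; set; } = "";
}

public static class ClientPrincipalDecoder
{
    // Returns null when the header is absent (for example, an anonymous request).
    public static ClientPrincipal? Decode(string? headerValue)
    {
        if (string.IsNullOrEmpty(headerValue)) return null;
        string json = Encoding.UTF8.GetString(Convert.FromBase64String(headerValue));
        return JsonSerializer.Deserialize<ClientPrincipal>(json);
    }

    // The user's display name, located via name_typ.
    public static string? UserName(ClientPrincipal principal) =>
        principal.NameType is null
            ? null
            : principal.Claims.Where(c => c.Type == principal.NameType).Select(c => c.Value).FirstOrDefault();

    // Role values: claims whose type matches role_typ, or the "roles" claim used for app roles.
    public static IEnumerable<string> Roles(ClientPrincipal principal) =>
        principal.Claims
            .Where(c => c.Type == "roles" || c.Type == principal.RoleType)
            .Select(c => c.Value);
}
```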

Restricting Access to Specific Users or Roles

Implementing authorization using Azure AD allows you to restrict access to your functions based on user identity or assigned roles.

- Identify User or Role Information: The user's claims, including the object identifier (OID) and any assigned roles, are available in the decoded `x-ms-client-principal` header (and through `req.HttpContext.User`). Read them in your function code to make access decisions.

- Implement Access Control Logic:

- User-Based Authorization: Within your function code, you can check the user’s OID (object identifier) against a list of allowed users. If the user’s OID is not in the allowed list, the function should return an appropriate error (e.g., 403 Forbidden).

- Role-Based Authorization: Check the user’s roles against the required roles for the function. If the user doesn’t have the necessary role, deny access. This approach is more scalable as it allows you to manage access through role assignments in Azure AD.

- Example (C#):

Here is an example of role-based authorization in a C# Azure Function:

    using System;
    using System.Linq;
    using System.Text;
    using System.Text.Json;
    using Microsoft.AspNetCore.Http;
    using Microsoft.AspNetCore.Mvc;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Extensions.Http;
    using Microsoft.Extensions.Logging;

    public static class AuthorizationFunction
    {
        [FunctionName("RoleBasedAuthorization")]
        public static IActionResult Run(
            [HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] HttpRequest req,
            ILogger log)
        {
            // App Service authentication injects this header as Base64-encoded JSON (not a JWT).
            string header = req.Headers["x-ms-client-principal"];
            if (string.IsNullOrEmpty(header))
            {
                log.LogWarning("No client principal found.");
                return new UnauthorizedResult();
            }

            using JsonDocument principal = JsonDocument.Parse(
                Encoding.UTF8.GetString(Convert.FromBase64String(header)));
            JsonElement root = principal.RootElement;

            // role_typ names the role claim type; also accept "roles", the claim used for app roles.
            string roleType = root.TryGetProperty("role_typ", out JsonElement rt) ? rt.GetString() : null;

            var roles = root.GetProperty("claims").EnumerateArray()
                .Where(c =>
                {
                    string typ = c.GetProperty("typ").GetString();
                    return typ == "roles" || typ == roleType;
                })
                .Select(c => c.GetProperty("val").GetString())
                .ToList();

            // Define the required roles and check whether the user holds one of them.
            string[] requiredRoles = { "Admin" };
            if (!roles.Any(r => requiredRoles.Contains(r)))
            {
                log.LogWarning("User does not have the required role.");
                // An explicit 403 avoids ForbidResult, which delegates to the ASP.NET Core auth handler.
                return new StatusCodeResult(StatusCodes.Status403Forbidden);
            }

            // The user is authorized: proceed with the function logic.
            return new OkObjectResult("This HTTP triggered function executed successfully.");
        }
    }

This example decodes the client principal header, collects the role claims, and checks whether the user has the “Admin” role. If not, access is denied with 403 Forbidden. The roles themselves are managed in Azure AD and assigned to users or groups; claim type names can vary by identity provider, so check your app's `/.auth/me` output when adapting the filter.

- Testing: Test the function with different users and role assignments to ensure that access is correctly restricted.

Error Handling and Exception Management

Robust error handling is crucial for the reliability and maintainability of Azure Functions. Functions, by their nature, can encounter various issues, from invalid input to external service failures. Implementing effective error handling ensures that these issues are gracefully managed, preventing unexpected function terminations and providing valuable insights for debugging and monitoring. Proper error management contributes to a more resilient and user-friendly system.

Importance of Error Handling in Azure Functions

Error handling is paramount in Azure Functions for several key reasons. Functions, operating in a serverless environment, are subject to unpredictable events and external dependencies. Without robust error handling, these functions can fail silently, leading to data loss, incorrect processing, or an overall degraded user experience.

- Preventing Function Termination: Unhandled exceptions can abruptly halt a function’s execution, potentially leading to data corruption or incomplete processing. Error handling mechanisms, such as `try-catch` blocks, allow functions to gracefully recover from errors, preventing premature termination.

- Improving Reliability: Functions are designed to be resilient and handle errors. Comprehensive error handling increases the reliability of functions, ensuring they continue to operate even in the face of unexpected issues.

- Facilitating Debugging and Monitoring: Detailed error logging provides valuable information for diagnosing and resolving issues. Proper logging, coupled with monitoring tools, enables developers to quickly identify the root causes of errors and implement appropriate fixes.

- Enhancing User Experience: Error handling allows for the provision of informative error messages to users, rather than exposing technical details. This improves the overall user experience by providing clear guidance and context when something goes wrong.

- Compliance and Security: Certain errors, such as those related to data validation or access control, can have compliance or security implications. Effective error handling can help to mitigate these risks by preventing unauthorized access or data breaches.

Using `try-catch` Blocks for Exception Handling

The `try-catch` block is the cornerstone of exception handling in C# Azure Functions. It allows the function to anticipate potential errors, attempt to execute a block of code, and provide a mechanism to handle exceptions if they occur.

- `try` Block: This block contains the code that is potentially prone to exceptions. If an exception occurs within the `try` block, the program flow immediately transfers to the corresponding `catch` block.

- `catch` Block: This block specifies the type of exception to catch and contains the code to handle the exception. Multiple `catch` blocks can be used to handle different types of exceptions. If the exception type matches the one specified in the `catch` block, the code within the `catch` block is executed.

- `finally` Block: This optional block contains code that always executes, regardless of whether an exception occurred or not. This is useful for releasing resources, closing connections, or performing other cleanup tasks.

Syntax of a `try-catch` block:

    try
    {
        // Code that might throw an exception
    }
    catch (ExceptionType exception)
    {
        // Code to handle the exception
    }
    finally
    {
        // Code that always executes
    }
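To see the order in which the three blocks run, here is a small runnable sketch. The helper `TryCatchFinallySketch.Run` is hypothetical; it records each step into a list so you can observe that `finally` runs on both the success path and the failure path.

```csharp
using System;
using System.Collections.Generic;

public static class TryCatchFinallySketch
{
    // Records the blocks that execute, in order, for a given divisor.
    public static List<string> Run(int divisor)
    {
        var steps = new List<string>();
        try
        {
            steps.Add("try");
            int result = 10 / divisor;      // throws DivideByZeroException when divisor == 0
            steps.Add($"result={result}");  // skipped if the line above throws
        }
        catch (DivideByZeroException)
        {
            steps.Add("catch");
        }
        finally
        {
            steps.Add("finally");           // runs whether or not an exception occurred
        }
        return steps;
    }
}
```

`Run(2)` records `try, result=5, finally`, while `Run(0)` records `try, catch, finally`.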

Best Practices for Handling Common Errors

Implementing best practices for error handling is essential to write effective and maintainable Azure Functions. Different types of errors require specific handling strategies.

- Input Validation Errors: Validate all inputs to the function to prevent unexpected behavior and security vulnerabilities. Prefer non-throwing checks such as `int.TryParse` over catching parsing exceptions, and return an informative error message to the caller. For example, if a function expects a numeric input, confirm that the input actually parses as a number before processing it.

- Network and Service Failures: When interacting with external services (databases, APIs, etc.), anticipate network issues and service outages. Implement retry mechanisms with exponential backoff to handle transient failures. Log detailed information about service failures, including timestamps and error codes, for debugging purposes.

- Database Connection Errors: Database connections can fail for various reasons, such as incorrect credentials, network problems, or database server unavailability. Implement connection retry logic and handle connection-related exceptions appropriately. Always close database connections, either in a `finally` block or with a `using` statement (which compiles to one), to prevent resource leaks.

- Authentication and Authorization Errors: When handling authentication and authorization, use `try-catch` blocks to catch exceptions related to invalid credentials or unauthorized access. Return appropriate HTTP status codes (e.g., 401 Unauthorized, 403 Forbidden) to the caller.

- Logging: Implement comprehensive logging throughout the function. Log errors with detailed information, including the exception type, message, and stack trace. Use a structured logging approach (e.g., using a logging framework like Serilog) to facilitate analysis and monitoring.

- HTTP Status Codes: Return appropriate HTTP status codes to indicate the result of the function execution. Use status codes like 200 OK for success, 400 Bad Request for client errors, 500 Internal Server Error for server errors, etc.
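The retry advice for network and service failures can be sketched with a small generic helper. This is an illustrative implementation, not an Azure SDK API: the name `RetrySketch.ExecuteAsync`, the default of four attempts, the 200 ms base delay, and treating every exception as transient are all assumptions. Production code would normally retry only known-transient errors, add jitter, or rely on the retry options built into Azure SDK clients or a library such as Polly.

```csharp
using System;
using System.Threading.Tasks;

public static class RetrySketch
{
    // Runs 'operation', retrying failures after delays of baseDelay, 2*baseDelay, 4*baseDelay, ...
    // The last failure is rethrown once maxAttempts is reached.
    public static async Task<T> ExecuteAsync<T>(
        Func<Task<T>> operation, int maxAttempts = 4, TimeSpan? baseDelay = null)
    {
        if (maxAttempts < 1) throw new ArgumentOutOfRangeException(nameof(maxAttempts));
        TimeSpan delay = baseDelay ?? TimeSpan.FromMilliseconds(200);

        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return await operation();
            }
            catch (Exception) when (attempt < maxAttempts)
            {
                // Exponential backoff: wait, then double the delay before the next attempt.
                await Task.Delay(delay);
                delay = TimeSpan.FromTicks(delay.Ticks * 2);
            }
        }
    }
}
```

Because the exception filter stops matching on the final attempt, the original exception propagates unchanged, which keeps its stack trace intact for `log.LogError`.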

Example: Using `try-catch` Blocks and Error Logging

This example illustrates how to use `try-catch` blocks and error logging within an Azure Function to handle potential exceptions. The function performs a division and handles the potential `DivideByZeroException`. It parses the inputs as `decimal` because `decimal` division by zero throws that exception, whereas `double` division by zero silently returns infinity or NaN.

```csharp
using System;
using System.Net;
using System.Net.Http;
using Microsoft.AspNetCore.Http;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Extensions.Logging;

public static class DivideFunction
{
    [FunctionName("Divide")]
    public static HttpResponseMessage Run(
        [HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] HttpRequest req,
        ILogger log)
    {
        log.LogInformation("C# HTTP trigger function processed a request.");

        string num1Str = req.Query["num1"];
        string num2Str = req.Query["num2"];

        if (string.IsNullOrEmpty(num1Str) || string.IsNullOrEmpty(num2Str))
        {
            return new HttpResponseMessage(HttpStatusCode.BadRequest)
            {
                Content = new StringContent("Please pass 'num1' and 'num2' parameters in the query string.")
            };
        }

        try
        {
            if (!decimal.TryParse(num1Str, out decimal num1) || !decimal.TryParse(num2Str, out decimal num2))
            {
                return new HttpResponseMessage(HttpStatusCode.BadRequest)
                {
                    Content = new StringContent("Invalid input: num1 and num2 must be numbers.")
                };
            }

            // decimal division throws DivideByZeroException; double division would return Infinity.
            decimal result = num1 / num2;

            return new HttpResponseMessage(HttpStatusCode.OK)
            {
                Content = new StringContent($"Result: {result}")
            };
        }
        catch (DivideByZeroException ex)
        {
            log.LogError(ex, "DivideByZeroException occurred.");
            // A zero divisor is bad client input, so report it as 400 rather than 500.
            return new HttpResponseMessage(HttpStatusCode.BadRequest)
            {
                Content = new StringContent("Cannot divide by zero.")
            };
        }
        catch (Exception ex)
        {
            log.LogError(ex, "An unexpected error occurred.");
            return new HttpResponseMessage(HttpStatusCode.InternalServerError)
            {
                Content = new StringContent("An unexpected error occurred.")
            };
        }
    }
}
```

This example demonstrates:

- Input Validation: The function validates the input parameters to ensure they are present and valid numbers.

- `try-catch` Block: The division operation is enclosed within a `try-catch` block.

- Specific Exception Handling: The `catch` block specifically handles the `DivideByZeroException`.

- Error Logging: The `log.LogError()` method is used to log the exception with a descriptive message and the exception details.

- HTTP Response Codes: Appropriate HTTP status codes (e.g., 400, 500, 200) are returned based on the outcome of the operation.

Azure Function Triggers and Bindings in Depth

Azure Functions leverage triggers and bindings to define how functions are invoked and how data is handled. Triggers initiate function execution based on specific events, while bindings declaratively connect functions to other Azure services. This architecture simplifies development by abstracting away much of the underlying infrastructure management, allowing developers to focus on the core business logic. The effective utilization of triggers and bindings is crucial for building scalable and event-driven applications on Azure.

Timer Trigger

The Timer trigger allows functions to be executed on a predefined schedule. This scheduling is achieved using a CRON expression, which specifies the intervals at which the function should be triggered. This mechanism is highly suitable for periodic tasks such as data cleanup, report generation, or scheduled API calls.

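To use the Timer trigger, declare a binding of type `timerTrigger` whose `schedule` property holds the CRON expression. In scripted functions you write this binding in the `function.json` file; for compiled C# class libraries, the build generates `function.json` from the `TimerTrigger` attribute.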

Consider the following example of a `function.json` file that defines a Timer trigger:

```json
{
  "bindings": [
    {
      "name": "myTimer",
      "type": "timerTrigger",
      "direction": "in",
      "schedule": "0 */5 * * * *"
    }
  ]
}
```

JSON does not allow comments, so note separately that this schedule runs every five minutes.

The CRON expression in this example, `0 */5 * * * *`, translates to “run every five minutes.” Azure Functions uses the six-field NCRONTAB format: `second minute hour dayOfMonth month dayOfWeek`. The first field (seconds) is `0`, so the function fires at the start of the minute. The second field (minutes) is `*/5`, meaning every fifth minute. The remaining fields specify the hour, day of the month, month, and day of the week.

The asterisk (`*`) means “every value” for its field, and `*/n` means “every nth value.”
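To make the schedule concrete, this sketch hard-codes the `0 */5 * * * *` rule (second 0, minute divisible by 5) and computes the next fire time after a given instant. It is not a general NCRONTAB parser, and it ignores the time-zone handling the runtime performs (schedules are evaluated in UTC by default). The class name `EveryFiveMinutesSketch` is hypothetical.

```csharp
using System;

public static class EveryFiveMinutesSketch
{
    // Next instant strictly after 'from' that matches "0 */5 * * * *":
    // second 0 and a minute divisible by 5 (any hour, day, month, weekday).
    public static DateTime Next(DateTime from)
    {
        // Truncate to the start of the current minute (drops seconds and fractions).
        var t = new DateTime(from.Year, from.Month, from.Day, from.Hour, from.Minute, 0, from.Kind);

        // Step forward a minute at a time until the minute field matches */5.
        do
        {
            t = t.AddMinutes(1);
        } while (t.Minute % 5 != 0);

        return t;
    }
}
```

For example, from 10:02:30 the next run is 10:05:00, and from exactly 10:05:00 it is 10:10:00.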

The function code in C# would then be responsible for performing the scheduled task. For instance:

```csharp
using System;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

namespace MyTimerFunction
{
    public class TimerFunction
    {
        [FunctionName("MyTimerFunction")]
        public void Run([TimerTrigger("0 */5 * * * *")] TimerInfo myTimer, ILogger log)
        {
            log.LogInformation($"C# Timer trigger function executed at: {DateTime.Now}");
            // ScheduleStatus can be null, so use the null-conditional operator.
            log.LogInformation($"Next timer schedule at: {myTimer.ScheduleStatus?.Next}");
        }
    }
}
```

In this code, the `Run` method is decorated with the `FunctionName` attribute. Its `myTimer` parameter carries the `TimerTrigger` attribute, which is configured with the same CRON expression as the `function.json` example. In a compiled C# class library, you do not write `function.json` by hand; the build generates it from these attributes. The `TimerInfo` parameter provides information about the timer execution, including the schedule status, and the `ILogger` parameter is used for logging.
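The runtime computes the value logged from `ScheduleStatus.Next`. As a rough illustration of what it reports for this schedule, the hypothetical `FiveMinuteSchedule.Next` helper below returns the next five-minute boundary strictly after a given time. The sketch handles only the `0 */5 * * * *` schedule and ignores time zones.

```csharp
using System;

// Hypothetical helper: next occurrence of the "0 */5 * * * *" schedule.
public static class FiveMinuteSchedule
{
    private static readonly long IntervalTicks = TimeSpan.FromMinutes(5).Ticks;

    public static DateTime Next(DateTime after)
    {
        // DateTime ticks count from midnight, and a day is an exact multiple of
        // five minutes, so flooring to the interval lands on :00, :05, :10, ...
        long floored = after.Ticks - (after.Ticks % IntervalTicks);

        // Step one interval forward so the result is strictly after 'after'.
        return new DateTime(floored + IntervalTicks, after.Kind);
    }
}
```

For example, `FiveMinuteSchedule.Next(new DateTime(2024, 1, 1, 10, 17, 42))` yields 10:20:00. Calling it exactly at 10:15:00 also yields 10:20:00, because that occurrence is the one currently running.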

The advantages of using the Timer trigger include:

- Simplified Scheduling: The CRON expression provides a straightforward way to define complex schedules.

- Automatic Execution: The Azure Functions runtime handles the scheduling and execution of the function.

- Single execution: Even when the function app scales out to multiple instances, the runtime ensures that only one instance runs each scheduled occurrence of a timer-triggered function.

Queue Trigger

The Queue trigger activates a function when a new message is added to an Azure Storage queue. This trigger is ideal for decoupling components of an application, enabling asynchronous processing, and handling high-volume workloads. The queue serves as an intermediary, allowing different parts of the application to communicate without direct dependencies.

The `function.json` file is also used to configure the Queue trigger. The `type` property is set to `queueTrigger`, and the `queueName` property specifies the name of the Azure Storage queue to monitor. The `connection` property indicates the name of the application setting that holds the connection string for the Azure Storage account.

Example of `function.json`:

```json
{
  "bindings": [
    {
      "name": "myQueueItem",
      "type": "queueTrigger",
      "direction": "in",
      "queueName": "myqueue-items",
      "connection": "AzureWebJobsStorage"
    }
  ]
}
```

The corresponding C# code to process the queue messages would resemble the following:

```csharp
using System;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

namespace MyQueueFunction
{
    public class QueueFunction
    {
        [FunctionName("ProcessQueueMessage")]
        public void Run(
            [QueueTrigger("myqueue-items", Connection = "AzureWebJobsStorage")] string myQueueItem,
            ILogger log)
        {
            log.LogInformation($"C# Queue trigger function processed: {myQueueItem}");

            // Process the queue message here
        }
    }
}
```

In this example, the `Run` method is triggered whenever a new message appears in the “myqueue-items” queue. The `QueueTrigger` attribute configures the trigger with the queue name and with the name of the app setting (`AzureWebJobsStorage`) that holds the storage connection string. The message content is passed to the function as the string parameter `myQueueItem`.
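Queue messages often carry JSON rather than plain text. A minimal sketch of the “Process the queue message here” step, assuming a hypothetical `OrderMessage` payload shape, could deserialize `myQueueItem` with `System.Text.Json` and reject malformed messages. Throwing from the function makes the runtime retry the message. After repeated failures (five by default), the runtime moves the message to a poison queue named after the original queue with a `-poison` suffix.

```csharp
using System;
using System.Text.Json;

// Hypothetical message shape; the original example only receives a string.
public record OrderMessage(string OrderId, int Quantity);

public static class QueueMessageHandler
{
    // Accept camelCase ("orderId") as well as PascalCase property names.
    private static readonly JsonSerializerOptions Options = new() { PropertyNameCaseInsensitive = true };

    public static OrderMessage Parse(string myQueueItem)
    {
        var message = JsonSerializer.Deserialize<OrderMessage>(myQueueItem, Options);

        // Fail loudly so the runtime retries and, eventually, poisons the message.
        if (message is null || string.IsNullOrWhiteSpace(message.OrderId))
            throw new FormatException("Queue message is missing an OrderId.");

        return message;
    }
}
```

Inside `Run`, a call such as `var order = QueueMessageHandler.Parse(myQueueItem);` would replace the placeholder comment.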

Key benefits of utilizing the Queue trigger are:

- Asynchronous Processing: Allows functions to handle tasks without blocking the calling application.

- Scalability: Azure Functions automatically scale based on the queue message volume.

- Decoupling: Facilitates loosely coupled architectures by separating the components.

Blob Trigger

The Blob trigger enables functions to respond to changes in Azure Blob Storage. It fires when a blob is created or updated within a specified container; deleting a blob does not fire the Blob trigger. This trigger is especially useful for tasks such as image processing, file transformations, and data analysis triggered by file uploads.

The `function.json` file configures the Blob trigger. The `type` property is set to `blobTrigger`, the `path` property specifies the container and the blob path pattern to monitor, and the `connection` property names the app setting that holds the storage account connection string.

Example of `function.json`:

```json
{
  "bindings": [
    {
      "name": "myBlob",
      "type": "blobTrigger",
      "direction": "in",
      "path": "images/{name}.jpg",
      "connection": "AzureWebJobsStorage"
    }
  ]
}
```

In this example, the trigger watches for new or modified .jpg files in the “images” container. The `{name}` placeholder is a binding expression: the part of the blob path that it matches is passed to the function as a parameter named `name`.
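The hypothetical `BlobPathPattern.TryMatch` helper below illustrates how a binding expression such as `{name}` pulls a value out of a blob path. It converts the pattern into a regular expression with one named group per placeholder. This is a simplified sketch, not the runtime's matching logic; for example, it does not attempt to replicate how the runtime treats slashes inside a placeholder.

```csharp
using System.Collections.Generic;
using System.Text;
using System.Text.RegularExpressions;

// Hypothetical helper: match a blob path against a pattern like "images/{name}.jpg".
public static class BlobPathPattern
{
    private static readonly Regex Placeholder = new(@"\{(\w+)\}");

    public static bool TryMatch(string pattern, string blobPath, out Dictionary<string, string> values)
    {
        // Build an anchored regex: literal text is escaped, each {placeholder}
        // becomes a lazy named capture group.
        var regex = new StringBuilder("^");
        int last = 0;
        foreach (Match m in Placeholder.Matches(pattern))
        {
            regex.Append(Regex.Escape(pattern.Substring(last, m.Index - last)));
            regex.Append($"(?<{m.Groups[1].Value}>.+?)");
            last = m.Index + m.Length;
        }
        regex.Append(Regex.Escape(pattern.Substring(last))).Append('$');

        values = new Dictionary<string, string>();
        Match result = Regex.Match(blobPath, regex.ToString());
        if (!result.Success)
            return false;

        // Copy each captured placeholder value into the output dictionary.
        foreach (Match m in Placeholder.Matches(pattern))
        {
            string key = m.Groups[1].Value;
            values[key] = result.Groups[key].Value;
        }
        return true;
    }
}
```

Matching `images/cat.jpg` against `images/{name}.jpg` yields `name = "cat"`. The paths `images/cat.png` and `docs/cat.jpg` do not match.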

Corresponding C# code:

```csharp
using System;
using System.IO;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

namespace MyBlobFunction
{
    public class BlobFunction
    {
        [FunctionName("ProcessBlob")]
        public void Run(
            [BlobTrigger("images/{name}.jpg", Connection = "AzureWebJobsStorage")] Stream myBlob,
            string name,
            ILogger log)
        {
            log.LogInformation($"C# Blob trigger function processed blob\n Name: {name} \n Size: {myBlob.Length} Bytes");

            // Process the blob here, e.g., resize the image, extract metadata, etc.
        }
    }
}
```

In this example, the `Run` method is triggered when a new or updated .jpg file is detected in the “images” container. The `BlobTrigger` attribute specifies the path pattern and the name of the connection app setting. The `Stream myBlob` parameter provides access to the blob content as a stream. The `string name` parameter receives the value matched by `{name}`; for a blob at `images/cat.jpg`, `name` is `cat`.

Key benefits of using the Blob trigger are:

- Event-Driven Processing: Reacts automatically to file changes in Azure Blob Storage.

- File Transformation: Enables tasks like image resizing, format conversion, and metadata extraction.

- Data Analysis: Triggers data processing workflows upon file uploads.

Event Grid Trigger

The Event Grid trigger enables functions to react to events published by Azure Event Grid. Azure Event Grid is a fully managed event routing service that delivers events from various Azure services, such as Azure Blob Storage, Azure Resource Manager, and custom topics, to various destinations, including Azure Functions. This trigger is ideal for building event-driven architectures and responding to events in near real-time.

To use the Event Grid trigger, set the binding `type` in `function.json` to `eventGridTrigger`. No further properties, such as a queue name or path, are needed, because routing is defined by an Event Grid subscription that delivers events to the function.

Example of `function.json`:

```json
{
  "bindings": [
    {
      "name": "eventGridEvent",
      "type": "eventGridTrigger",
      "direction": "in"
    }
  ]
}
```

The C# code to handle Event Grid events:

```csharp
using System;
using Azure.Messaging.EventGrid;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.EventGrid;
using Microsoft.Extensions.Logging;

namespace MyEventGridFunction
{
    public class EventGridFunction
    {
        [FunctionName("ProcessEventGridEvent")]
        public void Run([EventGridTrigger] EventGridEvent eventGridEvent, ILogger log)
        {
            log.LogInformation($"Event Type: {eventGridEvent.EventType}");
            log.LogInformation($"Subject: {eventGridEvent.Subject}");

            // Data is BinaryData; interpolation calls ToString(), which returns the raw JSON payload.
            log.LogInformation($"Data: {eventGridEvent.Data}");

            // Process the event data based on the event type and subject.
        }
    }
}
```

In this example, the `Run` method is triggered by events from Event Grid. The `EventGridTrigger` attribute is used to indicate the trigger. The `EventGridEvent` parameter contains the event data. The function then processes the event based on the event type, subject, and data.
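Processing an event based on its type usually means branching on `eventType`. The self-contained sketch below works on the raw Event Grid schema JSON with `System.Text.Json` instead of the `EventGridEvent` class, so it can run outside the Functions host. The `EventRouter` class and its return strings are hypothetical. `Microsoft.Storage.BlobCreated` and `Microsoft.Storage.BlobDeleted` are event types that Blob Storage publishes, and blob events include the blob URL in `data.url`.

```csharp
using System.Text.Json;

// Hypothetical dispatcher over a single Event Grid event in Event Grid schema.
public static class EventRouter
{
    // Returns a short description of what the function would do with the event.
    public static string Describe(string eventJson)
    {
        using JsonDocument doc = JsonDocument.Parse(eventJson);
        JsonElement root = doc.RootElement;
        string eventType = root.GetProperty("eventType").GetString() ?? "";
        string subject = root.GetProperty("subject").GetString() ?? "";

        switch (eventType)
        {
            case "Microsoft.Storage.BlobCreated":
                // Blob events carry the blob URL in data.url.
                string url = root.GetProperty("data").GetProperty("url").GetString() ?? "";
                return $"Blob created: {url}";
            case "Microsoft.Storage.BlobDeleted":
                return $"Blob deleted: {subject}";
            default:
                return $"Unhandled event {eventType} for {subject}";
        }
    }
}
```

In a real function, each branch would call the relevant processing code (for example, thumbnail generation for new images) instead of returning a string.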

The Event Grid trigger can be used for a wide range of use cases, including:

- Real-time notifications: Triggering actions in response to events, such as file uploads, resource creation, or system alerts.

- Workflow automation: Orchestrating complex workflows across different Azure services.

- Application integration: Connecting applications and services to react to events in a decoupled manner.

Consider a scenario where an image is uploaded to Azure Blob Storage. Using Event Grid, you can configure an event subscription to send an event to an Azure Function when a blob is created in the storage account. The function could then process the image, such as generating thumbnails or extracting metadata.

Another use case involves monitoring Azure resources. For example, when a virtual machine is created, Event Grid can send an event to an Azure Function, which then performs tasks such as configuring the VM or updating a monitoring dashboard.

The advantages of using the Event Grid trigger include:

- Real-time event processing: Allows for near real-time responses to events.

- Decoupled architecture: Enables loosely coupled architectures.

- Scalability: Azure Functions automatically scale to handle event volume.

Deploying and Managing Azure Functions

Deploying and managing Azure Functions is a critical aspect of the development lifecycle, ensuring that your functions are accessible, scalable, and perform as expected. This section explores various deployment methods, management techniques, and scaling strategies for Azure Functions. Effective management is crucial for monitoring, maintaining, and optimizing function performance in production environments.

Deployment Options for Azure Functions

Several deployment options are available for Azure Functions, each offering different advantages depending on the project’s requirements and development workflow. Understanding these options allows developers to choose the most appropriate method for their needs.

- Visual Studio: This is a popular option, particularly for developers already using Visual Studio for their C# code. It provides a streamlined experience with built-in deployment capabilities, allowing for easy publishing directly from the IDE. The deployment process often involves creating a publish profile and configuring settings within Visual Studio.

- Azure CLI: The Azure Command-Line Interface (CLI) manages Azure resources, including Azure Functions, from the command line. Because deployment tasks can be scripted and repeated, it is a versatile option for automating deployments and integrating with CI/CD pipelines. For example, the command `az functionapp deploy --resource-group <resource-group> --name <app-name> --src-path <path-to-zip>` can be used to deploy a function app.

- Continuous Deployment (e.g., Azure DevOps, GitHub Actions): Continuous deployment triggers deployments automatically when code changes in a source control repository. This option suits teams that want to automate the deployment process and ship updates to their functions quickly. Tools like Azure DevOps and GitHub Actions can be configured to build, test, and deploy function apps whenever changes are pushed to the repository. This typically involves a build pipeline that builds the function app and then uses the Azure CLI or other deployment tools to deploy it to Azure.

- Zip Deploy: This is a deployment method where the function app code is zipped and uploaded to Azure. It is a fast and efficient method for deploying code, particularly when using tools like the Azure CLI or Azure PowerShell. The zip file is typically created from the publish output folder.
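Zip deploy expects the contents of the publish output folder, with `host.json` at the root of the archive rather than nested inside a subfolder. As a sketch, assuming the folder was produced by `dotnet publish`, the hypothetical `ZipPackager.CreatePackage` helper below builds such a package with `System.IO.Compression.ZipFile`.

```csharp
using System.IO;
using System.IO.Compression;

// Hypothetical helper: package a publish output folder for zip deploy.
public static class ZipPackager
{
    public static string CreatePackage(string publishDir, string zipPath)
    {
        string hostJson = Path.Combine(publishDir, "host.json");
        if (!File.Exists(hostJson))
            throw new FileNotFoundException("host.json not found; is this a publish output folder?", hostJson);

        // CreateFromDirectory throws if the target file already exists.
        if (File.Exists(zipPath))
            File.Delete(zipPath);

        // includeBaseDirectory: false zips the folder's *contents*,
        // so host.json sits at the root of the archive.
        ZipFile.CreateFromDirectory(publishDir, zipPath, CompressionLevel.Optimal, includeBaseDirectory: false);
        return zipPath;
    }
}
```

The resulting file is what you would pass as `--src-path` to `az functionapp deploy`.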

- Kudu/SCM (Source Control Management): Azure Functions provide a Kudu service, accessible via the SCM site. This allows developers to deploy code directly from a Git repository. This option is particularly useful for small projects or quick updates. The Kudu service handles the build and deployment process.

Deploying an Azure Function from Visual Studio

Deploying an Azure Function from Visual Studio involves several steps, including configuring the deployment settings and publishing the function app to Azure. This process is integrated within the Visual Studio IDE, making it a convenient method for developers.

- Create a Publish Profile: Right-click the project in Solution Explorer and select “Publish” to open the “Publish” dialog. Choose “Azure” as the target, then select “Azure Function App (Windows)” or “Azure Function App (Linux)” depending on the target operating system. Finally, select “Create New” or choose an existing function app to publish to.

- Configure Deployment Settings: In the “Publish” dialog, configure the necessary settings, such as the function app name, resource group, and storage account. Select the appropriate hosting plan (Consumption, Premium, or App Service). Choose the desired deployment method, such as “Zip Deploy.”

- Publish the Function App: Click the “Publish” button. Visual Studio will build the project, package the function app, and deploy it to Azure. Monitor the output window for any errors or warnings during the deployment process.

- Verify Deployment: After the deployment is complete, Visual Studio will provide a link to the function app in the Azure portal. Verify that the function app is running and that the functions are accessible by testing the HTTP endpoints or triggering the functions.

Managing Azure Function Settings

Managing function settings is essential for configuring and maintaining Azure Functions. Settings control various aspects of function behavior, including connection strings, application settings, and other configuration parameters. Proper management ensures the function app operates correctly and securely.

- Connection Strings: Connection strings are used to store information about how to connect to Azure services, such as Azure Storage, Azure SQL Database, and Azure Cosmos DB. These are stored securely within the function app settings. The function app uses these connection strings to access the required Azure resources.

- App Settings: App settings are used to store configuration information that is specific to the function app. These settings can include API keys, environment variables, and other configuration values. These settings are accessible within the function code.
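In a function app, application settings are exposed to function code as environment variables. When you run locally with Azure Functions Core Tools, the `Values` section of `local.settings.json` is surfaced the same way. Below is a minimal sketch of reading settings through a hypothetical `AppSettings` helper that fails fast when a required setting is missing. The setting name used in the usage note is also hypothetical.

```csharp
using System;

// Hypothetical helper for reading function app settings.
public static class AppSettings
{
    // Throws if a setting the function cannot work without is missing.
    public static string GetRequired(string name) =>
        Environment.GetEnvironmentVariable(name)
        ?? throw new InvalidOperationException($"App setting '{name}' is not configured.");

    // Returns a default for optional settings.
    public static string GetOrDefault(string name, string fallback) =>
        Environment.GetEnvironmentVariable(name) ?? fallback;
}
```

For example, `AppSettings.GetRequired("MyApiBaseUrl")` would return the value configured for a hypothetical `MyApiBaseUrl` setting. Failing at startup or on first use surfaces a missing setting immediately, rather than producing a confusing error deeper in the code.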