DynamoDB access patterns are fundamental to the performance and cost-effectiveness of your NoSQL database. They define how your application interacts with the data stored within DynamoDB, directly impacting read/write speeds, storage utilization, and overall operational expenses. Understanding these patterns is crucial for designing efficient data models and building scalable applications.

This exploration delves into the core concepts of DynamoDB access patterns, providing a detailed examination of common patterns such as GetItem, Query, and Scan. We will analyze how these patterns interact with table design, focusing on strategies for optimization and techniques for modeling complex data structures. The objective is to equip you with the knowledge to build robust and cost-effective DynamoDB solutions.

Introduction to DynamoDB Access Patterns

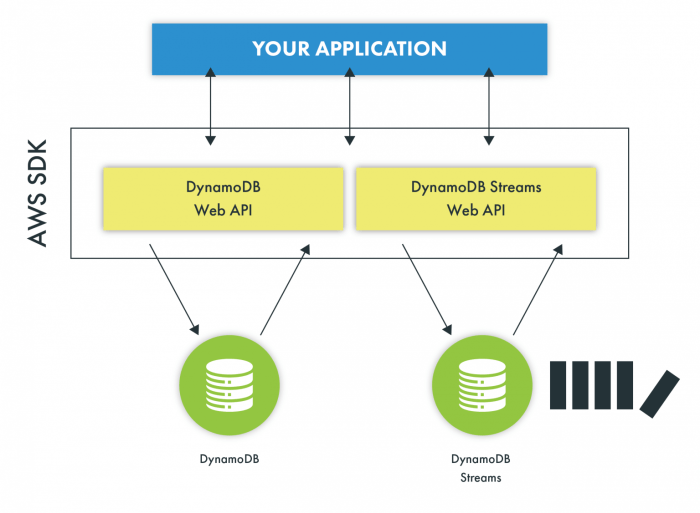

Understanding access patterns is fundamental to effectively utilizing Amazon DynamoDB. Access patterns define how applications read, write, and query data stored within DynamoDB tables. They represent the anticipated usage of the data, dictating the types of operations and the frequency with which they are performed. DynamoDB’s performance and cost-effectiveness are directly tied to how well access patterns are understood and incorporated into the data modeling and table design.

Choosing the right access patterns helps optimize query performance, minimize latency, and reduce provisioned capacity costs. Neglecting access patterns can lead to inefficient queries, increased costs due to over-provisioning, and poor application performance. The process of analyzing and designing for specific access patterns is a critical step in DynamoDB implementation.

Relationship Between Data Modeling and Access Patterns

Data modeling in DynamoDB is intrinsically linked to access patterns. The design of your table schema, including the choice of partition key, sort key (if applicable), and secondary indexes, is primarily driven by the access patterns you intend to support. A well-designed data model aligns with the access patterns, allowing for efficient data retrieval and manipulation.

- Data Modeling Driven by Access Patterns: The design process begins with identifying the access patterns. For instance, if the application frequently needs to retrieve data by a specific attribute (e.g., a user’s email address), this becomes a primary access pattern. The data model should then incorporate this requirement, potentially using the email address as a partition key or creating a Global Secondary Index (GSI) on the email attribute.

- Impact of Access Patterns on Table Design: The choice of partition key is critical. It determines how data is distributed across partitions. A good partition key leads to even distribution and efficient reads and writes. Sort keys, when used, allow for efficient range queries within a partition. GSIs enable querying data based on attributes other than the primary key, supporting various access patterns. Local Secondary Indexes (LSIs) are useful for range queries on the same partition as the base table.

- Iteration and Refinement: The data model and access patterns are often iteratively refined. As application requirements evolve or performance bottlenecks are identified, the data model may need to be adjusted. This could involve adding new GSIs, modifying the partition key, or restructuring the data.

- Example: E-commerce Product Catalog: Consider an e-commerce application. Common access patterns might include:

- Retrieving a product by its ID.

- Listing products within a specific category.

- Searching for products by keyword.

The data model would reflect these access patterns. Product ID could be the partition key for retrieving a product directly. A GSI could be created on the ‘category’ attribute for efficient category-based listing. Keyword search might require a more complex solution, potentially involving pre-calculated search terms or additional GSIs.

- Consequences of Misaligned Models: If the data model is not designed with access patterns in mind, it can lead to significant performance issues. For example, a poor choice of partition key can cause hot partitions, leading to throttling and increased latency. Inefficient queries can strain resources, resulting in higher costs and slower response times.
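The product catalog model described above can be sketched as a table definition. This is a minimal illustration, not a recommended production schema: the table name `Products`, the index name `CategoryIndex`, and the on-demand billing mode are assumptions made for the example. The function only builds the keyword arguments that a boto3 `create_table` call would accept, so it runs without AWS access.

```python
def product_table_definition(table_name="Products"):
    """Build CreateTable arguments for the catalog example (names are assumed)."""
    return {
        "TableName": table_name,
        # Only attributes used in a key schema are declared up front.
        "AttributeDefinitions": [
            {"AttributeName": "ProductID", "AttributeType": "S"},
            {"AttributeName": "Category", "AttributeType": "S"},
        ],
        # Access pattern 1: retrieve a product by its ID.
        "KeySchema": [{"AttributeName": "ProductID", "KeyType": "HASH"}],
        # Access pattern 2: list products within a category.
        "GlobalSecondaryIndexes": [
            {
                "IndexName": "CategoryIndex",
                "KeySchema": [{"AttributeName": "Category", "KeyType": "HASH"}],
                "Projection": {"ProjectionType": "ALL"},
            }
        ],
        "BillingMode": "PAY_PER_REQUEST",
    }


definition = product_table_definition()
# With boto3 installed and credentials configured, this could be passed as:
#   boto3.client("dynamodb").create_table(**definition)
```

Each access pattern maps to exactly one key schema here, which is the point of designing from the access patterns first.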

Common Access Patterns

DynamoDB’s flexibility arises from its ability to support diverse access patterns. Understanding these patterns is crucial for optimizing performance and cost-effectiveness. The choice of access pattern significantly impacts read and write throughput, storage utilization, and ultimately, the overall efficiency of your application. Effective design involves selecting the appropriate access patterns to meet specific application requirements, considering the trade-offs inherent in each approach.

Get Item

The GetItem operation retrieves a single item from a table using its primary key. This is the most straightforward and efficient access pattern for fetching specific data.

- Characteristics: GetItem performs eventually consistent reads by default; setting `ConsistentRead` to true returns the latest committed state at twice the read cost. It offers high performance due to its direct access based on the primary key.

- Strengths: It’s highly efficient for retrieving individual items and is the fastest access pattern. It’s also cost-effective for single-item lookups.

- Weaknesses: It’s limited to retrieving data based on the primary key. It’s not suitable for retrieving multiple items at once or for performing complex filtering operations.

- Use Cases:

- Retrieving a user’s profile based on their user ID.

- Fetching product details based on a product ID.

- Accessing order information using an order ID.

Query

The Query operation retrieves items from a table based on the partition key and, optionally, a sort key. This pattern enables fetching a range of items efficiently.

- Characteristics: Query can retrieve multiple items, filtered by the partition key and an optional sort key condition. It supports range queries on the sort key, allowing for flexible data retrieval. Results are sorted by the sort key.

- Strengths: Enables efficient retrieval of multiple items sharing the same partition key. Supports range queries, allowing for flexible filtering.

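- Weaknesses: Requires a partition key value. The key condition can only reference the partition key and sort key; conditions on other attributes must go in a `FilterExpression`, which is applied after the items are read and does not reduce consumed capacity. Can be less efficient for large datasets if not properly designed (e.g., if a single partition key has a very large number of items).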

- Use Cases:

- Retrieving all orders for a specific customer (partition key: customer ID, sort key: order timestamp).

- Fetching all products within a specific category (partition key: category ID, sort key: product name).

- Listing all blog posts published on a specific date (partition key: publication date, sort key: post title).

Scan

The Scan operation reads all items in a table. This is a full-table scan, meaning it examines every item in the table to find matching items.

- Characteristics: Scan reads all items in a table. It can filter results based on any attribute. It is resource-intensive and should be used cautiously in production environments.

- Strengths: Can be used when you need to examine all items in a table. Allows filtering on any attribute, not just the primary key.

- Weaknesses: Inefficient and slow, especially for large tables. Consumes significant read capacity units (RCUs). Can negatively impact performance and cost.

- Use Cases:

- Performing an initial data load or a one-time data migration.

- Analyzing a small dataset where performance is not critical.

- Searching for data that doesn’t fit into the primary key or sort key patterns (used rarely in production).

Modeling for Get Item Access Patterns

Designing a DynamoDB table to efficiently support `GetItem` operations is crucial for optimal performance. The `GetItem` operation retrieves a single item from a table based on its primary key. Effective design minimizes latency and provisioned capacity consumption. This section details how to optimize table structure for this common access pattern.

Designing for Efficient GetItem Operations

Optimizing a DynamoDB table for `GetItem` operations involves strategic choices in primary key design and data modeling. The goal is to ensure that the items are located and retrieved quickly, consuming minimal read capacity units (RCUs).

- Primary Key Selection: The primary key is the cornerstone of `GetItem` efficiency. The key should uniquely identify each item and facilitate efficient data retrieval. A composite primary key, consisting of a partition key and a sort key, allows for more complex data organization and filtering.

- Data Locality: DynamoDB stores data based on the partition key. Designing a partition key that groups related items together can improve read performance. When frequently accessing related items, grouping them under the same partition key minimizes the number of partitions that need to be accessed.

- Attribute Projection: Using the `ProjectionExpression` parameter in the `GetItem` request to retrieve only the necessary attributes reduces the amount of data sent over the network, which can improve latency. It does not reduce RCU consumption, because DynamoDB charges for the size of the full item that is read.

- Data Size Considerations: Large items consume more RCUs for retrieval. Consider strategies such as data compression or splitting large items into multiple items if frequent retrieval of specific parts is needed.
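The effect of item size on read cost can be made concrete with a small calculation. The sketch below assumes the standard provisioned-capacity rule: a read is billed in 4 KB increments of the full item size, with an eventually consistent read costing half of a strongly consistent one. The function name is hypothetical.

```python
import math


def get_item_rcus(item_size_bytes, strongly_consistent=False):
    """Estimate read capacity units consumed by one GetItem call."""
    # Item size is rounded up to the next 4 KB boundary (minimum one unit).
    units = max(1, math.ceil(item_size_bytes / 4096))
    # Eventually consistent reads cost half as much.
    return units * (1.0 if strongly_consistent else 0.5)


small = get_item_rcus(1000)                          # one 4 KB unit, halved
large = get_item_rcus(9000, strongly_consistent=True)  # three 4 KB units
```

Because the rounding applies to the whole item, splitting a large item only helps when callers usually need just one of the resulting parts.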

Example Table Design: Retrieving Data by Primary Key

Consider a scenario involving a “Products” table where items are retrieved by product ID. This example showcases a table design optimized for `GetItem` operations using a single primary key (product ID).

This table is designed to efficiently retrieve product information based on a unique product identifier. The table utilizes a single primary key, `ProductID`, to ensure fast lookups. The attributes included are designed to contain the necessary product details while minimizing data redundancy and storage overhead. The data types chosen are appropriate for the information they store, promoting data integrity and retrieval efficiency.

| Attribute Name | Data Type | Description | Example Value |
|---|---|---|---|
| ProductID | String | Unique identifier for the product (Partition Key) | “PROD-12345” |
| ProductName | String | Name of the product | “Awesome Widget” |
| Description | String | Detailed product description | “A revolutionary widget designed to…” |
| Price | Number | Price of the product | 19.99 |

In this design, the `ProductID` serves as the primary key, facilitating direct and efficient retrieval of product details using the `GetItem` operation. This approach ensures minimal latency when accessing product information.
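A lookup against this table might be expressed as follows. This is a sketch that assumes the table is named `Products` and uses the attribute-value format of the boto3 low-level client; the helper name is hypothetical. Attribute names are aliased through `ExpressionAttributeNames` so the projection stays valid even if a name collides with a DynamoDB reserved word.

```python
def get_product_request(product_id, attributes=None, consistent=False):
    """Build GetItem arguments for the Products table (table name assumed)."""
    request = {
        "TableName": "Products",
        "Key": {"ProductID": {"S": product_id}},
        "ConsistentRead": consistent,
    }
    if attributes:
        # Map placeholders such as #a0 to the real attribute names.
        names = {f"#a{i}": name for i, name in enumerate(attributes)}
        request["ProjectionExpression"] = ", ".join(names)
        request["ExpressionAttributeNames"] = names
    return request


request = get_product_request("PROD-12345", ["ProductName", "Price"])
# With boto3 available: boto3.client("dynamodb").get_item(**request)
```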

Modeling for Query Access Patterns

DynamoDB’s `Query` operation provides a powerful mechanism for retrieving data based on a partition key and, optionally, a sort key. Efficiently modeling tables for `Query` access patterns is crucial for optimizing performance and minimizing read costs. This involves careful consideration of how data is structured and how queries will be executed.

Strategies for Designing Tables to Support Query Operations

Designing tables for `Query` operations necessitates a deep understanding of the data and anticipated access patterns. The goal is to structure the data in a way that allows for efficient filtering and retrieval.

- Choosing the Partition Key: The partition key is the primary filter for `Query` operations. It determines the physical location of the data within DynamoDB. The selection of the partition key should be based on the most common and critical filtering criteria. For example, if you frequently query for all orders belonging to a specific customer, the customer ID would be a suitable partition key.

- Defining the Sort Key: The sort key enables filtering and sorting within a partition. It is used in conjunction with the partition key to narrow down the results. The sort key allows for a range of filtering operations such as `BETWEEN`, `<`, `>`, `<=`, and `>=`. For instance, if you are storing order details, the sort key could be a timestamp to enable querying orders within a specific time range for a given customer.

- Data Distribution and Hot Keys: It’s crucial to consider data distribution. If a single partition key value receives a disproportionate amount of read/write activity (a “hot key”), it can lead to throttling and performance bottlenecks. Techniques to mitigate hot keys include:

- Salting: Adding a random prefix to the partition key to distribute the load across multiple partitions.

- Sharding: Breaking down a logical partition into multiple physical partitions, often by adding a sub-component to the partition key.

- Index Considerations: If you need to query data based on attributes other than the partition key and sort key, consider using Global Secondary Indexes (GSIs) or Local Secondary Indexes (LSIs). GSIs allow querying data across the entire table, while LSIs are limited to the same partition key as the base table.
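The hot-key mitigation described above can be sketched as a key-building helper. Instead of a purely random prefix, this example derives a shard suffix from a hash of the item's own identifier, which is one common variant: the shard can then be recomputed when reading a single item. The `#` separator, the shard count of 10, and the function names are assumptions for illustration.

```python
import hashlib


def sharded_partition_key(base_key, item_id, shard_count=10):
    """Append a deterministic shard number to a hot partition key."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    shard = int.from_bytes(digest[:4], "big") % shard_count
    return f"{base_key}#{shard}"


def all_shard_keys(base_key, shard_count=10):
    """List every shard key; a full read issues one Query per entry."""
    return [f"{base_key}#{n}" for n in range(shard_count)]


key = sharded_partition_key("2023-03-15", "order-1001")
```

The trade-off is on the read side: retrieving everything for the logical key now takes `shard_count` queries whose results are merged by the application.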

Elaborating on the Use of Partition Keys and Sort Keys for Filtering Data

The effectiveness of `Query` operations hinges on the appropriate use of partition and sort keys. Understanding their functionalities is paramount for optimizing data retrieval.

- Partition Key Filtering: The `Query` operation *always* requires a partition key. This is the fundamental filter. The `Query` operation will only retrieve items where the partition key matches the specified value. For example, if the partition key is “CustomerID” and the value is “C123”, only items with a “CustomerID” of “C123” will be returned.

- Sort Key Filtering: The sort key provides the ability to filter within a partition. The sort key can be used with a variety of comparison operators.

- Equality: `sort_key = value` (exact match).

- Comparison Operators: `sort_key < value`, `sort_key <= value`, `sort_key > value`, `sort_key >= value`.

- Between: `sort_key BETWEEN value1 AND value2`.

- Begins_with: `begins_with(sort_key, substring)` (useful for prefix-based searches).

- Compound Keys: The combination of partition and sort keys allows for highly specific queries. For instance, a table storing events might use “UserID” as the partition key and “Timestamp” as the sort key. This allows for querying all events for a specific user within a given time range.

- Pagination: DynamoDB uses pagination to limit the amount of data returned in a single `Query` operation. The `LastEvaluatedKey` attribute in the response can be used to retrieve the next set of results. This is crucial for handling large datasets efficiently.
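The pagination behaviour described above can be sketched as a loop that feeds each `LastEvaluatedKey` back as `ExclusiveStartKey`. The `client` argument is assumed to expose a `query(**kwargs)` method shaped like the boto3 DynamoDB client's; `FakeClient` is a hypothetical stand-in so the example runs without AWS access.

```python
def query_all(client, **query_kwargs):
    """Collect every page of a Query into one list."""
    items = []
    kwargs = dict(query_kwargs)
    while True:
        page = client.query(**kwargs)
        items.extend(page.get("Items", []))
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            # No key in the response means this was the final page.
            return items
        kwargs["ExclusiveStartKey"] = last_key


class FakeClient:
    """Stand-in that replays canned pages, for illustration only."""

    def __init__(self, pages):
        self._pages = pages
        self.calls = []

    def query(self, **kwargs):
        self.calls.append(kwargs)
        return self._pages[len(self.calls) - 1]


client = FakeClient([
    {"Items": [{"id": 1}], "LastEvaluatedKey": {"k": 1}},
    {"Items": [{"id": 2}]},
])
events = query_all(client, TableName="Events")
```

Collecting everything in memory is only reasonable for bounded result sets; for large ones, a generator that yields pages would be the safer shape.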

Scenario Where Query is the Preferred Method

Consider a scenario involving an e-commerce platform that stores customer order data. The platform needs to retrieve orders based on specific criteria, such as all orders placed by a specific customer within a given date range. In this case, the `Query` operation is the optimal solution.

- Table Design:

- Partition Key: `CustomerID` (String).

- Sort Key: `OrderDate` (Number, Unix timestamp).

- Other Attributes: `OrderID`, `OrderTotal`, `OrderStatus`.

- Query Procedure:

- Step 1: Specify the `CustomerID` (e.g., “C456”) in the `KeyConditionExpression`. This ensures that only orders for the specified customer are considered.

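- Step 2: Use the `BETWEEN` operator in the `KeyConditionExpression` to filter by `OrderDate`. Specify the start and end dates as Unix timestamps. For example: `OrderDate BETWEEN 1678886400 AND 1679059200` (13:20 UTC on March 15, 2023 to 13:20 UTC on March 17, 2023). In an actual request, the two values are supplied through `ExpressionAttributeValues` placeholders rather than written inline.

- Step 3: Optionally, use the `FilterExpression` to filter by other attributes. For example, to filter for orders with a status of “Shipped,” you would use `OrderStatus = :status` with `:status` set to “Shipped”. The filter is applied after the items are read, so it reduces the data returned but not the read capacity consumed.

- Step 4: The `Query` operation returns a list of matching orders, sorted by `OrderDate` (ascending by default).

- Step 5: If the result set exceeds the 1 MB response limit (or a `Limit` you set), the response includes a `LastEvaluatedKey`, which is passed as `ExclusiveStartKey` in the next request to paginate through the results.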

- Benefits:

- Efficiency: The `Query` operation is highly efficient because it uses the partition key and sort key to locate and retrieve the desired data.

- Scalability: DynamoDB’s underlying architecture handles the scaling of `Query` operations, ensuring consistent performance even with large datasets.

- Data Isolation: Keying orders by `CustomerID` keeps each customer’s orders together under one partition key value, so a query reads only that customer’s data.
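The query procedure above can be sketched as a request builder. The table name `Orders` and the helper name are assumptions; the key and attribute names come from the table design in this scenario. Values are passed through `ExpressionAttributeValues` placeholders, and the start and end arguments are expected to be timezone-aware `datetime` objects.

```python
from datetime import datetime, timezone


def orders_in_range_request(customer_id, start, end, status=None):
    """Build Query arguments for one customer's orders in a time window."""
    request = {
        "TableName": "Orders",
        # Partition key equality plus a sort key range (Steps 1 and 2).
        "KeyConditionExpression": (
            "CustomerID = :cid AND OrderDate BETWEEN :start AND :end"
        ),
        "ExpressionAttributeValues": {
            ":cid": {"S": customer_id},
            ":start": {"N": str(int(start.timestamp()))},
            ":end": {"N": str(int(end.timestamp()))},
        },
    }
    if status is not None:
        # Step 3: applied after the read, so it does not lower read cost.
        request["FilterExpression"] = "OrderStatus = :status"
        request["ExpressionAttributeValues"][":status"] = {"S": status}
    return request


request = orders_in_range_request(
    "C456",
    datetime(2023, 3, 15, tzinfo=timezone.utc),
    datetime(2023, 3, 17, tzinfo=timezone.utc),
    status="Shipped",
)
```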

Modeling for Scan Access Patterns

Scan operations in DynamoDB offer a way to retrieve all items within a table or index. While providing flexibility, they often come with significant performance trade-offs. Understanding these implications is crucial for designing efficient data access strategies.

When and Why to Use Scan Operations, and Their Performance Implications

Scan operations are typically used when the query criteria are not easily satisfied by indexed attributes. This often occurs when the application needs to retrieve all items or a subset of items based on non-indexed attributes, or when the query predicates are complex and cannot be effectively modeled with Query operations. However, Scan operations can be resource-intensive and have significant performance impacts.

- Performance Bottlenecks: Scans examine every item in the table or index, leading to potentially high read costs, especially for large datasets. The operation can be slow and can strain the provisioned throughput capacity of the table, potentially leading to throttling.

- Cost Implications: Scans consume read capacity units (RCUs) for every item scanned. The cost can quickly escalate with the size of the table.

- Throughput Limitations: A single Scan request reads at most 1 MB of data, so scanning a large table requires many sequential requests, and the overall speed is bounded by the table’s available read capacity. The `Limit` parameter caps the number of items evaluated per request (before any filter is applied), but it does not reduce the total number of items that must be read to cover the whole table.

- Best-Effort Consistency: Scans provide eventual consistency by default. While you can request strong consistency, this further increases read costs.

Guidance on Minimizing the Impact of Scan Operations

Several strategies can mitigate the negative effects of Scan operations. These techniques aim to reduce the number of items scanned or optimize the operation’s efficiency.

- Minimize Data Size: Reduce the overall size of the table and the number of items stored. Consider data archival or deletion strategies for older or less frequently accessed data.

- Use `FilterExpression`: Apply a `FilterExpression` to reduce the amount of data returned to the client. The filter runs on the server after the items are read, so the scan still reads, and is billed for, every item.

- Parallel Scans: Utilize parallel scans to increase throughput. The `Segment` and `TotalSegments` parameters enable breaking down the scan into multiple segments, allowing parallel execution. This can be helpful, but it doesn’t eliminate the underlying performance challenges.

- Consider Alternatives: Evaluate whether alternative approaches, such as denormalization, secondary indexes, or different data modeling strategies, can eliminate the need for scans altogether. For example, creating a Global Secondary Index (GSI) on an attribute frequently used for filtering can dramatically improve query performance.

- Optimize Data Access Patterns: Analyze the application’s data access patterns and optimize the table design accordingly. Identify attributes frequently used for filtering and consider creating secondary indexes on those attributes.

- Monitor and Analyze: Regularly monitor DynamoDB metrics, such as consumed RCUs and throttled requests, to identify potential performance bottlenecks. Analyze slow scan operations and identify areas for improvement.
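The parallel scan strategy mentioned above can be sketched by generating one request per segment. `Segment` and `TotalSegments` are the Scan parameters named in the text; the helper name, the `extra` argument, and the example filter are assumptions. Each worker would still page through its own segment using `LastEvaluatedKey`.

```python
def parallel_scan_requests(table_name, total_segments, extra=None):
    """Build one Scan request per segment for parallel workers."""
    if total_segments < 1:
        raise ValueError("total_segments must be at least 1")
    requests = []
    for segment in range(total_segments):
        request = {
            "TableName": table_name,
            "Segment": segment,              # zero-based segment number
            "TotalSegments": total_segments,
        }
        # Optional shared settings, e.g. a FilterExpression.
        request.update(extra or {})
        requests.append(request)
    return requests


requests = parallel_scan_requests(
    "Products",
    4,
    extra={
        "FilterExpression": "InStock = :yes",
        "ExpressionAttributeValues": {":yes": {"BOOL": True}},
    },
)
```

More segments shorten wall-clock time but consume read capacity faster, so the segment count is usually tuned against the capacity other traffic needs.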

Comparison of Query and Scan Access Patterns

The following table summarizes the key differences between Query and Scan operations in DynamoDB, highlighting their strengths and weaknesses.

| Attribute | Query | Scan | Description | Performance Impact |
|---|---|---|---|---|
| Primary Use Case | Retrieve items based on primary key or index key | Retrieve items based on non-indexed attributes or all items in a table | This defines the typical scenario for using each operation. | Queries generally have much better performance due to the efficient use of indexes. Scans can be slow and resource-intensive. |
| Data Retrieval | Efficient, using indexes to locate specific items | Reads all items in the table or index | This details how each operation retrieves data. | Queries are generally much faster, particularly for large datasets. Scans can be very slow, especially on large tables. |
| Performance | Fast, predictable performance, scales well with data size | Slow, performance degrades significantly with table size | This reflects the performance characteristics of each operation. | Queries offer consistently good performance. Scans can experience significant performance degradation as table size increases, leading to increased latency and potential throttling. |
| Cost | Lower cost, as it reads only the necessary items | Higher cost, as it reads all items, consuming more read capacity units (RCUs) | This describes the cost implications of each operation. | Queries are typically more cost-effective. Scans can be expensive, especially on large tables. |

Advanced Access Pattern Techniques

DynamoDB’s flexibility stems from its support for various indexing strategies, enabling optimization for diverse access patterns beyond the primary key. This section delves into advanced techniques leveraging Global Secondary Indexes (GSIs) and Local Secondary Indexes (LSIs) to enhance query performance and data retrieval capabilities. These techniques are crucial for handling complex data access requirements efficiently.

Global Secondary Indexes (GSIs) for Diverse Access Patterns

GSIs provide a mechanism to query data based on attributes other than the primary key, offering a more flexible approach to data retrieval. They are essentially independent indexes that maintain a separate copy of the data, allowing for efficient querying based on different key combinations. This is particularly useful when access patterns involve filtering or sorting based on non-key attributes.

The utility of GSIs is best illustrated through a practical scenario. Consider an e-commerce platform managing product listings. The base table structure is defined as follows:

```
Table Name: Products
Primary Key:
  Partition Key: ProductID (String)
  Sort Key: ProductName (String)
Attributes:
  Category (String)
  Price (Number)
  SellerID (String)
  InStock (Boolean)
```

The initial access pattern focuses on retrieving product details by `ProductID` and `ProductName`. However, the platform also requires the ability to:

- Find all products within a specific `Category`.

- Identify all products offered by a particular `SellerID`.

- List all products within a `Price` range.

To address these requirements, the following GSIs are created:

1. GSI for Category-Based Queries

- GSI Name: `CategoryIndex`

- Partition Key: `Category` (String)

- Sort Key: `ProductName` (String)

- Projected Attributes: `ProductID`, `Price`, `InStock`

This GSI enables efficient queries to retrieve all products belonging to a specific category. For instance, to find all “Electronics” products, a query is performed using `CategoryIndex` with `Category = “Electronics”`. The sort key `ProductName` allows for sorting products within the category. The projected attributes optimize query performance by only retrieving necessary data.

2. GSI for Seller-Based Queries

- GSI Name: `SellerIndex`

- Partition Key: `SellerID` (String)

- Sort Key: `ProductName` (String)

- Projected Attributes: `ProductID`, `Category`, `Price`, `InStock`

This GSI allows for retrieving products offered by a specific seller. A query using `SellerIndex` with `SellerID = “Seller123”` efficiently retrieves all products from that seller. The `ProductName` sort key supports sorting products by name within the seller’s offerings. The projected attributes provide the required information.

3. GSI for Price Range Queries

- GSI Name: `PriceIndex`

- Partition Key: `Price` (Number)

- Sort Key: `ProductName` (String)

- Projected Attributes: `ProductID`, `Category`, `SellerID`, `InStock`

This GSI is a simplification. Because a `Query` must specify an exact partition key value, an index keyed directly on `Price` can only return the products at a single price point, sorted by `ProductName`. It cannot serve a price range in one request, and popular price points can become hot keys.

Important Consideration: A better design for range queries uses a price bucket (e.g., price ranges like “0-10”, “11-20”, etc.) as the partition key and `Price` as the sort key, or combines price with another attribute across the partition key and sort key. A range query then targets the buckets that overlap the requested range and applies a sort key condition on `Price` within each.
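The price bucket idea can be sketched as two small helpers: one that assigns a price to a bucket label, and one that lists the buckets a range query would need to visit. The bucket width of 10 and the half-open labelling (a price of exactly 10 falls in “10-20”) are assumptions chosen to keep the arithmetic unambiguous; the text's “0-10”, “11-20” labels would need an explicit rule for fractional prices.

```python
def price_bucket(price, width=10):
    """Return the bucket label used as the index partition key."""
    if price < 0:
        raise ValueError("price must be non-negative")
    lower = int(price // width) * width
    return f"{lower}-{lower + width}"


def buckets_for_range(low, high, width=10):
    """List every bucket that overlaps the price range [low, high]."""
    first = int(low // width)
    last = int(high // width)
    return [f"{n * width}-{(n + 1) * width}" for n in range(first, last + 1)]


bucket = price_bucket(19.99)
to_query = buckets_for_range(5, 25)
```

A range query then issues one `Query` per listed bucket, each with a sort key condition on `Price`, and merges the results.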

The advantages of using GSIs in this e-commerce scenario are:

- Flexibility: Enables querying based on different criteria (category, seller, price) without modifying the primary key structure.

- Performance: GSIs optimize queries for these specific access patterns, improving response times.

- Scalability: DynamoDB handles GSI updates automatically as the base table scales. Index updates are applied asynchronously, so reads from a GSI are eventually consistent.

The disadvantage of GSIs is that they increase storage and write costs. Every write operation to the base table also writes to the GSIs, and each GSI consumes storage space. Careful consideration is needed to balance the performance benefits against the increased cost.

The choice of projected attributes is also crucial; projecting too many attributes can increase storage costs, while projecting too few might require additional reads from the base table.
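Querying one of these indexes differs from querying the base table only by the `IndexName` parameter. The sketch below builds a request against `CategoryIndex`; the helper name and the optional name-prefix argument are assumptions. Attribute names are aliased with `ExpressionAttributeNames` as a precaution against reserved-word collisions.

```python
def products_by_category_request(category, name_prefix=None, limit=None):
    """Build Query arguments against the CategoryIndex GSI."""
    request = {
        "TableName": "Products",
        "IndexName": "CategoryIndex",
        "KeyConditionExpression": "#cat = :category",
        "ExpressionAttributeNames": {"#cat": "Category"},
        "ExpressionAttributeValues": {":category": {"S": category}},
    }
    if name_prefix:
        # Narrow within the category using the index sort key.
        request["KeyConditionExpression"] += " AND begins_with(#name, :prefix)"
        request["ExpressionAttributeNames"]["#name"] = "ProductName"
        request["ExpressionAttributeValues"][":prefix"] = {"S": name_prefix}
    if limit:
        request["Limit"] = limit
    return request


request = products_by_category_request("Electronics", name_prefix="Awe", limit=25)
```

Only attributes projected into the index come back from such a query; anything else requires a follow-up read of the base table.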

Local Secondary Indexes (LSIs)

LSIs provide a means to index data within a single partition of a table. They share the base table’s partition key but use a different sort key. This enables efficient querying within a specific partition based on an alternative sort order. LSIs are particularly useful for scenarios where data is logically grouped within a partition and needs to be sorted or filtered based on a secondary attribute.

Unlike GSIs, LSIs do not have their own partitioning or throughput settings; their entries are stored alongside the base table’s items for the same partition key value and share the table’s capacity. They must be defined when the table is created.

Consider a scenario where a social media platform stores user posts. The base table structure is defined as follows:

```
Table Name: Posts
Primary Key:
  Partition Key: UserID (String)
  Sort Key: PostID (String)
Attributes:
  Timestamp (Number)
  Content (String)
  Likes (Number)
```

The primary access pattern is to retrieve a user’s posts, sorted by `PostID` (e.g., in chronological order). Now, the platform also needs to display a user’s posts sorted by `Timestamp` (most recent first). Since `Timestamp` is not the primary sort key, an LSI is used.

To address this requirement, the following LSI is created:

- LSI Name: `TimestampIndex`

- Partition Key: `UserID` (inherited from the base table)

- Sort Key: `Timestamp` (Number)

- Projected Attributes: `Content`, `Likes`

The advantages of using an LSI in this scenario are:

- Efficient Sorting: Enables sorting posts by `Timestamp` within each user’s partition.

- Cost-Effective: An LSI shares the base table’s throughput and stores only the projected attributes, which can cost less than a GSI for similar use cases.

- Localized Queries: Queries are performed within the same partition, which often results in faster retrieval.

The disadvantages of using LSIs are:

- Limited Scope: Queries are restricted to a single partition; they cannot span across multiple users.

- Partition Size Limits: A table with LSIs is limited to 10 GB of data per partition key value, counting base table items and index entries together. If a user’s data approaches this limit, writes for that user can fail.

- Update Considerations: Modifications to LSI data are bound by the same limitations as the base table.

Using the `TimestampIndex`, the application can efficiently retrieve a user’s posts sorted by the `Timestamp` attribute. The projected attributes only retrieve the `Content` and `Likes` to minimize the data transferred. LSIs are ideal for use cases where sorting is needed within a partition based on a secondary attribute, and the partition size is manageable.
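The `Posts` table and its `TimestampIndex` can be sketched as a table definition plus a newest-first query. The on-demand billing mode, the default page size of 20, and the helper names are assumptions; the key and attribute names come from the scenario. Setting `ScanIndexForward` to false reverses the sort order so the most recent posts come first.

```python
def posts_table_definition():
    """Build CreateTable arguments including the TimestampIndex LSI."""
    return {
        "TableName": "Posts",
        "AttributeDefinitions": [
            {"AttributeName": "UserID", "AttributeType": "S"},
            {"AttributeName": "PostID", "AttributeType": "S"},
            {"AttributeName": "Timestamp", "AttributeType": "N"},
        ],
        "KeySchema": [
            {"AttributeName": "UserID", "KeyType": "HASH"},
            {"AttributeName": "PostID", "KeyType": "RANGE"},
        ],
        "LocalSecondaryIndexes": [
            {
                "IndexName": "TimestampIndex",
                # Same partition key as the table, alternative sort key.
                "KeySchema": [
                    {"AttributeName": "UserID", "KeyType": "HASH"},
                    {"AttributeName": "Timestamp", "KeyType": "RANGE"},
                ],
                "Projection": {
                    "ProjectionType": "INCLUDE",
                    "NonKeyAttributes": ["Content", "Likes"],
                },
            }
        ],
        "BillingMode": "PAY_PER_REQUEST",
    }


def recent_posts_request(user_id, limit=20):
    """Build Query arguments for a user's newest posts."""
    return {
        "TableName": "Posts",
        "IndexName": "TimestampIndex",
        "KeyConditionExpression": "UserID = :uid",
        "ExpressionAttributeValues": {":uid": {"S": user_id}},
        "ScanIndexForward": False,  # descending by Timestamp
        "Limit": limit,
    }


request = recent_posts_request("user-42", limit=10)
```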

Access Patterns for Time-Series Data

Time-series data, characterized by its temporal dimension, presents unique challenges and opportunities in database design. DynamoDB, with its inherent scalability and flexibility, can be effectively utilized for storing and analyzing time-series data. However, the specific access patterns and data modeling strategies employed are crucial for achieving optimal performance and cost-efficiency. Efficient modeling is vital to leverage DynamoDB’s strengths and avoid common pitfalls.

Modeling Time-Series Data

The core principle in modeling time-series data for DynamoDB revolves around the effective utilization of the partition key and sort key. These keys are critical for data distribution, retrieval, and query performance. The choice of these keys dictates how data is organized, indexed, and accessed.

To understand the process, consider the example of storing sensor readings. Each reading is associated with a timestamp, the sensor ID, and the measured value. The goal is to efficiently query readings for a specific sensor within a given time range.

The following steps outline how to model time-series data in DynamoDB:

1. Partition Key Selection

The partition key should be chosen to evenly distribute the data across the DynamoDB partitions. Options include:

- Sensor ID: Grouping data by sensor. This is suitable if you frequently query data for individual sensors.

- Sensor ID combined with a time-based component (e.g., day, hour): This approach combines the sensor ID with a granularity of time, allowing for efficient queries within specific time ranges. For example, you could use a combination of sensor ID and the day the reading was taken.

2. Sort Key Selection

The sort key is primarily used for sorting data within a partition. For time-series data, the timestamp is almost always used as the sort key.

- Timestamp: The primary sort key, used for ordering the readings by time.

3. Data Structure

Data is structured in the table based on the selected partition and sort keys.

- Table Schema:

- Partition Key: Sensor ID (or Sensor ID + Time Component)

- Sort Key: Timestamp

- Attributes: Reading Value, Other Metadata

4. Querying Strategies

DynamoDB provides efficient query capabilities based on the partition key and sort key.

- Querying by Sensor and Time Range: To retrieve readings for a specific sensor within a time range, use a query with the sensor ID as the partition key value and a condition on the timestamp sort key: `BETWEEN` for a bounded range, or a single comparison (`>`, `>=`, `<`, `<=`) for an open-ended one.

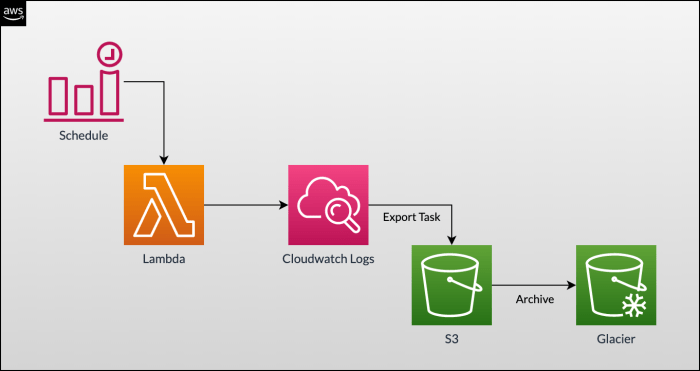

- Querying for Aggregated Data: For aggregate functions (e.g., average, sum), retrieve the data and perform the calculations on the client-side, or utilize DynamoDB Streams and Lambda functions for real-time aggregation.
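The “Sensor ID + Time Component” option can be sketched with a day-level bucket. The attribute names `PK` and `SK`, the `#` separator, the table name `SensorReadings`, and the choice of UTC days are all assumptions for illustration. A time range that crosses midnight needs one query per day bucket, which the second helper enumerates; both helpers expect timezone-aware datetimes.

```python
from datetime import datetime, timedelta, timezone


def reading_key(sensor_id, ts):
    """Build the primary key for one reading (day-bucketed partition)."""
    day = ts.astimezone(timezone.utc).strftime("%Y-%m-%d")
    return {
        "PK": {"S": f"{sensor_id}#{day}"},
        "SK": {"N": str(int(ts.timestamp()))},
    }


def range_requests(sensor_id, start, end, table_name="SensorReadings"):
    """Build one Query per UTC day touched by the range [start, end]."""
    requests = []
    day = start.astimezone(timezone.utc).date()
    last = end.astimezone(timezone.utc).date()
    while day <= last:
        requests.append({
            "TableName": table_name,
            "KeyConditionExpression": "PK = :pk AND SK BETWEEN :start AND :end",
            "ExpressionAttributeValues": {
                ":pk": {"S": f"{sensor_id}#{day.isoformat()}"},
                ":start": {"N": str(int(start.timestamp()))},
                ":end": {"N": str(int(end.timestamp()))},
            },
        })
        day += timedelta(days=1)
    return requests


start = datetime(2023, 3, 15, 22, 0, tzinfo=timezone.utc)
end = datetime(2023, 3, 16, 2, 0, tzinfo=timezone.utc)
requests = range_requests("sensor-1", start, end)
```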

Optimizing Read and Write Performance

Optimizing read and write performance in time-series scenarios involves several strategies, focusing on data modeling, access patterns, and resource utilization.

The following list outlines key optimization strategies:

1. Data Modeling for Query Efficiency

- Choosing Granularity: The choice of time granularity (e.g., hourly, daily) in the partition key can significantly impact query performance. A finer granularity (e.g., hourly) enables faster queries for smaller time windows but can lead to increased item counts. A coarser granularity (e.g., daily) reduces item counts but might require scanning more data for queries spanning multiple time periods.

Consider query patterns and data volume to determine the optimal granularity.

- Composite Keys: Using composite keys (e.g., Sensor ID + Day) can improve efficiency.
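To make the granularity trade-off concrete, here is a small sketch of a daily composite key. The `<sensorId>#<YYYY-MM-DD>` format and both function names are assumptions chosen for illustration; the point is that a time range spanning several days maps to several partition keys, each needing its own `Query`.

```javascript
// Build the composite partition key "<sensorId>#<YYYY-MM-DD>" for a UTC day bucket.
// The "#" separator and the key layout are illustrative assumptions.
function dayBucketKey(sensorId, date) {
  return `${sensorId}#${date.toISOString().slice(0, 10)}`;
}

// List every day-bucket key touched by the range [start, end]; one Query is needed per key.
function bucketKeysForRange(sensorId, start, end) {
  const keys = [];
  // Start from midnight UTC of the first day in the range.
  const cursor = new Date(Date.UTC(start.getUTCFullYear(), start.getUTCMonth(), start.getUTCDate()));
  while (cursor <= end) {
    keys.push(dayBucketKey(sensorId, cursor));
    cursor.setUTCDate(cursor.getUTCDate() + 1);
  }
  return keys;
}

console.log(bucketKeysForRange('sensor-1', new Date('2024-01-30T22:00:00Z'), new Date('2024-02-01T03:00:00Z')));
```

With hourly buckets the same five-hour-plus-a-day range would touch about thirty keys instead of three, which is the cost side of finer granularity.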

2. Read Performance Optimization

- Strongly Consistent vs. Eventually Consistent Reads: Use eventually consistent reads (the default) where possible; they consume half the read capacity of strongly consistent reads. Use strongly consistent reads when the application must see the most recent write.

- Query and Scan Optimization: Employ queries whenever possible. Avoid scans, which are less efficient and more expensive. Ensure proper indexing (partition and sort keys) for efficient queries.

- Pagination: Implement pagination to handle large result sets, preventing timeouts and minimizing resource consumption.
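The pagination point can be sketched as a loop that follows `LastEvaluatedKey`. This is a hedged example: `runQuery` is a hypothetical injected function that takes Query parameters and resolves to a response with `Items` and an optional `LastEvaluatedKey` (with the AWS SDK v2 it could be `(p) => documentClient.query(p).promise()`). Injecting it keeps the sketch runnable without AWS credentials.

```javascript
// Collect every page of a Query by following LastEvaluatedKey.
// `runQuery` is a hypothetical injected function: (params) => Promise<{ Items, LastEvaluatedKey }>.
async function queryAllPages(runQuery, params) {
  const items = [];
  let startKey;
  do {
    // Only send ExclusiveStartKey once a previous page has supplied one.
    const request = startKey ? { ...params, ExclusiveStartKey: startKey } : { ...params };
    const page = await runQuery(request);
    items.push(...(page.Items || []));
    startKey = page.LastEvaluatedKey; // undefined once the last page has been read
  } while (startKey);
  return items;
}
```

Collecting all pages in memory suits moderate result sets; for very large ones, processing each page as it arrives keeps memory use flat.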

3. Write Performance Optimization

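- Batch Writes: Use batch writes (`BatchWriteItem`) to send up to 25 put or delete requests in a single call, which reduces network round trips and improves write throughput. Each write still consumes its own write capacity, and any requests returned as unprocessed must be retried.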

- Provisioned Capacity: Properly provision read and write capacity units (RCUs and WCUs) based on the expected data volume and access patterns. Monitor utilization to ensure adequate capacity and avoid throttling.

- Exponential Backoff and Retry: Implement exponential backoff and retry mechanisms for write operations to handle potential throttling or transient errors.
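Batching and backoff fit together naturally, as the following sketch suggests. It assumes a hypothetical injected `sendBatch` function that sends one batch and resolves to the requests DynamoDB reported as unprocessed (an empty array when everything was written). The base delay, cap, and attempt limit are illustrative defaults, not recommended values.

```javascript
const MAX_BATCH_WRITE_ITEMS = 25; // BatchWriteItem accepts at most 25 put/delete requests per call

// Split an array into consecutive chunks of at most `size` elements.
function chunk(items, size) {
  const chunks = [];
  for (let i = 0; i < items.length; i += size) chunks.push(items.slice(i, i + size));
  return chunks;
}

// Exponential backoff with full jitter: a random delay in [0, min(cap, base * 2^attempt)).
function backoffDelayMs(attempt, baseMs = 50, capMs = 5000, random = Math.random) {
  const ceiling = Math.min(capMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

// Write all requests in batches, retrying only the unprocessed remainder of each batch.
// `sendBatch` is a hypothetical injected function: (requests) => Promise<unprocessedRequests[]>.
async function writeAll(requests, sendBatch, maxAttempts = 5) {
  for (const batch of chunk(requests, MAX_BATCH_WRITE_ITEMS)) {
    let pending = batch;
    for (let attempt = 0; pending.length > 0; attempt++) {
      if (attempt >= maxAttempts) throw new Error('Batch still unprocessed after retries');
      if (attempt > 0) await new Promise((resolve) => setTimeout(resolve, backoffDelayMs(attempt)));
      pending = await sendBatch(pending);
    }
  }
}
```

The jitter spreads retries from many writers over time, so they do not all hit the table again at the same instant.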

4. Data Lifecycle Management

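- TTL (Time to Live): Implement TTL to delete expired items automatically, reducing storage costs and keeping partitions small over time. TTL deletions do not consume write capacity, but they run in the background some time after the expiry timestamp, so queries that must never return expired items should still filter on the expiry attribute.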

- Data Archiving: Consider archiving older data to a more cost-effective storage solution (e.g., Amazon S3) to further optimize storage costs and improve query performance for recent data.
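A TTL attribute holds the expiry time as a Unix epoch timestamp in seconds. The sketch below computes that value for a reading; the attribute name `ExpiresAt` and the retention period are assumptions, and the name must match whatever attribute is configured for TTL on the table.

```javascript
// Compute a TTL value: the reading time plus a retention period, in Unix epoch seconds.
function ttlEpochSeconds(readingDate, retentionDays) {
  const SECONDS_PER_DAY = 24 * 60 * 60;
  return Math.floor(readingDate.getTime() / 1000) + retentionDays * SECONDS_PER_DAY;
}

// Return a copy of the item with the expiry attribute attached.
// 'ExpiresAt' is an assumed attribute name for illustration.
function withExpiry(item, readingDate, retentionDays) {
  return { ...item, ExpiresAt: ttlEpochSeconds(readingDate, retentionDays) };
}

const reading = withExpiry({ SensorID: 'sensor-1', ReadingValue: 21.5 }, new Date('2024-01-01T00:00:00Z'), 30);
console.log(reading.ExpiresAt);
```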

Access Patterns for Hierarchical Data

Modeling and querying hierarchical data in DynamoDB present unique challenges due to its NoSQL nature and lack of inherent support for complex relationships. Efficiently representing and accessing hierarchical structures requires careful consideration of data modeling techniques to optimize for read performance and data consistency. The choice of access pattern significantly impacts the ability to query and retrieve hierarchical data effectively.

Hierarchical data is characterized by parent-child relationships, where elements are organized in a tree-like structure. This structure can represent various real-world scenarios, such as organizational charts, file system directories, or product catalogs. DynamoDB, designed for high scalability and performance, demands specific strategies to effectively manage and query such data structures.

Modeling Hierarchical Relationships

Representing hierarchical data in DynamoDB necessitates strategies to capture parent-child relationships. Several techniques are available, each with trade-offs in terms of query flexibility, storage efficiency, and write performance.

- Nested Attributes: Using nested attributes within a single item is suitable for representing small, relatively static hierarchies. For instance, a product category might have subcategories nested within its attributes. This approach is straightforward for simple hierarchies but becomes less manageable as the depth and complexity of the hierarchy increase.

Example:

Item: Product Category

Attributes:

- CategoryID: “Electronics”

- CategoryName: “Electronics”

- Subcategories:

  - CategoryID: “Televisions”

  - CategoryName: “Televisions”

  - Subcategories:

    - CategoryID: “LED TVs”

    - CategoryName: “LED TVs”

- Parent-Child Relationships with Foreign Keys: This method involves storing the parent’s primary key within the child item. This design is effective for large hierarchies and allows for efficient queries to retrieve child items given a parent’s key. However, retrieving the entire hierarchy often requires multiple queries, potentially impacting read performance. Example:

Item: Product

Attributes:

- ProductID: “P123”

- ProductName: “4K TV”

- CategoryID: “Televisions” (Foreign Key referencing a Category item)

Item: Category

Attributes:

- CategoryID: “Televisions”

- CategoryName: “Televisions”

- ParentCategoryID: “Electronics” (Foreign Key)

- Adjacency List Model: In this approach, each item stores its direct parent’s ID. This facilitates queries to retrieve the immediate children of a node. Traversing the entire hierarchy, however, may involve multiple queries to traverse the tree. Example:

Item: Node

Attributes:

- NodeID: “Node1”

- NodeName: “Root”

- ParentNodeID: null

Item: Node

Attributes:

- NodeID: “Node2”

- NodeName: “Child of Node1”

- ParentNodeID: “Node1”

- Path Enumeration (Materialized Path): This technique involves storing the complete path from the root to each node within each item. This enables efficient queries to retrieve all descendants of a node. The downside is the increased storage requirements and the need to update multiple items when a node is moved within the hierarchy. Example:

Item: Node

Attributes:

- NodeID: “Node1”

- NodeName: “Root”

- Path: “Node1”

Item: Node

Attributes:

- NodeID: “Node2”

- NodeName: “Child of Node1”

- Path: “Node1/Node2”

- Nested Sets Model: This model assigns each node a left and right value, allowing efficient retrieval of all descendants of a node with a single query. However, this model requires more complex logic for managing the values during updates and can be less intuitive to understand. Example:

Item: Node

Attributes:

- NodeID: “Node1”

- NodeName: “Root”

- Left: 1

- Right: 6

Item: Node

Attributes:

- NodeID: “Node2”

- NodeName: “Child of Node1”

- Left: 2

- Right: 5
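To show how the adjacency list and nested sets models relate, the following sketch derives `Left` and `Right` values from adjacency-list nodes with a depth-first walk. It is an in-memory illustration only; the function names are made up for this example, and the node shape follows the `NodeID` and `ParentNodeID` attributes used above.

```javascript
// Assign nested-set Left/Right values by walking adjacency-list nodes depth-first.
function assignNestedSets(nodes) {
  // Group nodes by their parent's ID (null for roots).
  const childrenOf = new Map();
  for (const node of nodes) {
    const siblings = childrenOf.get(node.ParentNodeID) || [];
    siblings.push(node);
    childrenOf.set(node.ParentNodeID, siblings);
  }
  const bounds = {};
  let counter = 0;
  function visit(node) {
    const left = ++counter; // assigned on the way down
    for (const child of childrenOf.get(node.NodeID) || []) visit(child);
    bounds[node.NodeID] = { Left: left, Right: ++counter }; // Right assigned on the way back up
  }
  for (const root of childrenOf.get(null) || []) visit(root);
  return bounds;
}

// A node is a descendant when its interval lies strictly inside the ancestor's interval.
function isDescendant(bounds, nodeId, ancestorId) {
  return bounds[nodeId].Left > bounds[ancestorId].Left && bounds[nodeId].Right < bounds[ancestorId].Right;
}

const tree = [
  { NodeID: 'Node1', ParentNodeID: null },
  { NodeID: 'Node2', ParentNodeID: 'Node1' },
  { NodeID: 'Node3', ParentNodeID: 'Node2' },
];
console.log(assignNestedSets(tree));
```

Because inserting or moving a node shifts the values of many other nodes, this model tends to suit hierarchies that are read far more often than they change.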

Querying Hierarchical Data

Querying hierarchical data in DynamoDB depends on the chosen data modeling approach. The objective is to retrieve data efficiently while minimizing the number of read operations.

- Retrieving Children: With the parent-child model, all children of a specific parent can be fetched with a `Query` whose key condition matches the parent's ID. Because the parent ID is not the child item's primary key, this requires a global secondary index keyed on that attribute.

Example: Retrieving all products belonging to a specific category.

```javascript
// Example using the AWS SDK for JavaScript v2 (Node.js)
const AWS = require('aws-sdk');
const dynamoDB = new AWS.DynamoDB.DocumentClient();

const params = {
  TableName: 'Products',
  IndexName: 'CategoryIndex', // Assumes a GSI with CategoryID as its partition key
  KeyConditionExpression: 'CategoryID = :category',
  ExpressionAttributeValues: {
    ':category': 'Televisions',
  },
};

dynamoDB.query(params, function (err, data) {
  if (err) {
    console.error('Unable to query. Error:', JSON.stringify(err, null, 2));
  } else {
    console.log('Query succeeded.');
    data.Items.forEach(function (item) {
      console.log(item);
    });
  }
});
```

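- Retrieving Descendants: With the path enumeration model, the `begins_with` function retrieves all descendants of a given node by matching on the stored path prefix. When `Path` is the sort key of the table or of an index, `begins_with` can be part of the key condition of a `Query`. When `Path` is an ordinary attribute, it can only appear in a filter expression, which is applied after the items have been read and therefore does not reduce the capacity consumed.

Example: Retrieving all subcategories of a category using the Path Enumeration Model. The Categories table here is keyed on CategoryID alone, so the example uses a `Scan` with a filter expression.

```javascript
// Example using the AWS SDK for JavaScript v2 (Node.js)
const AWS = require('aws-sdk');
const dynamoDB = new AWS.DynamoDB.DocumentClient();

const params = {
  TableName: 'Categories',
  // A Scan accepts no KeyConditionExpression; the path prefix is matched by the filter.
  FilterExpression: 'begins_with(#path, :pathPrefix)',
  ExpressionAttributeNames: {
    '#path': 'Path', // "Path" is a DynamoDB reserved word
  },
  ExpressionAttributeValues: {
    ':pathPrefix': 'Electronics/',
  },
};

// Note: a Scan reads the whole table and returns at most 1 MB of data per call.
dynamoDB.scan(params, function (err, data) {
  if (err) {
    console.error('Unable to scan. Error:', JSON.stringify(err, null, 2));
  } else {
    console.log('Scan succeeded.');
    data.Items.forEach(function (item) {
      console.log(item);
    });
  }
});
```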

- Retrieving Ancestors: This is more challenging and depends on the model. Using the parent-child relationship model, you would need to traverse up the hierarchy, issuing one read per level. With path enumeration, the ancestor IDs can be read directly from the stored path, although fetching the ancestor items themselves still requires additional reads.
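For the path enumeration case, deriving ancestors is a string operation, as this small sketch shows. The `/` separator follows the `Path` examples earlier in this section; both function names are hypothetical.

```javascript
// Return the ancestor IDs encoded in a materialized path, root first.
// The last segment is the node itself, so it is excluded.
function ancestorIdsFromPath(path) {
  return path.split('/').slice(0, -1);
}

// Build the path for a new child from its parent's path (an empty parent path means a root node).
function childPath(parentPath, nodeId) {
  return parentPath ? `${parentPath}/${nodeId}` : nodeId;
}

console.log(ancestorIdsFromPath('Node1/Node2/Node3'));
```

The resulting IDs can then be used as keys for a single batched read rather than one read per level.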

Optimizing for Read Performance

To enhance read performance when querying hierarchical data, it is essential to implement strategies that reduce the number of read operations and data transferred.

- Denormalization: Duplicate data across items to avoid joins and reduce the need for multiple queries. For instance, including the category name in the product item can eliminate the need to query the category table. However, denormalization increases storage costs and write complexity, as updates to the category name would necessitate updating multiple product items.

Example: Including the category name in the product item.

Item: Product

Attributes:

- ProductID: “P123”

- ProductName: “4K TV”

- CategoryID: “Televisions”

- CategoryName: “Televisions”

- Indexes: Create global secondary indexes (GSIs) to enable efficient querying based on attributes other than the primary key. For instance, an index on the `CategoryID` attribute in the product table can facilitate efficient retrieval of products within a specific category. Example: Creating a GSI on the `CategoryID` attribute. This enables quick lookups of all products belonging to a particular category.

- Caching: Implement caching mechanisms, such as Amazon ElastiCache, to store frequently accessed data. This can significantly reduce read latency and DynamoDB read capacity unit (RCU) consumption. Caching is particularly beneficial for relatively static hierarchical data that does not change frequently.

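- Batch Operations: Utilize batch operations (e.g., `BatchGetItem`) to retrieve multiple items in a single API call, minimizing the overhead of individual requests. `BatchGetItem` needs the full primary key of every item and accepts up to 100 keys per call, so it suits cases where the keys are already known, such as fetching the ancestors listed in a node's path. To find items by a shared attribute such as a category, use a `Query` on an index instead.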
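As a rough sketch of the batch read point, the function below turns a list of known IDs into `BatchGetItem`-style request objects. The table and key names passed in are placeholders, and the function only builds parameters; sending them and retrying any `UnprocessedKeys` is left to the caller.

```javascript
const MAX_BATCH_GET_KEYS = 100; // BatchGetItem accepts at most 100 keys per call

// Build one request object per group of up to 100 keys for a single-attribute primary key.
function buildBatchGetRequests(tableName, keyName, ids) {
  const uniqueIds = [...new Set(ids)]; // duplicate keys in one request are rejected
  const requests = [];
  for (let i = 0; i < uniqueIds.length; i += MAX_BATCH_GET_KEYS) {
    const keys = uniqueIds.slice(i, i + MAX_BATCH_GET_KEYS).map((id) => ({ [keyName]: id }));
    requests.push({ RequestItems: { [tableName]: { Keys: keys } } });
  }
  return requests;
}

console.log(JSON.stringify(buildBatchGetRequests('Categories', 'CategoryID', ['Electronics', 'Televisions'])));
```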

Monitoring and Optimization

Effective monitoring and optimization are critical for maintaining optimal performance and cost-efficiency in DynamoDB. Proactive monitoring allows for the identification of performance bottlenecks and inefficient access patterns, enabling timely adjustments to table designs and access strategies. Continuous optimization, driven by performance data, ensures that DynamoDB resources are utilized effectively, leading to improved application responsiveness and reduced operational costs.

Monitoring DynamoDB Performance

DynamoDB provides a suite of tools and metrics for monitoring performance. These metrics are crucial for understanding how applications interact with DynamoDB and identifying areas for improvement. Analyzing these metrics is essential to make informed decisions about table design and access pattern optimization.

- CloudWatch Metrics: Amazon CloudWatch provides detailed metrics for DynamoDB, including read/write capacity units (RCUs/WCUs) consumed, throttled requests, latency, and returned item counts. These metrics are available for tables and for global secondary indexes.

- Key Metrics to Monitor: Several key metrics provide insights into DynamoDB performance.

- Consumed Read Capacity Units (RCUs) and Consumed Write Capacity Units (WCUs): Tracking these metrics helps determine if the provisioned capacity is sufficient for the workload. Spikes in these metrics can indicate inefficient access patterns or an undersized table.

- Throttled Requests: A high number of throttled requests indicates that the provisioned capacity is insufficient. Throttling directly impacts application performance.

- Successful Requests: The number of successful requests reflects the overall throughput and the efficiency of the application’s interactions with DynamoDB.

- Latency: Monitoring latency (e.g., `SuccessfulRequestLatency`) helps to assess the responsiveness of read and write operations. High latency can indicate bottlenecks or inefficient access patterns.

- Provisioned Capacity vs. Actual Usage: This comparison reveals the efficiency of capacity allocation. Over-provisioning leads to unnecessary costs, while under-provisioning results in throttling.

- Monitoring Tools: Several tools can be used to monitor DynamoDB.

- AWS Management Console: The AWS Management Console provides a user-friendly interface for viewing CloudWatch metrics and configuring alarms.

- AWS CLI: The AWS Command Line Interface (CLI) allows programmatic access to CloudWatch metrics and DynamoDB table information, enabling automation and scripting.

- Third-Party Monitoring Solutions: Various third-party monitoring solutions integrate with CloudWatch and provide advanced analytics, alerting, and dashboards.
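When pulling metrics programmatically, the request for consumed capacity might look like the sketch below. The object follows the parameter shape of CloudWatch's `GetMetricStatistics` operation; the table name, the one-hour window, and the helper names are assumptions. The second function converts per-period sums into an average rate, which is what provisioned capacity is expressed in.

```javascript
// Build GetMetricStatistics-style parameters for a DynamoDB table metric.
function consumedCapacityMetricParams(tableName, metricName, endTime, hours = 1) {
  return {
    Namespace: 'AWS/DynamoDB',
    MetricName: metricName, // e.g. 'ConsumedReadCapacityUnits' or 'ConsumedWriteCapacityUnits'
    Dimensions: [{ Name: 'TableName', Value: tableName }],
    StartTime: new Date(endTime.getTime() - hours * 60 * 60 * 1000),
    EndTime: endTime,
    Period: 60, // seconds per datapoint
    Statistics: ['Sum'],
  };
}

// Convert per-period Sum datapoints into average units per second across the returned periods.
function averageUnitsPerSecond(datapoints, periodSeconds) {
  if (datapoints.length === 0) return 0;
  const total = datapoints.reduce((sum, point) => sum + point.Sum, 0);
  return total / (datapoints.length * periodSeconds);
}

console.log(consumedCapacityMetricParams('Products', 'ConsumedReadCapacityUnits', new Date()).MetricName);
```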

Identifying Inefficient Access Patterns

Analyzing performance metrics can help identify inefficient access patterns that negatively impact performance and increase costs. Specific patterns and metrics indicate the need for optimization.

- High RCU/WCU Consumption: Excessive consumption of RCUs and WCUs, relative to the number of items retrieved or written, suggests inefficient access patterns. For instance, a `Scan` operation that reads many items to find a few specific items is highly inefficient.

- Frequent Throttling: Frequent throttling indicates that the provisioned capacity is insufficient for the access patterns. This can be caused by poorly designed indexes or inefficient queries.

- High Latency: High latency for read or write operations suggests that DynamoDB is under strain. This could be due to hotspots, inefficient queries, or insufficient capacity.

- Scan Operations on Large Tables: Scan operations, which read all items in a table, are generally inefficient. They consume significant capacity and can severely impact performance, especially on large tables.

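- Inefficient Query Operations: Query operations that do not use the primary key or a secondary index effectively can also lead to performance issues. For example, a filter expression on a non-key attribute is applied only after every item matching the key condition has been read, so the query consumes capacity for all of those items even if the filter discards most of them.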
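One simple way to spot the filter and scan inefficiencies listed above is to compare the `Count` and `ScannedCount` fields that `Query` and `Scan` responses include. The sketch below computes that ratio; the function name and the idea of flagging low ratios are illustrative assumptions, not an official metric.

```javascript
// Fraction of the items read that were actually returned after filtering.
// `response` is a Query or Scan response containing Count and ScannedCount.
function readEfficiency(response) {
  if (response.ScannedCount === 0) return 1; // nothing was read, so nothing was wasted
  return response.Count / response.ScannedCount;
}

// Example: 500 items were read but only 5 survived the filter expression.
console.log(readEfficiency({ Count: 5, ScannedCount: 500 }));
```

A ratio close to 1 means the key condition is doing the selection; a ratio near 0 suggests the filtered attribute belongs in a key or an index.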

Strategies for Optimizing DynamoDB Table Designs and Access Patterns

Optimizing DynamoDB table designs and access patterns involves making informed decisions based on performance data. These optimizations can significantly improve application performance and reduce costs.

- Provisioning Adequate Capacity: Ensure sufficient read and write capacity is provisioned to handle the expected workload. Auto Scaling can dynamically adjust capacity based on demand, preventing throttling and optimizing costs.

- Using Appropriate Data Modeling: Design the table schema and indexes to support the application’s access patterns. This includes selecting the correct primary key, designing efficient secondary indexes, and denormalizing data where appropriate.

- Optimizing Queries: Optimize queries to minimize the number of items read. This includes using the primary key or appropriate secondary indexes, limiting the number of results returned, and avoiding full table scans.

- Batch Operations: Use batch operations (e.g., `BatchGetItem`, `BatchWriteItem`) to reduce the number of API calls and improve efficiency, especially for bulk operations.

- Caching: Implement caching mechanisms (e.g., using Amazon ElastiCache) to reduce the load on DynamoDB for frequently accessed data.

- Implementing Auto Scaling: Configure Auto Scaling to automatically adjust the provisioned capacity based on the actual workload. DynamoDB Auto Scaling uses target tracking on capacity utilization, that is, consumed capacity as a percentage of provisioned capacity. It reacts to sustained changes rather than instantly, so sudden spikes can still be throttled before capacity catches up.
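When deciding how much capacity to provision, a back-of-the-envelope estimate helps. The sketch below applies the standard unit definitions: one RCU covers one strongly consistent read per second of an item up to 4 KB (or two eventually consistent reads), and one WCU covers one write per second of an item up to 1 KB. The function names are made up, 1 KB is treated as 1,024 bytes, and transactional operations are not covered.

```javascript
// Estimate RCUs for a steady read rate of items of a given size.
function requiredReadCapacityUnits(itemSizeBytes, readsPerSecond, stronglyConsistent = false) {
  const unitsPerRead = Math.ceil(itemSizeBytes / 4096); // item size rounds up to the next 4 KB
  const total = unitsPerRead * readsPerSecond;
  // Eventually consistent reads cost half as much as strongly consistent reads.
  return Math.ceil(stronglyConsistent ? total : total / 2);
}

// Estimate WCUs for a steady write rate of items of a given size.
function requiredWriteCapacityUnits(itemSizeBytes, writesPerSecond) {
  return Math.ceil(itemSizeBytes / 1024) * writesPerSecond; // item size rounds up to the next 1 KB
}

console.log(requiredReadCapacityUnits(6144, 100, true), requiredWriteCapacityUnits(1500, 50));
```

Comparing such an estimate with the consumed capacity reported by CloudWatch is a quick check on whether the access pattern behaves as designed.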

Step-by-Step Procedure for Optimizing Access Patterns

Optimizing access patterns is an iterative process that involves monitoring, analysis, and refinement. The following steps provide a structured approach to optimizing DynamoDB performance.

1. Baseline Performance Measurement: Establish a baseline by monitoring performance metrics before making any changes. This baseline provides a reference point for evaluating the effectiveness of optimizations. Record metrics such as consumed RCUs/WCUs, throttled requests, latency, and the volume of data processed.

2. Identify Inefficient Access Patterns: Analyze the performance data to identify inefficient access patterns. Look for high RCU/WCU consumption, frequent throttling, high latency, and inefficient query patterns. Correlate these issues with specific application operations.

3. Analyze Table Design and Queries: Review the table design and queries to identify potential areas for improvement. Evaluate the primary key and secondary indexes to ensure they support the application’s access patterns effectively. Analyze query patterns to determine if they are using the primary key or appropriate secondary indexes.

4. Implement Optimizations: Implement optimizations based on the analysis. This may include redesigning the table schema, creating or modifying secondary indexes, optimizing queries, or using batch operations. For example, if a query frequently filters on a specific attribute, create a secondary index on that attribute.

5. Test and Validate: Test the implemented optimizations to ensure they improve performance and do not introduce new issues. Measure the performance metrics after the changes and compare them to the baseline. Use load testing tools to simulate realistic workloads and assess the impact of the changes.

6. Monitor and Iterate: Continuously monitor performance metrics and iterate on the optimization process. DynamoDB performance is dynamic, and access patterns can change over time. Regularly review performance data and make adjustments as needed. Use automated monitoring and alerting to detect performance degradations early.

Conclusion

In conclusion, mastering DynamoDB access patterns is not merely about understanding technical operations; it’s about crafting efficient, scalable, and cost-effective data solutions. From optimizing for GetItem operations to leveraging Global Secondary Indexes and managing time-series data, the principles discussed provide a solid foundation for designing and maintaining high-performing DynamoDB applications. By carefully considering access patterns, you can unlock the full potential of DynamoDB, ensuring your applications meet performance and budgetary goals.

Helpful Answers

What is the primary difference between Query and Scan operations in DynamoDB?

Query operations are significantly more efficient because they use the primary key or an index to locate data directly. Scan operations, on the other hand, read every item in a table or index, making them less efficient and potentially more expensive, especially for large datasets.

How do Global Secondary Indexes (GSIs) improve access patterns?

GSIs allow you to query data based on attributes other than the primary key, creating alternative access paths. This supports diverse access patterns and enables efficient retrieval of data based on different search criteria, without having to scan the entire table.

What are the potential performance implications of using Scan operations frequently?

Frequent Scan operations can lead to high read capacity unit (RCU) consumption, increased latency, and higher costs. They can also impact the overall performance of your DynamoDB table, especially during periods of high traffic. It’s crucial to use Scan operations sparingly and optimize them whenever possible.

How can I monitor and optimize DynamoDB access patterns?

Monitor DynamoDB performance metrics such as consumed RCUs, latency, and throttling events using CloudWatch. Analyze your application’s access patterns to identify inefficient operations, and optimize table designs, indexes, and queries accordingly. Regularly review and adjust your access patterns based on performance data.