Migrating large databases presents a complex undertaking, fraught with potential pitfalls that can significantly impact business operations. The process demands meticulous planning, technical expertise, and a deep understanding of various interconnected factors. This exploration delves into the multifaceted challenges inherent in such migrations, providing a structured analysis of critical considerations from initial planning to post-migration maintenance. The successful migration of a large database is not merely a technical feat; it’s a strategic imperative that requires careful orchestration across multiple domains.

This document systematically dissects the key aspects of database migration, including data compatibility, downtime mitigation, security protocols, and resource allocation. We will examine strategies to minimize disruption, ensure data integrity, and optimize performance. The goal is to equip readers with the knowledge necessary to navigate the complexities of database migration projects effectively, ultimately leading to successful outcomes and minimal business impact.

Planning and Preparation Challenges

Large database migrations require meticulous planning and preparation to mitigate risks and ensure a successful transition. The planning phase lays the groundwork for the entire migration process, and insufficient planning often leads to project delays, budget overruns, and operational disruptions.

Comprehensive Pre-Migration Assessments

Thorough pre-migration assessments are fundamental to understanding the current database environment and identifying potential challenges. This involves a multi-faceted evaluation process that covers various aspects of the existing system.

- Database Inventory and Analysis: This step involves a detailed inventory of all database instances, including their versions, sizes, and configurations. Analyzing the schema, data types, and dependencies within the database is essential to understand the complexity of the data model. This analysis identifies potential compatibility issues with the target database. For instance, migrating from an older version of SQL Server to a newer version might expose deprecated features or data types that require conversion.

- Performance Evaluation: Assessing the current database’s performance is critical. This includes evaluating query performance, identifying bottlenecks, and measuring resource utilization (CPU, memory, disk I/O). Tools like performance monitoring software, database query analyzers, and profiling tools are used to collect data. This evaluation helps to estimate the impact of the migration on performance and identify areas for optimization. For example, if a database consistently experiences high CPU utilization, the migration plan might need to include server upgrades or query optimization strategies.

- Application Dependency Mapping: Understanding how applications interact with the database is crucial. This involves identifying all applications that access the database, analyzing their connection strings, and assessing the impact of the migration on these applications. This may involve code analysis to determine the SQL queries used by the applications. A poorly mapped dependency can lead to application outages after migration.

- Data Quality Assessment: Evaluating the quality of the data within the database is vital. This includes assessing data consistency, completeness, and accuracy. Data profiling tools are often used to identify data quality issues, such as missing values, incorrect formats, and duplicate records. Addressing data quality issues before migration can prevent data corruption and ensure data integrity in the target database.

- Security Assessment: Evaluating the security posture of the existing database is critical. This includes identifying security vulnerabilities, assessing access controls, and evaluating data encryption. The security assessment informs the security strategy for the target database, ensuring that data is protected during and after the migration.
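The inventory step above can be sketched in a few lines. This is a minimal illustration, assuming an in-memory SQLite database as a stand-in for the real source system. The function names `inventory_tables` and `build_demo_source` are hypothetical, and a production inventory would query the source platform's own catalog views instead.

```python
import sqlite3


def inventory_tables(conn: sqlite3.Connection) -> list:
    """Return one record per user table: name, column count, and row count."""
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    report = []
    for (name,) in tables:
        # Names come from the catalog itself; quote them before embedding in SQL.
        quoted = '"' + name.replace('"', '""') + '"'
        columns = conn.execute(f"PRAGMA table_info({quoted})").fetchall()
        (row_count,) = conn.execute(f"SELECT COUNT(*) FROM {quoted}").fetchone()
        report.append({"table": name, "columns": len(columns), "rows": row_count})
    return report


def build_demo_source() -> sqlite3.Connection:
    """Create a tiny demo schema (hypothetical data, for illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
        INSERT INTO customers (email) VALUES ('a@example.com'), ('b@example.com');
        INSERT INTO orders (customer_id, total) VALUES (1, 9.5), (1, 20.0), (2, 5.25);
        """
    )
    return conn


if __name__ == "__main__":
    for entry in inventory_tables(build_demo_source()):
        print(entry)
```

Collecting the same three figures per table before and after migration also gives a cheap first baseline for later reconciliation.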

Defining Scope and Objectives

Clearly defining the scope and objectives is essential for guiding the migration project and ensuring alignment among stakeholders. This process clarifies the project’s boundaries, goals, and success criteria.

- Defining the Scope: The scope defines the boundaries of the migration project. It specifies which databases, schemas, and data will be migrated. It also includes details on which applications are involved and the cutover strategy. For example, the scope might define a migration of a specific set of databases from an on-premises environment to a cloud-based platform, including the applications that access those databases.

- Establishing Objectives: Objectives outline the specific goals of the migration project. These should be SMART (Specific, Measurable, Achievable, Relevant, and Time-bound). Examples include reducing operational costs, improving performance, enhancing scalability, or improving data security.

- Developing a Migration Strategy: A well-defined migration strategy outlines the approach to be used for the migration. This strategy encompasses selecting the migration method (e.g., online, offline, or hybrid), choosing the target database platform, and defining the cutover plan. The choice of strategy depends on factors like downtime tolerance, data volume, and application requirements.

- Identifying Key Performance Indicators (KPIs): KPIs are metrics used to measure the success of the migration project. Examples include migration time, downtime, data loss, and performance improvements. KPIs should be defined early in the planning phase to track progress and measure the success of the project.

- Stakeholder Alignment: Engaging with stakeholders, including business users, application developers, database administrators, and security teams, is crucial. Their input is vital for defining the scope, objectives, and success criteria. Clear communication and alignment among stakeholders can prevent misunderstandings and ensure that the migration meets the needs of the business.

Common Pitfalls and Avoidance Strategies

The planning phase is susceptible to several pitfalls that can significantly impact the migration project. Recognizing and proactively addressing these pitfalls is crucial for project success.

- Underestimating Complexity: Large database migrations are often more complex than initially anticipated. Data volumes, application dependencies, and performance requirements can create unforeseen challenges. To avoid this pitfall, conduct a thorough pre-migration assessment.

- Inadequate Testing: Insufficient testing is a common cause of migration failures. Thoroughly test the migration process in a non-production environment before migrating to production. This includes functional testing, performance testing, and security testing.

- Ignoring Data Quality Issues: Data quality issues can become amplified during migration. Addressing data quality problems before migration can prevent data corruption and ensure data integrity in the target database. Implement data cleansing and validation processes.

- Lack of a Rollback Plan: A rollback plan is a contingency plan that outlines the steps to revert to the original database environment in case of migration failure. A well-defined rollback plan is essential for minimizing downtime and mitigating risks. Test the rollback plan thoroughly.

- Poor Communication: Inadequate communication among stakeholders can lead to misunderstandings and delays. Establish clear communication channels and regularly update stakeholders on the project’s progress. Hold regular meetings and provide status reports.

- Insufficient Resource Allocation: Failing to allocate sufficient resources (personnel, hardware, software) can lead to delays and project failure. Accurately estimate the resources needed for the migration project and secure them in advance. Consider the experience and expertise of the team.

- Ignoring Security Considerations: Neglecting security aspects can expose sensitive data to risks. Include security assessments and incorporate security measures throughout the migration process. Implement robust access controls, encryption, and data masking.

Data Compatibility and Schema Transformation

Migrating large databases often necessitates significant effort in ensuring data compatibility and transforming schemas to function effectively within the target environment. This phase addresses the intricacies of handling diverse data types, mapping schema elements, and resolving inconsistencies that arise during the transition. Successful execution is crucial for maintaining data integrity and operational efficiency post-migration.

Data Type Conversions and Schema Mapping

The diverse data types supported by different database systems pose a significant challenge during migration. Systems like Oracle, SQL Server, and PostgreSQL each have their specific implementations and nuances, making direct data transfer complex.

- Data type mismatches are a common issue. For instance, converting an Oracle `NUMBER` to a SQL Server `DECIMAL` requires careful consideration of precision and scale to avoid data truncation or loss of accuracy. A `BLOB` in one system might need to be mapped to a `VARBINARY` or a file storage mechanism in another.

- Schema mapping involves translating the structure of the source database to the target. This includes mapping tables, columns, indexes, constraints, and relationships. For example, an Oracle sequence might need to be converted to an identity column in SQL Server or a PostgreSQL sequence.

- The use of vendor-specific features can complicate the process. Functions, stored procedures, and triggers written in proprietary languages like PL/SQL (Oracle) or T-SQL (SQL Server) need to be rewritten or emulated in the target environment, which might require substantial code modifications.

- Consider the example of migrating a large e-commerce database. In Oracle, a product description field might be stored as a `CLOB` (Character Large Object). When migrating to MySQL, this might be mapped to a `TEXT` or `LONGTEXT` column, depending on the maximum length. However, if the Oracle database stores Unicode text (e.g., in the AL32UTF8 character set), it’s crucial to configure the target MySQL database with `utf8mb4` rather than the legacy three-byte `utf8` alias, so that characters outside the Basic Multilingual Plane are not lost or corrupted.
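The precision and scale concern for `NUMBER` to `DECIMAL` conversions can be made concrete with a small mapping function. This is a sketch under stated assumptions, not a complete converter: the names `TypeMapping` and `map_oracle_number` and the `DECIMAL(38, 10)` fallback for an unconstrained `NUMBER` are illustrative choices, and any mapping flagged for review would still need a decision by someone who knows the data.

```python
import re
from dataclasses import dataclass

SQLSERVER_MAX_PRECISION = 38  # upper limit for DECIMAL precision in SQL Server
UNCONSTRAINED_FALLBACK = "DECIMAL(38, 10)"  # assumption: project-specific default

_NUMBER_RE = re.compile(
    r"NUMBER\s*(?:\(\s*(?P<p>\d+)\s*(?:,\s*(?P<s>-?\d+)\s*)?\))?", re.IGNORECASE
)


@dataclass(frozen=True)
class TypeMapping:
    target: str
    needs_review: bool  # True when the mapping is not an exact equivalent


def map_oracle_number(type_decl: str) -> TypeMapping:
    """Map an Oracle NUMBER declaration to a SQL Server DECIMAL declaration."""
    match = _NUMBER_RE.fullmatch(type_decl.strip())
    if match is None:
        raise ValueError(f"not a supported NUMBER declaration: {type_decl!r}")
    if match.group("p") is None:
        # NUMBER without precision has no fixed scale, so no exact DECIMAL exists.
        return TypeMapping(UNCONSTRAINED_FALLBACK, needs_review=True)
    precision = int(match.group("p"))
    scale = int(match.group("s") or 0)
    review = False
    if scale < 0:
        # Oracle rounds to the left of the decimal point; widen and drop the scale.
        precision, scale, review = precision - scale, 0, True
    elif scale > precision:
        # Oracle allows scale > precision; DECIMAL requires scale <= precision.
        precision, review = scale, True
    if precision > SQLSERVER_MAX_PRECISION:
        raise ValueError(f"{type_decl!r} does not fit into DECIMAL")
    return TypeMapping(f"DECIMAL({precision}, {scale})", review)


if __name__ == "__main__":
    for decl in ("NUMBER(10,2)", "NUMBER(5)", "NUMBER", "NUMBER(5,-2)"):
        print(decl, "->", map_oracle_number(decl))
```

Returning a review flag instead of silently picking a type keeps lossy conversions visible in the schema mapping report.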

Procedure for Identifying and Resolving Data Inconsistencies

Data inconsistencies can arise from various sources, including data quality issues in the source database, differing data validation rules, and transformation errors during migration. A structured procedure is essential to identify and resolve these issues.

- Data Profiling: Before migration, comprehensive data profiling is essential. This involves analyzing data to understand its characteristics, identify potential quality issues, and establish baselines for comparison. Tools can be used to examine data types, identify null values, detect outliers, and assess data distributions.

- Data Validation Rules: Document and translate the source database’s validation rules into the target environment. These rules enforce data integrity, and any discrepancies between the source and target must be addressed.

- Data Cleansing: Implement a data cleansing process to correct identified inconsistencies. This can involve standardizing data formats, removing duplicates, correcting invalid values, and handling missing data. For instance, a postal code might need to be validated against a known list and corrected if it’s inaccurate.

- Data Transformation: Develop and execute data transformation scripts or mappings to convert data from the source format to the target format. This may include data type conversions, schema mapping, and the application of business rules.

- Data Comparison and Reconciliation: After the initial migration, compare the data in the source and target databases. This can involve using data comparison tools or writing custom scripts to identify discrepancies. The discrepancies must be investigated, and appropriate reconciliation actions must be taken.

- Auditing and Monitoring: Implement an auditing mechanism to track data changes during the migration process. This helps in identifying and resolving any issues that arise during data transformation and loading.
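The comparison and reconciliation step can be sketched as a keyed fingerprint comparison. This minimal example assumes both row sets fit in memory and that the first column is the primary key. The helper names `row_fingerprint` and `reconcile` are hypothetical, and large tables would normally be compared in chunks or with a dedicated comparison tool.

```python
import hashlib


def row_fingerprint(row: tuple) -> str:
    """Hash a row so that None, 1, and '1' produce different fingerprints."""
    payload = "\x1f".join(repr(value) for value in row)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key_index: int = 0) -> dict:
    """Compare two row sets by key and report the differences."""
    source = {row[key_index]: row_fingerprint(row) for row in source_rows}
    target = {row[key_index]: row_fingerprint(row) for row in target_rows}
    shared = source.keys() & target.keys()
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(key for key in shared if source[key] != target[key]),
    }


if __name__ == "__main__":
    source = [(1, "Ann", "10115"), (2, "Bob", None), (3, "Cy", "90210")]
    target = [(1, "Ann", "10115"), (2, "Bob", ""), (4, "Di", "12345")]
    print(reconcile(source, target))
```

In the demo data, key 2 is reported as mismatched because a `NULL` in the source became an empty string in the target, a typical transformation error that row counts alone would not reveal.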

Transforming Data Structures for Optimal Performance Post-Migration

Optimizing the data structure for performance is critical to ensuring the migrated database functions efficiently in the target environment. This requires considering the target database’s specific features, indexing strategies, and query optimization techniques.

- Indexing Strategy: Design and implement an appropriate indexing strategy for the target database. This involves identifying frequently queried columns and creating indexes to speed up data retrieval. Consider the query patterns used by the application to determine the most effective index types (e.g., B-tree, hash, or full-text indexes).

- Data Partitioning: For very large tables, consider data partitioning to improve query performance and manageability. This involves dividing the table into smaller, more manageable segments based on a partitioning scheme (e.g., range, list, or hash) applied to a chosen key such as a date column. For example, a historical sales data table can be partitioned by year or month.

- Data Denormalization: In some cases, denormalizing the data can improve query performance. This involves introducing redundancy to reduce the need for joins. However, denormalization must be done carefully to avoid data inconsistencies.

- Performance Testing and Tuning: Conduct performance tests to evaluate the performance of the migrated database. This involves running realistic queries and monitoring the response times. Based on the results, fine-tune the database configuration, indexes, and query optimization to achieve optimal performance.

- Example: Consider a table containing customer orders with millions of records. After migration, the application’s performance might suffer due to slow query response times. To optimize performance, create an index on the `order_date` column, partition the table by year, and consider denormalizing the customer address information to avoid joins with the customer address table.
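The effect of the `order_date` index in the example above can be checked by comparing query plans before and after creating it. The sketch below uses an in-memory SQLite database purely as a stand-in. The table layout, the index name `idx_orders_order_date`, and the helper names are assumptions, and partitioning is not shown because SQLite does not support it.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params=()) -> list:
    """Return the human-readable detail column of EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]


def demo_index_effect():
    """Build a demo orders table and return the plan before and after indexing."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders ("
        "id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT, total REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, order_date, total) VALUES (?, ?, ?)",
        [
            (i % 50, f"20{20 + i % 5}-{1 + i % 12:02d}-15", float(i))
            for i in range(1000)
        ],
    )
    sql = "SELECT COUNT(*) FROM orders WHERE order_date >= ? AND order_date < ?"
    params = ("2023-01-01", "2024-01-01")
    before = query_plan(conn, sql, params)
    conn.execute("CREATE INDEX idx_orders_order_date ON orders (order_date)")
    after = query_plan(conn, sql, params)
    return before, after


if __name__ == "__main__":
    before, after = demo_index_effect()
    print("before:", before)
    print("after: ", after)
```

The same before-and-after comparison applies on other platforms through their own plan commands. The exact plan text differs by engine and version, so it is safer to check for the index name than for a literal string.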

Downtime and Business Impact

The duration of downtime during a database migration directly correlates with the operational disruptions experienced by a business. Minimizing downtime is paramount, as even brief interruptions can lead to significant financial losses, reputational damage, and reduced customer satisfaction. A well-defined migration strategy must prioritize techniques that mitigate these risks, ensuring business continuity throughout the transition.

Strategies to Minimize Downtime

Several techniques can be employed to reduce the downtime associated with database migration. These strategies involve careful planning, advanced technologies, and meticulous execution.

- Choosing the Right Migration Approach: The selection of a migration strategy is crucial. Options include:

  - Big Bang Migration: This involves a complete switchover from the old database to the new one at a single point in time. While it offers the advantage of simplicity, it typically results in extended downtime, making it suitable only for systems with limited operational dependencies or a high tolerance for interruption.

  - Trickle Migration: Data is migrated incrementally over time, with the old and new systems coexisting. This approach minimizes downtime but requires careful synchronization of data between the two systems and may incur higher operational costs during the transition period.

  - Parallel Run Migration: Both the old and new databases run in parallel, with data replicated to the new system. This allows for testing and validation of the new system before the final switchover, thus minimizing downtime. However, it demands significant resources to maintain both systems concurrently.

- Implementing Data Replication: Technologies like database replication can minimize downtime by continuously replicating data from the source database to the target database. This ensures that the target database is up-to-date when the switchover occurs, allowing for a quicker transition.

- Utilizing Zero-Downtime Migration Tools: Specialized migration tools and services offer features designed to minimize or eliminate downtime. These tools often employ techniques like transaction replay, schema conversion, and automated failover mechanisms.

- Pre-Migration Testing and Validation: Thorough testing of the migration process in a non-production environment is essential. This includes data validation, performance testing, and failover testing. These tests identify potential issues and allow for corrective actions before the live migration.

- Optimizing Network and Hardware: Ensure the network infrastructure and hardware resources are sufficient to handle the increased load during the migration. Network bottlenecks and insufficient hardware can significantly prolong the migration process and downtime.

- Scheduling Migration During Off-Peak Hours: Performing the migration during periods of low business activity can reduce the impact of downtime on users and operations. This requires careful planning and coordination to minimize disruptions.

- Automated Rollback Procedures: Establishing automated rollback procedures is critical. In case of issues during the migration, the system should be able to revert to the original state quickly, minimizing downtime and potential data loss.
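An automated rollback procedure can be sketched as a list of cutover steps, each paired with an undo action that runs in reverse order if a later step fails. This is a simplified illustration: the `Step` type, `run_with_rollback`, and the demo steps are hypothetical, and a real procedure would also need to handle failures inside the undo actions themselves.

```python
from typing import Callable, NamedTuple


class Step(NamedTuple):
    name: str
    apply: Callable[[], None]
    undo: Callable[[], None]


def run_with_rollback(steps):
    """Run steps in order; on failure, undo the completed steps in reverse."""
    log, done = [], []
    for step in steps:
        try:
            step.apply()
        except Exception as exc:
            log.append(f"failed: {step.name} ({exc})")
            for finished in reversed(done):
                finished.undo()
                log.append(f"rolled back: {finished.name}")
            return False, log
        done.append(step)
        log.append(f"applied: {step.name}")
    return True, log


def demo_cutover(fail_final_sync: bool):
    """Simulate a cutover against an in-memory state dict (illustration only)."""
    state = {"old_read_only": False, "active": "old"}

    def final_sync():
        if fail_final_sync:
            raise RuntimeError("replication lag too high")

    steps = [
        Step(
            "freeze writes on old database",
            lambda: state.update(old_read_only=True),
            lambda: state.update(old_read_only=False),
        ),
        Step("final sync", final_sync, lambda: None),
        Step(
            "repoint applications",
            lambda: state.update(active="new"),
            lambda: state.update(active="old"),
        ),
    ]
    ok, log = run_with_rollback(steps)
    return ok, log, state


if __name__ == "__main__":
    print(demo_cutover(fail_final_sync=True))
```

Keeping each undo action next to its step makes it harder to add a cutover step without also deciding how to reverse it.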

Potential Business Impacts of Prolonged Downtime

Prolonged downtime can have far-reaching consequences for a business, impacting various aspects of its operations and financial performance.

- Financial Losses: Downtime directly translates to lost revenue, as the business cannot process transactions or provide services. This includes lost sales, order cancellations, and penalties for failing to meet service level agreements (SLAs). For instance, a major e-commerce platform experiencing a 24-hour outage during a peak shopping season could incur millions of dollars in lost revenue.

- Reputational Damage: Downtime can erode customer trust and damage the company’s reputation. Negative publicity, social media backlash, and loss of customer confidence can lead to long-term consequences, including decreased customer loyalty and difficulty attracting new customers.

- Reduced Productivity: Employees may be unable to perform their duties during downtime, leading to decreased productivity and efficiency. This can affect various departments, including customer service, sales, and operations.

- Operational Disruptions: Downtime can disrupt critical business processes, such as order fulfillment, inventory management, and supply chain operations. This can lead to delays, errors, and increased operational costs.

- Legal and Compliance Issues: In some industries, downtime can lead to legal and compliance issues. For example, financial institutions must comply with regulations regarding data availability and transaction processing. Downtime can result in penalties or legal action.

- Employee Morale: Prolonged downtime can negatively impact employee morale. Employees may feel frustrated and stressed, leading to decreased productivity and increased employee turnover.

Schedule of Migration Phases with Estimated Downtime

Creating a detailed migration schedule is essential for managing downtime effectively. The schedule should outline the phases of the migration, estimated duration, and associated downtime. This schedule should be flexible to accommodate unexpected issues, with clearly defined contingency plans.

| Phase | Description | Activities | Estimated Duration | Estimated Downtime |
|---|---|---|---|---|
| Planning and Preparation | Defining scope, selecting tools, and preparing the environment. | Requirements gathering, tool selection, environment setup, data mapping, and schema conversion. | 4-8 weeks | None |
| Data Extraction and Transformation | Extracting data from the source database and transforming it to match the target database schema. | Data extraction scripts, data cleansing, data validation, and transformation processes. | 2-4 weeks | None |
| Data Loading and Validation | Loading the transformed data into the target database and validating its integrity. | Data loading scripts, data validation checks, and reconciliation reports. | 1-2 weeks | None |
| Testing and Performance Tuning | Testing the new database and optimizing its performance. | Functional testing, performance testing, security testing, and performance tuning. | 2-4 weeks | None |
| Cutover and Switchover | Switching over to the new database. | Final data synchronization, application switchover, and DNS updates. | 2-8 hours (depending on chosen approach) | 2-8 hours (Big Bang) or minimal (Trickle, Parallel Run) |
| Post-Migration Validation | Verifying the functionality and performance of the new database. | Functional testing, performance monitoring, and user acceptance testing. | 1-2 weeks | None |

The estimated durations and downtime figures are indicative and can vary significantly depending on the size and complexity of the database, the chosen migration approach, and the tools and technologies used. As a rough illustration, a parallel run approach backed by continuous replication can often keep cutover downtime within a window of about 4 hours, whereas a migration involving substantial data transformation might extend the downtime to 8 hours or more, especially with a Big Bang approach.

Data Transfer Methods and Performance

Migrating large databases necessitates careful consideration of data transfer methods, as the chosen approach significantly impacts migration time, downtime, and overall efficiency. Understanding the strengths and weaknesses of different methods is crucial for selecting the optimal strategy. Performance optimization techniques and an awareness of influencing factors are essential to minimize disruption and ensure a smooth transition.

Data Transfer Method Comparison

Several data transfer methods exist, each with distinct characteristics suitable for different scenarios. The selection of the best method hinges on factors such as acceptable downtime, data consistency requirements, and the complexity of the source and target systems.

- Bulk Loading: This method involves transferring large volumes of data in a single batch. It’s typically the fastest approach for initial data migration.

  - Advantages: High throughput, efficient for large datasets.

  - Disadvantages: Requires downtime, may not capture ongoing changes.

  - Use Cases: Initial migration of static data, large-scale data warehousing.

- Change Data Capture (CDC): CDC captures and tracks data changes in real-time or near real-time. This method allows for incremental data transfer, minimizing downtime.

  - Advantages: Minimizes downtime, supports continuous data synchronization.

  - Disadvantages: Increased complexity, potential performance overhead on the source system.

  - Use Cases: Continuous data synchronization, minimal-downtime migrations, real-time analytics.

- Online Migration: Online migration combines bulk loading with CDC. It initially transfers the bulk of the data and then uses CDC to synchronize ongoing changes.

  - Advantages: Reduced downtime compared to bulk loading alone, supports data consistency.

  - Disadvantages: More complex to implement than bulk loading, potential for increased resource consumption.

  - Use Cases: Migrations where downtime must be minimized, and data consistency is critical.
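The online migration pattern, a bulk load followed by CDC catch-up, can be illustrated with plain dictionaries standing in for tables. This is a conceptual sketch only: the event tuple layout `(sequence, operation, key, value)` and the function names are assumptions, and real CDC tools read changes from the database's transaction log rather than from a Python list.

```python
def bulk_load(snapshot: dict) -> dict:
    """Copy a point-in-time snapshot of the source into a new target."""
    return dict(snapshot)


def apply_changes(target: dict, changes, after_seq: int) -> int:
    """Apply ordered change events newer than after_seq; return the last applied."""
    last = after_seq
    for seq, operation, key, value in changes:
        if seq <= last:
            continue  # already contained in the snapshot or applied earlier
        if operation in ("insert", "update"):
            target[key] = value
        elif operation == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {operation!r}")
        last = seq
    return last


def demo_online_migration():
    # Change log on the source; the snapshot was taken after event 2.
    change_log = [
        (1, "insert", 1, "Ann"),
        (2, "insert", 2, "Bob"),
        (3, "update", 2, "Bobby"),
        (4, "delete", 1, None),
        (5, "insert", 3, "Cy"),
    ]
    snapshot, snapshot_seq = {1: "Ann", 2: "Bob"}, 2
    target = bulk_load(snapshot)
    last_seq = apply_changes(target, change_log, after_seq=snapshot_seq)
    return target, last_seq


if __name__ == "__main__":
    print(demo_online_migration())
```

Tracking the last applied sequence number makes the catch-up phase safe to re-run, since replaying the same log a second time applies nothing.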

Optimizing Data Transfer Performance

Optimizing data transfer performance is critical to reducing migration time and minimizing business impact. Several techniques can be employed to improve the speed and efficiency of data transfer operations.

- Parallelism: Utilizing parallel processing to transfer data in multiple threads or processes. This can significantly reduce the overall transfer time. For example, a database migration tool might allow for the parallel loading of tables, where multiple tables are loaded concurrently, utilizing multiple CPU cores.

- Network Optimization: Optimizing network bandwidth and latency. This can involve using high-speed network connections and configuring network settings for optimal performance. In practice, this might mean upgrading from a 1 Gbps network connection to a 10 Gbps connection between the source and target databases.

- Compression: Compressing data during transfer to reduce the amount of data that needs to be transmitted. This can be particularly effective when transferring data over a network with limited bandwidth. A common example is using gzip compression during the data transfer process, significantly reducing the data volume.

- Indexing Strategy: Deciding when to build indexes on the target database. Primary keys and constraints needed during loading are typically created up front, but secondary indexes usually slow bulk inserts because every inserted row must also update each index. A common approach is to load the data first and then build indexes on frequently queried columns before cutover, so that query performance is in place once the migration completes.

- Resource Allocation: Allocating sufficient resources, such as CPU, memory, and disk I/O, to the data transfer process. Insufficient resources can create bottlenecks and slow down the migration. For instance, providing the migration process with dedicated high-speed storage can prevent I/O bottlenecks.

- Staging Area: Using a staging area to pre-process and transform data before loading it into the target database. This can improve data loading performance and reduce the load on the target database during the migration process. An example is using a staging database to perform data cleansing, transformation, and pre-sorting before loading data into the final target.
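Two of the techniques above, parallelism and compression, can be combined in a short sketch that compresses several table extracts concurrently with gzip. The table data and helper names are hypothetical, and the example makes no claim about speedup: actual gains depend on the data, the hardware, and the migration tool in use.

```python
import gzip
from concurrent.futures import ThreadPoolExecutor


def encode_rows(rows) -> bytes:
    """Serialize rows as tab-separated lines (a stand-in for a real export format)."""
    return "\n".join("\t".join(str(v) for v in row) for row in rows).encode("utf-8")


def compress_table(rows):
    """Return the uncompressed size and the gzip-compressed payload for one table."""
    raw = encode_rows(rows)
    return len(raw), gzip.compress(raw)


def compress_tables(tables: dict, max_workers: int = 4) -> dict:
    """Compress every table extract, processing several tables concurrently."""
    names = list(tables)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(compress_table, (tables[name] for name in names)))
    return dict(zip(names, results))


def demo_tables() -> dict:
    return {
        "customers": [(i, f"customer{i}@example.com", "DE") for i in range(500)],
        "orders": [(i, i % 500, "2024-01-15", "SHIPPED") for i in range(2000)],
    }


if __name__ == "__main__":
    for name, (raw_size, payload) in compress_tables(demo_tables()).items():
        print(f"{name}: {raw_size} bytes -> {len(payload)} bytes")
```

Repetitive columns such as status codes and dates compress well, which is why compression tends to pay off most on bandwidth-limited links.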

Factors Affecting Data Transfer Speed and Efficiency

Several factors influence the speed and efficiency of data transfer, including network characteristics, database configuration, and data characteristics. Understanding these factors is essential for effective migration planning and execution.

- Network Bandwidth and Latency: The available network bandwidth and latency between the source and target databases directly impact data transfer speed. High bandwidth and low latency are crucial for fast data transfer. For example, a migration between two data centers with high latency could significantly slow down the process compared to a migration within the same data center with low latency.

- Database Configuration: The configuration of both the source and target databases can affect transfer performance. Factors such as buffer pool size, logging settings, and indexing strategies play a role. For instance, sizing the buffer pool on the target database so that the working set of the load fits in memory can noticeably improve data loading speed.

- Data Volume and Complexity: The size and complexity of the data being transferred are significant factors. Larger datasets and complex data structures require more time and resources to transfer. For example, migrating a database with terabytes of data will inherently take longer than migrating a database with gigabytes of data.

- Hardware Resources: The CPU, memory, and disk I/O capabilities of both the source and target servers influence data transfer performance. Sufficient hardware resources are essential to avoid bottlenecks. A server with fast solid-state drives (SSDs) will outperform a server with slower spinning hard disk drives (HDDs) during data loading.

- Data Transformation Requirements: Any data transformation operations performed during the transfer process can impact performance. Complex transformations can increase the processing time. If data needs to be converted from one format to another, this can add significant overhead.

- Source System Load: The load on the source database during the transfer can affect performance. High CPU or I/O utilization on the source system can slow down the data transfer process. Running a migration during off-peak hours can help mitigate this issue.
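The relationship between data volume, bandwidth, and transfer time can be estimated with simple arithmetic. The sketch below is a back-of-the-envelope model: the function name, the 70% link efficiency default, and the compression ratio parameter are assumptions for illustration, and it ignores latency, transformation cost, and source system load.

```python
def estimate_transfer_hours(
    data_gb: float,
    bandwidth_gbps: float,
    efficiency: float = 0.7,
    compression_ratio: float = 1.0,
) -> float:
    """Estimate transfer time in hours.

    data_gb: data volume in gigabytes (decimal, 1 GB = 8 gigabits)
    bandwidth_gbps: nominal link speed in gigabits per second
    efficiency: assumed fraction of nominal bandwidth actually achieved
    compression_ratio: uncompressed size divided by compressed size
    """
    if data_gb < 0:
        raise ValueError("data_gb must not be negative")
    if bandwidth_gbps <= 0 or compression_ratio <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bandwidth, efficiency, and compression must be positive")
    gigabits_on_wire = data_gb * 8 / compression_ratio
    seconds = gigabits_on_wire / (bandwidth_gbps * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    for gbps in (1, 10):
        hours = estimate_transfer_hours(10_000, gbps)
        print(f"10 TB over {gbps} Gbps: about {hours:.1f} hours")
```

Under these assumptions, 10 TB takes roughly 31.7 hours over a 1 Gbps link and about 3.2 hours over 10 Gbps, which shows why network capacity is often the first constraint to check when sizing a cutover window.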

Security and Compliance Concerns

Migrating large databases presents significant security and compliance challenges, particularly when sensitive data is involved. Failure to adequately address these concerns can lead to data breaches, regulatory penalties, and reputational damage. A robust security strategy is essential throughout the migration process to protect sensitive information and ensure adherence to relevant data privacy regulations.

Security Risks Associated with Migrating Sensitive Data

The migration of sensitive data introduces several security risks that must be carefully managed. These risks stem from vulnerabilities inherent in the migration process itself, the environments involved, and the data’s sensitivity.

- Data Exposure During Transfer: Data in transit is susceptible to interception and unauthorized access. Unencrypted data packets can be easily captured and analyzed, exposing sensitive information to potential attackers. The risk is amplified when data traverses public networks.

- Insufficient Access Controls: Weak or poorly implemented access controls can lead to unauthorized data access. This includes inadequate authentication mechanisms, insufficient role-based access controls (RBAC), and lack of proper auditing of user activities.

- Vulnerability Exploitation in Source and Target Systems: Both the source and target database systems may have vulnerabilities that can be exploited during the migration process. Exploitation of these vulnerabilities can lead to data breaches, data corruption, or system compromise. This is particularly concerning if the systems are not up-to-date with the latest security patches.

- Insider Threats: Individuals involved in the migration process, whether internal employees or external contractors, can pose a security risk. Malicious insiders can intentionally or unintentionally compromise data security. The risk is exacerbated by inadequate background checks, lack of security awareness training, and insufficient monitoring of privileged access.

- Data Loss or Corruption: Data loss or corruption can occur due to various factors, including hardware failures, software bugs, human error, or malicious attacks. This can result in the unavailability of data, financial losses, and reputational damage.

- Compliance Violations: Failure to comply with relevant data privacy regulations, such as GDPR, CCPA, or HIPAA, can result in significant financial penalties, legal action, and loss of customer trust. The migration process must be designed to ensure compliance with all applicable regulations.

Checklist for Ensuring Data Privacy and Compliance with Regulations

Adhering to data privacy regulations during database migration necessitates a meticulous approach. This checklist outlines key steps to ensure compliance and data protection.

- Identify and Classify Data: Conduct a thorough data inventory to identify all sensitive data elements and classify them based on their sensitivity level (e.g., Personally Identifiable Information (PII), Protected Health Information (PHI), financial data). This is a foundational step for applying appropriate security controls.

- Assess Regulatory Requirements: Determine all applicable data privacy regulations based on the location of the data, the location of the data subjects, and the nature of the business. Understand the specific requirements of each regulation, including data minimization, purpose limitation, and data retention.

- Develop a Data Migration Plan: Create a detailed data migration plan that addresses all aspects of the migration process, including security considerations. The plan should outline the migration strategy, the technologies to be used, the roles and responsibilities of each team member, and the security controls to be implemented.

- Implement Strong Access Controls: Implement robust access controls to restrict access to sensitive data to authorized personnel only. Use role-based access control (RBAC) to grant users only the necessary permissions. Implement multi-factor authentication (MFA) to verify user identities. Regularly review and update access controls.

- Encrypt Data at Rest and in Transit: Encrypt sensitive data at rest within the database and in transit during the migration process. Use strong encryption algorithms, such as AES-256, and manage encryption keys securely. Encryption protects data from unauthorized access even if the storage media or network is compromised.

- Secure the Migration Environment: Secure the migration environment, including the source and target systems, the network infrastructure, and any intermediate systems used for data transformation. Implement security best practices, such as patching vulnerabilities, configuring firewalls, and monitoring network traffic.

- Implement Data Masking and Anonymization: Use data masking and anonymization techniques to protect sensitive data during testing and development. This involves replacing sensitive data with fictitious but realistic values or removing identifying information altogether.

- Monitor and Audit the Migration Process: Implement comprehensive monitoring and auditing to track all activities during the migration process. Log all access attempts, data modifications, and security events. Regularly review audit logs to identify any security breaches or policy violations.

- Conduct Data Quality Checks: Implement data quality checks to ensure that data is accurate, complete, and consistent throughout the migration process. This helps to prevent data loss or corruption and ensures the integrity of the migrated data.

- Document Everything: Document all aspects of the migration process, including the data inventory, the migration plan, the security controls implemented, and the audit logs. Documentation is essential for demonstrating compliance with regulatory requirements and for troubleshooting any issues that may arise.
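The masking item in the checklist can be illustrated with a minimal sketch. It assumes keyed hashing (HMAC-SHA-256) as the masking technique, which is one option among several and not something the checklist prescribes. The key value, the field names, and the helper names (`pseudonymize`, `fake_ssn`, `mask_record`) are hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would be fetched from a key management system.
MASKING_KEY = b"replace-with-a-secret-from-your-kms"


def pseudonymize(value: str, key: bytes = MASKING_KEY, length: int = 12) -> str:
    """Return a stable token for a sensitive value, derived with a keyed hash."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def fake_ssn(ssn: str, key: bytes = MASKING_KEY) -> str:
    """Return a fictitious value that keeps the NNN-NN-NNNN shape."""
    digest = hmac.new(key, ssn.encode("utf-8"), hashlib.sha256).hexdigest()
    number = int(digest, 16) % 10**9  # reduce the hash to nine digits
    digits = f"{number:09d}"
    return f"{digits[:3]}-{digits[3:5]}-{digits[5:]}"


def mask_record(record: dict) -> dict:
    """Copy a record, replacing the assumed sensitive fields 'name' and 'ssn'."""
    masked = dict(record)
    masked["name"] = "user_" + pseudonymize(record["name"])
    masked["ssn"] = fake_ssn(record["ssn"])
    return masked


if __name__ == "__main__":
    print(mask_record({"id": 1, "name": "Jane Doe", "ssn": "123-45-6789"}))
```

Because the same input always yields the same token, joins between masked tables still line up in a test environment. Keyed hashing is pseudonymization rather than full anonymization, so the key needs the same protection as the data it masks.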

Security Protocol for Data Protection During Migration

A comprehensive security protocol is crucial for protecting sensitive data during migration. This protocol should incorporate encryption, access control, and other security measures to mitigate the risks.

- Encryption Strategy:

  - Encryption in Transit: Use Transport Layer Security (TLS), version 1.2 or later, to encrypt all data transmitted over the network. The older Secure Sockets Layer (SSL) protocols are deprecated and should be disabled. Encryption in transit prevents eavesdropping and protects data confidentiality during transfer. Consider using VPNs for additional security, especially when migrating data across public networks.

  - Encryption at Rest: Implement database-level encryption or full disk encryption to protect data stored on the source and target systems. Use strong encryption algorithms like AES-256 and manage encryption keys securely using a key management system (KMS).

  - Example: Consider a financial institution migrating customer financial data. They could employ TLS 1.3 for secure data transfer and AES-256 encryption for the database at rest.

- Access Control Strategy:

  - Role-Based Access Control (RBAC): Implement RBAC to grant users access to data based on their roles and responsibilities. Define clear roles and assign appropriate permissions to each role. Regularly review and update role assignments.

  - Multi-Factor Authentication (MFA): Enforce MFA for all users accessing the migration environment. This adds an extra layer of security by requiring users to provide multiple forms of authentication, such as a password and a one-time code from a mobile device.

  - Least Privilege Principle: Grant users only the minimum necessary privileges to perform their tasks. Avoid granting excessive permissions that could expose data to unnecessary risks.

  - Example: During the migration, only the migration team should have access to the source and target databases. Within the team, different roles (e.g., database administrator, data engineer) should have specific permissions tailored to their tasks.

- Data Masking and Anonymization:

  - Data Masking: Implement data masking techniques to replace sensitive data with realistic but non-sensitive values during testing and development. This protects sensitive data from exposure while still allowing for functional testing.

  - Data Anonymization: Consider anonymizing data whenever possible, removing or generalizing identifying information. This is especially useful for data used for analytics and research.

  - Example: A healthcare provider migrating patient data might mask patient names, addresses, and social security numbers during testing, replacing them with fictitious but structurally valid data. They could also anonymize data for research purposes by removing direct identifiers.

- Monitoring and Auditing:

  - Real-Time Monitoring: Implement real-time monitoring of all activities during the migration process. Monitor network traffic, system logs, and database activity for any suspicious behavior or security breaches.

  - Auditing: Enable detailed auditing of all data access, data modifications, and security events. Regularly review audit logs to identify any unauthorized access or policy violations.

  - Alerting: Configure alerts to notify security personnel of any unusual activity or potential security threats.

  - Example: Security Information and Event Management (SIEM) systems can be used to collect and analyze logs from various sources, providing real-time insights into security events and enabling proactive threat detection.

- Secure Communication Channels:

  - VPNs: Use Virtual Private Networks (VPNs) to establish secure and encrypted connections between the source and target systems, especially when migrating data across public networks.

  - Dedicated Network Segments: Isolate the migration environment from the rest of the network to reduce the attack surface and prevent unauthorized access.

  - Example: A company might establish a dedicated VPN tunnel between its on-premises data center and its cloud provider during the migration, ensuring all data traffic is encrypted and secure.
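The access control strategy in this protocol (RBAC, MFA, and least privilege) can be sketched as a deny-by-default permission check. This is an illustration only: the role names, the permission strings, and the `User` and `is_allowed` names are hypothetical, and a real deployment would rely on the database engine or an identity provider rather than an in-process table.

```python
from dataclasses import dataclass, field

# Hypothetical roles and permissions for a migration team.
ROLE_PERMISSIONS = {
    "database_administrator": {"source:read", "target:read", "target:write", "target:ddl"},
    "data_engineer": {"source:read", "target:write"},
    "auditor": {"audit_log:read"},
}


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)
    mfa_verified: bool = False


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: require MFA and an explicit grant through one of the user's roles."""
    if not user.mfa_verified:
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


if __name__ == "__main__":
    engineer = User("dana", roles={"data_engineer"}, mfa_verified=True)
    print(is_allowed(engineer, "target:write"))  # granted by the data_engineer role
    print(is_allowed(engineer, "target:ddl"))    # not granted, so denied
```

Unknown roles and unlisted permissions both fall through to a denial, which is how least privilege is expressed in this sketch.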

Testing and Validation

The rigorous testing and validation of a migrated database are paramount to ensuring data integrity, application functionality, and business continuity. A comprehensive testing strategy minimizes the risk of data loss, corruption, or unexpected application behavior, ultimately safeguarding the organization’s investment and reputation. This phase systematically verifies the accuracy, completeness, and consistency of the data after the migration process.

Best Practices for Data Integrity Validation

Several best practices should be implemented to validate data integrity post-migration. These methods are crucial for detecting and rectifying any discrepancies that may have arisen during the transfer.

- Data Comparison: Utilize automated data comparison tools to compare data sets between the source and target databases. These tools should support various comparison techniques, including row-by-row, column-by-column, and checksum-based comparisons. The goal is to identify any deviations in data values, data types, or structural integrity.

- Checksum Verification: Implement checksum calculations (e.g., MD5, SHA-256) on critical data elements or entire tables before and after migration. This allows for the quick detection of data corruption during the transfer. Any mismatch in checksum values indicates a potential data integrity issue.

- Sampling and Auditing: Randomly sample data from the target database and compare it against the source database. This process should include auditing the migrated data against business rules and constraints to ensure data quality. Regular audits provide a continuous monitoring mechanism to detect data anomalies.

- Referential Integrity Checks: Verify referential integrity constraints (foreign keys) to ensure data relationships remain consistent. This involves checking that all foreign key values in the target database correctly reference existing primary key values in related tables.

- Business Rule Validation: Validate that all business rules, data validation rules, and constraints are correctly implemented and enforced in the target database. This ensures that the migrated data conforms to the required data quality standards and business requirements.

- Automated Testing Frameworks: Employ automated testing frameworks to execute a series of tests systematically. This includes creating test scripts for data validation, functional testing, and performance testing. Automation reduces manual effort and improves the efficiency of the testing process.
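The checksum verification practice above can be sketched with Python's standard library. The example uses in-memory SQLite databases as stand-ins for the source and target, and the table name, columns, and the helpers `table_checksum` and `make_db` are hypothetical. Hashing `repr(row)` only works when both sides return identical Python types; a migration between different engines would need to normalize values (dates, decimals, encodings) before hashing.

```python
import hashlib
import sqlite3


def table_checksum(conn, table, key_column):
    """Return (row_count, SHA-256 hex digest) over all rows, read in a stable key order."""
    digest = hashlib.sha256()
    # Identifiers are interpolated into the SQL text, so they must come from
    # trusted configuration and never from user input.
    cursor = conn.execute(f'SELECT * FROM "{table}" ORDER BY "{key_column}"')
    row_count = 0
    for row in cursor:
        digest.update(repr(row).encode("utf-8"))
        row_count += 1
    return row_count, digest.hexdigest()


def make_db(rows):
    """Build a small in-memory database standing in for a source or target system."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, balance REAL)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    return conn


if __name__ == "__main__":
    source = make_db([(1, "Ada", 10.5), (2, "Grace", 20.0)])
    target = make_db([(1, "Ada", 10.5), (2, "Grace", 20.0)])
    matches = table_checksum(source, "customers", "id") == table_checksum(target, "customers", "id")
    print("tables match" if matches else "MISMATCH: investigate before cutover")
```

Comparing the row count alongside the digest makes it quicker to tell a missing row from a changed value. For very large tables, the same idea is usually applied per key range so that a mismatch can be narrowed down without rescanning everything.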

Testing Plan

A well-defined testing plan is essential for the successful validation of a migrated database. This plan outlines the different testing phases, their objectives, and the expected outcomes. The following details a testing plan encompassing unit tests, integration tests, and user acceptance testing (UAT).

- Unit Tests: These tests focus on verifying the functionality of individual database objects, such as stored procedures, functions, triggers, and views. Each object should be tested in isolation to ensure it performs its intended task correctly. The focus is on validating the behavior of the smallest units of code.

- Integration Tests: These tests assess the interaction between different database objects and the application’s interaction with the database. They ensure that the various components of the system work together seamlessly. This involves testing the interfaces between different database components and application modules.

- User Acceptance Testing (UAT): This is the final testing phase, where end-users validate the migrated database to ensure it meets their business requirements. Users perform real-world tasks using the migrated data to verify the functionality and usability of the system. This phase provides the final sign-off before the system goes live.

Testing Scenarios and Expected Outcomes

The following table outlines different testing scenarios and their expected outcomes, providing a structured approach to database migration validation.

| Testing Scenario | Test Objective | Test Data | Expected Outcome |
|---|---|---|---|
| Data Type Validation | Verify that data types are correctly mapped and preserved during migration. | Test data with various data types (e.g., integers, strings, dates, decimals) | All data types should be accurately represented in the target database, with no data loss or type conversion errors. |
| Data Integrity Checks | Ensure that referential integrity and other database constraints are enforced. | Test data with relationships between tables, including primary keys, foreign keys, and constraints. | All constraints should be correctly enforced, and relationships between tables should be maintained. No orphaned records or data inconsistencies should exist. |
| Stored Procedure Validation | Validate the functionality of stored procedures, functions, and triggers. | Test data that triggers stored procedures with different input parameters. | Stored procedures should execute correctly, return the expected results, and update data as intended. No errors or unexpected behavior should occur. |
| Performance Testing | Measure the performance of the migrated database under various workloads. | Simulated user loads and query executions on a representative dataset. | Database queries should execute within acceptable timeframes. The system should handle the expected user load without performance degradation. Performance metrics should meet or exceed pre-migration benchmarks. |
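The "Data Integrity Checks" scenario expects no orphaned records. One way to test for that is an anti-join from the child table to its parent, sketched below against an in-memory SQLite database. The `customers` and `orders` tables and the `find_orphans` and `demo_connection` helpers are hypothetical, and the tables are created without foreign key constraints so that an orphan can exist for the demonstration.

```python
import sqlite3


def find_orphans(conn, child, fk_column, parent, pk_column):
    """Return child foreign key values that have no matching parent row."""
    # Identifiers are interpolated, so they must come from trusted configuration.
    query = (
        f'SELECT c."{fk_column}" FROM "{child}" AS c '
        f'LEFT JOIN "{parent}" AS p ON c."{fk_column}" = p."{pk_column}" '
        f'WHERE c."{fk_column}" IS NOT NULL AND p."{pk_column}" IS NULL'
    )
    return [row[0] for row in conn.execute(query)]


def demo_connection():
    """Create sample data in which order 2 points at a customer that does not exist."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER)")
    conn.execute("INSERT INTO customers VALUES (1, 'Ada')")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 1), (2, 99)])
    return conn


if __name__ == "__main__":
    orphans = find_orphans(demo_connection(), "orders", "customer_id", "customers", "id")
    print(f"orphaned customer_id values: {orphans}")
```

An empty result corresponds to the expected outcome in the table. Any returned values identify rows to repair or re-migrate before sign-off.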

Resource Allocation and Budgeting

Accurate resource allocation and meticulous budgeting are critical for the successful migration of large databases. Underestimation in either area can lead to significant project delays, cost overruns, and potentially, project failure. A well-defined resource plan and budget provide a roadmap for the migration process, ensuring sufficient personnel, infrastructure, and financial resources are available throughout the project lifecycle.

Importance of Accurate Resource Allocation

Effective resource allocation directly impacts the efficiency and effectiveness of the migration. Failure to accurately assess and allocate resources can create bottlenecks, leading to delays and increased costs. This includes personnel with specialized skills, adequate infrastructure to support data transfer and transformation, and sufficient time for testing and validation.

- Personnel: A skilled team is essential. This includes database administrators (DBAs), migration specialists, network engineers, security experts, and project managers. The size of the team depends on the complexity and scale of the migration. For example, a migration involving petabytes of data and multiple database types will require a larger and more specialized team than a migration of a smaller, single-database system. The team’s experience level and skill sets also impact resource requirements; a team lacking experience in the target database platform or migration tools will likely require more time and resources.

- Infrastructure: Sufficient infrastructure is crucial for data transfer, processing, and storage. This includes servers for the source and target databases, network bandwidth for data transfer, and storage capacity to accommodate the data. The infrastructure requirements depend on factors such as the volume of data, the data transfer method, and the performance requirements. For example, migrating data across geographically dispersed data centers necessitates robust network connectivity and potentially, the use of data replication technologies.

- Time: Realistic timelines are essential. The migration process involves various stages, including planning, preparation, data transfer, testing, and validation. Each stage requires time for execution and potential troubleshooting. Underestimating the time required for any stage can lead to project delays. For instance, the time needed for data validation can be significantly underestimated, leading to delays if data integrity issues are discovered late in the process.

- Tools and Software: Migration tools and software are often required to facilitate the migration process. The choice of tools depends on factors such as the source and target database platforms, the complexity of the data, and the desired level of automation. Licensing costs for these tools should be factored into the budget.

Budget Breakdown for a Large Database Migration Project

A comprehensive budget breakdown is crucial for managing costs effectively. This should encompass all direct and indirect costs associated with the migration project. The following is a sample budget breakdown for a hypothetical large database migration project. The specific percentages and costs will vary depending on the project’s scope and complexity. This example uses a total budget of $1,000,000 for illustrative purposes.

| Cost Category | Description | Estimated Percentage | Estimated Cost |
|---|---|---|---|
| Personnel | Salaries, benefits, and consultant fees for the migration team (DBAs, migration specialists, project managers, etc.) | 30% | $300,000 |
| Infrastructure | Costs for new servers, storage, and network equipment, or cloud infrastructure services. | 25% | $250,000 |
| Software and Tools | Licenses for migration tools, database management software, and other relevant software. | 10% | $100,000 |
| Data Transfer | Costs associated with data transfer methods, such as network bandwidth or data replication services. | 5% | $50,000 |
| Testing and Validation | Costs for testing environments, data validation tools, and quality assurance efforts. | 10% | $100,000 |
| Training | Training for the migration team on the new database platform and migration tools. | 5% | $50,000 |
| Contingency | A buffer for unexpected costs and delays. This is critical for managing risk. | 15% | $150,000 |
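The arithmetic behind the table can be reproduced with a short sketch, which also makes it easy to rerun the split for a different total. The percentages are taken from the table above; the `allocate` function name is a hypothetical choice, and `Decimal` is used so that currency amounts are not subject to binary floating-point rounding.

```python
from decimal import Decimal

# Shares copied from the sample budget table.
BUDGET_SHARES = {
    "Personnel": Decimal("0.30"),
    "Infrastructure": Decimal("0.25"),
    "Software and Tools": Decimal("0.10"),
    "Data Transfer": Decimal("0.05"),
    "Testing and Validation": Decimal("0.10"),
    "Training": Decimal("0.05"),
    "Contingency": Decimal("0.15"),
}


def allocate(total, shares=BUDGET_SHARES):
    """Split a total budget by category, refusing shares that do not sum to 100%."""
    if sum(shares.values()) != Decimal("1"):
        raise ValueError("budget shares must sum to 100%")
    return {category: total * share for category, share in shares.items()}


if __name__ == "__main__":
    for category, amount in allocate(Decimal("1000000")).items():
        print(f"{category:<24} ${amount:,.0f}")
```

The sum check catches a common spreadsheet error, where a category is added or changed and the shares no longer total 100%.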

Impact of Unexpected Costs and Delays on the Overall Budget

Unexpected costs and delays can severely impact the project budget, potentially leading to overruns and compromising the project’s success. These issues can arise from various sources, including unforeseen technical challenges, data quality issues, and scope creep.

- Personnel Costs: Delays extend the project timeline, increasing personnel costs. If the migration team works longer than initially planned, this leads to higher salary expenses and potential overtime payments. The need for additional consultants to address unexpected issues further exacerbates these costs.

- Infrastructure Costs: Unexpected performance bottlenecks might necessitate the purchase of additional hardware or increased cloud resource consumption. This adds to the infrastructure costs and can also extend the duration of the project, further increasing personnel costs.

- Software and Tool Costs: If the project requires additional software licenses or the extension of existing licenses, these costs will increase. This is especially true if the migration project requires new or specialized tools to resolve unexpected problems.

- Contingency Fund Depletion: Unexpected costs will draw upon the contingency fund, potentially depleting it. If the contingency fund is exhausted, the project may require additional funding, which can be difficult to obtain, and may even lead to the project being canceled.

- Impact on Business Operations: Delays can disrupt business operations, leading to lost revenue and decreased productivity. The longer the downtime, the greater the impact on the business. For example, if a retail company’s database migration causes an extended outage during a peak shopping season, it can result in significant revenue losses.

Skill Gap and Expertise

Successfully migrating large databases necessitates a specialized skill set and a deep understanding of various technologies and methodologies. The absence of these skills, or the inability to effectively apply them, constitutes a significant risk to the migration project, potentially leading to delays, cost overruns, data loss, and operational disruptions. This section delves into the crucial skills required, the challenges of finding and retaining skilled professionals, and the resources available for skill development.

Essential Skills and Expertise for Database Migration

The complexity of large database migrations demands a multidisciplinary approach. Professionals involved must possess a combination of technical proficiency and project management acumen.

- Database Administration: Expertise in managing and administering the source and target database systems is paramount. This includes a thorough understanding of database internals, performance tuning, backup and recovery strategies, and security configurations. For example, a deep understanding of Oracle database internals is essential when migrating from Oracle to PostgreSQL, or vice versa.

- Database Architecture and Design: The ability to design and optimize database schemas for the target environment is crucial. This involves understanding data modeling principles, schema transformation techniques, and the impact of schema changes on application performance.

- Data Migration Tools and Techniques: Proficiency in using various data migration tools and techniques, such as ETL (Extract, Transform, Load) processes, change data capture (CDC), and replication, is essential. This includes knowledge of specific tools like AWS Database Migration Service (DMS) and Azure Database Migration Service, as well as open-source building blocks such as Apache Kafka, which is commonly paired with CDC connectors such as Debezium to stream changes between systems.

- Scripting and Automation: The ability to write scripts (e.g., SQL, Python, Bash) to automate migration tasks, such as data validation, schema creation, and data loading, significantly improves efficiency and reduces the risk of human error.

- Networking and Infrastructure: A solid understanding of networking concepts, including network connectivity, bandwidth requirements, and security protocols, is necessary to ensure smooth data transfer between the source and target environments.

- Project Management: Effective project management skills, including planning, scheduling, risk management, and communication, are critical for managing the migration project effectively. This ensures the project stays on track, within budget, and meets the defined objectives.

- Performance Tuning and Optimization: The ability to identify and resolve performance bottlenecks during and after the migration is crucial. This involves analyzing query performance, optimizing database configurations, and tuning application code.

- Security and Compliance: Expertise in implementing security best practices and ensuring compliance with relevant regulations (e.g., GDPR, HIPAA) is essential to protect sensitive data during the migration process.

Challenges of Finding and Retaining Skilled Migration Professionals

The demand for skilled database migration professionals often outstrips the supply, leading to several challenges.

- Competition for Talent: The IT industry faces intense competition for skilled professionals with expertise in database migration. This is exacerbated by the rapid evolution of database technologies and the increasing complexity of migration projects.

- Specialized Skill Sets: Database migration requires a unique combination of skills, including database administration, data modeling, scripting, and project management. Finding individuals with all these skills can be challenging.

- High Salaries and Benefits: The scarcity of skilled professionals often leads to higher salaries and benefits, increasing project costs. This can strain project budgets and make it difficult for organizations to compete for talent.

- Retention Challenges: Skilled professionals are often in high demand and may be tempted to switch jobs for better opportunities or compensation. Retaining these professionals requires competitive compensation packages, opportunities for professional development, and a positive work environment.

- Knowledge Transfer and Documentation: Even with skilled professionals, ensuring adequate knowledge transfer and documentation is crucial. This involves documenting migration processes, configurations, and troubleshooting steps to enable future maintenance and support.

Training Resources and Certifications for Database Migration

Numerous resources and certifications are available to help individuals develop the necessary skills for database migration.

- Vendor-Specific Training: Database vendors, such as Oracle, Microsoft, and AWS, offer training courses and certifications related to their database products and migration tools. These courses provide in-depth knowledge of specific technologies and best practices.

- Online Courses and Tutorials: Online learning platforms, such as Coursera, Udemy, and edX, offer a wide range of courses on database administration, data migration, and related topics. These courses provide flexible and affordable learning options.

- Professional Certifications: Obtaining professional certifications, such as Oracle Certified Professional (OCP), Microsoft Certified: Azure Database Administrator Associate, or AWS Certified Database – Specialty, can validate an individual’s skills and expertise in database migration.

- Industry Conferences and Workshops: Attending industry conferences and workshops provides opportunities to learn from experts, network with peers, and stay up-to-date on the latest trends and technologies.

- Books and Documentation: Reading books and documentation on database migration, database administration, and related topics provides a comprehensive understanding of the subject matter.

- Hands-on Experience: Gaining hands-on experience through practice projects, internships, or real-world migration projects is crucial for developing practical skills and expertise. For example, creating a test environment for a small-scale migration using a tool like AWS DMS can provide valuable experience.

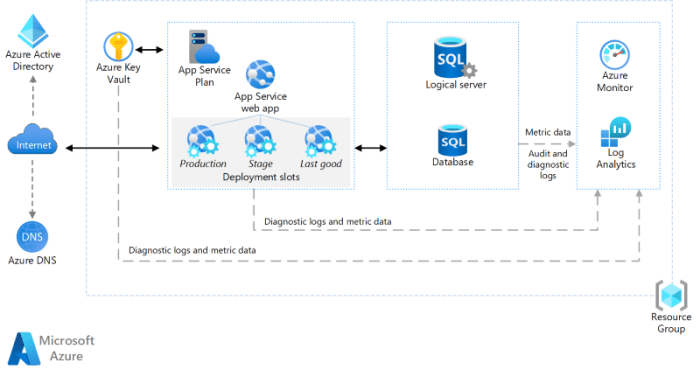

Infrastructure and Environment Considerations

The infrastructure and environment underpinning a database migration significantly influence its success. Insufficient resources or inadequately configured systems can introduce bottlenecks, extend migration timelines, and potentially jeopardize data integrity. Careful assessment of both the source and target environments, coupled with strategic resource allocation, is paramount for a seamless transition.

Impact of Infrastructure Limitations

Infrastructure limitations, encompassing network bandwidth, storage capacity, and compute resources, can profoundly impact the database migration process. These limitations can manifest in various ways, leading to performance degradation and potential failures.

- Network Bandwidth: Insufficient network bandwidth constitutes a primary bottleneck during data transfer. The rate at which data can be moved between the source and target environments is directly proportional to available bandwidth. For example, migrating a 10 TB database over a 1 Gbps network connection theoretically requires approximately 22 hours of continuous transfer (80,000 gigabits at 1 gigabit per second is 80,000 seconds). Network congestion and protocol overhead can significantly extend this timeframe, while compressing the data before transfer can shorten it. In contrast, a 10 Gbps connection would reduce the theoretical transfer time to roughly 2.2 hours. Therefore, bandwidth limitations directly affect migration duration.

- Storage Capacity: Inadequate storage capacity on the target environment can halt the migration process entirely. The target system must possess sufficient storage to accommodate the entire database, including temporary storage required during the migration. Furthermore, the target environment’s storage performance (e.g., I/O operations per second – IOPS) can influence data loading speeds. Migrating to a storage system with lower IOPS will result in slower data ingestion rates, even if sufficient storage capacity is available.

- Compute Resources: The compute resources, including CPU and memory, of both the source and target servers impact migration performance. The migration process itself, especially tasks like schema transformation and data validation, consumes significant CPU cycles and memory. Insufficient resources on either end can lead to slower processing times. Consider a scenario where a large data transformation requires intensive CPU usage. If the target server’s CPU is overloaded, the transformation process will be significantly slowed, extending the migration duration. Similarly, insufficient memory can lead to excessive paging, further degrading performance.
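The bandwidth estimate in the list above (10 TB over a 1 Gbps link) follows from a simple calculation, sketched here so it can be repeated for other sizes and link speeds. The `transfer_hours` function and its `efficiency` parameter are hypothetical; the 0.7 used in the demo is an assumed figure for illustration, not a measured value, and terabytes are taken as decimal (10^12 bytes).

```python
def transfer_hours(data_terabytes, link_gbps, efficiency=1.0):
    """Estimate transfer time in hours for a given data size and link speed.

    efficiency is the fraction of nominal bandwidth actually usable
    (1.0 is the theoretical best case).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    bits = data_terabytes * 1e12 * 8              # decimal terabytes to bits
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    print(f"10 TB at 1 Gbps:  {transfer_hours(10, 1):.1f} h")
    print(f"10 TB at 10 Gbps: {transfer_hours(10, 10):.1f} h")
    # Assumed 70% usable bandwidth, purely for illustration.
    print(f"10 TB at 1 Gbps, 70% efficiency: {transfer_hours(10, 1, 0.7):.1f} h")
```

Measuring sustained throughput on the actual link with a sample transfer gives a more reliable efficiency figure than any assumed value.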

Checklist for Evaluating Target Environment Suitability

Evaluating the target environment’s suitability is crucial to ensure that it can support the migrated database and meet the performance and availability requirements. A comprehensive checklist should cover various aspects of the infrastructure and environment.

- Hardware Specifications: Verify that the target server’s CPU, memory, and storage meet or exceed the requirements of the database. Consider factors such as CPU cores, RAM capacity, and storage type (e.g., SSD, HDD, NVMe). Evaluate the target server’s ability to handle the expected workload, including peak loads.

- Network Connectivity: Assess the network bandwidth between the source and target environments. Determine if the network connection is sufficient for the expected data transfer rates. Consider network latency and any potential network bottlenecks.

- Storage Configuration: Analyze the storage capacity, performance (IOPS, throughput), and redundancy of the target storage system. Verify that the storage system can accommodate the database size and future growth. Determine the appropriate storage configuration (e.g., RAID level, storage tiering) to meet performance and cost requirements.

- Operating System and Database Software: Ensure that the target environment supports the required operating system and database software versions. Check for compatibility issues and any necessary patches or updates. Confirm that the target environment is configured according to the vendor’s best practices.

- Security and Compliance: Evaluate the security measures in place, including firewalls, access controls, and encryption. Verify that the target environment complies with all relevant security and compliance requirements. Assess the data protection policies and procedures.

- Backup and Recovery: Review the backup and recovery strategy for the target environment. Verify that the backup and recovery procedures are in place and tested. Ensure that the recovery time objective (RTO) and recovery point objective (RPO) meet the business requirements.

- Monitoring and Alerting: Confirm that the target environment includes monitoring and alerting systems. Implement monitoring for key performance indicators (KPIs), such as CPU utilization, memory usage, and storage I/O. Configure alerts to notify administrators of potential issues.
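The storage item in this checklist (database size, temporary migration space, and future growth) can be turned into a rough sizing calculation. The sketch below is an illustration under stated assumptions: the compound growth model, the 20% temporary-space default, and the function names are all hypothetical and should be replaced with figures from the actual environment.

```python
def required_storage_gb(db_size_gb, annual_growth_rate, years, temp_overhead=0.2):
    """Estimate target storage: projected size after growth plus temporary migration space.

    temp_overhead is the assumed fraction of the current size needed for
    staging files, logs, and index builds during the migration.
    """
    projected = db_size_gb * (1 + annual_growth_rate) ** years
    return projected + db_size_gb * temp_overhead


def has_capacity(available_gb, db_size_gb, annual_growth_rate, years, temp_overhead=0.2):
    """Return True when the target volume covers the estimated requirement."""
    return available_gb >= required_storage_gb(
        db_size_gb, annual_growth_rate, years, temp_overhead
    )


if __name__ == "__main__":
    need = required_storage_gb(db_size_gb=1000, annual_growth_rate=0.5, years=1)
    print(f"estimated requirement: {need:.0f} GB")
    print("sufficient" if has_capacity(2000, 1000, 0.5, 1) else "insufficient")
```

Capacity is only one part of the storage check. IOPS and throughput still need to be measured under load, as the checklist notes.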

Steps to Prepare the Target Environment

Preparing the target environment involves a series of essential steps to ensure it is ready to receive the migrated database. These steps involve configuration, optimization, and validation.

1. Infrastructure Provisioning: Procure and configure the necessary hardware and software components for the target environment. This includes servers, storage, network devices, and database software. Consider utilizing infrastructure-as-code (IaC) tools to automate provisioning and ensure consistency.

2. Network Configuration: Configure the network settings, including IP addresses, DNS, and firewall rules. Establish network connectivity between the source and target environments. Ensure sufficient bandwidth and low latency for data transfer.

3. Storage Configuration: Configure the storage system, including creating storage volumes, setting up RAID levels, and optimizing storage performance. Ensure sufficient storage capacity to accommodate the migrated database and future growth. Test the storage system’s performance under load.

4. Database Software Installation and Configuration: Install and configure the database software on the target environment. Configure database parameters, such as memory allocation, buffer pools, and connection limits. Follow the vendor’s best practices for database configuration.

5. Security Hardening: Implement security measures, including access controls, encryption, and auditing. Configure firewalls to restrict access to the database server. Regularly update security patches and monitor for security vulnerabilities.

6. Backup and Recovery Setup: Configure the backup and recovery procedures for the target database. Test the backup and recovery processes to ensure data integrity and recoverability. Establish a regular backup schedule and offsite backup storage.

7. Performance Tuning: Optimize the target environment’s performance. Fine-tune database parameters, configure query optimization, and index data appropriately. Conduct performance testing to identify and address any performance bottlenecks.

8. Monitoring and Alerting Implementation: Implement monitoring and alerting systems to track key performance indicators (KPIs). Configure alerts to notify administrators of potential issues, such as high CPU utilization, memory usage, or storage I/O. Regularly review and refine monitoring configurations.
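Step 8 can be illustrated with a minimal threshold check over a snapshot of metrics. The metric names and threshold values below are hypothetical placeholders. In practice the metrics would come from a monitoring agent and the limits from baseline measurements of the workload.

```python
# Hypothetical alert thresholds, expressed as percentages.
THRESHOLDS = {
    "cpu_percent": 85.0,
    "memory_percent": 90.0,
    "storage_io_wait_percent": 20.0,
}


def evaluate(metrics, thresholds=THRESHOLDS):
    """Return one alert message per metric that is missing or above its threshold."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is None:
            # A missing metric is itself worth an alert: monitoring may be broken.
            alerts.append(f"{name}: no data")
        elif value > limit:
            alerts.append(f"{name}: {value} exceeds {limit}")
    return alerts


if __name__ == "__main__":
    snapshot = {"cpu_percent": 50, "memory_percent": 95, "storage_io_wait_percent": 5}
    for alert in evaluate(snapshot):
        print("ALERT", alert)
```

Treating absent data as an alert condition avoids the silent failure where a stopped collector looks the same as a healthy system.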

Post-Migration Monitoring and Maintenance

Post-migration activities are crucial for ensuring the long-term success of a database migration. Monitoring and maintenance are ongoing processes designed to proactively address potential issues, optimize performance, and maintain data integrity. This ensures that the migrated database operates efficiently and effectively, meeting the needs of the business.

Importance of Post-Migration Monitoring

Effective post-migration monitoring is essential for several reasons. It serves as an early warning system, detecting anomalies and potential problems before they escalate into significant issues. Continuous monitoring provides insights into system performance, identifies areas for optimization, and ensures that the database is meeting service-level agreements (SLAs). Regular monitoring also helps to validate the success of the migration process itself, confirming that data integrity has been maintained and that the migrated system functions as expected.

Database Maintenance Plan

A comprehensive maintenance plan should be implemented immediately following the database migration. This plan should encompass various tasks to ensure the database’s ongoing health, performance, and security.

- Regular Backups: Establish a robust backup strategy, including full and incremental backups, to protect against data loss. Backups should be tested regularly to ensure recoverability. For example, a financial institution might implement daily full backups and hourly incremental backups to minimize the risk of data loss in case of a disaster.

- Index Optimization: Regularly analyze and optimize database indexes to maintain query performance. This involves identifying and addressing fragmented indexes, as well as creating new indexes to support frequently executed queries. A retail company might analyze query logs to identify slow-running queries and create indexes on relevant columns to improve performance.

- Database Statistics Updates: Keep database statistics up to date so that the query optimizer has accurate information about data distribution and can generate efficient execution plans. Statistics are often stale immediately after a bulk load, so refresh them once the migration completes and automate later updates through scheduled jobs.

- Security Audits: Conduct regular security audits to identify and address potential vulnerabilities. This includes reviewing user permissions, monitoring access logs, and implementing security patches. A healthcare provider might conduct quarterly security audits to ensure compliance with HIPAA regulations.

- Performance Tuning: Continuously monitor and tune database performance parameters, such as memory allocation, buffer pool size, and connection limits. This involves analyzing performance metrics and adjusting settings to optimize resource utilization.

- Capacity Planning: Regularly assess the database’s capacity requirements and plan for future growth. This involves monitoring storage utilization, CPU usage, and network bandwidth to anticipate potential bottlenecks and ensure sufficient resources are available. A growing e-commerce platform might forecast database storage needs based on historical sales data and projected growth rates.
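
The capacity planning item above can be illustrated with a small projection. This is a sketch that assumes storage grows at a constant compound monthly rate, which is a simplification; the function name and the example figures are hypothetical.

```python
import math


def months_until_full(used_gb: float, capacity_gb: float, monthly_growth: float) -> int:
    """Whole months until used storage first reaches capacity.

    Assumes compound growth at a fixed monthly rate (e.g. 0.05 for 5%).
    """
    if used_gb <= 0 or capacity_gb <= 0:
        raise ValueError("sizes must be positive")
    if monthly_growth <= 0:
        raise ValueError("monthly_growth must be positive")
    if used_gb >= capacity_gb:
        return 0
    # Solve used * (1 + g)^n >= capacity for the smallest whole n.
    return math.ceil(math.log(capacity_gb / used_gb) / math.log(1 + monthly_growth))


if __name__ == "__main__":
    # Hypothetical figures: 500 GB used, 1000 GB provisioned, 5% growth per month.
    print(months_until_full(500, 1000, 0.05))  # 15
```

Re-running the projection each month with the observed growth rate, rather than a fixed assumption, keeps the estimate honest as usage patterns change.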

Key Performance Indicators (KPIs) for Monitoring

Monitoring a migrated database involves tracking several KPIs to assess its performance, health, and stability. These KPIs should be regularly reviewed and analyzed to identify trends, detect anomalies, and proactively address potential issues.

- Query Response Time: Measures the time it takes for queries to execute. High response times can indicate performance bottlenecks, such as missing or fragmented indexes or inefficient query plans. The average over a sampling window is:

Average Query Response Time = Total Query Execution Time / Number of Queries

- Transactions per Second (TPS): Indicates the number of transactions processed by the database per second. A drop in TPS can indicate performance issues or application problems.

- CPU Utilization: Monitors the percentage of CPU resources used by the database server. High CPU utilization can indicate resource contention or inefficient query execution.

- Memory Usage: Tracks the amount of memory used by the database server. Insufficient memory can lead to performance degradation.

- Disk I/O: Measures the rate of data transfer between the database server and the storage devices. Sustained high disk I/O can indicate a storage bottleneck, too little memory for caching, or inefficient query execution.

- Database Connection Count: Monitors the number of active database connections. Excessive connections can indicate resource exhaustion.

- Error Rates: Tracks the frequency of errors, such as query errors or connection errors. An increase in error rates can indicate application or database issues.

- Backup and Restore Time: Measures the time it takes to perform backups and restores. These times are critical for disaster recovery planning.
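
Two of the KPIs above, average query response time and transactions per second, reduce to simple arithmetic over a sampling window. The sketch below assumes the durations and commit counts have already been collected by some monitoring tool; the function names and sample values are hypothetical.

```python
def average_response_time(durations_ms: list[float]) -> float:
    """Mean query duration: total time divided by number of queries."""
    if not durations_ms:
        raise ValueError("no queries in sample")
    return sum(durations_ms) / len(durations_ms)


def transactions_per_second(committed: int, window_seconds: float) -> float:
    """Committed transactions divided by the length of the window."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return committed / window_seconds


if __name__ == "__main__":
    print(average_response_time([12.0, 30.0, 18.0]))  # 20.0
    print(transactions_per_second(4500, 60))          # 75.0
```

An average can hide a small number of very slow queries, so it is worth tracking a high percentile of the same durations alongside it.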

Interpreting these KPIs involves establishing baseline values and monitoring for deviations. For instance, if query response times suddenly increase, it could indicate a problem with indexing, a change in data volume, or an application issue. High CPU utilization coupled with slow query response times might indicate the need for query optimization or hardware upgrades. Regularly reviewing these KPIs, along with the maintenance plan, will help ensure the long-term health and performance of the migrated database.
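
One simple way to express "monitoring for deviations" from a baseline is a standard-deviation test. This is a sketch of one possible rule, not the only reasonable one; the multiplier `k` and the sample values are assumptions chosen for illustration.

```python
import statistics


def deviates_from_baseline(baseline: list[float], current: float, k: float = 3.0) -> bool:
    """True when `current` lies more than k standard deviations from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline samples")
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A perfectly flat baseline: any change at all counts as a deviation.
        return current != mean
    return abs(current - mean) > k * stdev


if __name__ == "__main__":
    # Hypothetical response times (ms) recorded before the migration.
    baseline = [20.0, 22.0, 19.0, 21.0, 20.0, 18.0]
    print(deviates_from_baseline(baseline, 35.0))  # True
    print(deviates_from_baseline(baseline, 22.0))  # False
```

Capturing the baseline on the source system before cutover makes the first days of post-migration readings directly comparable.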

Wrap-Up

In conclusion, migrating large databases requires a holistic approach that combines technical proficiency, strategic planning, and proactive risk management. Addressing the challenges outlined here, from data compatibility and downtime to security and resource allocation, is crucial for a successful migration. By implementing robust testing, rigorous monitoring, and a well-defined maintenance plan, organizations can ensure data integrity, optimize performance, and realize the full benefits of their migrated databases.

The ability to adapt and respond to unforeseen challenges is paramount, underscoring the importance of a flexible and informed strategy.

Frequently Asked Questions

What is the biggest risk in migrating a large database?

The biggest risk is prolonged downtime, which can significantly impact business operations, leading to lost revenue and reputational damage. Thorough planning and testing are essential to mitigate this risk.

How can I ensure data integrity during a migration?

Data integrity is maintained through rigorous testing, validation, and the use of data transformation processes that preserve data consistency and accuracy. Comprehensive testing plans, including unit, integration, and user acceptance testing (UAT), are critical.
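
As one illustration of validation, the sketch below compares a row count and a content digest for the same table on source and target. It uses two in-memory SQLite databases purely so the example is self-contained; the `customers` table and its rows are hypothetical, and hashing `repr(row)` is an assumption that only holds when both sides return values as the same Python types.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> tuple[int, str]:
    """Return (row count, SHA-256 digest) over all rows, ordered by `key`.

    `table` and `key` are interpolated into SQL, so they must come from a
    trusted list of identifiers, never from user input.
    """
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f'SELECT * FROM "{table}" ORDER BY "{key}"'):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def make_db(rows):
    """Build a throwaway in-memory database holding the given customer rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    return conn


if __name__ == "__main__":
    source = make_db([(1, "Ada"), (2, "Grace")])
    target = make_db([(2, "Grace"), (1, "Ada")])  # same rows, different load order
    same = table_fingerprint(source, "customers", "id") == table_fingerprint(target, "customers", "id")
    print(same)  # True
```

When source and target are different database engines, values such as decimals, timestamps, and trailing whitespace may need to be normalized before hashing, or the digests will differ even though the data is equivalent.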

What are the primary considerations for choosing a data transfer method?

The primary considerations include the size of the database, the acceptable downtime, the available network bandwidth, and the need for real-time data synchronization. Methods such as bulk loading, change data capture (CDC), and online migration are chosen based on these factors.
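
A rough transfer-time estimate shows how database size and bandwidth interact with the acceptable downtime. The sketch below is back-of-the-envelope arithmetic only; the `efficiency` factor (the fraction of nominal bandwidth actually achieved) and the example figures are assumptions.

```python
def transfer_hours(size_gb: float, bandwidth_mbps: float, efficiency: float = 0.7) -> float:
    """Estimated hours to copy size_gb over a link of bandwidth_mbps (megabits/s).

    `efficiency` is an assumed fraction of nominal bandwidth actually achieved.
    """
    if size_gb < 0 or bandwidth_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, bandwidth, or efficiency")
    size_megabits = size_gb * 8 * 1000  # decimal units: 1 GB = 8000 megabits
    seconds = size_megabits / (bandwidth_mbps * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical case: 2 TB database over a 1 Gbps link at 70% efficiency.
    print(round(transfer_hours(2000, 1000, 0.7), 2))  # 6.35
```

If the estimate exceeds the acceptable downtime window, that points toward a method that keeps the source online during the copy, such as CDC or online migration, rather than a single bulk load.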

How do I estimate the cost of a database migration project?

Cost estimation involves factoring in personnel costs, infrastructure expenses (hardware, software, cloud services), data transfer costs, and the cost of potential downtime. A detailed budget breakdown, including contingency funds, is necessary.
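
The budget breakdown can be sketched as a sum of line items plus a contingency reserve. The category names, amounts, and the 15% default rate below are hypothetical placeholders, not benchmarks.

```python
def migration_budget(line_items: dict[str, float], contingency_rate: float = 0.15) -> dict[str, float]:
    """Sum the line items and add a contingency reserve on top of the subtotal."""
    if contingency_rate < 0:
        raise ValueError("contingency_rate must not be negative")
    subtotal = sum(line_items.values())
    contingency = subtotal * contingency_rate
    return {
        "subtotal": subtotal,
        "contingency": contingency,
        "total": subtotal + contingency,
    }


if __name__ == "__main__":
    # Hypothetical line items for illustration only.
    items = {
        "personnel": 120_000.0,
        "infrastructure": 45_000.0,
        "data_transfer": 5_000.0,
        "expected_downtime": 30_000.0,
    }
    for name, amount in migration_budget(items).items():
        print(f"{name}: {amount:,.2f}")
```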

What skills are essential for a successful database migration?

Essential skills include database administration, data modeling, data transformation, scripting, networking, and project management. Expertise in the source and target database systems is also critical.