Migrating stateful applications is a complex undertaking that demands careful planning and execution. Unlike their stateless counterparts, these applications maintain persistent data and session information, which introduces challenges absent from simpler migrations. Understanding these challenges is essential for organizations seeking to modernize their infrastructure, improve scalability, or adopt cloud-based services. The following analysis examines the difficulties encountered when migrating stateful applications and offers a structured approach to navigating them.

This exploration will systematically examine key aspects of stateful application migration, from the fundamental characteristics of these applications and the objectives driving migration efforts, to the intricate technical considerations surrounding data integrity, downtime management, infrastructure compatibility, and security. Furthermore, the analysis will encompass the critical role of testing, the required skillsets, and the financial implications associated with these complex projects.

Taken together, these areas give a comprehensive picture of what stateful application migration involves.

Understanding Stateful Applications

Stateful applications, unlike their stateless counterparts, maintain a persistent record of past interactions, or ‘state’. This characteristic fundamentally alters their architecture and operational considerations, particularly when it comes to migration. Understanding these core attributes is crucial for effectively addressing the challenges involved.

Core Characteristics of Stateful Applications

Stateful applications are defined by their ability to retain and utilize information about previous interactions. This state can encompass various forms, from simple session data to complex transactional records. The state is critical for the application’s functionality and can be stored in-memory, on disk, or in a dedicated database. The management of this state significantly impacts the application’s behavior, performance, and scalability.

Examples of Common Stateful Applications

Several application types inherently rely on maintaining state. These applications often necessitate preserving information across multiple requests or sessions to function correctly.

- Databases: Database management systems (DBMS) are fundamentally stateful. They maintain persistent storage of data, transaction logs, and indexing information. The state is critical for data integrity, consistency, and efficient query processing. For instance, a relational database like PostgreSQL stores its data on disk, along with metadata about tables, indexes, and user permissions. This persistent state enables the database to recover from failures and maintain data consistency even after restarts.

- Message Queues: Systems like Apache Kafka and RabbitMQ are stateful because they store messages, manage message offsets, and track consumer subscriptions. Kafka, for example, maintains a log of messages for a defined retention period, allowing consumers to replay messages from a specific offset. This persistent state is crucial for ensuring message delivery, fault tolerance, and scalability.

- Caching Systems: Caching solutions, such as Redis and Memcached, are stateful as they store cached data in memory. This state enables faster retrieval of frequently accessed information, improving application performance. Redis, for example, allows persistence of cached data to disk, adding a layer of durability to the caching system’s state.

- Session-based Web Applications: Many web applications use sessions to maintain user context. This state, stored on the server-side, tracks user login status, shopping cart contents, and other personalized data. Consider an e-commerce website; the session state stores the user’s current shopping cart items, allowing the user to browse different product pages without losing the selected items.

- Distributed File Systems: Systems like Hadoop Distributed File System (HDFS) maintain state about file metadata, block locations, and cluster health. This state is essential for data consistency, fault tolerance, and efficient data access across the cluster.

State Management within Stateful Applications

The methods for managing state vary widely, depending on the application’s architecture and requirements. The choice of state management strategy impacts the application’s performance, scalability, and resilience.

- In-Memory State: Some applications store state directly in memory, which offers fast access but leaves the state vulnerable to failures. This approach is suitable where data loss is acceptable or the data can be easily rebuilt. For example, a game server might keep player session data in memory for fast access. If the server crashes, the player’s unsaved progress might be lost, although it can often be recovered from another copy of the data.

- Persistent Storage: Many stateful applications employ persistent storage mechanisms like databases, file systems, or object stores to ensure data durability. This approach provides fault tolerance and enables data recovery. A banking application, for example, would use a database to store transaction records persistently.

- Distributed State Management: In distributed systems, state is often distributed across multiple nodes for scalability and high availability. This requires techniques like replication, sharding, and consensus algorithms. For instance, a distributed database like Cassandra replicates data across multiple nodes, providing fault tolerance.

- Stateful Components: Certain components within a system are inherently stateful, managing their own internal state. For example, a load balancer maintains a connection table to direct traffic to appropriate backend servers.

- State Synchronization: Maintaining consistency across multiple instances of a stateful application requires state synchronization mechanisms. These mechanisms can include distributed locking, two-phase commit protocols, or eventual consistency models.
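The difference between in-memory and persistent state can be made concrete with a small sketch. The class names, the `sessions` table, and the `cart_after_restart` helper below are hypothetical and exist only for illustration. SQLite stands in for whatever durable store a real application would use.

```python
import json
import os
import sqlite3
import tempfile


class InMemorySessionStore:
    """Keeps session state in a dict; the state vanishes with the process."""

    def __init__(self):
        self._sessions = {}

    def put(self, session_id, cart):
        self._sessions[session_id] = list(cart)

    def get(self, session_id):
        return self._sessions.get(session_id)


class SqliteSessionStore:
    """Keeps session state in a SQLite file so it survives a restart."""

    def __init__(self, path):
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS sessions (id TEXT PRIMARY KEY, cart TEXT)"
        )

    def put(self, session_id, cart):
        with self._conn:  # commits on success, rolls back on error
            self._conn.execute(
                "INSERT OR REPLACE INTO sessions (id, cart) VALUES (?, ?)",
                (session_id, json.dumps(cart)),
            )

    def get(self, session_id):
        row = self._conn.execute(
            "SELECT cart FROM sessions WHERE id = ?", (session_id,)
        ).fetchone()
        return json.loads(row[0]) if row else None

    def close(self):
        self._conn.close()


def cart_after_restart(make_store):
    """Write a cart, discard the store, and read it back from a fresh one."""
    store = make_store()
    store.put("user-42", ["book", "lamp"])
    if hasattr(store, "close"):
        store.close()
    fresh = make_store()  # stands in for the process restarting
    try:
        return fresh.get("user-42")
    finally:
        if hasattr(fresh, "close"):
            fresh.close()


if __name__ == "__main__":
    print(cart_after_restart(InMemorySessionStore))  # None
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "sessions.db")
        print(cart_after_restart(lambda: SqliteSessionStore(path)))
```

The in-memory store returns nothing after the simulated restart, while the file-backed store returns the saved cart. A migration has to carry the second kind of state to the target environment, and decide whether the first kind can simply be dropped.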

Identifying Migration Goals and Objectives

The successful migration of stateful applications hinges on clearly defined goals and objectives. Without a precise understanding of the “why” behind the migration, the process becomes significantly more complex and prone to failure. Defining these goals early is crucial for guiding decision-making, selecting appropriate migration strategies, and measuring the overall success of the project.

Primary Reasons for Migrating Stateful Applications

Organizations pursue stateful application migration for a variety of compelling reasons, often driven by a combination of technological advancements, business needs, and cost considerations. These reasons typically fall into several key categories:

- Infrastructure Modernization: Many organizations are migrating stateful applications to take advantage of modern infrastructure platforms, such as cloud environments (AWS, Azure, GCP). This shift provides access to more scalable, resilient, and cost-effective resources compared to on-premises infrastructure. For example, a financial institution might migrate its core banking system to the cloud to improve disaster recovery capabilities and reduce capital expenditure on hardware.

- Cost Optimization: Cloud migration can lead to significant cost savings through reduced hardware maintenance, energy consumption, and IT staff overhead. Organizations often leverage pay-as-you-go pricing models offered by cloud providers, enabling them to optimize resource allocation and reduce operational expenses. Consider a scenario where a retail company migrates its order processing system to the cloud, scaling resources up during peak seasons and down during slower periods, resulting in substantial cost savings compared to maintaining fixed on-premises infrastructure.

- Enhanced Scalability and Elasticity: Stateful applications often require significant resources, especially during peak usage periods. Cloud environments offer the ability to dynamically scale resources up or down based on demand, providing greater elasticity and improved performance. This is particularly crucial for applications that experience fluctuating workloads, such as e-commerce platforms during sales events or streaming services during prime time.

- Improved Disaster Recovery and Business Continuity: Cloud providers offer robust disaster recovery and business continuity solutions, including data replication, automated failover, and geographically distributed deployments. Migrating stateful applications to the cloud enhances an organization’s ability to recover quickly from outages and ensure business operations continue with minimal disruption. For example, a healthcare provider might migrate its patient record system to a cloud environment with multi-region replication to ensure data availability and business continuity in the event of a regional disaster.

- Increased Agility and Innovation: Cloud platforms offer a wide range of services and tools that enable faster development cycles, more rapid deployment of new features, and improved innovation. Organizations can leverage these capabilities to modernize their applications, adopt new technologies, and respond more quickly to changing market demands. This can include leveraging services like serverless computing for specific application components or integrating with managed database services for simplified management.

Potential Benefits from Migrating Stateful Applications

Migrating stateful applications offers a range of potential benefits that can significantly impact an organization’s efficiency, competitiveness, and overall success. These benefits often intertwine and contribute to a more agile, resilient, and cost-effective IT environment.

- Reduced Operational Costs: Migrating to the cloud can significantly reduce operational costs by eliminating the need for on-premises hardware, reducing energy consumption, and automating IT management tasks.

- Improved Scalability and Performance: Cloud environments provide the ability to dynamically scale resources up or down based on demand, ensuring optimal performance and responsiveness, even during peak usage periods.

- Enhanced Business Continuity and Disaster Recovery: Cloud providers offer robust disaster recovery solutions, including data replication and automated failover, enabling organizations to recover quickly from outages and minimize downtime.

- Increased Agility and Innovation: Cloud platforms provide access to a wide range of services and tools that enable faster development cycles, more rapid deployment of new features, and improved innovation.

- Improved Security: Cloud providers offer advanced security features and services, including data encryption, intrusion detection, and compliance certifications, enhancing the overall security posture of applications.

- Greater Efficiency: Automation tools and managed services within cloud environments streamline IT operations, reducing manual tasks and improving overall efficiency. For instance, automating database backups and monitoring tasks can free up IT staff to focus on more strategic initiatives.

- Access to Advanced Technologies: Cloud platforms provide access to cutting-edge technologies such as artificial intelligence, machine learning, and big data analytics, enabling organizations to gain valuable insights and drive innovation.

Defining Success Metrics for a Migration Project

Defining clear success metrics is essential for measuring the effectiveness of a stateful application migration project. These metrics provide a quantifiable way to assess whether the migration objectives are being met and to identify areas for improvement. The selection of appropriate metrics depends on the specific goals of the migration, but several key categories are commonly used.

- Performance Metrics: These metrics focus on the performance and responsiveness of the migrated application.

  - Response Time: Measures the time it takes for the application to respond to user requests. A lower response time indicates better performance.

  - Throughput: Measures the number of transactions or requests processed per unit of time. Higher throughput indicates better performance and capacity.

  - Error Rate: Measures the percentage of requests that result in errors. A lower error rate indicates a more stable and reliable application.

- Cost Metrics: These metrics focus on the cost-effectiveness of the migrated application.

  - Total Cost of Ownership (TCO): Measures the total cost of running the application, including infrastructure, operations, and maintenance. A lower TCO indicates greater cost efficiency.

  - Resource Utilization: Measures the utilization of computing resources, such as CPU, memory, and storage. Efficient resource utilization minimizes costs.

- Availability and Reliability Metrics: These metrics focus on the availability and reliability of the migrated application.

  - Uptime: Measures the percentage of time the application is available and operational. Higher uptime indicates greater reliability.

  - Mean Time Between Failures (MTBF): Measures the average time between failures. A higher MTBF indicates greater reliability.

  - Mean Time To Recovery (MTTR): Measures the average time it takes to recover from a failure. A lower MTTR indicates faster recovery and reduced downtime.

- Business Impact Metrics: These metrics focus on the impact of the migration on business operations.

  - Customer Satisfaction: Measures customer satisfaction with the application, often through surveys or feedback mechanisms.

  - Revenue: Measures the impact of the migration on revenue generation.

  - Operational Efficiency: Measures the impact of the migration on operational efficiency, such as the time it takes to complete a business process.
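Several of these metrics reduce to simple arithmetic over an observation window. The sketch below uses one common set of definitions: MTBF as operating time divided by the number of failures, and MTTR as downtime divided by the number of failures. The `Outage` type and function names are illustrative, not part of any monitoring product.

```python
from dataclasses import dataclass


@dataclass
class Outage:
    start_hour: float  # offset from the start of the observation window
    end_hour: float


def availability_metrics(period_hours, outages):
    """Compute uptime percentage, MTBF, and MTTR for one observation window."""
    downtime = sum(o.end_hour - o.start_hour for o in outages)
    uptime = period_hours - downtime
    failures = len(outages)
    return {
        "uptime_pct": 100.0 * uptime / period_hours,
        # With no failures, MTBF is unbounded and MTTR is reported as zero.
        "mtbf_hours": uptime / failures if failures else float("inf"),
        "mttr_hours": downtime / failures if failures else 0.0,
    }


def error_rate_pct(failed_requests, total_requests):
    """Percentage of requests that ended in an error."""
    return 100.0 * failed_requests / total_requests if total_requests else 0.0


if __name__ == "__main__":
    # A 30-day window (720 hours) with a 1-hour and a 3-hour outage.
    window = availability_metrics(720, [Outage(100, 101), Outage(400, 403)])
    print(window)
    print(error_rate_pct(25, 10_000))  # 0.25
```

Capturing the same numbers before and after the migration gives a like-for-like baseline, which is usually more informative than the post-migration values alone.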

Data Consistency and Integrity Challenges

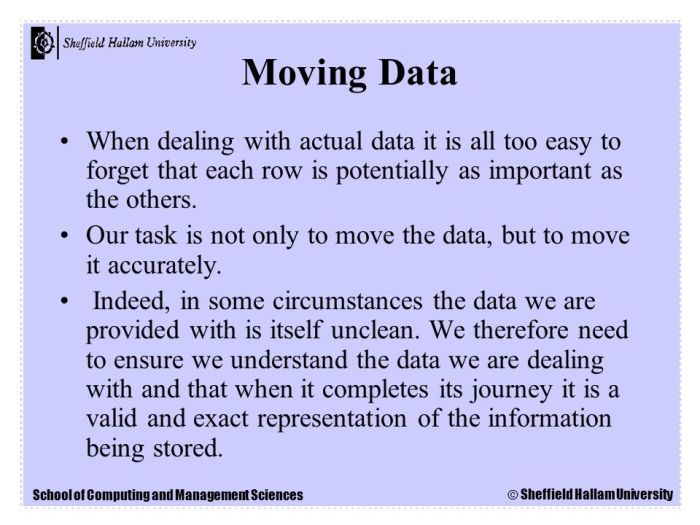

Migrating stateful applications introduces significant challenges related to data consistency and integrity. These challenges stem from the distributed nature of many stateful applications, the potential for data loss or corruption during the migration process, and the need to maintain data accuracy across different environments. Ensuring data consistency and integrity is paramount to the successful operation of the migrated application, preventing data corruption, and maintaining business continuity.

Potential Data Consistency Issues During Migration

Data consistency issues during migration can arise from several factors, potentially leading to inconsistencies between the source and target environments. These issues must be carefully considered and addressed to avoid data corruption and ensure the reliability of the migrated application.

- Network Latency and Timeouts: Network delays and timeouts can disrupt data transfer, especially when migrating large datasets. If a transaction is interrupted mid-transfer, it can lead to partial data updates, creating inconsistencies. For example, consider a financial transaction involving updating a customer’s account balance. If the transfer of the balance update is interrupted, the source and target databases may show different balances.

- Data Transformation Errors: Migrating data often requires transformations to adapt it to the target environment’s schema or data formats. Errors during these transformations can lead to incorrect data values or data loss. For instance, if a date format conversion from “MM/DD/YYYY” to “YYYY-MM-DD” is implemented incorrectly, it can lead to inaccurate date representation and subsequent application errors.

- Concurrency Conflicts: If the source and target environments are both active during the migration, concurrent operations on the same data can lead to conflicts. This is particularly problematic when migrating data that is actively being modified by the application. Imagine two users updating the same record simultaneously: one on the source and one on the target. The system must manage these updates to prevent data overwrites.

- Incomplete Data Transfers: Incomplete data transfers can occur due to various reasons, including network failures, storage limitations, or process interruptions. If data is not fully migrated, the target environment will have an incomplete view of the data, leading to application malfunctions. A real-world example is a customer order with missing line items due to an incomplete transfer.

- Version Incompatibilities: Differences in database versions, operating systems, or application software between the source and target environments can cause data compatibility issues. These incompatibilities can result in data corruption or application errors. For example, an application relying on a specific database feature unavailable in the target environment can fail to function correctly.
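The date-format case above is a useful illustration of why transformations should fail loudly rather than guess. The following is a minimal sketch that assumes source values are strings in “MM/DD/YYYY” form. The function names are illustrative.

```python
from datetime import datetime


def convert_us_date(value):
    """Convert 'MM/DD/YYYY' to 'YYYY-MM-DD', raising ValueError on bad input."""
    parsed = datetime.strptime(value, "%m/%d/%Y")
    return parsed.strftime("%Y-%m-%d")


def convert_batch(values):
    """Convert what parses cleanly and set aside everything else for review."""
    converted, rejected = [], []
    for value in values:
        try:
            converted.append(convert_us_date(value))
        except ValueError:
            rejected.append(value)
    return converted, rejected


if __name__ == "__main__":
    good, bad = convert_batch(["12/31/2023", "13/01/2024", "02/30/2024"])
    print(good)  # ['2023-12-31']
    print(bad)   # ['13/01/2024', '02/30/2024']
```

Strict parsing catches impossible dates, but it cannot detect a value such as “01/02/2024” that was really written day-first. That kind of ambiguity has to be resolved from knowledge of the source system, not from the data alone.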

Procedure to Ensure Data Integrity Throughout the Migration Process

A well-defined procedure is essential to ensure data integrity throughout the migration process. This procedure should incorporate measures to minimize data loss, prevent corruption, and verify the accuracy of the migrated data.

- Pre-Migration Data Validation: Before initiating the migration, validate the data in the source environment. This includes checking for data inconsistencies, missing values, and format errors. Use tools to identify and correct any issues before the migration begins.

- Data Backup and Recovery Plan: Create a comprehensive backup of the source data before migration. Develop a recovery plan to restore the data to its original state in case of migration failures or data corruption. This backup serves as a safety net, allowing you to revert to a known good state if necessary.

- Staged Migration Approach: Implement a staged migration approach, migrating data in batches or phases. This allows for testing and validation at each stage, minimizing the impact of errors. For example, migrate a subset of the data first, validate its integrity, and then proceed with the rest.

- Data Transformation and Validation: Implement robust data transformation processes to convert the data to the target environment’s format. Validate the transformed data to ensure its accuracy and completeness. This may involve checksums or comparison of source and target data.

- Monitoring and Logging: Implement comprehensive monitoring and logging throughout the migration process. Monitor data transfer progress, error rates, and system performance. Log all migration activities to facilitate troubleshooting and auditing.

- Data Verification and Reconciliation: After the migration, verify the data in the target environment against the source data. Perform data reconciliation to identify and resolve any discrepancies. This can involve comparing data counts, checksums, or performing more complex data validation checks.

- Rollback Plan: Have a rollback plan in place to revert to the source environment if critical issues arise during or after the migration. This plan should include steps to restore the data and application to the pre-migration state.
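The verification and reconciliation step can be sketched as a comparison of row counts and content checksums between the two environments. The example below uses in-memory SQLite databases as stand-ins for the source and target. The `accounts` table and the helper names are hypothetical. Hashing `repr(row)` is a simplification that assumes both sides return values with identical Python types.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256 hex digest) over all rows in key order.

    `table` and `key_column` are interpolated into the SQL text, so they
    must come from trusted configuration, never from user input.
    """
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def reconcile(source, target, table, key_column):
    """Compare one table across two connections and describe the result."""
    src_count, src_hash = table_fingerprint(source, table, key_column)
    dst_count, dst_hash = table_fingerprint(target, table, key_column)
    if src_count != dst_count:
        return f"row count mismatch: source={src_count} target={dst_count}"
    if src_hash != dst_hash:
        return "checksum mismatch: same row count but different contents"
    return "ok"


def make_demo_db(rows):
    """Build a throwaway database with a hypothetical accounts table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", rows)
    conn.commit()
    return conn


if __name__ == "__main__":
    source = make_demo_db([(1, 100), (2, 250)])
    target = make_demo_db([(1, 100), (2, 999)])
    print(reconcile(source, target, "accounts", "id"))
```

For large tables, a whole-table checksum only says that something differs. Fingerprinting by key range or batch narrows the discrepancy down to a span that can be re-migrated on its own.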

Handling Data Corruption Scenarios

Data corruption can occur during migration despite careful planning, so it is essential to have procedures for handling it effectively. These procedures should focus on detecting, isolating, and repairing corrupted data while minimizing the impact on the application.

- Data Corruption Detection: Implement mechanisms to detect data corruption. This includes using checksums, data validation rules, and anomaly detection techniques. Regular data integrity checks should be performed throughout the migration process.

- Isolation of Corrupted Data: When data corruption is detected, isolate the corrupted data to prevent it from further affecting the application. This might involve quarantining the corrupted records or disabling access to the affected data.

- Data Repair and Recovery: Develop procedures to repair or recover corrupted data. This could involve restoring data from backups, applying data corrections, or re-migrating the affected data. The repair strategy will depend on the nature and extent of the corruption.

- Error Handling and Alerting: Implement robust error handling mechanisms to capture and log data corruption events. Set up alerts to notify administrators of data integrity issues. This allows for prompt response and resolution.

- Post-Migration Data Auditing: After the migration, conduct regular data audits to ensure the ongoing integrity of the data. These audits should include data validation checks and anomaly detection.

- Example: Handling Checksum Failures. Suppose a checksum validation fails during data transfer. The system should immediately stop transferring the affected data, log the failure, alert the administrator, and offer the option to re-transfer the affected data block or revert to a known good state.
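A checksum-guarded transfer with bounded retries might look like the sketch below. The `send` callable is a hypothetical stand-in for the real transport. It is assumed to return the bytes as they were stored on the target. The retry limit and the `TransferAborted` exception are illustrative choices.

```python
import hashlib
import logging

logger = logging.getLogger("migration")


class TransferAborted(Exception):
    """Raised when a block still fails verification after all retries."""


def transfer_block(block, send, max_retries=3):
    """Send one block and verify it by SHA-256, retrying on mismatch."""
    expected = hashlib.sha256(block).hexdigest()
    for attempt in range(1, max_retries + 1):
        received = send(block)
        if hashlib.sha256(received).hexdigest() == expected:
            return received
        # Log every failure so the event can be audited and alerted on.
        logger.warning("checksum mismatch on attempt %d of %d", attempt, max_retries)
    # Stop instead of continuing with corrupted data; the caller decides
    # whether to re-migrate the block or revert to a known good state.
    raise TransferAborted(f"block failed verification after {max_retries} attempts")


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky_send(data):
        # Corrupts the first attempt only, to exercise the retry path.
        attempts["count"] += 1
        return b"corrupted" if attempts["count"] == 1 else data

    print(transfer_block(b"payload", flaky_send))  # b'payload'
```

Raising an exception on persistent failure keeps the decision about rollback outside the transfer loop, which matches the separation between detection and recovery described above.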

Downtime and Business Continuity Concerns

Migrating stateful applications presents significant challenges to maintaining business operations. Planned or unplanned downtime can disrupt services, leading to financial losses, reputational damage, and decreased customer satisfaction. A well-defined migration strategy must prioritize minimizing downtime and ensuring business continuity to mitigate these risks.

Impact of Downtime on Business Operations

Downtime, the period when a system or service is unavailable, has a direct and measurable impact on various aspects of a business. The extent of the impact depends on factors such as the criticality of the application, the duration of the outage, and the nature of the business.

- Financial Losses: Downtime translates directly into financial losses. These losses can arise from lost revenue due to interrupted transactions, reduced productivity, and penalties for failing to meet service level agreements (SLAs). For example, consider an e-commerce platform experiencing a 2-hour outage during peak shopping hours. If the average revenue per hour is $100,000, the direct revenue loss would be $200,000, not accounting for potential loss of future sales.

- Reputational Damage: Extended downtime can severely damage a company’s reputation. Customers may lose trust in the service provider, leading to churn and negative reviews. This damage is particularly acute in industries where reliability is critical, such as financial services or healthcare. Negative publicity and social media backlash can amplify the reputational impact.

- Decreased Customer Satisfaction: Service unavailability leads to customer frustration and dissatisfaction. Customers may be unable to access critical data, complete transactions, or receive support. This negative experience can lead to decreased customer loyalty and a decline in customer lifetime value.

- Operational Costs: Downtime incurs operational costs associated with incident response, troubleshooting, and recovery efforts. These costs include the salaries of IT staff, the use of external consultants, and the potential need for expedited hardware or software upgrades.

- Legal and Compliance Risks: In regulated industries, downtime can result in non-compliance with regulations, leading to fines and legal liabilities. For example, financial institutions must adhere to strict uptime requirements to protect customer data and ensure the integrity of transactions.
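The financial-loss example above is straightforward arithmetic, and a small helper makes it easy to rerun with an organization’s own figures. The `sla_penalty` and `response_cost` parameters are hypothetical additions for the other cost categories in the list. They default to zero.

```python
def downtime_cost(outage_hours, revenue_per_hour, sla_penalty=0.0, response_cost=0.0):
    """Estimate the direct cost of an outage.

    Lost revenue is outage duration times average hourly revenue; SLA
    penalties and incident-response costs are added when they are known.
    """
    return outage_hours * revenue_per_hour + sla_penalty + response_cost


if __name__ == "__main__":
    # The e-commerce example: a 2-hour outage at $100,000 of revenue per hour.
    print(downtime_cost(2, 100_000))  # 200000.0
```

This captures only direct costs. Reputational damage and lost future sales are real but much harder to quantify, so they are left out of the estimate.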

Strategies for Minimizing Downtime During the Migration

Minimizing downtime is a critical goal during stateful application migration. Several strategies can be employed to reduce the duration and impact of outages.

- Careful Planning and Preparation: A comprehensive migration plan is essential. This includes detailed documentation, risk assessment, and the identification of potential failure points. Thorough pre-migration testing in a staging environment allows for the detection and resolution of issues before the production migration.

- Phased Rollouts: Instead of migrating the entire application at once, a phased rollout approach can be used. This involves migrating the application in smaller increments, such as by migrating a portion of the user base or specific functionalities. This approach reduces the blast radius of potential issues, allowing for faster rollback if problems arise.

- Blue/Green Deployments: This strategy involves maintaining two identical environments: a “blue” environment (the current production environment) and a “green” environment (the new environment). During migration, the green environment is deployed and tested. Once testing is complete, traffic is switched from the blue environment to the green environment. This allows for a quick rollback to the blue environment if any issues are encountered in the green environment.

- Canary Deployments: A canary deployment involves routing a small percentage of user traffic to the new application version. This allows for monitoring of the new version’s performance and stability in a real-world environment before a full rollout. If issues are detected, the traffic can be quickly rerouted back to the original version.

- Automated Rollback Mechanisms: Implementing automated rollback procedures is crucial. In the event of a migration failure, the system should automatically revert to the previous stable state, minimizing downtime. This requires careful planning and the pre-configuration of rollback scripts and procedures.

- Infrastructure Automation: Automating infrastructure provisioning and configuration streamlines the migration process. Infrastructure as Code (IaC) tooling allows for the consistent and repeatable deployment of infrastructure components, reducing the risk of human error and accelerating the migration.

- Effective Monitoring and Alerting: Implementing robust monitoring and alerting systems is essential. These systems should track key performance indicators (KPIs) and provide timely notifications of any anomalies or performance degradation. Proactive monitoring allows for the rapid identification and resolution of issues, minimizing downtime.
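The canary and automated-rollback strategies can be combined in a simple traffic router. This is a sketch under stated assumptions: the `CanaryRouter` class, its thresholds, and the "stable"/"canary" labels are all hypothetical. A production system would normally delegate routing to a load balancer or service mesh.

```python
import random


class CanaryRouter:
    """Sends a fraction of traffic to the canary and rolls back on errors."""

    def __init__(self, canary_fraction, max_error_rate, min_requests=50, rng=None):
        self.canary_fraction = canary_fraction
        self.max_error_rate = max_error_rate
        self.min_requests = min_requests  # avoid reacting to tiny samples
        self.canary_requests = 0
        self.canary_errors = 0
        self.rolled_back = False
        self._rng = rng or random.Random()

    def route(self):
        """Pick the version that should serve the next request."""
        if self.rolled_back:
            return "stable"
        return "canary" if self._rng.random() < self.canary_fraction else "stable"

    def record(self, version, ok):
        """Record a request outcome and trigger rollback if the canary is unhealthy."""
        if version != "canary" or self.rolled_back:
            return
        self.canary_requests += 1
        if not ok:
            self.canary_errors += 1
        enough_data = self.canary_requests >= self.min_requests
        if enough_data and self.canary_errors / self.canary_requests > self.max_error_rate:
            self.rolled_back = True  # all later traffic goes to "stable"


if __name__ == "__main__":
    router = CanaryRouter(canary_fraction=0.1, max_error_rate=0.05)
    version = router.route()
    router.record(version, ok=True)
    print(version, router.rolled_back)
```

For stateful applications, routing is only half of the rollback story. Any writes the canary made to shared or migrated state also need a plan, which is why the data-level safeguards matter alongside traffic control.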

Methods for Ensuring Business Continuity During the Migration Process

Ensuring business continuity is paramount during stateful application migration. Several methods can be employed to maintain critical business functions even during periods of planned or unplanned downtime.

- High Availability Architectures: Designing the target infrastructure with high availability in mind is crucial. This involves implementing redundancy at all levels, including hardware, software, and network components. Load balancers, failover mechanisms, and automated recovery processes are essential elements of a high-availability architecture.

- Disaster Recovery Planning: A comprehensive disaster recovery (DR) plan is necessary. This plan should outline the steps to be taken to restore the application and its data in the event of a major outage. The DR plan should include regular testing and updates to ensure its effectiveness.

- Data Replication and Synchronization: Implementing data replication and synchronization mechanisms is critical to maintain data consistency across environments. Technologies like database replication, distributed file systems, and cloud-based storage solutions can be used to ensure that data is available in multiple locations, minimizing the impact of a single point of failure.

- Failover Mechanisms: Automated failover mechanisms should be in place to quickly switch to a backup instance or environment in case of an outage. This requires careful configuration and testing to ensure that the failover process is seamless and transparent to users.

- Geographic Redundancy: Deploying the application across multiple geographic regions can provide resilience against regional outages. This ensures that even if one region experiences an outage, the application can continue to function in other regions.

- Backup and Recovery Procedures: Regular backups of the application and its data are essential. Backup procedures should be automated and tested regularly to ensure that data can be restored quickly and reliably in the event of an outage.

- Communication Plan: A well-defined communication plan is essential to keep stakeholders informed during the migration process. This plan should outline the channels of communication, the frequency of updates, and the key personnel responsible for communication. Transparency and proactive communication help to build trust and manage expectations.
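At its core, an automated failover mechanism is a health-checked choice among endpoints in priority order. The sketch below assumes a hypothetical `is_healthy` probe supplied by the caller. The endpoint names are placeholders.

```python
def choose_active(endpoints, is_healthy):
    """Return the first healthy endpoint, preferring earlier entries.

    `endpoints` is ordered by priority (primary first) and `is_healthy`
    is a caller-supplied probe that returns True or False.
    """
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    raise RuntimeError("no healthy endpoint available")


if __name__ == "__main__":
    down = {"primary-db"}  # pretend the primary has failed
    active = choose_active(
        ["primary-db", "replica-db", "dr-site-db"],
        lambda name: name not in down,
    )
    print(active)  # replica-db
```

Selecting a healthy endpoint is the easy part. For stateful systems the harder question is whether the chosen replica has all acknowledged writes, so failover should be tested together with the replication setup.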

Infrastructure Compatibility and Resource Allocation

Migrating stateful applications necessitates careful consideration of infrastructure compatibility and resource allocation. These factors directly impact the success of the migration, influencing performance, stability, and cost-effectiveness. Incompatible infrastructure or inadequate resource provisioning can lead to application downtime, data loss, and a compromised user experience.

Compatibility Issues Between Source and Target Environments

Compatibility issues between the source and target environments are a primary concern during stateful application migrations. Differences in operating systems, networking configurations, storage solutions, and database versions can introduce significant challenges.

The following factors highlight common compatibility challenges:

- Operating System Differences: Migrating from one operating system to another (e.g., Windows to Linux) presents significant compatibility hurdles. Applications and their dependencies may not function identically, requiring code modifications, recompilation, or the use of compatibility layers.

- Networking Configuration Discrepancies: Network settings, including IP addresses, port assignments, and firewall rules, must be replicated or adapted in the target environment. Mismatches can lead to connectivity issues, preventing the application from communicating with other services or users. For example, if the source application uses a specific port that is blocked in the target environment’s firewall, the application will fail to function correctly.

- Storage Solution Compatibility: The source and target environments may employ different storage technologies (e.g., SAN, NAS, object storage). Data migration strategies must account for these differences, potentially requiring data transformation, data replication, or the use of compatible storage drivers.

- Database Version Mismatches: Database versions in the source and target environments must be compatible. Upgrading or downgrading the database during migration can introduce data corruption risks or require significant application code changes to adapt to the new database features and behaviors.

- Containerization and Orchestration Platforms: The source and target environments may use different container runtimes (e.g., Docker) or orchestration platforms (e.g., Kubernetes). Ensuring that the application and its dependencies are compatible with the target platform is critical for successful migration.
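Many of these incompatibilities can be caught before any data moves by comparing descriptions of the two environments. The sketch below is illustrative only. The dictionary keys (`os`, `db_version`, `ports`, `open_ports`) are a made-up inventory format, and versions are assumed to be plain dotted numbers.

```python
def parse_version(text):
    """Turn a dotted numeric version such as '14.9' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def preflight(source, target):
    """Return a list of human-readable compatibility problems (empty if none)."""
    problems = []
    if source["os"] != target["os"]:
        problems.append(f"operating system differs: {source['os']} -> {target['os']}")
    if parse_version(target["db_version"]) < parse_version(source["db_version"]):
        problems.append(
            f"database downgrade: {source['db_version']} -> {target['db_version']}"
        )
    # Ports the application listens on must be reachable in the target.
    for port in sorted(set(source["ports"]) - set(target["open_ports"])):
        problems.append(f"port {port} is not open in the target firewall")
    return problems


if __name__ == "__main__":
    source_env = {"os": "linux", "db_version": "13.4", "ports": [5432, 8080]}
    target_env = {"os": "linux", "db_version": "12.1", "open_ports": [5432]}
    for problem in preflight(source_env, target_env):
        print(problem)
```

A check like this does not prove compatibility. It only flags the mismatches that are cheap to detect, leaving behavioral differences to testing in a staging environment.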

Resource Allocation Challenges in the Target Environment

Proper resource allocation is crucial for ensuring the migrated stateful application performs optimally in the target environment. Under-provisioning resources can lead to performance bottlenecks, while over-provisioning increases costs.

Several resource allocation challenges need careful consideration:

- CPU and Memory Requirements: Determining the appropriate CPU and memory resources requires analyzing the application’s historical resource utilization patterns. Monitoring tools can provide insights into peak loads, average usage, and resource consumption trends.

- Storage Capacity and I/O Performance: Sufficient storage capacity and adequate I/O performance are essential for data storage and retrieval. The storage solution must be able to handle the application’s data volume, data access patterns, and expected growth.

- Network Bandwidth and Latency: The network infrastructure must provide sufficient bandwidth and low latency to support the application’s communication needs. High latency can negatively impact application performance, especially for applications that are sensitive to network delays.

- Database Resource Allocation: Databases require careful resource allocation, including CPU, memory, storage, and network bandwidth. The database server must be sized appropriately to handle the application’s data volume, query load, and concurrent user connections.

- Load Balancing and Scalability: The target environment should support load balancing and scalability to handle fluctuating workloads. Load balancers distribute traffic across multiple application instances, ensuring high availability and preventing overload on any single instance.
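As a rough illustration of sizing from historical utilization, the sketch below takes a list of usage samples and suggests a capacity equal to a high percentile of observed usage plus a safety margin. The function name `recommend_capacity`, the 95th percentile, the 30% headroom, and the sample values are all illustrative assumptions, not figures from a specific tool or provider.

```python
import math
from statistics import quantiles


def recommend_capacity(samples, headroom_percent=30, percentile=95):
    """Suggest capacity as a percentile of observed usage plus headroom.

    `samples` are historical utilization readings in any consistent unit
    (e.g. CPU millicores or MiB of memory). All defaults are assumptions.
    """
    if len(samples) < 2:
        raise ValueError("need at least two utilization samples")
    # quantiles(n=100) returns 99 cut points; index p-1 is the p-th percentile.
    cut_points = quantiles(samples, n=100, method="inclusive")
    observed_peak = cut_points[percentile - 1]
    return math.ceil(observed_peak * (100 + headroom_percent) / 100)


# Hypothetical CPU readings (millicores) exported from a monitoring tool.
cpu_millicores = [400, 420, 450, 500, 480, 900, 430, 410, 470, 460]
print(recommend_capacity(cpu_millicores))
```

Using a percentile rather than the maximum keeps a single outlier from driving over-provisioning, while the headroom guards against under-provisioning during growth.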

Infrastructure Option Comparison

The choice of infrastructure significantly impacts the success of a stateful application migration. The following table compares different infrastructure options, considering their key characteristics.

| Infrastructure Option | Description | Advantages | Disadvantages |
|---|---|---|---|
| On-Premises Infrastructure | Applications are hosted on servers and infrastructure owned and managed by the organization within its own data center. | | |
| Infrastructure as a Service (IaaS) | Applications are hosted on virtualized infrastructure provided by a cloud provider (e.g., AWS, Azure, Google Cloud). | | |
| Platform as a Service (PaaS) | A cloud computing model that provides a platform for developing, running, and managing applications without the complexity of managing the underlying infrastructure. | | |
| Containerization (e.g., Kubernetes) | Applications are packaged into containers and orchestrated using platforms like Kubernetes. | | |

Network Configuration and Security Implications

Migrating stateful applications necessitates careful consideration of network configurations and security protocols. The transition involves altering network settings to accommodate the application’s new environment, which presents significant security challenges. Failing to address these implications can expose the application and associated data to vulnerabilities, potentially leading to data breaches, service disruptions, and financial losses. This section explores the network configuration changes required, associated security considerations, and a comprehensive plan for securing the migrated application.

Network Configuration Changes During Migration

Migrating stateful applications often requires substantial modifications to network infrastructure. These changes are critical to ensure the application functions correctly in its new environment while maintaining optimal performance and availability. The network configuration must be adapted to handle data transfer, traffic routing, and communication protocols.

- IP Address Management: The assignment of IP addresses is a fundamental aspect of network configuration. During migration, the application’s IP addresses might need to be changed to align with the new network infrastructure. This can involve reconfiguring static IP addresses, updating DNS records, and ensuring DHCP servers are correctly configured. For example, if an application is migrating from an on-premises data center to a cloud environment, the IP address scheme will almost certainly change. This necessitates careful planning to minimize downtime and ensure seamless service continuity.

- Firewall Rules and Security Groups: Firewalls and security groups are essential for controlling network traffic and protecting the application from unauthorized access. The migration process necessitates updating these rules to allow the application to communicate with the necessary services and users in the new environment. This involves opening specific ports, defining source and destination IP addresses, and configuring access control lists (ACLs). For instance, when moving a database server, firewall rules must be adjusted to permit database client access to the new server’s IP address while denying access from unauthorized sources.

- Load Balancing and Traffic Management: Stateful applications often benefit from load balancing to distribute traffic across multiple instances, ensuring high availability and performance. During migration, load balancers need to be reconfigured to direct traffic to the application’s new instances. This includes updating the health checks, configuring session persistence (if required by the application), and adjusting traffic distribution algorithms. Consider a scenario where a web application uses a load balancer; during migration, the load balancer configuration must be updated to point to the new server instances and maintain session affinity to preserve user sessions.

- Network Segmentation and Virtual Private Networks (VPNs): Network segmentation is crucial for isolating the application and its data from other parts of the network, enhancing security. VPNs create secure tunnels for communication between the application and other resources, such as databases or external services. Migration may involve reconfiguring network segments and VPN connections to ensure the application remains isolated and secure in its new environment. This is particularly important when migrating applications to a public cloud, where network isolation is essential to protect sensitive data.

- DNS Configuration: Domain Name System (DNS) records must be updated to point to the application’s new IP addresses or load balancer endpoints. This is critical for users and other services to access the application in the new environment. The migration process requires careful planning and execution to minimize DNS propagation delays and prevent service disruptions. For example, when migrating a customer-facing web application, DNS records must be updated to reflect the new IP addresses or load balancer endpoints to ensure users can access the application without interruption.
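The firewall point above can be made concrete with a small pre-cutover check. The following is a minimal sketch, assuming a default-deny policy modeled as a list of allow rules; the `AllowRule` class, the `is_allowed` helper, and the CIDR ranges and port are hypothetical and do not correspond to any particular firewall or cloud security-group API.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class AllowRule:
    """One hypothetical allow rule: a source CIDR and a destination port."""
    source_cidr: str
    port: int


def is_allowed(rules, source_ip, port):
    """Default-deny: traffic passes only if a rule matches source and port."""
    addr = ip_address(source_ip)
    return any(
        port == rule.port and addr in ip_network(rule.source_cidr)
        for rule in rules
    )


# Assumed target-environment rule: only the app subnet may reach the database.
rules = [AllowRule("10.20.0.0/24", 5432)]
print(is_allowed(rules, "10.20.0.15", 5432))  # app server -> database port
print(is_allowed(rules, "10.21.0.15", 5432))  # host outside the app subnet
```

Running a check like this against the planned rule set before cutover helps confirm that expected clients are permitted and everything else is denied.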

Security Considerations for Migrating Stateful Applications

Migrating stateful applications introduces several security considerations that must be addressed to protect the application and its data from potential threats. These considerations encompass various aspects of security, including data encryption, access control, vulnerability management, and incident response. Neglecting these can leave the application vulnerable to attacks, leading to significant risks.

- Data Encryption: Encryption is crucial for protecting sensitive data, both in transit and at rest. During migration, it is essential to ensure that all data is encrypted to prevent unauthorized access. This includes encrypting data transmitted over the network, encrypting data stored in databases, and encrypting backups. For example, when migrating a database, the data at rest encryption should be enabled and the database connection should be encrypted using TLS/SSL to protect sensitive data.

- Access Control and Authentication: Implementing robust access control mechanisms is critical to restrict access to the application and its data to authorized users only. This involves using strong authentication methods, such as multi-factor authentication (MFA), and defining granular access control policies. During migration, access control configurations must be reviewed and updated to ensure they align with the new environment. For instance, if the application is using a new identity provider, the authentication and authorization configurations should be updated accordingly.

- Vulnerability Management: Identifying and mitigating vulnerabilities in the application and its underlying infrastructure is essential to prevent exploitation by attackers. This involves conducting vulnerability scans, patching software, and implementing security best practices. During migration, it’s crucial to scan the new environment for vulnerabilities and remediate any identified issues before the application goes live.

- Security Monitoring and Logging: Implementing comprehensive security monitoring and logging is crucial for detecting and responding to security incidents. This involves collecting logs from the application, the network, and the infrastructure, and analyzing them for suspicious activity. During migration, the security monitoring and logging configurations should be updated to include the new environment.

- Incident Response Plan: Having a well-defined incident response plan is essential for handling security incidents effectively. This plan should outline the steps to be taken in the event of a security breach, including containment, eradication, recovery, and post-incident analysis. During migration, the incident response plan should be updated to include the new environment.
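For the encryption-in-transit point, a client can refuse unverified or outdated TLS connections when it talks to the migrated database or service. The sketch below uses Python's standard `ssl` module; the helper name `strict_client_context` and the choice of TLS 1.2 as the floor are assumptions for illustration, and how the context is handed to a given database driver depends on that driver.

```python
import ssl


def strict_client_context(ca_file=None):
    """Build a client-side TLS context that verifies the server certificate.

    `ca_file` is an optional path to a CA bundle (for example, a private CA
    used in the target environment); None falls back to the system trust store.
    """
    # create_default_context enables certificate and hostname verification.
    context = ssl.create_default_context(cafile=ca_file)
    # Assumed policy: reject anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = strict_client_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```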

Plan for Securing the Migrated Application

Securing a migrated stateful application requires a comprehensive plan that addresses all relevant security aspects. This plan should be developed before the migration and updated as needed. The plan should incorporate several key elements to ensure the application is secure in its new environment.

- Risk Assessment: Conduct a thorough risk assessment to identify potential security threats and vulnerabilities. This should include an analysis of the application, the network, and the infrastructure. The risk assessment should consider all aspects of the migration process, including data transfer, network configuration, and access control.

- Security Architecture Design: Design a security architecture that addresses the identified risks and vulnerabilities. This should include implementing security controls, such as firewalls, intrusion detection systems, and access control policies. The security architecture should be designed to be scalable and adaptable to changing threats.

- Implementation of Security Controls: Implement the security controls outlined in the security architecture. This includes configuring firewalls, setting up intrusion detection systems, and implementing access control policies. The implementation should be thoroughly tested to ensure it functions correctly.

- Security Testing and Validation: Conduct thorough security testing and validation to ensure that the implemented security controls are effective. This includes penetration testing, vulnerability scanning, and code reviews. The testing should be performed regularly to identify and address any new vulnerabilities.

- Security Training and Awareness: Provide security training and awareness programs to all personnel involved in the migration and operation of the application. This should include training on security best practices, incident response procedures, and the use of security tools. The training should be updated regularly to reflect changes in the threat landscape.

- Regular Security Audits and Reviews: Conduct regular security audits and reviews to assess the effectiveness of the security controls and identify areas for improvement. The audits should be performed by qualified security professionals. The results of the audits should be used to update the security plan and implement any necessary changes.

Application Complexity and Dependencies

Migrating stateful applications often encounters significant hurdles related to their inherent complexity and the intricate web of dependencies they possess. These applications, by their very nature, tend to be multifaceted, comprising numerous components, services, and interconnected modules. The presence of these complexities, coupled with the reliance on external resources and other applications, can significantly complicate the migration process, increasing the risk of errors, downtime, and unexpected behavior.

Understanding and addressing these challenges is crucial for a successful migration.

Identifying Application Complexity Challenges

The complexity of a stateful application presents several key challenges during migration. The more complex the application, the more difficult it is to understand, document, and ultimately, migrate.

- Inter-Component Communication: Stateful applications frequently involve complex interactions between various components. These interactions can be synchronous or asynchronous, utilizing protocols such as REST, gRPC, or message queues. Migrating these components requires meticulous planning to ensure that communication channels remain functional and data is correctly routed post-migration. A failure in communication can lead to data loss or service unavailability.

- External Dependencies: Stateful applications often depend on external services, databases, caches, and other infrastructure components. Migrating these dependencies, or ensuring their compatibility with the new environment, is a critical challenge. For instance, migrating a database requires careful consideration of data schema, data volume, and the impact on application performance. Incompatibility between the application and its dependencies can render the application unusable.

- Codebase Size and Structure: Large and complex codebases are difficult to understand, test, and migrate. The presence of legacy code, technical debt, and undocumented functionality can further complicate the process. The sheer volume of code and the intricate relationships between different modules make it challenging to identify and address potential issues during migration.

- Configuration Management: Stateful applications often rely on extensive configuration files that specify settings for various components. Managing and migrating these configurations, ensuring they are compatible with the new environment, is a significant challenge. Incorrect configuration can lead to application failures or unexpected behavior.

- Monitoring and Logging: Robust monitoring and logging are essential for identifying and resolving issues during and after migration. Complex applications require sophisticated monitoring tools to track performance, identify errors, and ensure data integrity. The lack of adequate monitoring can make it difficult to diagnose and fix problems, leading to prolonged downtime.

Managing Dependencies During Migration

Effective management of dependencies is critical for a successful stateful application migration. A systematic approach is required to identify, assess, and migrate all dependencies while minimizing disruption.

- Dependency Mapping and Analysis: Before starting the migration, it is crucial to create a comprehensive map of all application dependencies. This involves identifying all external services, databases, libraries, and other components that the application relies on. Analyzing these dependencies allows for the prioritization of migration efforts and the identification of potential compatibility issues. Tools like dependency scanners and network traffic analyzers can aid in this process.

- Dependency Isolation and Decoupling: Where possible, isolate and decouple dependencies to simplify the migration process. This may involve refactoring code to reduce dependencies on specific services or components. Containerization technologies, such as Docker, can be used to package and isolate dependencies, making them easier to migrate.

- Compatibility Assessment: Assess the compatibility of each dependency with the target environment. This involves verifying that the dependencies are supported in the new environment and that they can interact correctly with the migrated application. If compatibility issues are identified, consider alternatives or migration strategies that address these issues.

- Dependency Migration Strategies: Implement appropriate migration strategies for each dependency. These strategies can include:

  - Lift and Shift: Migrate the dependency as-is to the new environment.

  - Replatforming: Modify the dependency to run on the new environment.

  - Re-architecting: Redesign the dependency to leverage the new environment’s capabilities.

  - Replacement: Replace the dependency with a similar service or component available in the new environment.

- Testing and Validation: Rigorously test and validate the migrated application and its dependencies in the new environment. This involves performing functional, performance, and integration tests to ensure that the application behaves as expected. Use automated testing tools to streamline the testing process and ensure comprehensive coverage.
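Once a dependency map exists, one way to derive a migration order is a topological sort, so that each component is moved only after the things it depends on. The sketch below uses the standard-library `graphlib` module (Python 3.9+); the component names and the `migration_order` helper are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def migration_order(dependency_map):
    """Return components ordered so that dependencies come first.

    `dependency_map` maps each component to the set of components it
    depends on. Raises CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependency_map).static_order())


# Hypothetical dependency map produced during discovery.
dependencies = {
    "web-frontend": {"order-service"},
    "order-service": {"postgres", "redis-cache"},
    "postgres": set(),
    "redis-cache": set(),
}

try:
    print(migration_order(dependencies))
except CycleError as error:
    print("circular dependency, needs decoupling first:", error.args[1])
```

A `CycleError` here is itself useful information: it points at components that must be decoupled or migrated together.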

Flow Chart: Migration of a Complex Application

The following flow chart illustrates the process of migrating a complex application, incorporating considerations for both application complexity and dependency management.

Description of the flow chart: The flow chart begins with a start node labeled “Initiate Migration Project”.

Phase 1: Assessment and Planning

- Node 1: “Application Discovery and Analysis”. Process: Identify application components, dependencies (databases, external services, libraries), and communication patterns. Document the application architecture. Output: Application Architecture Diagram, Dependency Map.

- Node 2: “Define Migration Goals and Objectives”. Process: Determine business requirements (e.g., reduced costs, improved performance), set success metrics (e.g., uptime, latency), and establish a timeline. Output: Migration Plan, Success Criteria.

- Node 3: “Environment Selection and Preparation”. Process: Choose the target environment (cloud, on-premises, hybrid), provision resources, and set up infrastructure. Output: Target Environment Ready.

- Node 4: “Dependency Analysis and Strategy Selection”. Process: Analyze dependencies, assess compatibility, and select migration strategies (Lift and Shift, Replatform, Re-architect, Replace) for each. Output: Dependency Migration Strategies.

Phase 2: Migration Execution

- Node 5: “Component Migration”. Process: Migrate individual application components according to the chosen strategies. This may involve code changes, configuration updates, and data migration. Output: Migrated Components.

- Node 6: “Dependency Migration”. Process: Migrate external dependencies, such as databases, caches, and external services, ensuring compatibility and data integrity. Output: Migrated Dependencies.

- Node 7: “Integration Testing”. Process: Test the integration of migrated components and dependencies to ensure they work together correctly. Output: Integrated System.

- Node 8: “User Acceptance Testing (UAT)”. Process: Involve end-users in testing the migrated application to validate functionality and user experience. Output: UAT Results, User Sign-off.

Phase 3: Post-Migration

- Node 9: “Deployment and Cutover”. Process: Deploy the migrated application to production, manage downtime, and switch traffic to the new environment. Output: Deployed Application.

- Node 10: “Monitoring and Optimization”. Process: Monitor the application’s performance, identify and resolve issues, and optimize resources. Output: Optimized Application.

- Node 11: “Ongoing Maintenance and Support”. Process: Provide ongoing maintenance, support, and updates to the migrated application. Output: Maintained Application.

- Decision point (diamond): “Rollback Plan?” If YES, “Execute Rollback Plan”. If NO, “Continue to next phase”.

The flow chart illustrates a cyclical process with feedback loops for testing and refinement. The migration process is iterative, with continuous monitoring and optimization. The flow chart includes decision points, such as “Rollback Plan?”, to provide a safety net for unforeseen issues during the migration. It also includes the testing phases (Integration Testing and UAT), which help to validate the migrated application before deployment.

The flow chart structure is designed to handle complex application migration, with a step-by-step process that minimizes the risk of errors and downtime.
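The rollback decision point in the flow chart can be sketched as a small step runner: steps execute in order, and if one fails, the steps that already completed are undone in reverse. This is only an illustrative sketch; the `run_migration` function, the step names, and the `do`/`undo` callables are hypothetical placeholders for real migration tooling.

```python
def run_migration(steps):
    """Run (name, do, undo) steps in order; roll back completed steps on failure.

    Returns (succeeded, log). The failed step's own undo is not called,
    on the assumption that a failed step leaves nothing to revert.
    """
    completed = []
    log = []
    for name, do, undo in steps:
        try:
            do()
        except Exception as error:
            log.append(f"failed: {name} ({error})")
            # Undo in reverse order so later steps are reverted first.
            for done_name, done_undo in reversed(completed):
                done_undo()
                log.append(f"rolled back: {done_name}")
            return False, log
        completed.append((name, undo))
        log.append(f"completed: {name}")
    return True, log


def failing_cutover():
    raise RuntimeError("health check failed")


succeeded, log = run_migration([
    ("component migration", lambda: None, lambda: None),
    ("deployment and cutover", failing_cutover, lambda: None),
])
print(succeeded)
print("\n".join(log))
```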

Testing and Validation Strategies

Migrating stateful applications necessitates rigorous testing and validation to ensure data integrity, functionality, and performance are preserved throughout the transition. This process is crucial for minimizing risks, identifying potential issues early, and guaranteeing a smooth user experience post-migration. A comprehensive testing strategy encompasses various levels of testing, each designed to address specific aspects of the application’s behavior and its interaction with the underlying infrastructure.

Testing Strategies for Validating Migrated Applications

A multifaceted approach to testing is essential for verifying the successful migration of stateful applications. This involves employing a combination of testing techniques, each tailored to assess different facets of the migrated application.

- Unit Testing: Focuses on individual components or modules of the application. Each unit is tested in isolation to ensure it functions correctly according to its specifications. This helps to identify and resolve localized issues early in the migration process.

- Integration Testing: Examines the interactions between different modules or components. This verifies that the integrated components work together seamlessly, ensuring data flows correctly between them. Integration testing helps identify issues related to data synchronization and communication between various parts of the application.

- System Testing: Evaluates the complete application as a whole, simulating real-world scenarios. This testing level verifies that the migrated application meets all functional and non-functional requirements, including performance, security, and usability.

- Performance Testing: Assesses the application’s performance under various load conditions. This involves measuring response times, throughput, and resource utilization to ensure the migrated application can handle the expected workload without degradation. Load testing, stress testing, and endurance testing are typical performance testing techniques.

- Regression Testing: Ensures that new changes or migrations do not introduce new defects or break existing functionality. Regression testing involves re-running previously passed test cases to verify that the migrated application continues to function as expected.

- Data Validation Testing: Confirms the integrity and consistency of the data after migration. This involves comparing data between the source and target systems to ensure all data has been correctly migrated and is consistent across both environments. Techniques include data reconciliation and data quality checks.

- Security Testing: Verifies the security aspects of the migrated application, including access controls, authentication, authorization, and data protection. This testing ensures that the application is protected against security threats and vulnerabilities.
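Data validation testing, as described above, often comes down to reconciling rows between source and target. The sketch below hashes each row and reports missing, unexpected, and mismatched records. It assumes rows are available as dictionaries with a unique key column; `row_digest`, `reconcile`, and the sample rows are hypothetical, and a real migration would typically stream rows from both databases in batches.

```python
import hashlib
import json


def row_digest(row):
    """Hash a row (a dict of column -> value) in a column-order-independent way."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key="id"):
    """Compare two row sets by key and report the differences."""
    source = {row[key]: row_digest(row) for row in source_rows}
    target = {row[key]: row_digest(row) for row in target_rows}
    shared = source.keys() & target.keys()
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Hypothetical sample data standing in for query results.
source_rows = [
    {"id": 1, "balance": "100.00"},
    {"id": 2, "balance": "250.50"},
    {"id": 3, "balance": "75.25"},
]
target_rows = [
    {"id": 1, "balance": "100.00"},
    {"id": 2, "balance": "250.00"},
]
print(reconcile(source_rows, target_rows))
```

An empty report across all three categories is the pass condition; any entry identifies specific keys to investigate.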

Testing Checklist for Stateful Application Migration

A comprehensive checklist ensures all critical aspects of the migrated application are thoroughly tested. This structured approach helps maintain consistency and completeness in the testing process.

- Pre-Migration Testing:

  - Environment Setup: Verify the readiness of both the source and target environments. Ensure that all necessary infrastructure components, such as servers, storage, and networking, are properly configured and accessible.

  - Dependency Verification: Confirm that all dependencies, including libraries, frameworks, and external services, are compatible with the target environment and the migrated application.

  - Data Backup and Validation: Create a complete backup of the source data and validate its integrity. Ensure the backup is restorable and that the data is consistent.

  - Test Data Preparation: Prepare representative test data that covers various scenarios and edge cases. This data should include both valid and invalid inputs to test different aspects of the application.

- Data Migration Testing: Verify the accuracy and completeness of data migration. Compare data in the source and target systems to ensure all data has been transferred correctly. This involves checking data types, formats, and relationships.

- Functional Testing: Perform functional tests to verify that all application features and functionalities work as expected in the target environment. This includes testing user interfaces, business logic, and data processing.

- Performance Testing: Evaluate the application’s performance under various load conditions to ensure it meets performance requirements. Measure response times, throughput, and resource utilization.

- Integration Testing: Test the integration of the migrated application with other systems and services to ensure seamless communication and data exchange.

- User Acceptance Testing (UAT): Conduct UAT with end-users to validate that the migrated application meets their requirements and expectations. This involves testing the application in a production-like environment.

- Security Testing: Perform security testing to ensure the application is protected against security threats and vulnerabilities.

- Disaster Recovery Testing: Test the disaster recovery plan to ensure the application can be restored in case of a failure. This involves simulating failure scenarios and verifying the recovery process.

- Monitoring and Logging: Implement comprehensive monitoring and logging to track the application’s performance and identify any issues. This includes monitoring metrics such as response times, error rates, and resource utilization.

Demonstrating the Importance of User Acceptance Testing

User Acceptance Testing (UAT) is a critical phase in the migration process, as it involves the end-users who will be using the application post-migration. It is the final validation step before the application is released to production.

UAT provides a crucial opportunity to gather feedback from end-users, ensuring that the migrated application meets their needs and expectations. This helps to identify and resolve any usability issues, functional gaps, or performance problems before the application is deployed to a wider audience.

UAT typically involves:

- User involvement: End-users are actively involved in testing the application in a production-like environment.

- Scenario-based testing: Testers execute test cases based on real-world scenarios and user workflows.

- Feedback collection: Users provide feedback on the application’s functionality, usability, and performance.

- Issue resolution: Any identified issues are addressed and resolved before the application is released to production.

For instance, a financial institution migrating its core banking system would involve bank tellers and loan officers in UAT. These users would test key functionalities like transaction processing, account management, and loan origination. Their feedback on the system’s usability and performance would be essential in ensuring a smooth transition and preventing disruption to customer service. This real-world validation helps in uncovering issues that might be missed during technical testing, ensuring that the migrated application truly meets the needs of its users.

The outcome of UAT directly influences both the success of the migration and users’ satisfaction with the new system.

Skillset and Expertise Requirements

Successful migration of stateful applications necessitates a multidisciplinary team possessing a diverse range of technical skills and specialized expertise. This section details the critical skillsets, the roles of specialized experts, and training resources essential for a successful migration strategy. The complexity of stateful applications demands a comprehensive approach to ensure data integrity, minimize downtime, and maintain business continuity.

Required Skillsets

The migration process requires proficiency across several technical domains. A team with the following skillsets is crucial:

- Cloud Computing and Platform Expertise: Deep understanding of the target cloud platform (e.g., AWS, Azure, GCP) including services related to compute, storage, networking, and databases. This includes knowledge of platform-specific APIs, configuration options, and best practices for resource optimization and cost management. For instance, understanding the nuances of Amazon Elastic Block Store (EBS) versus Azure Disk Storage is essential for data migration and performance tuning.

- Containerization and Orchestration: Proficiency in container technologies like Docker and orchestration platforms such as Kubernetes is vital. This includes the ability to containerize applications, manage containerized workloads, and implement scaling and automated deployment strategies. Experience with Kubernetes Operators and Helm charts can streamline deployment and configuration management.

- Networking and Security: Expertise in network configuration, security protocols, and firewall management is critical. This includes understanding of virtual networks, load balancing, VPNs, and security best practices such as implementing network segmentation and intrusion detection systems. Knowledge of cloud-specific networking services (e.g., AWS VPC, Azure Virtual Network) is also required.

- Database Administration and Data Migration: Extensive knowledge of database systems (e.g., relational, NoSQL) and data migration strategies is necessary. This involves experience with database schema design, data replication, backup and recovery procedures, and data transformation tools. Expertise in specific database migration tools (e.g., AWS Database Migration Service, Azure Database Migration Service) is highly beneficial.

- Programming and Scripting: Strong programming skills in languages like Python, Go, or scripting languages such as Bash are crucial for automation, scripting, and application modernization. These skills are used for developing migration scripts, automating configuration tasks, and integrating with APIs.

- Operating Systems and System Administration: In-depth knowledge of operating systems (e.g., Linux, Windows) and system administration tasks is necessary for managing servers, configuring resources, and troubleshooting issues. This includes experience with system monitoring, performance tuning, and security hardening.

- Monitoring and Observability: Ability to implement and manage monitoring and observability tools to track application performance, resource utilization, and potential issues during and after migration. This involves experience with tools like Prometheus, Grafana, and cloud-specific monitoring services.

Specialized Expertise Roles

Specific roles within the migration team require specialized expertise to address complex challenges.

- Cloud Architect: Responsible for designing the overall migration strategy, selecting the appropriate cloud services, and ensuring the solution aligns with business requirements and architectural principles. They must have a comprehensive understanding of cloud platforms and application architecture.

- Database Specialist: Focuses on database migration, data transformation, and ensuring data integrity throughout the process. This role requires deep knowledge of database systems, data migration tools, and data security best practices. They may also be involved in database schema design and optimization.

- Security Engineer: Ensures the security of the migrated application and data, implementing security controls and adhering to compliance requirements. This includes designing and implementing network security, access controls, and data encryption strategies.

- DevOps Engineer: Automates the deployment, configuration, and management of the application and infrastructure, utilizing tools for infrastructure-as-code, continuous integration, and continuous delivery. This role ensures the efficiency and reliability of the migration process.

- Application Developer/Modernization Specialist: Involved in modernizing the application code, addressing compatibility issues, and adapting the application to the new cloud environment. They need a strong understanding of the application’s codebase and dependencies.

Training Resources for Migration Teams

Migration teams can benefit from a variety of training resources to enhance their skills and knowledge.

- Cloud Provider Certifications: Certifications offered by cloud providers (e.g., AWS Certified Solutions Architect, Azure Solutions Architect Expert, Google Cloud Professional Cloud Architect) provide in-depth training and validation of skills related to cloud services and best practices. These certifications are valuable for demonstrating expertise and gaining a competitive edge.

- Online Courses and Tutorials: Platforms like Coursera, Udemy, and edX offer a wide range of online courses and tutorials covering various aspects of cloud computing, containerization, database administration, and other relevant technologies. These resources provide flexible learning options for individuals and teams.

- Vendor-Specific Training: Many vendors offer training courses and workshops on their specific products and services. These courses provide in-depth knowledge of vendor-specific features and functionalities.

- Documentation and Whitepapers: Cloud providers and technology vendors provide comprehensive documentation, whitepapers, and best practices guides. These resources offer detailed information on specific technologies, migration strategies, and security considerations.

- Hands-on Labs and Practice Environments: Hands-on labs and practice environments allow teams to gain practical experience and experiment with different technologies and migration scenarios. These environments provide a safe space to test and refine migration strategies.

- Industry Conferences and Workshops: Attending industry conferences and workshops provides opportunities to learn from experts, network with peers, and stay up to date on the latest trends and best practices in cloud migration and stateful applications.

Cost and Budget Considerations

Migrating stateful applications is a significant financial undertaking that requires careful planning and execution to remain cost-effective. Understanding the cost components, using robust estimation methods, and actively managing the budget are crucial for a successful and fiscally responsible migration. Failure to address these aspects can lead to budget overruns, project delays, and ultimately a compromised migration outcome.

Cost Components of a Stateful Application Migration

Several distinct cost elements contribute to the overall expense of migrating stateful applications. A comprehensive understanding of these components is essential for accurate budgeting.

- Assessment and Planning Costs: This involves the initial phase of evaluating the current stateful application, identifying migration goals, and developing a detailed migration plan. Costs include:

  - Resource allocation for application profiling and dependency analysis.

  - Expert consultation fees for migration strategy development.

  - Tools and software licensing for application assessment.

- Infrastructure Costs: The target infrastructure, whether cloud-based or on-premises, necessitates resource allocation and provisioning. These costs can be broken down into:

  - Hardware procurement or cloud instance provisioning.

  - Network configuration and setup.

  - Storage solutions for data replication and persistence.

  - Operating system and software licensing.

- Data Migration Costs: The process of transferring data from the source environment to the target environment can be a significant cost driver. These include:

  - Data transfer fees, particularly for cloud-based migrations.

  - Data transformation and cleansing costs.

  - Data validation and verification expenses.

  - Costs associated with data synchronization tools.

- Application Refactoring and Development Costs: Modifications to the application code may be necessary to ensure compatibility with the target environment. These costs involve:

  - Development team salaries or contractor fees.

  - Code refactoring and adaptation efforts.

  - Testing and quality assurance activities.

  - Integration with new APIs or services.

- Testing and Validation Costs: Rigorous testing is crucial to validate the migrated application’s functionality and performance. These expenses encompass:

  - Testing environment setup and maintenance.

  - Test case development and execution.

  - Performance testing and load testing tools.

  - Bug fixing and remediation costs.

- Downtime and Business Disruption Costs: Minimizing downtime is paramount. Costs associated with planned or unplanned downtime include:

  - Loss of revenue due to application unavailability.

  - Increased support costs during the migration and cutover phases.

  - Potential damage to brand reputation.

- Training and Skill Development Costs: Ensuring that personnel have the necessary skills to manage and maintain the migrated application is essential. Costs associated with this include:

  - Training programs for operations and development teams.

  - Consultancy fees for knowledge transfer.

  - Ongoing skill development and certification expenses.

- Ongoing Operational Costs: Post-migration, there will be recurring expenses associated with the migrated application. These include:

  - Infrastructure maintenance and support fees.

  - Monitoring and management tools and services.

  - Software licensing renewals.
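
One way to keep these components comparable is to record each line item with its category and to separate one-time migration costs from recurring operational costs. The following is a minimal sketch of that bookkeeping; the `CostItem` structure, the 12-month horizon, and every figure are illustrative assumptions rather than real pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostItem:
    category: str            # one of the cost components listed above
    description: str
    amount: float            # hypothetical figure; monthly if recurring
    recurring: bool = False  # True for ongoing (monthly) costs


def summarize(items, months=12):
    """Return (one_time_total, recurring_total, totals_per_category).

    Recurring items are multiplied by the planning horizon in months.
    """
    per_category = {}
    one_time = 0.0
    recurring = 0.0
    for item in items:
        cost = item.amount * months if item.recurring else item.amount
        per_category[item.category] = per_category.get(item.category, 0.0) + cost
        if item.recurring:
            recurring += cost
        else:
            one_time += cost
    return one_time, recurring, per_category


# Illustrative line items only.
ITEMS = [
    CostItem("Assessment and Planning", "Dependency analysis", 15_000),
    CostItem("Infrastructure", "Cloud instance provisioning", 4_000, recurring=True),
    CostItem("Data Migration", "Data transfer fees", 6_500),
    CostItem("Testing and Validation", "Load testing tools", 3_000),
    CostItem("Ongoing Operational", "Monitoring services", 800, recurring=True),
]

one_time, recurring, per_category = summarize(ITEMS, months=12)
print(f"One-time costs: {one_time:,.0f}")
print(f"Recurring costs over 12 months: {recurring:,.0f}")
for category, cost in sorted(per_category.items()):
    print(f"  {category}: {cost:,.0f}")
```

Keeping recurring costs separate makes it easier to compare the migration's one-time expense against the change in ongoing operating costs.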

Methods for Estimating Migration Costs

Accurate cost estimation is critical for securing budget approval and monitoring project expenses. Several methods can be employed to estimate migration costs.

- Bottom-Up Estimation: This method involves breaking down the migration project into individual tasks and estimating the cost of each task.

  - This approach requires a detailed understanding of the project scope.

  - It involves estimating the resources (time, personnel, tools) required for each task.

  - The costs of individual tasks are then aggregated to determine the total project cost.

- Top-Down Estimation: This method uses historical data and industry benchmarks to estimate the overall project cost.

  - It is often used when detailed project information is unavailable.

  - It can be based on similar migration projects or industry averages.

  - This approach may provide a rough estimate, but it can be refined as more information becomes available.

- Parametric Estimation: This method utilizes statistical relationships between project characteristics and cost.

  - It involves using historical data and statistical models to predict costs based on specific parameters.

  - Parameters may include the size of the application, the complexity of the data, and the number of dependencies.

  - The accuracy of this method depends on the availability of reliable historical data.

- Analogous Estimation: This method uses the actual cost of a similar project to estimate the cost of the current project.

  - It is based on the assumption that similar projects will have similar costs.

  - The estimator identifies a past project that is similar to the current project.

  - The cost of the past project is then adjusted to reflect differences in scope, complexity, and other factors.

- Expert Judgment: This method relies on the experience and expertise of individuals or groups with relevant knowledge.

  - It involves gathering input from subject matter experts.

  - Experts provide their estimates based on their experience and understanding of the project.

  - This method can be used in conjunction with other estimation methods to improve accuracy.

- Software Cost Estimation Models: Specialized software tools can be used to estimate migration costs.

  - These tools use algorithms and historical data to generate cost estimates.

  - Examples include COCOMO (Constructive Cost Model) and SLIM (Software Lifecycle Management).

  - These models can provide more precise estimations but require careful calibration and input data.
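
As an illustration of a model-based estimate, the sketch below implements the Basic COCOMO equations with Boehm's published coefficients. COCOMO estimates development effort from code size, so applying it to a migration assumes the size input counts only the code to be written or refactored. The `labor_cost` helper, the 40 KLOC figure, and the monthly rate are hypothetical, and uncalibrated results should be treated as rough orders of magnitude.

```python
# Basic COCOMO (Boehm, 1981):
#   effort   = a * KLOC ** b    (person-months)
#   schedule = c * effort ** d  (months)
COCOMO_MODES = {
    "organic": (2.4, 1.05, 2.5, 0.38),
    "semi-detached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}


def basic_cocomo(kloc, mode="organic"):
    """Return (effort in person-months, schedule in months)."""
    a, b, c, d = COCOMO_MODES[mode]
    effort = a * kloc ** b
    schedule = c * effort ** d
    return effort, schedule


def labor_cost(effort_pm, monthly_rate):
    """Hypothetical helper: convert person-months into a labor cost."""
    return effort_pm * monthly_rate


# Assumed inputs: 40 KLOC of refactored code, 12,000 per person-month.
effort, schedule = basic_cocomo(40, mode="semi-detached")
print(f"Effort: {effort:.1f} person-months")
print(f"Schedule: {schedule:.1f} months")
print(f"Labor cost: {labor_cost(effort, 12_000):,.0f}")
```

The result covers labor only. Infrastructure, data transfer, and downtime costs would still need to be added from a bottom-up tally.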

Strategies for Managing Migration Budgets Effectively

Effective budget management is crucial to ensure that the migration project stays within budget and delivers the expected value. Several strategies can be employed.

- Detailed Budget Planning: Develop a comprehensive budget that includes all anticipated costs, as detailed above.

  - Allocate funds for each cost component, including contingency funds to address unforeseen expenses.

  - Obtain detailed quotes from vendors and service providers.

  - Create a realistic schedule and resource allocation plan.

- Regular Budget Monitoring and Tracking: Continuously monitor and track actual spending against the budget.

  - Implement a system for tracking all project expenses.

  - Generate regular budget reports to identify any variances.

  - Use project management software to monitor budget and resource allocation.

- Variance Analysis: Analyze any variances between the planned budget and actual spending.

  - Investigate the causes of any variances.

  - Take corrective actions to bring the project back on track.

  - Document all budget changes and justifications.

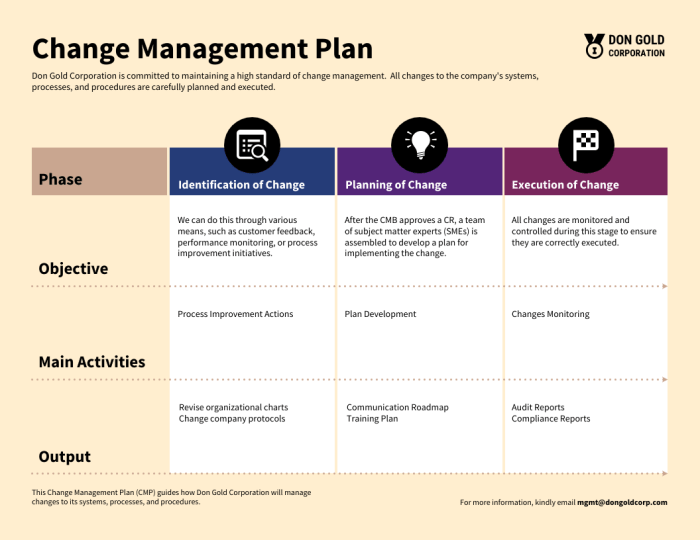

- Change Management: Establish a formal change management process to control project scope and cost.

  - Implement a process for reviewing and approving all change requests.

  - Assess the impact of each change request on the budget and schedule.

  - Document all approved changes and their associated costs.

- Risk Management: Anticipate and mitigate risks that could impact the budget.

  - Identify specific risks, such as technical challenges or resource constraints.

  - Develop risk mitigation plans to minimize the impact of those risks.

  - Maintain a risk register to track and manage project risks.

- Negotiation and Vendor Management: Negotiate favorable terms with vendors and manage vendor relationships effectively.

  - Obtain multiple quotes from different vendors to compare pricing.

  - Negotiate favorable contract terms, such as payment schedules and service level agreements.

  - Monitor vendor performance and address any issues promptly.

- Phased Approach: Consider a phased migration approach to spread costs over time and mitigate risk.

  - Migrate the application in stages, starting with a pilot project or a subset of the application.

  - Learn from each phase and refine the migration strategy.

  - This approach can help to reduce the overall cost and risk of the migration.

- Cost Optimization: Implement cost optimization strategies to reduce project expenses.

  - Explore cost-effective solutions, such as open-source software and cloud-based services.

  - Optimize resource utilization to minimize costs.

  - Identify opportunities to streamline processes and eliminate unnecessary expenses.

- Contingency Planning: Include a contingency fund in the budget to address unexpected expenses.

  - Allocate a percentage of the budget for unforeseen costs.

  - Use the contingency fund to cover unexpected expenses, such as technical challenges or delays.

- Regular Reporting: Communicate budget status to stakeholders on a regular schedule.

  - Provide regular updates on budget performance, including variances and corrective actions.

  - Ensure that stakeholders are informed of any potential budget overruns.
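
The variance analysis and contingency tracking described above reduce to simple arithmetic. The sketch below shows one way to compute them. The function names, the 10% flagging threshold, the 15% contingency rate, and all figures are assumptions chosen for illustration.

```python
def variance_report(planned, actual, threshold=0.10):
    """Compare actual spending to the plan for each category.

    Returns a list of tuples:
    (category, planned, actual, variance, variance_pct, flagged),
    where flagged is True if the overrun exceeds `threshold`.
    """
    rows = []
    for category, budget in planned.items():
        spent = actual.get(category, 0.0)
        variance = spent - budget
        pct = variance / budget if budget else 0.0
        rows.append((category, budget, spent, variance, pct, pct > threshold))
    return rows


def contingency_remaining(planned, actual, contingency_rate=0.15):
    """Contingency fund left after absorbing overruns in every category."""
    fund = sum(planned.values()) * contingency_rate
    overruns = sum(
        max(actual.get(category, 0.0) - budget, 0.0)
        for category, budget in planned.items()
    )
    return fund - overruns


# Hypothetical budget and spending to date.
planned = {"Infrastructure": 50_000, "Data Migration": 20_000, "Testing": 15_000}
actual = {"Infrastructure": 48_000, "Data Migration": 26_000, "Testing": 15_500}

rows = variance_report(planned, actual)
for category, budget, spent, variance, pct, flagged in rows:
    marker = "  <-- investigate" if flagged else ""
    print(f"{category}: planned {budget:,}, actual {spent:,}, "
          f"variance {variance:+,} ({pct:+.1%}){marker}")
print(f"Contingency remaining: {contingency_remaining(planned, actual):,.0f}")
```

In this sketch, underspending in one category does not offset overruns elsewhere. That conservative choice keeps the contingency figure from hiding a problem area.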

Monitoring and Performance Tuning

The successful migration of stateful applications necessitates a robust monitoring and performance tuning strategy. Post-migration, constant vigilance is crucial to ensure optimal application performance, identify and address potential bottlenecks, and maintain the integrity of the stateful data. Proactive monitoring allows for early detection of issues, preventing performance degradation and ensuring a smooth user experience. Performance tuning is the iterative process of optimizing application components to improve resource utilization, reduce latency, and enhance overall system efficiency.

Importance of Monitoring the Migrated Application

Effective monitoring provides insights into the application’s behavior, resource consumption, and overall health. This information is vital for identifying performance issues, validating the migration’s success, and proactively addressing potential problems.

- Real-time Performance Tracking: Monitoring tools provide real-time data on key performance indicators (KPIs) such as CPU utilization, memory usage, disk I/O, network latency, and response times. This enables immediate identification of performance degradation.

- Alerting and Notification: Configure alerts based on predefined thresholds for critical metrics. Automated alerts notify administrators of potential problems, enabling rapid response and minimizing downtime. For example, if the CPU utilization of a database server exceeds 90% for more than 5 minutes, an alert should be triggered.

- Trend Analysis: Monitoring tools collect historical data, allowing for trend analysis. This analysis helps in understanding performance patterns, predicting future resource needs, and planning capacity upgrades. For example, tracking the growth of database size over time indicates when additional storage will be needed.

- Problem Diagnosis: Monitoring data assists in diagnosing the root cause of performance issues. By correlating different metrics, administrators can pinpoint the specific components or processes contributing to bottlenecks. For instance, high network latency coupled with increased error rates could indicate a network configuration problem.

- Validation of Migration: Post-migration, monitoring serves to validate that the application performs as expected in the new environment. Comparing performance metrics before and after migration provides evidence of the migration’s success or highlights areas requiring further optimization.
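
The alerting rule mentioned above (CPU utilization above 90% for more than 5 minutes) can be sketched as a small state machine that fires only when the breach is sustained. The class name and sample data are hypothetical. In practice, a rule like this would normally be configured in the monitoring tool itself rather than written by hand.

```python
class SustainedThresholdAlert:
    """Fire when a metric stays above a threshold for longer than a duration."""

    def __init__(self, threshold=90.0, duration_s=300.0):
        self.threshold = threshold
        self.duration_s = duration_s
        self._breach_start = None  # timestamp of the first sample above threshold

    def observe(self, timestamp, value):
        """Record one sample; return True if the alert condition is met."""
        if value <= self.threshold:
            # Any sample at or below the threshold resets the breach window.
            self._breach_start = None
            return False
        if self._breach_start is None:
            self._breach_start = timestamp
        return timestamp - self._breach_start > self.duration_s


# Hypothetical CPU samples taken every 60 seconds: (seconds, percent).
samples = [
    (0, 85), (60, 95), (120, 96), (180, 93), (240, 97),
    (300, 94), (360, 95), (420, 92), (480, 80),
]

alert = SustainedThresholdAlert(threshold=90.0, duration_s=300.0)
fired = [t for t, cpu in samples if alert.observe(t, cpu)]
print("Alert fired at:", fired)
```

Requiring a sustained breach avoids paging administrators for short spikes, at the cost of a delay equal to the chosen duration.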

Performance Tuning Techniques for Stateful Applications