The adoption of serverless computing has surged, promising agility and cost efficiency. However, this paradigm shift introduces a critical consideration: vendor lock-in. This phenomenon, where reliance on a specific provider’s proprietary services restricts portability and flexibility, presents significant challenges that demand careful analysis. Understanding these challenges is crucial for organizations aiming to harness the full potential of serverless architectures while maintaining control and adaptability.

This exploration will dissect the multifaceted aspects of vendor lock-in within the serverless context. We will examine technical hurdles, cost implications, operational complexities, security concerns, and the impact on innovation. Furthermore, we will delve into mitigation strategies, alternative technologies, and real-world examples to provide a comprehensive understanding of this critical topic. The goal is to equip readers with the knowledge necessary to make informed decisions and navigate the evolving serverless landscape strategically.

Understanding Vendor Lock-in in the Serverless Context

Vendor lock-in in serverless computing represents a significant challenge, impacting the portability and flexibility of applications. This phenomenon arises when a business becomes overly reliant on a specific vendor’s services, making it difficult and costly to switch to another provider or utilize a hybrid approach. Understanding the nuances of vendor lock-in is crucial for making informed decisions when adopting serverless architectures.

Fundamental Concept and Implications of Vendor Lock-in

Vendor lock-in, in the context of serverless computing, occurs when the design and implementation of an application become so tightly coupled with a specific vendor’s serverless platform that migrating to another platform becomes prohibitively complex, expensive, or even impossible. This dependence stems from several factors, including the use of proprietary services, specific platform features, and the intricacies of the vendor’s ecosystem.

The implications of vendor lock-in are multifaceted.

One primary concern is a loss of flexibility. Businesses are constrained in their ability to choose the best-suited services for their needs, potentially missing out on more cost-effective or feature-rich alternatives offered by other providers. Another critical implication is the potential for increased costs. Vendors can leverage lock-in to raise prices or impose less favorable terms, knowing that switching costs are high.

Moreover, lock-in can stifle innovation, as businesses are less likely to experiment with new technologies or approaches if they are tied to a single vendor’s offerings. Furthermore, vendor lock-in can affect disaster recovery and business continuity plans, as the reliance on a single provider introduces a single point of failure.

Examples of Serverless Platforms and Contribution to Lock-in

Several prominent serverless platforms contribute to the potential for vendor lock-in through their unique features, services, and architectural approaches. These platforms, while offering compelling benefits, also present challenges related to portability and interoperability.

AWS Lambda, a leading serverless compute service, exemplifies this. While Lambda supports multiple programming languages, its integration with other AWS services, such as API Gateway, DynamoDB, and S3, creates a strong ecosystem lock-in.

- Proprietary Services and APIs: The use of AWS-specific APIs and services, such as the AWS SDK, facilitates rapid development but complicates migration to other platforms. For example, code written to interact with DynamoDB requires modification to work with alternative NoSQL databases.

- Platform-Specific Features: AWS-specific event sources for Lambda, such as S3 event notifications and CloudWatch Events (now Amazon EventBridge) rules, are difficult to replicate on other platforms. Reproducing the same behavior on Azure Functions or Google Cloud Functions might require significant code rewriting and architectural adjustments.

- Cost Optimization: AWS offers cost-optimization options such as Reserved Instances and Spot Instances for EC2, but Lambda follows a different, pay-per-use cost model. That model can yield significant savings, yet the vendor’s pricing structure can still reinforce lock-in when the cost of switching is prohibitive.

Azure Functions, another popular serverless platform, presents a similar scenario. Integration with other Azure services, such as Azure Cosmos DB, Azure Event Hubs, and Azure Blob Storage, can lead to vendor lock-in.

- Integration with Azure Services: Similar to AWS, the tight integration of Azure Functions with Azure services can create a strong dependency. Code written to leverage Azure Cosmos DB, for instance, would need significant changes to work with alternative database services.

- Developer Tools and Ecosystem: Azure provides a comprehensive suite of developer tools and an extensive ecosystem, including the Azure portal, Visual Studio Code extensions, and Azure DevOps. While these tools enhance the developer experience, they can also contribute to lock-in by making it more difficult to switch to different development environments or CI/CD pipelines.

Google Cloud Functions, the serverless compute service from Google Cloud, follows a similar pattern. The deep integration with Google Cloud services, such as Cloud Storage, Cloud Pub/Sub, and BigQuery, contributes to vendor lock-in.

- Google Cloud Services: The use of Google Cloud-specific services, like Cloud Storage, creates a strong dependence on the Google Cloud ecosystem. Migrating functions that heavily rely on Cloud Storage to AWS or Azure would necessitate significant code modifications.

- Event Triggers: Google Cloud Functions supports various event triggers, including those from Cloud Storage, Cloud Pub/Sub, and Cloud Firestore. Replicating these event triggers on other platforms might require custom solutions or alternative architectures, making migration more complex.

Impact on Portability and Flexibility

Vendor lock-in significantly impacts portability and flexibility in serverless solutions. The ability to move an application between different cloud providers or to adopt a multi-cloud strategy is essential for mitigating risks, optimizing costs, and avoiding vendor dependence.

- Portability Challenges: Vendor lock-in hinders portability by making it difficult to migrate serverless functions and associated infrastructure to a different platform. This can involve rewriting code, reconfiguring services, and adapting to different APIs and event triggers. For example, migrating a Lambda function that uses AWS API Gateway and DynamoDB to Azure Functions would require recreating the API Gateway configuration in Azure API Management and rewriting the DynamoDB interactions to work with Azure Cosmos DB.

- Flexibility Limitations: Vendor lock-in limits flexibility by restricting the choice of cloud providers and services. Businesses may be forced to use services that are not the best fit for their needs or miss out on innovative offerings from other vendors. For instance, if a company is locked into AWS Lambda, it may be unable to easily leverage the machine learning capabilities offered by Google Cloud’s Vertex AI (formerly AI Platform).

- Cost Implications: The costs associated with vendor lock-in extend beyond the immediate service charges. Switching platforms involves significant development, testing, and operational costs. These costs include the time and resources required to rewrite code, reconfigure infrastructure, and retrain personnel.

- Architectural Constraints: Serverless architectures designed with a specific vendor in mind often make assumptions about the underlying infrastructure and service availability. This can limit the flexibility to adapt to changing business requirements or take advantage of new technologies. For example, an application tightly coupled with AWS’s security features might be difficult to adapt to a different security model offered by another vendor.

To mitigate the risks of vendor lock-in, businesses should consider several strategies:

- Embrace Abstraction: Use abstraction layers to decouple the application code from the underlying vendor-specific services. This can be achieved by using libraries and frameworks that provide a common interface for interacting with different cloud providers.

- Prioritize Open Standards: Favor open standards and technologies that are widely supported across different platforms. This includes using standard protocols, data formats, and APIs.

- Adopt Multi-Cloud Strategies: Design applications to run across multiple cloud providers. This can involve using a combination of services from different vendors or deploying the same application on multiple platforms.

- Containerization: Utilize containerization technologies, such as Docker, to package serverless functions and their dependencies. This can improve portability and make it easier to deploy applications across different platforms.

- Careful Service Selection: When choosing serverless services, carefully evaluate the level of vendor lock-in and the potential impact on portability and flexibility. Consider the use of services that are available across multiple cloud providers or that offer open APIs.
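The abstraction strategy can be made concrete with a small ports-and-adapters layout. The following is a minimal Python sketch, not a prescribed design: `ObjectStore`, `InMemoryStore`, `S3Store`, and `save_invoice` are hypothetical names, and the S3 adapter assumes the caller injects a boto3 S3 client, whose `put_object` and `get_object` calls it uses. An adapter for Google Cloud Storage or Azure Blob Storage would implement the same two methods.

```python
from typing import Protocol


class ObjectStore(Protocol):
    """Hypothetical vendor-neutral interface that application code depends on."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Local adapter for tests; also shows the interface is sufficient on its own."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


class S3Store:
    """Adapter over a boto3 S3 client; the only class that knows about S3."""

    def __init__(self, client, bucket: str) -> None:
        self._client = client  # assumed: boto3.client("s3"), injected by the caller
        self._bucket = bucket

    def put(self, key: str, data: bytes) -> None:
        self._client.put_object(Bucket=self._bucket, Key=key, Body=data)

    def get(self, key: str) -> bytes:
        response = self._client.get_object(Bucket=self._bucket, Key=key)
        return response["Body"].read()


def save_invoice(store: ObjectStore, invoice_id: str, pdf: bytes) -> str:
    """Business logic sees only the ObjectStore interface, never a vendor SDK."""
    key = f"invoices/{invoice_id}.pdf"
    store.put(key, pdf)
    return key
```

With this layout, a migration means writing one new adapter instead of touching every call site. The trade-off is that the interface exposes only the features all targets share, so vendor-specific capabilities either stay out of reach or leak back in through extra methods.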

Technical Challenges of Vendor Lock-in

The technical challenges of vendor lock-in in serverless computing stem from the proprietary nature of many serverless platforms. Migrating serverless applications across different vendors involves significant effort due to architectural incompatibilities, service discrepancies, and the need to adapt code and deployment pipelines. This section will delve into these hurdles, comparing architectural approaches, highlighting compatibility issues, and outlining the complexities involved in adapting applications for different serverless environments.

Migration Hurdles

Migrating serverless applications across vendors presents several technical challenges. These challenges include differences in underlying infrastructure, API implementations, and the specific services offered by each provider. The degree of difficulty generally grows with the application’s complexity and the extent to which it relies on vendor-specific features.

- Code Adaptation: Serverless functions written for one platform often rely on vendor-specific SDKs and APIs. Rewriting or adapting code to work with a different vendor’s SDK can be a time-consuming process. For example, an application using AWS Lambda might use the AWS SDK for accessing S3 buckets, while migrating to Google Cloud Functions would necessitate rewriting those parts of the code to use the Google Cloud Storage API and the Google Cloud SDK.

This process involves not just changing the API calls but also understanding the nuances of each vendor’s SDK, which may involve different error handling, rate limiting, and data formats.

- Configuration Management: Each vendor has its own configuration format for defining serverless resources, such as functions, APIs, and databases, so migrating infrastructure-as-code (IaC) definitions often requires significant changes. For instance, AWS CloudFormation templates must be rewritten for the target provider’s native IaC tooling, such as Google Cloud Deployment Manager, or for a multi-cloud tool such as Terraform. Each has its own syntax and resource definitions, and even in Terraform the resource types are provider-specific, so a resource describing an AWS Lambda function does not carry over to Google Cloud unchanged.

The resources, their properties, and how they are linked together need to be thoroughly re-evaluated and potentially re-architected to function correctly on the new platform.

- Deployment Pipelines: Serverless platforms employ distinct deployment mechanisms, so the deployment pipeline must be adapted to the new vendor’s tools and processes. For example, an application deployed using AWS CodePipeline would need to move to Google Cloud Build or another equivalent. This affects build steps, testing procedures, and the overall deployment strategy, as well as the Continuous Integration and Continuous Delivery (CI/CD) scripts that automate deployment.

- Monitoring and Logging: The way applications are monitored and logs are collected also varies. Adapting to a new vendor means learning new monitoring dashboards and logging systems. This includes understanding how to interpret the new platform’s metrics, configuring alerts, and troubleshooting issues. This may also involve changes to how logs are formatted, stored, and accessed.
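The configuration and pipeline differences above are easy to underestimate. The hedged Python sketch below renders one hypothetical, vendor-neutral `FunctionSpec` into argument lists for `aws lambda create-function` and `gcloud functions deploy`. Even for a trivial function, runtime identifiers, handler syntax, and unit conventions differ. The flags follow the AWS CLI and gcloud references as we understand them and should be checked against current documentation; the role ARN, region, source layout (code in `main.py`), and helper names are placeholders.

```python
from dataclasses import dataclass


@dataclass
class FunctionSpec:
    """Hypothetical vendor-neutral description of a single function."""
    name: str
    python_version: str  # e.g. "3.12"
    entry_point: str     # function name inside main.py, e.g. "handler"
    memory_mb: int
    timeout_s: int


def aws_create_command(spec: FunctionSpec, role_arn: str, zip_path: str) -> list[str]:
    # AWS spells runtimes like "python3.12" and wants "module.function" as the handler.
    return [
        "aws", "lambda", "create-function",
        "--function-name", spec.name,
        "--runtime", f"python{spec.python_version}",
        "--handler", f"main.{spec.entry_point}",
        "--role", role_arn,
        "--zip-file", f"fileb://{zip_path}",
        "--memory-size", str(spec.memory_mb),
        "--timeout", str(spec.timeout_s),
    ]


def gcloud_deploy_command(spec: FunctionSpec, region: str, source_dir: str) -> list[str]:
    # Google drops the dot ("python312"), takes a bare entry point, and uses unit suffixes.
    return [
        "gcloud", "functions", "deploy", spec.name,
        "--gen2",
        f"--runtime=python{spec.python_version.replace('.', '')}",
        f"--entry-point={spec.entry_point}",
        f"--region={region}",
        f"--source={source_dir}",
        "--trigger-http",
        f"--memory={spec.memory_mb}MB",
        f"--timeout={spec.timeout_s}s",
    ]
```

A pipeline could pass either list to `subprocess.run`. Keeping the spec neutral confines per-vendor knowledge to one small function per platform, although real pipelines also differ in authentication, artifact storage, and rollout steps that this sketch omits.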

Architectural and Service Compatibility Issues

Serverless architectures and service offerings differ significantly among vendors. These differences create compatibility issues when moving applications and affect how those applications are designed, implemented, and maintained.

- Function Runtime Environments: Different vendors support different programming languages and runtime versions for serverless functions. While most support common languages like Python, Node.js, and Java, specific versions and the availability of particular libraries may vary. For example, AWS Lambda might offer the latest Python version, while Google Cloud Functions may be slightly behind. This can lead to compatibility issues if an application depends on a specific language version or a library that is not available on the target platform.

- API Gateway Services: API gateway services, which manage API endpoints and routing, have varying features and capabilities. Moving an API from one vendor to another often requires reconfiguring the API gateway, including setting up authentication, authorization, and rate limiting. The specifics of configuring these features differ across vendors. For example, AWS API Gateway may offer a different set of authentication mechanisms compared to Google Cloud API Gateway, which could lead to changes in the application’s security model.

- Database Integration: Serverless applications often interact with databases. The integration with databases, such as NoSQL databases or relational databases, varies across vendors. Migrating an application that uses a vendor-specific database service to a different vendor requires migrating the database itself and adapting the code to interact with the new database. For example, migrating from AWS DynamoDB to Google Cloud Firestore necessitates significant code changes to accommodate differences in data models, query languages, and API calls.

- Event-Driven Architectures: Event-driven architectures are fundamental to serverless applications, and the services used for event processing, such as message queues and event buses, vary across vendors. Moving an application from Amazon SQS to Google Cloud Pub/Sub, for example, means adapting from a queue to a topic-and-subscription model, so the event processing pipeline typically has to be re-evaluated and redesigned for the new platform.
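A common way to contain event-trigger differences is to translate each platform’s payload into one internal event type at the edge, so only thin entry points are vendor-specific. A minimal Python sketch: `ObjectCreated`, `process`, and the handler names are hypothetical, and only a small subset of the real payloads is read (`Records[].s3.bucket.name` and `Records[].s3.object.key` from S3 notifications; `bucket` and `name` from a Cloud Storage CloudEvent’s data).

```python
from dataclasses import dataclass
from urllib.parse import unquote_plus


@dataclass(frozen=True)
class ObjectCreated:
    """Hypothetical vendor-neutral event consumed by the business logic."""
    bucket: str
    key: str


def from_s3_notification(event: dict) -> list[ObjectCreated]:
    # S3 notifications can batch several records; object keys arrive URL-encoded.
    return [
        ObjectCreated(
            bucket=record["s3"]["bucket"]["name"],
            key=unquote_plus(record["s3"]["object"]["key"]),
        )
        for record in event.get("Records", [])
    ]


def from_gcs_cloudevent_data(data: dict) -> list[ObjectCreated]:
    # A Cloud Storage CloudEvent describes one object per event.
    return [ObjectCreated(bucket=data["bucket"], key=data["name"])]


def process(events: list[ObjectCreated]) -> list[str]:
    # Shared logic: identical no matter which cloud delivered the event.
    return [f"{event.bucket}/{event.key}" for event in events]


# Thin, vendor-specific entry points following each platform's calling convention.
def lambda_handler(event, context):
    return process(from_s3_notification(event))


def gcs_handler(cloud_event):
    # Assumes a CloudEvent object (as passed by functions-framework) with a .data dict.
    return process(from_gcs_cloudevent_data(cloud_event.data))
```

A migration then touches only the translation function and the entry point. Delivery semantics such as ordering, retries, and acknowledgement still differ between services and need separate review.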

Adapting Code, Configurations, and Deployment

Adapting code, configurations, and deployment processes for different serverless environments involves a multi-faceted approach. It includes rewriting code, reconfiguring resources, and modifying deployment pipelines to align with the new vendor’s specifications.

- Code Rewriting and Refactoring: Code written using vendor-specific SDKs or APIs requires significant rewriting. This involves translating API calls, adapting data structures, and refactoring code to work with the new vendor’s services. For instance, an application using AWS S3 needs to be adapted to use Google Cloud Storage.

- Configuration Transformation: Configuration files, such as infrastructure-as-code templates, must be transformed to match the new vendor’s syntax and resource definitions. This involves understanding the equivalent resources and their properties in the new environment. The transformation can range from simple syntax changes to a complete redesign of the infrastructure.

- Deployment Pipeline Modification: The deployment pipeline must be adapted to work with the new vendor’s deployment tools and processes. This includes modifying build steps, testing procedures, and deployment strategies. The goal is to ensure that the application is deployed consistently and reliably in the new environment.

- Testing and Validation: Rigorous testing and validation are essential to ensure the application functions correctly in the new environment. This includes unit tests, integration tests, and end-to-end tests to verify the functionality of the application. The testing strategy must be adapted to the new environment.
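Adapting data structures is especially concrete for DynamoDB, whose low-level API wraps every value in a type tag such as `{"S": ...}` or `{"N": ...}`. A minimal Python sketch that flattens such items into plain Python values before they are written to another store; `from_dynamodb` and `item_to_document` are hypothetical helpers covering only common tags. In practice, boto3’s `TypeDeserializer` (in `boto3.dynamodb.types`) performs this conversion; the sketch shows what is involved.

```python
from decimal import Decimal


def from_dynamodb(value: dict):
    """Convert one DynamoDB attribute value ({"S": ...}, {"N": ...}, ...) to plain Python."""
    [(type_tag, payload)] = value.items()  # each attribute value has exactly one tag
    if type_tag in ("S", "BOOL"):
        return payload
    if type_tag == "N":
        # Numbers travel as strings; Decimal avoids float rounding.
        return Decimal(payload)
    if type_tag == "NULL":
        return None
    if type_tag == "L":
        return [from_dynamodb(item) for item in payload]
    if type_tag == "M":
        return {name: from_dynamodb(item) for name, item in payload.items()}
    if type_tag == "SS":
        return set(payload)
    if type_tag == "NS":
        return {Decimal(number) for number in payload}
    raise ValueError(f"unsupported attribute type: {type_tag}")


def item_to_document(item: dict) -> dict:
    """Flatten a whole DynamoDB item into a plain dict."""
    return {name: from_dynamodb(attribute) for name, attribute in item.items()}
```

The output is only an intermediate form. The target database’s client may not accept every Python type (for example, `Decimal` or `set` may need converting), and the target’s data model and query patterns still have to be redesigned separately.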

Cost and Pricing Implications

Vendor lock-in within the serverless ecosystem significantly impacts the financial viability of applications over their lifecycle. This impact extends beyond the initial deployment phase, creating long-term cost vulnerabilities and potentially hindering the ability to optimize spending. Understanding these implications is crucial for making informed decisions about serverless architecture and vendor selection.

Long-Term Cost Effects

Vendor lock-in often results in increased costs over time due to several factors. The lack of portability inherent in a locked-in environment limits the options for cost optimization and competitive pricing.

- Price Increases: Serverless providers, aware of their customers’ dependency, may implement price increases. This can be particularly detrimental if a significant portion of an application’s infrastructure is tied to a single vendor’s services. The ability to negotiate or switch providers to mitigate these increases is severely curtailed.

- Lack of Negotiation Leverage: Without the option to easily migrate to a different platform, businesses have limited leverage in negotiating pricing with their chosen vendor. This is especially true for services with complex pricing models where subtle changes can significantly impact overall costs.

- Vendor-Specific Feature Dependency: Reliance on vendor-specific features can further exacerbate costs. If a crucial feature is only available from one vendor, the application is forced to use it, regardless of its cost or performance. The dependency on proprietary services may lead to inflated costs and reduced efficiency.

- Hidden Costs and Complex Pricing: Serverless pricing models can be complex, involving charges for execution time, memory usage, requests, and data transfer. Vendor lock-in can make it challenging to accurately predict and control these costs. The complexity of pricing models makes it easier for vendors to implement cost increases that are not immediately apparent.
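The pricing dimensions above combine into a simple estimate. The Python sketch below uses a formula of the form requests × per-request price + GB-seconds × per-GB-second price, which is how AWS Lambda bills function compute. The rates are parameters; the example values ($0.20 per million requests, $0.0000166667 per GB-second) are illustrative list prices that vary by region, architecture, and over time. The sketch ignores free tiers, data transfer, and charges from other services such as API Gateway.

```python
def monthly_function_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Estimate request plus compute charges for a pay-per-use function (no free tier)."""
    # GB-seconds = invocations x duration in seconds x memory in GB.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost
```

For 10 million invocations averaging 200 ms at 512 MB, the example rates give 1,000,000 GB-seconds (about $16.67) plus $2.00 in request charges, roughly $18.67 a month. Rerunning the estimate with another provider’s published rates is a cheap way to see how much pricing leverage lock-in is costing.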

Escalating Cloud Cost Scenario

Consider a hypothetical e-commerce platform built on a leading serverless vendor’s services. The platform utilizes the vendor’s API Gateway, Lambda functions, DynamoDB database, and CloudFront CDN. Initially, the costs are competitive, and the platform experiences rapid growth. After two years, the vendor announces a 15% increase in API Gateway pricing, citing increased infrastructure costs.

The e-commerce platform’s architecture is heavily dependent on the API Gateway for routing and authentication. Migrating to a different provider would involve significant refactoring of the application, requiring a substantial investment in engineering resources and potentially causing downtime. The platform is effectively locked in.

The increased pricing, coupled with other cost increases for storage and compute, significantly reduces the platform’s profitability. Its reliance on vendor-specific features adds further costs, and it lacks the flexibility to take advantage of new pricing models or service offerings from competitors. The business must either absorb the increases at the expense of its profit margins or pass them on to its customers.

This scenario illustrates how vendor lock-in can lead to unexpected and escalating cloud costs. The initial benefits of serverless computing are eroded over time, and the platform’s financial stability is compromised. The platform’s reliance on a single vendor limits its ability to adapt to changing market conditions and optimize its infrastructure costs.
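The scenario’s trade-off can be made explicit with a break-even estimate: how many months of the higher price it takes before a migration would have paid for itself. A small Python sketch; `break_even_months` is a hypothetical helper, and every figure in the example below is invented for illustration.

```python
import math


def break_even_months(
    current_monthly: float,
    increase_pct: float,
    alternative_monthly: float,
    migration_cost: float,
) -> float:
    """Months until a one-time migration pays for itself versus absorbing a price rise.

    Returns math.inf when the alternative is not cheaper than the new price.
    """
    new_monthly = current_monthly * (1 + increase_pct / 100)
    monthly_saving = new_monthly - alternative_monthly
    if monthly_saving <= 0:
        return math.inf
    return migration_cost / monthly_saving
```

With a hypothetical $20,000 monthly bill, a 15% increase, a $21,000 alternative, and $90,000 of migration effort, the saving is $2,000 a month and break-even arrives after about 45 months, which helps explain why locked-in teams often absorb increases. Lowering switching costs through the mitigation strategies discussed earlier shortens that horizon.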

Operational and Management Complexities

The operational and management aspects of serverless applications become significantly more complex when vendor lock-in is present. This complexity arises from the need to manage applications across potentially disparate platforms, each with its own set of tools, interfaces, and operational procedures. This heterogeneity necessitates specialized expertise and can lead to inefficiencies in resource allocation, debugging, and incident response.

Challenges of Managing Serverless Applications Across Multiple Vendor Platforms

Managing serverless applications across multiple vendor platforms presents a multifaceted challenge. Each vendor offers a unique set of services, APIs, and management consoles, creating a fragmented operational landscape.

- Deployment Pipelines: Creating and maintaining consistent deployment pipelines across different vendors can be complex. Each platform may require specific tooling and configurations for code deployment, function updates, and resource provisioning. For example, deploying a function to AWS Lambda might involve using the AWS CLI or a service like AWS CodePipeline, while deploying to Google Cloud Functions requires using the gcloud CLI or Google Cloud Build.

This disparity necessitates adapting deployment strategies for each platform, increasing the risk of errors and inconsistencies.

- Monitoring and Observability: Monitoring application performance and health across different vendors often requires integrating multiple monitoring solutions. Gathering metrics, logs, and traces from different platforms can be challenging, making it difficult to gain a unified view of application behavior. Integrating third-party observability tools might alleviate this issue, but it adds complexity and potential vendor dependencies.

- Security Management: Managing security across multiple platforms introduces additional layers of complexity. Each vendor has its own security models, identity and access management (IAM) systems, and security best practices. Ensuring consistent security configurations, implementing robust access controls, and managing security updates across all platforms require careful planning and execution.

- Resource Management: Managing the underlying resources that serverless functions utilize, such as memory, compute time, and storage, can become complex. Each vendor offers different resource allocation options and pricing models, which can lead to challenges in optimizing resource utilization and controlling costs. Moreover, the differences in resource limits and scaling behavior across platforms require careful consideration during application design and deployment.

- Vendor-Specific APIs and SDKs: Utilizing vendor-specific APIs and SDKs increases the risk of vendor lock-in. Developers often integrate these APIs to interact with vendor-specific services, such as databases, object storage, and message queues. Switching to a different vendor would then necessitate rewriting code that uses these APIs.
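One way to reduce the monitoring fragmentation described above is to emit logs in a schema the team controls, so any collector can parse them the same way. A minimal Python sketch using only the standard library; the field names and the `cloud` tag are a hypothetical convention. Both CloudWatch Logs and Cloud Logging accept JSON lines written to stdout (Cloud Logging treats a `severity` field specially), but how each platform indexes the remaining fields differs and should be checked.

```python
import json
import logging
import sys
from typing import Optional


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON line using a field schema the team controls."""

    def __init__(self, static_fields: Optional[dict] = None) -> None:
        super().__init__()
        self.static_fields = dict(static_fields or {})

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "severity": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            **self.static_fields,
        }
        # Per-call context passed as logger.info(..., extra={"fields": {...}}).
        entry.update(getattr(record, "fields", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry, default=str)


def make_logger(name: str, cloud: str) -> logging.Logger:
    """Logger that writes JSON lines to stdout, where serverless platforms collect logs."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter({"cloud": cloud}))
    logger = logging.getLogger(name)
    logger.handlers = [handler]  # replace existing handlers to avoid duplicate lines
    logger.propagate = False     # keep a runtime-installed root handler from re-emitting
    logger.setLevel(logging.INFO)
    return logger
```

For example, `make_logger("orders", cloud="aws").info("order placed", extra={"fields": {"order_id": "o-1"}})` prints one JSON line that a third-party observability tool can ingest identically from any provider. This narrows, but does not remove, the dependence on each platform’s log pipeline.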

Comparative Analysis of Monitoring, Logging, and Debugging Tools

Different serverless vendors provide distinct monitoring, logging, and debugging tools, each with its own strengths and weaknesses. A comparative analysis highlights the differences in functionality, usability, and integration capabilities.

| Feature | AWS Lambda | Google Cloud Functions | Azure Functions |
|---|---|---|---|
| Monitoring | CloudWatch: Comprehensive monitoring, logging, and metrics. Provides detailed performance metrics, error tracking, and custom dashboards. | Cloud Monitoring: Integrated with Cloud Logging. Offers performance metrics, error reporting, and custom dashboards. | Azure Monitor: Provides comprehensive monitoring, logging, and alerting capabilities. Supports metrics, logs, and Application Insights. |
| Logging | CloudWatch Logs: Centralized logging for function invocations, errors, and custom logs. Supports log filtering, aggregation, and analysis. | Cloud Logging: Centralized logging for function invocations, errors, and custom logs. Supports log filtering, aggregation, and analysis. Integrated with Cloud Monitoring. | Azure Monitor Logs: Centralized logging for function invocations, errors, and custom logs. Supports log filtering, aggregation, and analysis. Integrated with Application Insights. |
| Debugging | X-Ray: Distributed tracing for function invocations. Allows developers to trace requests across services and identify performance bottlenecks. Debugging is otherwise done mainly through log analysis in CloudWatch. | Cloud Trace (formerly Stackdriver Trace): Distributed tracing. Cloud Debugger, which offered breakpoint-style inspection of running code, was deprecated and shut down in 2023, so debugging relies mainly on logs, Error Reporting, and traces. | Application Insights: Provides end-to-end debugging, performance monitoring, and error tracking. Supports live debugging and code profiling. |
| Integration | Integrates with other AWS services (e.g., S3, DynamoDB, API Gateway). Supports integration with third-party tools via APIs and SDKs. | Integrates with other Google Cloud services (e.g., Cloud Storage, Cloud Datastore, Cloud Pub/Sub). Supports integration with third-party tools via APIs and SDKs. | Integrates with other Azure services (e.g., Blob Storage, Cosmos DB, API Management). Supports integration with third-party tools via APIs and SDKs. |

The choice of monitoring, logging, and debugging tools significantly impacts operational efficiency. AWS CloudWatch offers comprehensive monitoring but can be complex to navigate. Google Cloud Monitoring and Cloud Logging provide strong integration within the Google Cloud ecosystem. Azure Monitor and Application Insights provide robust debugging and performance monitoring capabilities.

Complicating Operational Tasks with Vendor Lock-in

Vendor lock-in significantly complicates operational tasks such as security patching, updates, and incident response. The reliance on a specific vendor’s platform can restrict an organization’s ability to respond quickly to security threats or implement necessary updates.

- Security Patching: The frequency and responsiveness of security patching vary between vendors. If a critical vulnerability is discovered in a vendor’s serverless platform, organizations are at the mercy of the vendor’s patching schedule. In some cases, the patching process might require downtime or careful orchestration to avoid disrupting the application. This dependency can leave applications vulnerable for an extended period, increasing the risk of exploitation.

- Updates and Feature Rollouts: Vendor-specific updates and feature rollouts can also create operational challenges. Each vendor releases new features and updates at its own pace. Adopting these updates may require code modifications, testing, and adjustments to deployment pipelines. If an organization is heavily reliant on a vendor’s platform, it might be forced to adopt updates quickly, even if they introduce compatibility issues or require significant rework.

- Incident Response: When incidents occur, vendor lock-in can complicate incident response. The investigation and resolution of issues often rely on vendor-specific tools, documentation, and support channels. If a critical issue arises, organizations might be dependent on the vendor’s support team for assistance. Delays in receiving support or the lack of adequate tooling can prolong the incident response process, leading to downtime and potential data loss.

- Compliance and Regulatory Requirements: Meeting compliance and regulatory requirements can be more challenging when locked into a specific vendor. Different vendors offer varying levels of compliance certifications (e.g., SOC 2, HIPAA). Switching to a vendor with a different compliance posture might require significant changes to the application architecture and operational procedures.

Security and Compliance Considerations

Vendor lock-in significantly impacts the security posture and compliance adherence of serverless applications. Choosing a specific vendor often introduces security risks tied to data residency, proprietary security features, and the ability to comply with evolving industry regulations. Understanding these implications is crucial for making informed decisions regarding serverless architecture and vendor selection.

Security Risks Associated with Vendor Lock-in

Vendor lock-in can expose serverless applications to several security risks, particularly concerning data residency and the limitations imposed by vendor-specific security implementations. These risks can compromise the confidentiality, integrity, and availability of data and applications.

Data residency, the physical location where data is stored, is a critical security and compliance consideration. When locked into a vendor, data residency options may be limited, potentially forcing data to reside in jurisdictions with inadequate data protection laws or in locations that conflict with internal security policies. This can lead to increased exposure to data breaches and non-compliance with regulations.

Vendor-specific security features can also create lock-in, reducing the flexibility to adopt best-of-breed security solutions or to respond effectively to emerging threats. While these features may initially seem advantageous, they can hinder the ability to migrate to a different vendor or to implement a defense-in-depth security strategy that leverages multiple security providers.

- Data Residency Challenges: Serverless functions might execute in regions dictated by the vendor, potentially violating data residency requirements. For example, a company operating in the EU might need to keep certain data within EU borders to satisfy GDPR obligations or its own policies. If a vendor’s serverless platform offers no EU regions for the services the application needs, meeting that requirement on the platform may be impractical.

- Limited Security Tooling Interoperability: Vendor-specific security features may not integrate seamlessly with other security tools, creating security silos. For instance, a vendor’s Web Application Firewall (WAF) might not be compatible with a specific Security Information and Event Management (SIEM) system, hindering threat detection and incident response capabilities.

- Dependency on Vendor Security Updates: Security vulnerabilities in vendor-specific features can become a single point of failure. If a vendor is slow to patch a vulnerability, the entire application remains exposed. The Log4Shell vulnerability (CVE-2021-44228) in the Apache Log4j library is a real-world example: for affected components inside managed services, customers had to rely on their providers to ship patches.
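Residency requirements are easier to enforce when they are checked in the deployment pipeline rather than remembered. A minimal Python sketch of such a gate: the allow-list is a hypothetical policy (the region identifiers themselves are real AWS, Google Cloud, and Azure names), `residency_violations` is a hypothetical helper, and passing the check says nothing about where a provider’s global or control-plane services process data.

```python
# Hypothetical policy: EU-only regions, spelled the way each provider names them.
EU_REGIONS = {
    "aws": {"eu-west-1", "eu-central-1", "eu-north-1"},
    "gcp": {"europe-west1", "europe-west4", "europe-north1"},
    "azure": {"westeurope", "northeurope", "germanywestcentral"},
}


def residency_violations(deployments: list[tuple[str, str, str]]) -> list[str]:
    """Return the names of (name, provider, region) deployments outside the allow-list."""
    violations = []
    for name, provider, region in deployments:
        # Unknown providers have no allowed regions, so they fail closed.
        if region not in EU_REGIONS.get(provider, set()):
            violations.append(name)
    return violations
```

A pipeline step could fail the build whenever the returned list is non-empty. Because each provider spells regions differently, the policy itself is a small piece of vendor-specific configuration that has to be maintained for every cloud in use.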

Vendor-Specific Security Features and Lock-in

The adoption of vendor-specific security features often exacerbates vendor lock-in, limiting choices and potentially creating security vulnerabilities. These features, while providing immediate security benefits, can hinder the ability to migrate to alternative platforms or to integrate with broader security ecosystems.

Vendors often offer security features that are tightly integrated with their serverless platforms, such as identity and access management (IAM) systems, encryption services, and vulnerability scanning tools. While these features can simplify security management, they can also create dependencies that make it difficult to switch vendors.

- Proprietary IAM Systems: Vendors often have their own IAM systems, which can make it difficult to integrate with existing identity providers or to adopt a consistent IAM strategy across multiple cloud providers. For instance, if a company uses a specific vendor’s IAM solution for its serverless applications, migrating to another vendor would require rewriting access control policies and potentially retraining users.

- Vendor-Specific Encryption: Vendor encryption services generally use standard algorithms such as AES, but the keys often live in a proprietary key management service from which they cannot be exported. Data encrypted this way can be difficult to decrypt outside the vendor’s platform, which can restrict forensic analysis or the migration of data to another environment.

- Limited Security Auditing: Vendor-specific security features may have limited audit capabilities, making it difficult to demonstrate compliance with industry regulations or to track security events. This can hinder incident response and increase the risk of non-compliance.
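One way to soften the proprietary IAM problem is to keep access rules in a neutral form owned by the application team and generate each provider's policy from it. The sketch below assumes a hypothetical two-verb rule model and renders it into an AWS IAM policy document. The `dynamodb:` action names and the policy `Version` string are real; the logical table names, the example account ID, and the ARNs are placeholders.

```python
import json

# Hypothetical vendor-neutral rules: (verb, logical resource name).
RULES = [("read", "orders"), ("write", "orders"), ("read", "customers")]

# Provider-specific translation tables for one target (AWS DynamoDB).
# Action names are real DynamoDB IAM actions; the ARNs are placeholders.
AWS_ACTIONS = {
    "read": ["dynamodb:GetItem", "dynamodb:Query"],
    "write": ["dynamodb:PutItem", "dynamodb:UpdateItem"],
}
AWS_RESOURCES = {
    "orders": "arn:aws:dynamodb:eu-west-1:123456789012:table/orders",
    "customers": "arn:aws:dynamodb:eu-west-1:123456789012:table/customers",
}


def render_aws_policy(rules: list[tuple[str, str]]) -> dict:
    """Translate neutral rules into an AWS IAM policy document, one statement per rule."""
    statements = [
        {
            "Effect": "Allow",
            "Action": AWS_ACTIONS[verb],
            "Resource": AWS_RESOURCES[resource],
        }
        for verb, resource in rules
    ]
    return {"Version": "2012-10-17", "Statement": statements}


print(json.dumps(render_aws_policy(RULES), indent=2))
```

Moving to another provider would still require a second renderer for that provider's access model, but the rewrite is confined to one translation layer instead of being spread across every policy.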

Impact on Compliance with Industry Regulations

Vendor lock-in can significantly impact compliance with industry regulations such as HIPAA (Health Insurance Portability and Accountability Act) in the US healthcare sector and GDPR (General Data Protection Regulation) in the EU. Non-compliance can result in significant financial penalties and reputational damage. Compliance often depends on data residency, specific security controls, and audit trails, and vendor lock-in can make these requirements difficult to meet, particularly if the vendor’s platform does not offer the necessary features or has not been certified or attested against the required standards.

- HIPAA Compliance: In the healthcare industry, HIPAA requires strict controls over protected health information (PHI). Vendor lock-in can complicate compliance by limiting data residency options, restricting access to audit logs, and potentially failing to meet the stringent security requirements mandated by HIPAA. For example, if a serverless platform does not provide granular access control or audit logging, it may be difficult to demonstrate compliance with HIPAA’s audit requirements.

- GDPR Compliance: GDPR imposes strict requirements on the processing of personal data of individuals in the EU. Vendor lock-in can hinder GDPR compliance by limiting data residency options, making it harder to respond to data subject access requests, and potentially failing to meet the GDPR’s security requirements. A company locked into a vendor that lacks data export or deletion features might struggle to honor the right to data portability or the right to erasure (the “right to be forgotten”).

- PCI DSS Compliance: For businesses handling credit card information, PCI DSS (Payment Card Industry Data Security Standard) compliance is essential. Vendor lock-in can make PCI DSS compliance difficult if the vendor’s platform does not offer the necessary security controls or is not certified to PCI DSS standards. For example, if a serverless platform does not provide robust network segmentation or encryption, it may be challenging to meet PCI DSS requirements.
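Audit requirements under all three regimes are easier to satisfy, and to carry through a migration, when audit records are produced in a documented format you control rather than only inside a vendor's console. This is a minimal sketch, assuming JSON lines written to standard output (which mainstream FaaS platforms capture into their logging services) and an illustrative field set; it is not a certified HIPAA or PCI DSS logging design.

```python
import json
import sys
from datetime import datetime, timezone


def audit_event(actor: str, action: str, resource: str, outcome: str) -> str:
    """Build one audit record as a single JSON line with a UTC timestamp."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "resource": resource,
        "outcome": outcome,
    }
    return json.dumps(record, sort_keys=True)


def emit(line: str, stream=None) -> None:
    """Write the record to stdout by default; FaaS platforms typically capture stdout as logs."""
    (stream or sys.stdout).write(line + "\n")


# Illustrative event: identifiers are hypothetical.
emit(audit_event("user-42", "read", "patient-record/981", "allowed"))
```

Because each record is self-describing JSON, it can be forwarded to whichever SIEM the organization uses, independent of the hosting provider.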

Impact on Innovation and Development

Vendor lock-in significantly impacts innovation and development in serverless computing, hindering the adoption of cutting-edge technologies and restricting developers’ choices. This section explores how platform dependencies can stifle progress and limit the flexibility needed to leverage the full potential of serverless architectures.

Stifling Innovation and Limiting Technology Adoption

Vendor lock-in directly impedes the rate at which new technologies and features are adopted. Serverless platforms, while offering convenience, often prioritize their own proprietary services and features, which can create a siloed environment that discourages the integration of external innovations. This results in slower uptake of advancements that are not aligned with the vendor’s roadmap.

- Proprietary Services: Platforms frequently introduce unique services or extensions to existing open-source technologies, creating dependencies. For example, a platform might offer a specialized database service tightly integrated with its serverless functions, making it difficult to migrate to a different database or platform.

- Feature Lag: The implementation of new features or support for emerging technologies might be delayed if the vendor doesn’t prioritize them. This can force developers to wait for updates or find workarounds, slowing down development cycles and hindering the adoption of potentially beneficial tools.

- Limited Community Support: Vendors may not always prioritize community contributions or open-source integrations. This can lead to a lack of support for third-party libraries, tools, and frameworks, limiting the ecosystem’s growth and the ability to leverage community-driven innovation.

Comparing Innovation and Feature Releases Across Platforms

The rate of innovation and feature releases varies considerably across different serverless platforms. Some platforms, with larger development teams and greater financial resources, can introduce new features more rapidly. However, the speed of innovation doesn’t always correlate with the overall value or suitability for specific use cases.

Consider the release cycles of different serverless platforms. Platform A, a well-established provider, might release a new compute feature every quarter. Platform B, a smaller, more agile provider, might focus on specialized tools or integrations. While Platform A offers broader functionality, Platform B’s focus on a niche area can drive innovation in a specific domain.

- Platform A (Large Vendor): Offers a wide range of services and frequent feature releases, often driven by broad market demands. However, the rate of innovation in specific areas might be slower due to the complexity of managing a large ecosystem.

- Platform B (Specialized Vendor): Focuses on a narrower set of services, allowing for faster innovation and more targeted features. This can result in more tailored solutions for specific use cases but might limit the availability of broader services.

Restricting Developer Choices

Vendor lock-in limits developers’ ability to select the best tools and services for their needs, often forcing them to compromise on performance, cost, or functionality. This restriction can lead to suboptimal solutions and prevent the adoption of best-of-breed technologies.

Developers might be compelled to use a vendor’s database service, even if it’s not the optimal choice for their application’s performance or cost requirements, simply because it’s tightly integrated with the serverless platform. This can lead to situations where developers are locked into a specific technology stack, hindering their ability to adapt to changing requirements or take advantage of emerging solutions.

- Service Availability: Vendors may not offer all the services developers need, forcing them to choose less suitable alternatives or build custom solutions. For instance, a vendor might lack a specific machine learning service, requiring developers to either use a less effective alternative or build their own integration, increasing development time and complexity.

- Cost Considerations: The pricing models of vendor-specific services can be inflexible, potentially leading to higher costs compared to open-source or multi-cloud alternatives. Developers might be forced to pay more for a service simply because it’s part of the vendor’s ecosystem.

- Integration Limitations: Vendor lock-in can hinder the integration of third-party tools and services. If a platform doesn’t provide easy integration with popular CI/CD tools, monitoring systems, or security services, developers might face additional complexity and effort in setting up their development and deployment pipelines.

Strategies for Mitigating Vendor Lock-in

Adopting serverless technologies offers numerous advantages, but it’s crucial to proactively address the potential for vendor lock-in. Implementing strategic mitigation measures from the outset can significantly reduce risks, promoting flexibility, portability, and long-term cost-effectiveness. This proactive approach allows organizations to maintain control over their infrastructure and adapt to evolving business needs.

Portability and Abstraction

Achieving portability in serverless environments hinges on abstracting away vendor-specific implementations. This involves designing applications to interact with cloud services through standardized interfaces and using infrastructure-as-code (IaC) tools that support multiple cloud providers. The goal is to minimize dependencies on proprietary features and create a more flexible and transferable architecture.

- Standardized Interfaces: Utilizing open standards and APIs, such as HTTP REST APIs or message queues adhering to standards like AMQP, facilitates interaction with various cloud services without being tightly coupled to a single vendor’s offering. For example, instead of relying on a vendor-specific message queue, one could use a queue that supports standard protocols, allowing for easier migration.

- Infrastructure-as-Code (IaC): Employing IaC tools like Terraform or Pulumi enables the declarative definition of infrastructure across multiple cloud providers. This approach allows for the automated provisioning and management of resources, promoting consistency and simplifying the process of migrating workloads between different platforms. The portability lies in the tool and workflow rather than in the resource definitions themselves: in Terraform, a function deployment uses provider-specific resource types, such as `aws_lambda_function` on AWS or `google_cloudfunctions_function` on Google Cloud, so moving a function still means rewriting those blocks, albeit within a familiar workflow.

- Abstraction Layers: Developing custom abstraction layers can provide a consistent interface to underlying cloud services. This layer translates generic function calls into vendor-specific implementations, allowing the core application logic to remain vendor-agnostic. For example, a custom library could handle database interactions, allowing the application to switch between different database services without code changes in the application logic.
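The abstraction-layer idea can be sketched in Python with a `typing.Protocol`: application code depends on a small interface, and each database sits behind an adapter that implements it. This is a minimal illustration under stated assumptions: `DocumentStore`, `InMemoryStore`, and `record_order` are hypothetical names, and a production adapter wrapping a real database client is omitted.

```python
from typing import Optional, Protocol


class DocumentStore(Protocol):
    """Vendor-neutral interface that the application code depends on."""

    def get(self, key: str) -> Optional[dict]: ...

    def put(self, key: str, doc: dict) -> None: ...


class InMemoryStore:
    """Adapter for tests and local development."""

    def __init__(self) -> None:
        self._data: dict[str, dict] = {}

    def get(self, key: str) -> Optional[dict]:
        return self._data.get(key)

    def put(self, key: str, doc: dict) -> None:
        self._data[key] = dict(doc)  # store a copy so callers cannot mutate it


# A production adapter (for example, one wrapping a managed NoSQL client)
# would implement the same two methods; it is omitted from this sketch.


def record_order(store: DocumentStore, order_id: str, total: float) -> dict:
    """Application logic: unaware of which database sits behind `store`."""
    doc = {"id": order_id, "total": total, "status": "received"}
    store.put(order_id, doc)
    return doc


store = InMemoryStore()
record_order(store, "o-1", 19.99)
print(store.get("o-1"))
```

Switching databases then means writing one new adapter with `get` and `put`; `record_order` and the rest of the business logic stay untouched.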

Open-Source Tools and Frameworks

Leveraging open-source tools and frameworks is a key strategy for reducing vendor lock-in. These resources provide portability, promote community support, and often offer vendor-neutral solutions. Selecting and integrating these tools early in the development process can significantly enhance flexibility.

- Serverless Framework: The Serverless Framework is a popular open-source tool for building and deploying serverless applications. It simplifies the deployment process and offers abstractions for various serverless services, allowing developers to manage their infrastructure with a single configuration file. Through provider plugins it has supported AWS, Azure, Google Cloud, and other platforms, although non-AWS support has varied between major versions, so it is worth confirming coverage for the version you adopt.

- Kubeless: Kubeless is a serverless framework built on Kubernetes, providing a vendor-agnostic platform for deploying and managing serverless functions on any Kubernetes cluster. The project is no longer actively maintained, however, so new deployments generally turn to maintained Kubernetes-based options such as Knative or OpenFaaS.

- Apache OpenWhisk: Apache OpenWhisk is an open-source, cloud-native, distributed event-driven programming platform. It provides a platform for executing functions in response to events, supporting multiple programming languages and integrating with various cloud services.

- faas-cli: faas-cli is the command-line interface for OpenFaaS, an open-source functions platform that runs on Kubernetes or on a single host through faasd. It builds functions from language templates into container images and deploys them to any OpenFaaS installation, regardless of which cloud or data center hosts it.
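faas-cli is the command-line tool of OpenFaaS, so a function deployed with it is ordinary code written in a template-defined shape. The sketch below assumes OpenFaaS's classic `python3` template, whose handler is a `handle(req)` function that receives the request body as a string; other templates, such as `python3-http`, use different signatures. The greeting logic is purely illustrative.

```python
import json


def handle(req):
    """Entry point in the shape used by OpenFaaS's classic python3 template.

    Args:
        req (str): raw request body passed in by the OpenFaaS watchdog.
    Returns:
        str: the response body.
    """
    try:
        payload = json.loads(req) if req else {}
    except json.JSONDecodeError:
        return json.dumps({"error": "invalid JSON"})
    if not isinstance(payload, dict):
        return json.dumps({"error": "expected a JSON object"})
    name = payload.get("name", "world")
    return json.dumps({"greeting": f"Hello, {name}"})
```

Because the handler imports no cloud SDK, the same code can be built into an image and deployed with `faas-cli up` to any OpenFaaS installation, whichever provider hosts the cluster.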

Multi-Cloud and Hybrid Cloud Strategies

Employing multi-cloud or hybrid cloud strategies provides an effective means of mitigating vendor lock-in. By distributing workloads across multiple cloud providers or combining on-premises infrastructure with cloud services, organizations can reduce their dependence on a single vendor. This approach also enhances resilience and availability.

- Workload Distribution: Distributing different components of an application across multiple cloud providers or on-premises environments mitigates the impact of vendor-specific outages or limitations. For example, a company might deploy its front-end applications on one cloud provider and its database on another.

- Data Replication: Replicating data across multiple cloud providers ensures data availability and provides a backup in case of a vendor-specific failure. This also facilitates easier migration of data between different platforms.

- Hybrid Cloud Architecture: Integrating on-premises infrastructure with cloud services provides flexibility and control over data and applications. This allows organizations to keep sensitive data on-premises while leveraging the scalability and cost-effectiveness of the cloud for other workloads.
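Workload distribution often comes down to a client that can fall back to a second provider when the first one fails. The following is a minimal sketch, assuming each provider is wrapped in a plain callable; `primary` and `secondary` are hypothetical stand-ins for real clients, and catching every `Exception` is a simplification that real code should narrow to each client's own error types.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every configured provider raised an error."""


def call_with_failover(providers: Sequence[tuple[str, Callable[[dict], dict]]],
                       request: dict) -> tuple[str, dict]:
    """Try each provider in order; return (provider_name, response) from the first success."""
    errors = []
    for name, invoke in providers:
        try:
            return name, invoke(request)
        except Exception as exc:  # simplification: narrow this in real code
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stand-ins for clients of two different clouds.
def primary(request: dict) -> dict:
    raise ConnectionError("region outage")


def secondary(request: dict) -> dict:
    return {"status": "ok", "echo": request["id"]}


used, response = call_with_failover(
    [("cloud-a", primary), ("cloud-b", secondary)], {"id": 7}
)
print(used, response)
```

Failover only helps if the secondary provider already has the function deployed and the data it needs, which is why workload distribution is usually paired with the data replication described above.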

Governance and Standardization

Establishing clear governance policies and standards is essential for mitigating vendor lock-in. This involves defining coding standards, architectural guidelines, and procurement processes that prioritize portability and vendor independence. These policies help ensure consistency and reduce the risk of inadvertently becoming locked into a specific vendor.

- Coding Standards: Establishing coding standards that prioritize portability and vendor independence is crucial. These standards should encourage the use of open standards and APIs, minimize the use of vendor-specific features, and promote modular design.

- Architectural Guidelines: Defining architectural guidelines that favor vendor-agnostic designs and discourage tight coupling with specific cloud services promotes flexibility. These guidelines should emphasize the use of abstraction layers, IaC tools, and standardized interfaces.

- Procurement Processes: Implementing procurement processes that consider vendor lock-in risks is essential. This involves evaluating the portability and interoperability of different cloud services, negotiating flexible contracts, and considering the long-term implications of vendor choices.
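Coding standards and architectural guidelines hold up better when they are checked automatically. The sketch below assumes a Python code base and a hypothetical convention that vendor SDKs may be imported only from an `adapters/` package. It uses the standard-library `ast` module to find imports of real SDK packages (`boto3`, `botocore`, `google.cloud`, `azure`) and could run as a CI step.

```python
import ast

# Real vendor SDK package prefixes; which ones to flag is a policy choice.
VENDOR_PREFIXES = ("boto3", "botocore", "google.cloud", "azure")


def vendor_imports(source: str) -> list[str]:
    """Return the vendor SDK modules imported by a piece of Python source."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            names = [node.module]  # relative imports without a module are skipped
        else:
            continue
        found.extend(n for n in names
                     if any(n == p or n.startswith(p + ".") for p in VENDOR_PREFIXES))
    return sorted(set(found))


def check_file(path: str, source: str, adapter_dir: str = "adapters/") -> list[str]:
    """Flag vendor imports anywhere except the designated (hypothetical) adapter package."""
    if path.startswith(adapter_dir):
        return []
    return [f"{path}: imports {mod}" for mod in vendor_imports(source)]


print(check_file("orders/service.py",
                 "import boto3\nfrom google.cloud import storage\nimport json\n"))
```

A check like this does not prevent lock-in by itself, but it keeps vendor-specific code concentrated where the architectural guidelines say it belongs.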

Mitigation Strategies: Pros and Cons

The following table summarizes different mitigation strategies, outlining their pros and cons.

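| Mitigation Strategy | Pros | Cons |
|---|---|---|
| Portability and Abstraction | Reduces dependence on proprietary features; simplifies moving workloads between providers | Abstraction code must be built and maintained; can hide useful provider-specific features |
| Open-Source Tools and Frameworks | Vendor-neutral; community support; runs on many platforms | Self-hosted components must be operated, patched, and scaled by your own team |
| Multi-Cloud and Hybrid Cloud Strategies | Less dependence on any single vendor; improved resilience and availability | Higher operational complexity; data transfer costs between environments |
| Governance and Standardization | Consistent, portable practices across teams; lock-in risk weighed during procurement | Process overhead; may slow adoption of useful managed services |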

Alternative Approaches and Technologies

Addressing vendor lock-in necessitates exploring alternative technologies and approaches that promote portability and flexibility within serverless architectures. These strategies aim to reduce dependence on specific cloud providers, thereby mitigating the risks associated with vendor lock-in.

Containerization for Portability

Containerization, particularly through technologies like Docker and orchestration platforms like Kubernetes, offers a significant advantage in achieving greater portability in serverless environments.

- Containerization Explained: Containerization encapsulates an application and its dependencies into a self-contained unit, known as a container. This ensures that the application runs consistently across different environments, irrespective of the underlying infrastructure.

- Docker’s Role: Docker is a leading platform for creating, deploying, and managing containers. Developers can package serverless functions, along with their required libraries and runtime environments, into Docker images.

- Kubernetes Orchestration: Kubernetes facilitates the automated deployment, scaling, and management of containerized applications. Combined with a serverless layer such as Knative or OpenFaaS, it can run serverless functions packaged as containers across various cloud providers or on-premises infrastructure.

- Portability Advantages: The use of containers enables functions to be easily moved between different cloud providers or even to on-premises environments. This significantly reduces vendor lock-in, as the underlying infrastructure becomes less of a constraint.

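- Example: A serverless function originally developed for AWS Lambda can be containerized using Docker. Provided its logic is separated from Lambda-specific event formats and runtime APIs, the same image can then run on Google Cloud Run, on Azure Functions (which supports custom container images on certain hosting plans), or on a Kubernetes cluster, promoting interoperability.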
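Containerizing a Lambda function does not by itself make it portable, because Lambda delivers events in its own format and expects its own runtime interface. A common approach, sketched below under those assumptions, keeps the business logic in a plain function and adds a thin entry point per platform: an AWS Lambda handler for an API Gateway proxy event, and a standard WSGI application for any container runtime. `process` and its order-total logic are hypothetical.

```python
import json


def process(payload: dict) -> dict:
    """Core business logic: plain data in, plain data out, no provider types."""
    items = payload.get("items", [])
    return {"count": len(items), "total": sum(i.get("price", 0) for i in items)}


def lambda_handler(event, context):
    """Adapter for AWS Lambda behind an API Gateway proxy integration.

    Assumes a plain-text (not base64-encoded) JSON body.
    """
    body = json.loads(event.get("body") or "{}")
    return {"statusCode": 200, "body": json.dumps(process(body))}


def wsgi_app(environ, start_response):
    """Adapter for any WSGI server, e.g. in a container on Kubernetes or Cloud Run."""
    length = int(environ.get("CONTENT_LENGTH") or 0)
    raw = environ["wsgi.input"].read(length) if length else b"{}"
    result = json.dumps(process(json.loads(raw))).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(result)))])
    return [result]
```

For a quick local check, the standard library's `wsgiref.simple_server.make_server("", 8080, wsgi_app).serve_forever()` can serve the WSGI adapter; a production image would more typically run it under a WSGI server such as Gunicorn.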

Comparison of FaaS with Other Serverless Computing Models

Understanding the nuances of Function-as-a-Service (FaaS) in comparison to other serverless computing models is crucial for making informed architectural decisions and avoiding vendor lock-in.

- FaaS Defined: FaaS is a serverless computing model where developers deploy individual functions that are triggered by events. The cloud provider manages the infrastructure, scaling, and execution of these functions.

- Backend-as-a-Service (BaaS): BaaS provides pre-built backend services, such as databases, authentication, and storage, that developers can integrate into their applications. While BaaS can accelerate development, it can also lead to vendor lock-in if the application heavily relies on proprietary services.

- Serverless Databases: Serverless databases, such as Amazon DynamoDB or Google Cloud Firestore, are managed databases that scale automatically. They provide a fully managed database solution without the need for server management. Reliance on these databases can contribute to vendor lock-in.

- Comparison Table: A table summarizing the key differences:

| Feature | FaaS | BaaS | Serverless Databases |
|---|---|---|---|
| Primary Focus | Execution of individual functions | Backend services (authentication, storage, etc.) | Managed database services |
| Vendor Lock-in Potential | Medium (depending on event triggers and function dependencies) | High (due to reliance on proprietary services) | High (due to specific database features and APIs) |
| Portability | Can be improved with containerization | Limited | Limited |

- Vendor Lock-in Mitigation: To mitigate vendor lock-in, developers can adopt a multi-cloud strategy, using open-source tools and standard APIs whenever possible.

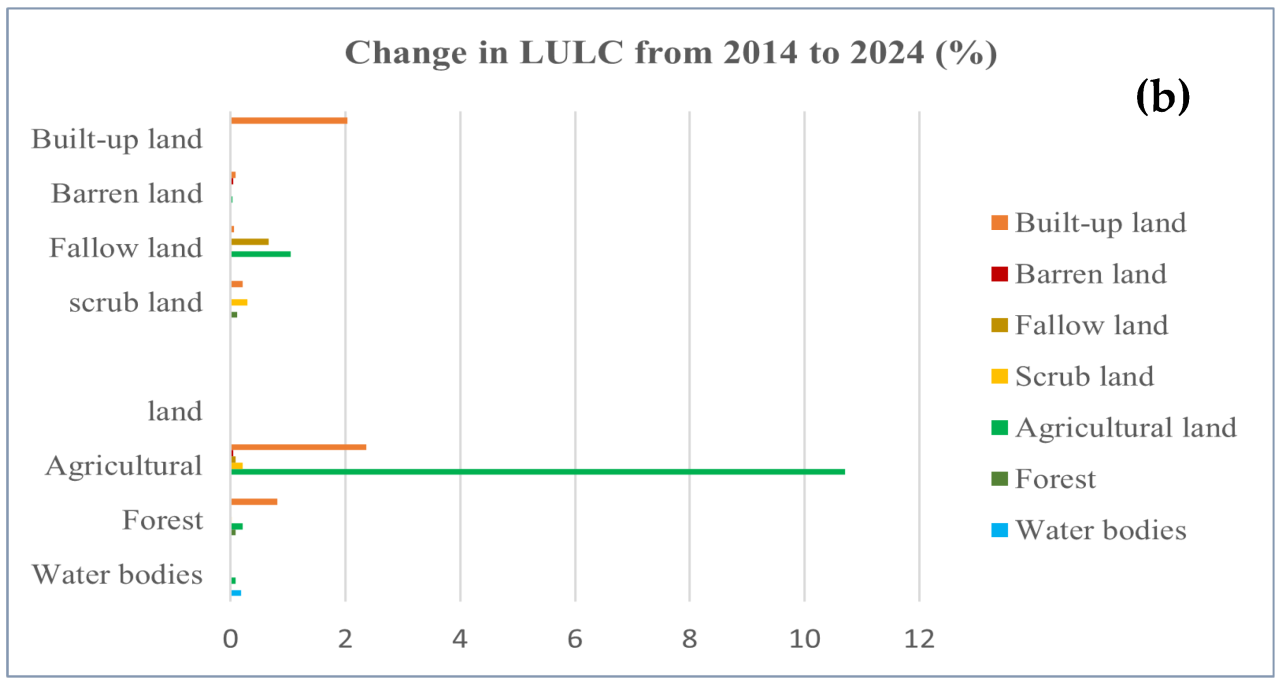

Architecture of a Vendor-Agnostic Serverless Application

The architecture of a vendor-agnostic serverless application utilizes open-source tools and standard interfaces to minimize dependency on any single cloud provider.

Image Description: A diagram depicting a vendor-agnostic serverless application architecture. At the center, there is a ‘User’ interacting with an ‘API Gateway’ (e.g., Kong, Tyk), which serves as the entry point for all requests. The API Gateway routes requests to a ‘Function Router’ (e.g., Apache OpenWhisk, Knative). The Function Router then invokes ‘Serverless Functions’ (e.g., deployed via Docker containers) that are hosted on a ‘Container Orchestration Platform’ (e.g., Kubernetes).

These functions interact with a ‘Message Queue’ (e.g., RabbitMQ, Kafka) for asynchronous communication. The functions can also access a ‘Database’ (e.g., PostgreSQL, MongoDB) and ‘Object Storage’ (e.g., MinIO, Ceph), which are designed to be portable and easily replaceable. The API Gateway, Function Router, Container Orchestration Platform, Message Queue, Database, and Object Storage are all labeled as ‘Open Source Components’. Arrows indicate the flow of data and requests, showcasing the interaction between the user, API Gateway, Function Router, Serverless Functions, Container Orchestration Platform, Message Queue, Database, and Object Storage.

The diagram highlights the use of open-source components to ensure vendor neutrality and portability.
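The routing step in the diagram can be illustrated with a small registry that maps request paths to functions, which is the role the API gateway and function router play between them. This is an in-process sketch only, assuming hypothetical names (`route`, `dispatch`, `make_thumbnail`); in the described architecture, platforms such as Apache OpenWhisk or Knative perform this mapping themselves.

```python
from typing import Callable, Dict

# A function takes a request dict and returns a response dict.
Handler = Callable[[dict], dict]

# Routing table: logical path -> function (hypothetical, in-process only).
ROUTES: Dict[str, Handler] = {}


def route(path: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a function under a logical path."""
    def register(fn: Handler) -> Handler:
        ROUTES[path] = fn
        return fn
    return register


@route("/thumbnails")
def make_thumbnail(request: dict) -> dict:
    # Placeholder logic; a real function would fetch and resize the image.
    return {"thumbnail_for": request["image"]}


def dispatch(path: str, request: dict) -> dict:
    """Gateway/router step: look up the target function and invoke it."""
    handler = ROUTES.get(path)
    if handler is None:
        return {"status": 404}
    return {"status": 200, "body": handler(request)}


print(dispatch("/thumbnails", {"image": "cat.png"}))
```

Because functions are registered by logical path rather than bound to a vendor-specific trigger, the same mapping can be recreated on whichever gateway and router the organization runs.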

Real-World Case Studies and Examples

Understanding the practical implications of vendor lock-in necessitates examining real-world scenarios. Analyzing how organizations have navigated the complexities of serverless vendor dependencies offers invaluable insights into the challenges and potential mitigation strategies. These case studies illuminate the practical difficulties and provide lessons learned.

Organizations’ Experiences with Serverless Vendor Lock-in

Several organizations have encountered vendor lock-in issues in serverless environments. These experiences range from minor inconveniences to significant operational and financial setbacks. The degree of impact is often correlated with the extent of vendor-specific services utilized and the complexity of the application architecture.

- Company A: E-commerce Platform. Company A, a growing e-commerce platform, initially adopted a single vendor’s serverless platform for its core application logic. They leveraged the vendor’s API Gateway, Function-as-a-Service (FaaS), and database offerings. As their business grew, they found themselves heavily reliant on the vendor’s specific tooling, monitoring, and debugging features. The vendor’s pricing structure, while competitive initially, became increasingly expensive as traffic increased.

Furthermore, the vendor’s specific API Gateway implementation presented challenges in integrating with other services, limiting their architectural flexibility.

- Company B: SaaS Provider. Company B, a Software-as-a-Service (SaaS) provider, chose a serverless vendor for its backend infrastructure. They integrated the vendor’s compute, storage, and message queuing services. Over time, the vendor introduced breaking changes in their SDKs and APIs, which required significant code refactoring. They also found it difficult to migrate specific functionalities to a different vendor due to the high degree of integration and the proprietary nature of the code.

This resulted in a slower development cycle and increased operational costs.

- Company C: Media Streaming Service. Company C, a media streaming service, adopted a serverless platform for video transcoding and delivery. They heavily utilized the vendor’s specific video processing services and content delivery network (CDN). This created significant lock-in. When the vendor experienced outages or introduced pricing changes, it directly impacted the service’s availability and profitability. They were also restricted in optimizing their video delivery infrastructure because they were constrained by the vendor’s specific CDN features and pricing.

Detailed Account of a Migration Journey

The following is a detailed account of a hypothetical company’s migration journey, emphasizing the challenges of moving from one serverless vendor to another. This scenario reflects the difficulties encountered during such a transition.

Consider “GlobalTech,” a company providing a mobile application for real-time data analytics. They initially built their backend on a specific serverless vendor’s platform, using their FaaS offering for processing data streams, their NoSQL database for data storage, and their API Gateway for client access. The initial setup was straightforward, but as the application grew, GlobalTech faced increasing challenges:

- Increased Costs: The vendor’s pricing model became unsustainable as data volume and processing demands increased. The costs significantly exceeded initial projections.

- Performance Issues: The vendor’s FaaS platform experienced occasional performance bottlenecks during peak hours, impacting the user experience.

- Feature Limitations: GlobalTech needed specific features, such as advanced data analytics capabilities, that were not available or were poorly implemented on the vendor’s platform.

GlobalTech decided to migrate to a different vendor offering a more cost-effective and feature-rich platform. The migration process was complex and resource-intensive. The steps included:

- Assessment and Planning: A comprehensive assessment of the existing architecture was conducted to identify dependencies and potential migration challenges. A detailed migration plan was created, outlining the steps, timelines, and resource requirements.

- Code Refactoring: A significant amount of code refactoring was required. The existing code, tightly coupled with the original vendor’s SDKs and APIs, had to be rewritten to work with the new vendor’s services. This included rewriting the FaaS functions, adapting database interactions, and modifying API Gateway configurations.

- Data Migration: Migrating the data from the original vendor’s NoSQL database to the new vendor’s database was a critical and time-consuming task. Data transformation and validation were essential to ensure data integrity.

- Testing and Validation: Rigorous testing was conducted to ensure the migrated application functioned correctly. This included unit tests, integration tests, and performance tests.

- Deployment and Cutover: The new application was deployed, and the traffic was gradually shifted from the original vendor’s platform to the new one. This process required careful monitoring to minimize downtime and ensure a smooth user experience.
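The data migration and validation steps can be sketched as a transform-then-verify pass. GlobalTech is hypothetical, and so is this sketch: it assumes old and new record schemas invented for illustration (`deviceId`/`timestamp`/`reading` mapped to `device_id`/`ts`/`value`), uses a Python list as a stand-in for the new database, and compares record counts plus an order-independent SHA-256 fingerprint of the contents.

```python
import hashlib
import json
from typing import Callable, Iterable


def transform(old: dict) -> dict:
    """Map a record from the hypothetical old schema to the hypothetical new one."""
    return {
        "device_id": old["deviceId"],
        "ts": int(old["timestamp"]),
        "value": float(old["reading"]),
    }


def fingerprint(records: Iterable[dict]) -> str:
    """Order-independent digest of a collection of records."""
    digests = sorted(hashlib.sha256(json.dumps(r, sort_keys=True).encode()).hexdigest()
                     for r in records)
    return hashlib.sha256("".join(digests).encode()).hexdigest()


def migrate(source: list[dict], write: Callable[[dict], None]) -> list[dict]:
    """Transform every record, write it to the target, and return the expected batch."""
    migrated = [transform(r) for r in source]
    for rec in migrated:
        write(rec)
    return migrated


def validate(expected: list[dict], target: list[dict]) -> None:
    """Raise if counts or contents differ between the expected and target data."""
    if len(expected) != len(target):
        raise ValueError(f"count mismatch: {len(expected)} != {len(target)}")
    if fingerprint(expected) != fingerprint(target):
        raise ValueError("content mismatch")


old_rows = [
    {"deviceId": "d1", "timestamp": "1700000000", "reading": "21.5"},
    {"deviceId": "d2", "timestamp": "1700000060", "reading": "19"},
]
target_table: list[dict] = []  # stand-in for the new vendor's database
expected = migrate(old_rows, target_table.append)
# In a real migration, read the records back from the new database before validating.
validate(expected, target_table)
print(f"migrated and validated {len(target_table)} records")
```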

The migration took several months and required a dedicated team of engineers. GlobalTech faced several challenges during the process, including:

- Code Compatibility: Adapting the existing code to the new vendor’s APIs and SDKs was a significant undertaking.

- Data Migration Complexity: Migrating a large volume of data between different database systems required complex scripting and data validation.

- Downtime and Risk: Minimizing downtime during the cutover process was critical. There was a risk of data loss or service interruption.

- Learning Curve: The team had to learn the new vendor’s platform and tooling, which took time and effort.
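The gradual traffic shift used to limit downtime and risk during cutover can be sketched as deterministic percentage routing. This is a minimal illustration, assuming routing by a hashed user ID and an illustrative ramp schedule; in practice the split is often configured in a load balancer, DNS, or API gateway rather than in application code.

```python
import hashlib


def routes_to_new(user_id: str, percent_new: int) -> bool:
    """Send roughly `percent_new`% of users to the new platform, deterministically.

    Hashing the user ID keeps each user on the same side across requests,
    so sessions do not bounce between platforms mid-migration.
    """
    if not 0 <= percent_new <= 100:
        raise ValueError("percent_new must be between 0 and 100")
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return bucket < percent_new


# Illustrative ramp: widen the share only while monitoring stays healthy.
for step in (0, 5, 25, 50, 100):
    share = sum(routes_to_new(f"user-{i}", step) for i in range(10_000)) / 10_000
    print(f"{step:>3}% target -> {share:.1%} of sampled users on new platform")
```

A user moved at a lower percentage stays on the new platform as the percentage rises, since a bucket below 10 is also below 50, and rolling back is a matter of setting the percentage to 0.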

Key Lessons Learned from Vendor Lock-in Experiences

Vendor lock-in in serverless environments can result in significant operational overhead, increased costs, and reduced flexibility. Organizations should carefully evaluate the trade-offs of vendor-specific services, prioritize portability, and invest in strategies to mitigate lock-in risks.

Ultimate Conclusion

In conclusion, the journey through the challenges of vendor lock-in in serverless computing reveals a complex interplay of technical, financial, and operational factors. While serverless offers compelling advantages, organizations must proactively address the risks associated with vendor dependency. By employing mitigation strategies, exploring alternative technologies, and learning from real-world experiences, businesses can navigate the serverless landscape with greater agility and resilience.

The future of serverless success hinges on a balanced approach that prioritizes both innovation and strategic vendor management.

FAQ Summary

What is vendor lock-in in the context of serverless?

Vendor lock-in refers to the situation where a serverless application becomes tightly coupled with a specific cloud provider’s services, making it difficult and costly to migrate to another provider or to use open-source alternatives.

How does vendor lock-in affect cost management?

Vendor lock-in can lead to unexpected cost increases over time, as providers may raise prices or discontinue free tiers. It also reduces negotiation leverage, limiting the ability to seek more favorable pricing.

What are the technical challenges of migrating a serverless application?

Migrating involves adapting code written for proprietary APIs, reconfiguring infrastructure as code (IaC) scripts, and potentially rewriting parts of the application to work with a different provider’s services, all of which can be time-consuming and complex.

How can open-source tools help mitigate vendor lock-in?

Open-source tools and frameworks provide abstraction layers that allow developers to write code that is more portable across different cloud providers. This reduces dependency on vendor-specific features and simplifies migration.

What are the security implications of vendor lock-in?

Vendor lock-in can limit security choices by restricting the use of certain security features or compliance certifications. It can also impact data residency, potentially affecting compliance with regulations like GDPR or HIPAA.