Serverless computing has revolutionized application development, offering unprecedented scalability and efficiency. At the heart of this paradigm shift lies the need for robust standards, particularly concerning event-driven architectures. This exploration delves into the core of what are the standards for serverless like CloudEvents, examining their pivotal role in enabling interoperability, portability, and vendor neutrality within a complex ecosystem. We will unravel the intricacies of CloudEvents, a specification designed to standardize the description of event data, and its impact on the future of serverless deployments.

CloudEvents, as a specification, provides a common language for describing events across different platforms and services. This standardization facilitates seamless communication between various components, regardless of their underlying infrastructure. This document will meticulously dissect the CloudEvents specification, detailing its core attributes, event types, and delivery mechanisms. Furthermore, we will investigate the role of event brokers, compliance and validation processes, implementation considerations, and the broader ecosystem that supports CloudEvents, providing practical insights and code examples.

Introduction to Serverless Computing

Serverless computing represents a paradigm shift in cloud computing, enabling developers to build and run applications without managing servers. This approach focuses on abstracting away the underlying infrastructure, allowing developers to concentrate solely on writing code and responding to events. This section will explore the foundational concepts of serverless computing, its historical development, and the advantages it offers over traditional infrastructure models.

Fundamental Concepts of Serverless Computing

Serverless computing, despite its name, does not mean there are no servers. Instead, it signifies that developers are not responsible for managing them. The cloud provider handles all aspects of server management, including provisioning, scaling, and maintenance. The core concept revolves around the execution of code in response to events, often triggered by HTTP requests, database updates, or scheduled timers.Key characteristics define serverless architecture:

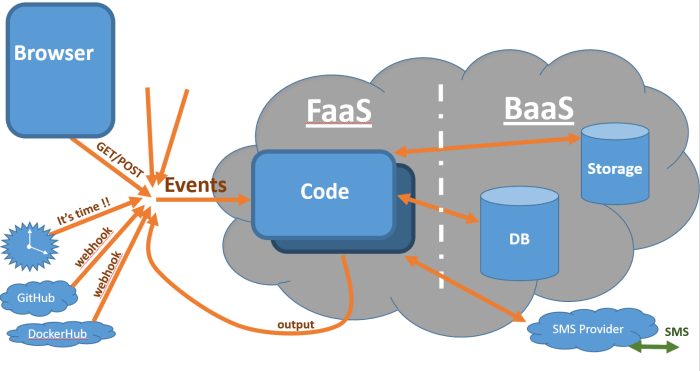

- Event-Driven Execution: Applications are triggered by events. This can be anything from a user action to a scheduled task. The function is executed only when the event occurs.

- Function-as-a-Service (FaaS): The fundamental building block of serverless is the function. Developers write small, independent pieces of code (functions) that perform specific tasks. Examples include AWS Lambda, Azure Functions, and Google Cloud Functions.

- Automatic Scaling: The cloud provider automatically scales the resources allocated to a function based on demand. This ensures that the application can handle fluctuating workloads without manual intervention.

- Pay-per-Use Pricing: Users are charged only for the actual compute time and resources consumed by their functions. This can lead to significant cost savings compared to traditional infrastructure, especially for applications with variable traffic patterns.

- Stateless Functions: Functions are generally stateless, meaning they do not retain any information from one invocation to the next. Any necessary state management is typically handled by external services like databases or caches.

Brief History and Evolution of Serverless

The concept of serverless computing has evolved over time, with its roots in early cloud services. The evolution has been marked by the increasing abstraction of infrastructure management.The evolution of serverless can be summarized as:

- Early Cloud Computing (2000s): The emergence of Infrastructure-as-a-Service (IaaS) provided virtual machines, but still required manual server management.

- Platform-as-a-Service (PaaS) (Early 2010s): PaaS offered higher-level abstractions, simplifying application deployment and management, but still involved managing the underlying platform.

- Function-as-a-Service (FaaS) (Mid-2010s): FaaS platforms, such as AWS Lambda (2014), truly ushered in the serverless era, enabling event-driven execution and pay-per-use pricing.

- Serverless Application Platforms (Late 2010s – Present): Serverless has matured with the introduction of platforms that provide complete solutions for building, deploying, and managing serverless applications, including tools for monitoring, security, and CI/CD.

Core Advantages of Serverless Over Traditional Infrastructure

Serverless computing provides several advantages over traditional infrastructure models, leading to increased efficiency, reduced costs, and faster development cycles. These benefits are particularly relevant for modern application development and deployment.The main advantages are:

- Reduced Operational Overhead: The cloud provider handles server provisioning, scaling, and maintenance, freeing developers from these tasks. This allows developers to focus on writing code and building features.

- Automatic Scalability: Serverless applications automatically scale up or down based on demand, ensuring optimal performance and resource utilization. This eliminates the need for manual capacity planning and reduces the risk of performance bottlenecks.

- Cost Optimization: Pay-per-use pricing means users are charged only for the actual resources consumed. This can result in significant cost savings, especially for applications with unpredictable or spiky traffic patterns. For example, a media company might see large fluctuations in traffic based on new content releases; serverless can scale up and down to handle these spikes, without the company having to over-provision resources.

- Faster Time to Market: Serverless platforms streamline the development and deployment process, allowing developers to build and release applications more quickly. The simplified infrastructure management reduces the time spent on operational tasks, enabling developers to focus on delivering value to users.

- Improved Developer Productivity: By abstracting away the complexities of infrastructure management, serverless empowers developers to be more productive. They can focus on writing code, debugging, and iterating on features without being bogged down by server-related tasks.

Defining CloudEvents

CloudEvents provides a standardized way to describe event data across different serverless platforms and systems. This standardization simplifies event routing, processing, and observability in event-driven architectures. Its purpose is to ensure interoperability and portability of event data, facilitating the creation of loosely coupled, scalable, and resilient serverless applications.

CloudEvent Definition and Purpose

CloudEvents is a specification for describing event data in a common format. It’s designed to provide a consistent structure for events regardless of the source or the system consuming them. This uniformity is crucial for interoperability and enables developers to build event-driven systems that can seamlessly integrate components from different vendors and platforms. The primary purpose of CloudEvents is to foster a unified approach to eventing, enabling easier integration, portability, and management of events across diverse environments.

Key Components of a CloudEvent Message

A CloudEvent message is structured as a set of attributes, some of which are required, and others that are optional. These attributes provide metadata about the event itself, allowing consumers to understand the context and content of the event without needing to know the specifics of the event producer.

- specversion: This REQUIRED attribute specifies the version of the CloudEvents specification used. This ensures that consumers can parse the event correctly, knowing the structure and attributes to expect. For example, “1.0” indicates compliance with the CloudEvents 1.0 specification.

- type: This REQUIRED attribute describes the type of event. It provides a semantic meaning for the event, such as “com.example.order.created” or “com.github.issue.opened”. The event type is critical for event routing and filtering. Consumers use this to determine whether they should process a particular event.

- source: This REQUIRED attribute identifies the source of the event. It’s a URI that indicates the origin of the event, for instance, “https://example.com/orderservice”. The source allows consumers to understand the origin of the event, crucial for debugging and tracing.

- id: This REQUIRED attribute is a unique identifier for the event. It allows consumers to distinguish between individual events and prevent duplicate processing. The ID ensures that each event is processed only once.

- data: This OPTIONAL attribute contains the event data itself. The format of the data is specified by the `datacontenttype` attribute. This allows for the event data to be in various formats, such as JSON, XML, or plain text.

- datacontenttype: This OPTIONAL attribute specifies the media type of the data attribute, such as “application/json” or “text/plain”. This informs the consumer how to interpret the data payload.

- dataschema: This OPTIONAL attribute is a URI that points to a schema that describes the structure of the data attribute. This allows consumers to validate the data against a known schema.

- subject: This OPTIONAL attribute provides a context about the subject of the event. For example, it might identify the specific order ID or the issue number related to the event.

Benefits of Using CloudEvents for Event-Driven Systems

Adopting CloudEvents offers several advantages for event-driven architectures. These benefits span across different aspects of system design, implementation, and maintenance, leading to more robust, scalable, and interoperable serverless applications.

- Interoperability: CloudEvents promotes interoperability between different systems and platforms. By using a common event format, systems from different vendors can easily exchange events, reducing the need for custom integrations and simplifying the integration process. This allows for greater flexibility in choosing components and services.

- Portability: Applications built using CloudEvents are more portable. Because the event format is standardized, events can be processed by any system that supports CloudEvents, regardless of the underlying infrastructure. This portability enables applications to be deployed across different cloud providers or on-premises environments with minimal modification.

- Simplified Event Routing and Processing: CloudEvents simplifies event routing and processing. The standardized format allows event brokers and message queues to easily filter and route events based on their type, source, and other attributes. This simplifies the creation of complex event-driven workflows and reduces the complexity of event handling logic.

- Enhanced Observability: CloudEvents improves event observability. The standardized format makes it easier to track events as they flow through a system. This allows for better monitoring, debugging, and troubleshooting. Event metadata, such as event type and source, provides valuable context for understanding event behavior.

- Reduced Vendor Lock-in: By using a vendor-neutral event format, CloudEvents reduces vendor lock-in. Applications can be designed to work with any event broker or platform that supports CloudEvents, providing greater flexibility in choosing the best tools for the job. This also allows for easier migration between different platforms.

- Scalability and Resilience: CloudEvents facilitates the building of scalable and resilient event-driven systems. The loosely coupled nature of event-driven architectures, combined with the standardized event format, allows for easier scaling and fault tolerance. Independent components can process events asynchronously, improving overall system performance and availability.

The Role of Standards in Serverless

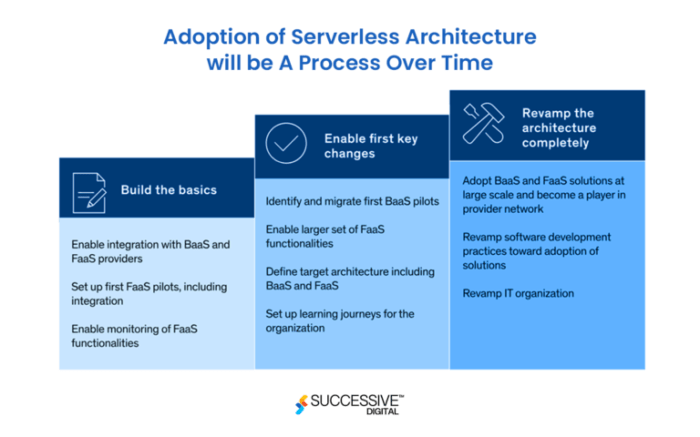

Serverless computing, by its nature, introduces a high degree of distributed functionality, vendor lock-in concerns, and the potential for complex integration scenarios. Establishing and adhering to robust standards becomes critical in this environment. Without them, the promise of portability, interoperability, and vendor neutrality, which are key benefits of serverless, risks being significantly diminished. Standards act as the common language and set of rules that allow different components and platforms to interact seamlessly, ultimately shaping the effectiveness of serverless adoption.

Interoperability in Serverless Environments

Interoperability, the ability of different systems to exchange and use information, is fundamentally improved through the implementation of standards. Serverless architectures often comprise components from multiple vendors, services running on different cloud platforms, and a variety of programming languages. Standards facilitate communication and data exchange between these disparate elements.

- Data Format Consistency: Standards, like CloudEvents, define a common format for event data. This ensures that events produced by one service can be reliably consumed by another, regardless of the underlying platform or vendor. Consider a scenario where a payment processing service on AWS triggers an event to notify a fulfillment service running on Google Cloud. Without a standard event format, the fulfillment service would need custom logic to understand the payment event, leading to complexity and increased maintenance.

With CloudEvents, both services can readily understand the event structure.

- API Definition and Interaction: API standards, such as OpenAPI (formerly Swagger), define the interfaces for serverless functions. This promotes interoperability by providing a clear and consistent way for services to interact with each other. This facilitates the creation of service meshes and orchestrators.

- Protocol Support: Standards like HTTP and gRPC provide the underlying communication protocols that serverless functions use to communicate. Adherence to these protocols ensures that services can reliably exchange data over the network.

Portability and Vendor Neutrality

Standards are instrumental in promoting portability and vendor neutrality in serverless computing. They allow developers to move their applications and functions between different cloud providers or on-premise environments with greater ease. This reduces vendor lock-in and gives organizations more flexibility.

- Abstraction of Underlying Infrastructure: Standards, by providing a common interface, abstract away the complexities of the underlying infrastructure. For example, a serverless function written to the CloudEvents specification can be deployed on AWS Lambda, Azure Functions, or Google Cloud Functions with minimal code changes.

- Reduced Vendor-Specific Dependencies: Adhering to standards minimizes reliance on vendor-specific features and APIs. This makes it easier to switch cloud providers or deploy applications across hybrid cloud environments. Using vendor-neutral libraries and frameworks further enhances portability.

- Simplified Migration Processes: Standards-based architectures streamline migration processes. If an organization decides to migrate its serverless workloads from one cloud provider to another, the presence of standards minimizes the amount of code rewriting and infrastructure reconfiguration required. The migration can be planned and executed in a more controlled manner.

Challenges of a Lack of Standardization

The absence of robust standards in serverless computing can create significant challenges, leading to increased complexity, vendor lock-in, and reduced interoperability. These issues can hinder innovation and slow down the adoption of serverless architectures.

- Vendor Lock-in: Without standards, developers often become reliant on proprietary features and APIs offered by a specific cloud provider. This creates vendor lock-in, making it difficult and costly to switch providers or deploy applications across multiple clouds.

- Interoperability Issues: Without common data formats, APIs, and protocols, serverless functions and services from different vendors may not be able to communicate effectively. This requires custom integrations, which increase development time, maintenance costs, and the risk of errors.

- Complexity and Maintenance Overhead: The lack of standardization leads to a fragmented ecosystem with different implementations of similar functionality. This increases the complexity of serverless applications and adds to the maintenance overhead.

- Limited Portability: Without standards, moving serverless applications between different platforms or environments becomes a significant challenge. Developers may need to rewrite large portions of their code or redesign their infrastructure to accommodate the differences between providers.

- Increased Development Costs: The absence of standards leads to higher development costs, as developers spend more time on custom integrations, debugging interoperability issues, and adapting their code to different platforms.

CloudEvents Specification Details

CloudEvents, at its core, provides a standardized way to describe event data in a vendor-neutral manner. This section delves into the specifics of the CloudEvents specification, examining the core attributes required for a compliant message, the various event types and their associated data schemas, and common extensions that enhance its versatility. Understanding these details is crucial for building interoperable and scalable serverless applications.

Core Attributes of a CloudEvent

The CloudEvents specification defines a set of core attributes that are mandatory for all CloudEvent messages. These attributes provide essential metadata about the event, enabling consistent interpretation and processing across different systems.

- specversion: This attribute specifies the version of the CloudEvents specification to which the event adheres. This ensures backward compatibility and allows for future specification updates. For example, “1.0” indicates compliance with the current version.

- type: This attribute identifies the type of event that occurred. It is a string that provides semantic information about the event. Examples include “com.example.order.created” or “com.github.pull_request.opened.” The type is crucial for routing and processing events.

- source: This attribute identifies the source of the event. It is a URI that indicates the origin of the event, such as a service or application. For instance, “https://example.com/orderservice” specifies the source. This attribute helps in tracing the origin of events and is essential for debugging and auditing.

- id: This attribute provides a unique identifier for the event. It is a string that distinguishes this event from other events. This attribute ensures idempotency and facilitates event tracking.

- datacontenttype: This attribute describes the media type of the data attribute. It uses a MIME type to specify the format of the data payload. Common values include “application/json” or “text/plain”.

- data: This attribute contains the event data itself. The format and content of the data are determined by the `datacontenttype` and the event `type`. It can contain any information relevant to the event, such as order details or user profile changes.

Event Types and Data Schemas

Event types are defined by the sender and describe the kind of event that has occurred. Data schemas provide a structure for the event data, ensuring that consumers can reliably interpret and process the event information. The schema is often described using JSON Schema or similar standards.For example, consider an event of type “com.example.order.created.” The data payload might contain the order details, structured as JSON.“`json “orderId”: “12345”, “customerId”: “67890”, “items”: [ “productId”: “A123”, “quantity”: 2 ], “totalAmount”: 50.00“`Another example, for an event of type “com.github.pull_request.opened”, the data could include the pull request details, such as the repository name, the author, and the title.“`json “repository”: “my-repo”, “author”: “octocat”, “title”: “Fix bug in feature X”“`The schema for each event type ensures that consumers know what data to expect and how to interpret it.

This standardization enables interoperability and simplifies the development of event-driven applications.

Common CloudEvent Extensions and Use Cases

CloudEvent extensions allow for the addition of custom attributes to CloudEvent messages, enabling the inclusion of metadata specific to particular use cases or applications. These extensions enhance the flexibility and utility of CloudEvents.

| Extension Name | Use Case | Description | Example |

|---|---|---|---|

subject | Event Filtering and Routing | Provides a context-specific subject for the event. Useful for routing events based on specific subjects. | An event about an order creation might include the subject “order/12345”. |

time | Event Time Tracking | Specifies the time the event occurred. Useful for analyzing event timelines and ordering. | The time of the event could be: “2024-01-20T10:00:00Z”. |

traceparent | Distributed Tracing | Integrates with distributed tracing systems (e.g., OpenTelemetry). Facilitates tracing events across services. | Includes a trace ID and span ID for correlation with distributed traces. |

partitionkey | Event Partitioning | Specifies a partition key for event distribution in systems like Apache Kafka or Azure Event Hubs. | Used to ensure events with the same key are processed in the same partition, e.g., “customer-123”. |

CloudEvents and Event Delivery

CloudEvents facilitates the reliable and interoperable delivery of events across diverse systems. The specification defines how events are formatted and transported, but it doesn’t dictate how these events are delivered. Instead, it provides a common framework that event delivery mechanisms can leverage. This allows for flexibility in choosing the most appropriate delivery method based on factors like performance requirements, network topology, and the needs of the event consumers.

Event Delivery Methods Supported by CloudEvents

CloudEvents supports various event delivery methods, each with its own characteristics and trade-offs. These methods determine how events are transmitted from the event source to the event consumer. Understanding these methods is crucial for designing efficient and scalable serverless applications.

- HTTP: This is a widely adopted and versatile method. CloudEvents payloads are typically sent as HTTP POST requests. The `Content-Type` header is used to specify the CloudEvents media type (e.g., `application/cloudevents+json` or `application/cloudevents+xml`). HTTP offers features like retry mechanisms, acknowledgements, and security through TLS. For example, an application might send an event to a web server endpoint that triggers a specific function.

The use of HTTP ensures compatibility with a broad range of platforms and services.

- Message Queues (e.g., AMQP, Kafka, MQTT): Message queues provide asynchronous event delivery, allowing event producers and consumers to operate independently. CloudEvents can be serialized and placed in the queue, and consumers subscribe to the queue to receive events. This method supports decoupling, scalability, and resilience. Kafka, in particular, is a popular choice for high-throughput event streams, suitable for real-time data processing and analytics.

- WebSockets: WebSockets offer a persistent, full-duplex communication channel. CloudEvents can be transmitted over WebSockets, allowing for real-time event delivery to connected clients. This is particularly useful for applications requiring immediate updates, such as live dashboards or collaborative tools.

- Server-Sent Events (SSE): SSE provides a unidirectional channel from the server to the client. CloudEvents can be formatted for SSE, allowing the server to push events to connected clients. This is suitable for scenarios where the client needs to receive updates without continuously polling the server.

- gRPC: gRPC, a high-performance, open-source remote procedure call (RPC) framework, can also be utilized for event delivery. CloudEvents can be serialized and transmitted using gRPC’s binary format. gRPC’s efficiency makes it suitable for applications with demanding performance requirements, such as microservices communicating within a data center.

The Role of Event Brokers and their Integration with CloudEvents

Event brokers are crucial components in modern serverless architectures, acting as intermediaries between event producers and consumers. They handle event routing, filtering, and delivery, providing decoupling and scalability. CloudEvents integrates seamlessly with event brokers, leveraging their capabilities to ensure reliable event delivery.

- Decoupling: Event brokers decouple event producers from consumers. Producers send events to the broker, and consumers subscribe to receive events. This allows producers and consumers to evolve independently without direct dependencies.

- Routing and Filtering: Event brokers often provide mechanisms for routing events based on their attributes (e.g., event type, source). Consumers can subscribe to specific event types or use filters to receive only the events they are interested in. This is often achieved using routing rules defined within the broker’s configuration.

- Reliability and Scalability: Event brokers typically offer features like message persistence, retry mechanisms, and horizontal scalability. This ensures that events are delivered reliably, even in the face of failures or high traffic volumes.

- Integration with CloudEvents: CloudEvents specifications ensure that event brokers can understand and process events in a standardized format. Brokers can use the attributes defined in CloudEvents (e.g., `type`, `source`, `data`) to route and filter events.

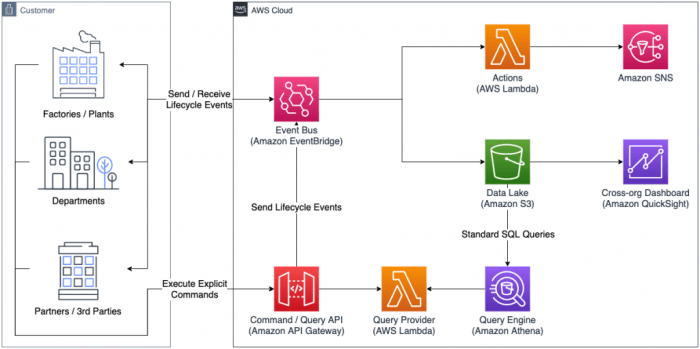

- Examples of Event Brokers: Popular event brokers that integrate with CloudEvents include Apache Kafka, RabbitMQ, Azure Event Grid, AWS EventBridge, and Google Cloud Pub/Sub. These platforms support CloudEvents natively or offer integrations that enable seamless event delivery.

Diagram Illustrating the Flow of a CloudEvent from Source to Consumer

The following diagram illustrates the flow of a CloudEvent from its source to a consumer, showcasing the role of an event broker.

Diagram Description: The diagram is a sequence diagram representing the flow of a CloudEvent. It starts with an “Event Source” component, which generates a CloudEvent. The Event Source is connected to an “Event Broker” via a line with an arrow, indicating that the event is sent to the broker. The Event Broker, which acts as a central hub, receives the CloudEvent.

The broker then routes the event based on its attributes (e.g., event type, source). Connected to the Event Broker is an “Event Consumer”. The Event Broker is connected to the Event Consumer via a line with an arrow, indicating the event is delivered. Finally, the Event Consumer processes the CloudEvent. The diagram also implicitly shows the serialization and deserialization processes that are essential when events are transferred between different systems.

Compliance and Validation

Ensuring the integrity and interoperability of CloudEvents hinges on robust compliance and validation mechanisms. This process guarantees that event messages adhere to the CloudEvents specification, promoting consistent behavior across different event producers and consumers. Validation is crucial for detecting and mitigating errors early in the event processing pipeline, preventing potential issues such as data corruption, incorrect routing, and application failures.

The following sections detail the steps involved in validating CloudEvent messages and provide tools and methods for handling non-compliant events.

Steps for Validating CloudEvent Messages for Compliance

The validation process for CloudEvent messages involves a series of systematic checks to confirm adherence to the specification. These steps are critical to maintaining data integrity and ensuring that event consumers can reliably interpret and process incoming messages.

- Schema Validation: This initial step verifies that the CloudEvent message conforms to the CloudEvents schema. This includes checking the presence and data types of required attributes, such as `specversion`, `type`, `source`, and `id`. Schema validation tools utilize JSON Schema or other schema definition languages to enforce these constraints.

- Attribute Value Validation: Beyond basic schema checks, this stage examines the values of individual attributes. For instance, the `specversion` attribute must match a supported version, and the `source` attribute should represent a valid URI. This level of validation ensures the semantic correctness of the event data.

- Content Validation: If the `datacontenttype` attribute is specified, the validation process extends to the event payload (the `data` attribute). This involves validating the content against the specified content type’s schema. For example, if the `datacontenttype` is `application/json`, the `data` should be valid JSON.

- Signature Verification (if applicable): In cases where CloudEvents are digitally signed for security, this step verifies the signature’s authenticity and integrity. This ensures that the event has not been tampered with during transit and that the sender is who they claim to be. This usually involves checking the digital signature against a trusted public key.

- Custom Validation (if required): Depending on the specific application, additional validation steps may be necessary. These custom checks can enforce business rules, data consistency, or any other application-specific requirements. These are usually implemented as part of the event processing logic.

Common Validation Tools and Their Features

A variety of tools are available to facilitate the validation of CloudEvent messages. These tools offer different features and capabilities, making them suitable for various use cases and environments.

Several tools support validation, each with its own advantages. Here’s a breakdown:

- CloudEvents SDKs: Software Development Kits (SDKs) for various programming languages (e.g., Python, Java, Go) often include built-in validation capabilities. These SDKs typically provide methods for parsing, serializing, and validating CloudEvent messages against the specification. They can also offer helper functions to simplify common validation tasks.

- Command-Line Tools: Command-line tools, such as `cloudevents-cli` (if available and supported), allow for quick validation of CloudEvent messages from the terminal. These tools are useful for testing, debugging, and integrating validation into automated build and deployment pipelines. They typically support input from files, standard input, and URLs.

- Validation Libraries: Dedicated validation libraries, often written in languages like Python (e.g., `cloudevents` library) or Java (e.g., libraries associated with eventing frameworks), provide comprehensive validation functionalities. These libraries offer schema validation, attribute value validation, and content validation, often supporting various content types.

- Eventing Platforms: Many eventing platforms (e.g., Knative Eventing, Apache Kafka with CloudEvents support) incorporate validation as part of their event processing pipelines. These platforms can automatically validate incoming CloudEvent messages and provide error handling mechanisms.

- API Gateways: API gateways can be configured to validate incoming CloudEvent messages before routing them to backend services. This provides a centralized point for enforcing compliance and protecting backend applications from invalid event data.

Methods to Handle Invalid CloudEvent Messages

When a CloudEvent message fails validation, appropriate handling is crucial to prevent disruption and maintain system stability. The approach to handling invalid messages should align with the application’s requirements and the severity of the validation failure.

Several strategies exist for dealing with invalid CloudEvent messages:

- Rejection and Logging: The simplest approach is to reject the invalid message and log the validation errors. This helps identify the source of the problem and track the frequency of non-compliant events. Detailed logging can include the message ID, the validation errors, and the source of the event.

- Dead Letter Queue (DLQ): Invalid messages can be routed to a DLQ. This allows for further investigation and potential reprocessing of the messages after the issue has been resolved. The DLQ can be monitored to identify recurring validation failures and trigger alerts.

- Error Reporting and Alerting: Implement a system to report validation errors to the relevant teams or individuals. This can include sending notifications, creating tickets, or updating dashboards. Timely reporting helps ensure that issues are addressed promptly.

- Transformation and Correction: In some cases, it might be possible to transform or correct invalid messages before processing them. This could involve adjusting attribute values, converting data types, or adding missing information. However, this approach should be used with caution, as it can mask underlying issues.

- Circuit Breakers: If a large number of invalid messages are detected, a circuit breaker can be activated to prevent further processing of events from a specific source. This protects downstream systems from being overwhelmed by invalid data.

- Monitoring and Analysis: Regularly monitor the validation process and analyze the types of validation failures. This can help identify patterns and trends, allowing for proactive measures to improve event quality and reduce the incidence of invalid messages. For example, if a specific source consistently produces invalid messages, this can be investigated.

Implementation Considerations

Implementing CloudEvents effectively necessitates careful consideration of language-specific nuances, performance characteristics, and validation strategies. Choosing the right implementation and understanding its limitations is crucial for building robust and interoperable serverless systems. This section provides practical guidance for developers looking to integrate CloudEvents into their projects.

Best Practices for Implementing CloudEvents in Different Programming Languages

The implementation of CloudEvents varies across programming languages, and adherence to best practices ensures interoperability and efficient event processing. Careful attention to serialization, deserialization, and event routing is essential.

- Serialization and Deserialization: Choosing an appropriate serialization format (e.g., JSON, XML) and library is paramount. Languages like Python, Java, and Go have well-established libraries for handling these tasks. Using libraries that support the CloudEvents specification directly minimizes the risk of errors and ensures compliance. For example, the Python library `cloudevents` provides robust support for encoding and decoding events.

- Event Routing and Delivery: Implementations should provide mechanisms for routing events to the appropriate consumers. This might involve using message queues (e.g., Kafka, RabbitMQ), event brokers, or HTTP-based delivery. Ensure the chosen mechanism supports the CloudEvents format and allows for reliable delivery, potentially including features like retries and dead-letter queues.

- Error Handling: Robust error handling is critical. Implementations should handle potential serialization/deserialization errors, network issues, and consumer-side failures gracefully. Log errors comprehensively and provide mechanisms for retrying failed event deliveries or directing them to a dead-letter queue for later inspection.

- Language-Specific Considerations:

- Python: Utilize libraries like `cloudevents` for easy event creation and consumption. Employ asynchronous processing for event handling to maximize concurrency.

- Java: Leverage libraries such as the CloudEvents SDK for Java to handle event creation, validation, and processing. Utilize frameworks like Spring Cloud Stream for event-driven architectures.

- Go: Use libraries like `go-cloudevents` for efficient event handling. Implement concurrency using Go routines for parallel event processing.

Performance Characteristics of Various CloudEvent Implementations

The performance of CloudEvent implementations depends on factors like serialization/deserialization overhead, network latency, and the efficiency of event processing libraries. Evaluating performance requires considering these elements.

- Serialization/Deserialization Overhead: JSON serialization is generally efficient, but complex event payloads can still introduce overhead. Libraries should be optimized for speed. Benchmarking serialization and deserialization times using different libraries and payload sizes can help identify performance bottlenecks.

- Network Latency: When events are delivered over HTTP or message queues, network latency becomes a significant factor. Implementations should consider using asynchronous communication and optimizing network connections to minimize delays.

- Event Processing Efficiency: The efficiency of event processing libraries can vary. Using libraries that are optimized for speed and memory usage can significantly impact performance. Implementations should also consider using techniques like caching and connection pooling to improve performance.

- Benchmarking and Testing: Thorough benchmarking and testing are essential. Measure event creation, delivery, and processing times under realistic load conditions. Use tools to simulate high event volumes and identify potential bottlenecks.

Code Snippets Demonstrating CloudEvent Creation and Consumption in Python

Python provides several libraries for working with CloudEvents, simplifying the creation, serialization, and consumption of events. The following code snippets demonstrate the basic steps.

Creating a CloudEvent:

This snippet shows how to create a CloudEvent using the `cloudevents` library. It sets the event type, source, and data.

from cloudevents.http import CloudEvent from datetime import datetime event = CloudEvent( attributes= "type": "com.example.event", "source": "my-service", , data= "message": "Hello, CloudEvents!", "timestamp": datetime.utcnow().isoformat() , ) print(event)

Consuming a CloudEvent (HTTP):

This example demonstrates how to receive and process a CloudEvent sent via HTTP. It uses the `cloudevents.http` module to parse the event and extract its data. The event is expected to be in the CloudEvents format, ensuring that it is correctly understood by the receiving application.

from cloudevents.http import from_http from flask import Flask, request, jsonify app = Flask(__name__) @app.route("/", methods=["POST"]) def receive_event(): event = from_http(request.headers, request.get_data()) if event: print(f"Received event: event") return jsonify("status": "received"), 200 else: return jsonify("error": "Invalid CloudEvent"), 400 if __name__ == "__main__": app.run(debug=True)

Interoperability and Ecosystem

CloudEvents plays a pivotal role in fostering interoperability within the serverless ecosystem. Its standardized approach to event definition and delivery mechanisms allows different serverless platforms and services to communicate effectively, regardless of their underlying implementations. This promotes vendor neutrality and reduces the complexities associated with integrating disparate systems.

Role of CloudEvents in Enabling Interoperability

CloudEvents acts as a lingua franca for event-driven architectures. By providing a common format, it simplifies the process of integrating services from different vendors and those built on various platforms. This interoperability is achieved through several key aspects:

- Standardized Event Format: CloudEvents defines a common structure for event data, including attributes such as event type, source, and data. This standardization ensures that events can be understood by any system that adheres to the CloudEvents specification.

- Vendor-Neutrality: CloudEvents is not tied to any specific vendor or platform. This allows developers to choose the best-of-breed services without being locked into a single vendor’s ecosystem.

- Simplified Integration: Instead of writing custom code to translate between different event formats, developers can use CloudEvents-compliant libraries and tools to easily send and receive events. This significantly reduces the effort required to integrate serverless functions and services.

- Event Delivery: CloudEvents also specifies how events should be delivered, supporting various protocols like HTTP and Cloud Native Computing Foundation (CNCF) specifications, ensuring that events can be transmitted reliably across different environments.

Support for CloudEvents by Major Cloud Providers

Major cloud providers have embraced CloudEvents, offering native support and tools to facilitate its adoption. This support varies in depth and implementation but generally includes features like:

- AWS: AWS provides support for CloudEvents through its EventBridge service, which acts as an event bus. EventBridge can receive, filter, and route CloudEvents from various sources, including AWS services, third-party applications, and custom applications. It supports CloudEvents as the native event format.

- Azure: Azure offers CloudEvents support primarily through Azure Event Grid. Event Grid allows developers to send and receive CloudEvents from various sources, including Azure services, third-party services, and custom applications. It supports native CloudEvents ingestion and output.

- GCP: Google Cloud Platform (GCP) supports CloudEvents through Cloud Pub/Sub and Cloud Functions. Cloud Pub/Sub can publish and subscribe to CloudEvents, while Cloud Functions can be triggered by CloudEvents. GCP also provides libraries and tools for working with CloudEvents.

Popular Tools and Libraries for CloudEvent Integration

Several tools and libraries simplify the process of integrating CloudEvents into serverless applications. These tools offer functionalities like event creation, validation, and delivery.

- CloudEvents SDKs: SDKs are available in various programming languages, such as Python, Java, Go, and .NET. These SDKs provide APIs for creating, parsing, and serializing CloudEvents, as well as interacting with event brokers. For example, the Python SDK allows the creation of CloudEvents instances and their serialization to JSON.

- Event Brokers: Event brokers like Apache Kafka, RabbitMQ, and the cloud provider-specific services (EventBridge, Event Grid, and Cloud Pub/Sub) support CloudEvents as a native format. They enable the routing and delivery of CloudEvents across different services and applications.

- Validation Tools: Tools are available to validate CloudEvents against the specification, ensuring that events conform to the defined structure and attributes. This helps to catch errors early in the development process.

- Transformation Tools: Tools can transform events from other formats into CloudEvents and vice versa, enabling integration with systems that do not natively support CloudEvents. This allows for the conversion of legacy events into CloudEvents format for wider adoption.

Future Trends and Developments

The serverless landscape is rapidly evolving, driven by advancements in cloud technologies, evolving developer needs, and the increasing demand for scalable, cost-effective computing solutions. CloudEvents, as a specification for event data, is poised to play a crucial role in these developments. This section explores emerging trends in serverless computing and their impact on CloudEvents, predicts potential future enhancements to the specification, and describes a futuristic serverless architecture that leverages advanced CloudEvent capabilities.

Emerging Trends in Serverless and CloudEvents Impact

Several trends are shaping the future of serverless computing, each with significant implications for CloudEvents. These trends include increased adoption of edge computing, the rise of serverless databases, and the integration of artificial intelligence (AI) and machine learning (ML) within serverless architectures.

- Edge Computing and CloudEvents: The proliferation of edge devices and the need for low-latency processing are driving the adoption of edge computing. This involves moving compute resources closer to the data source, enabling real-time processing and reduced bandwidth consumption. CloudEvents facilitates interoperability between edge devices and cloud-based serverless functions. Events generated at the edge, such as sensor data or device status updates, can be formatted using CloudEvents and routed to serverless functions for processing, analysis, or storage.

This enables a unified event-driven architecture across the entire computing spectrum, from the edge to the cloud.

- Serverless Databases and CloudEvents: Serverless databases, such as FaunaDB, DynamoDB, and MongoDB Atlas Serverless, are gaining popularity due to their scalability, pay-per-use pricing, and automated management. These databases can generate events in response to data changes (e.g., create, update, delete operations). CloudEvents provides a standardized format for these database events, allowing them to be consumed by serverless functions for tasks such as data synchronization, triggering notifications, or initiating other business processes.

The use of CloudEvents ensures that events from various serverless databases can be easily integrated and processed within a unified event-driven system.

- AI/ML in Serverless and CloudEvents: The integration of AI and ML capabilities into serverless applications is becoming increasingly prevalent. Serverless functions can be used to execute ML models for tasks such as image recognition, natural language processing, and predictive analytics. CloudEvents can be used to trigger these ML models based on events. For instance, an event indicating the upload of an image to cloud storage could trigger a serverless function that uses a pre-trained ML model to analyze the image and generate metadata using CloudEvents.

This metadata could then be used for indexing, search, or other downstream processes. This event-driven approach enables a scalable and efficient way to incorporate AI/ML into serverless applications.

Potential Future Enhancements to the CloudEvents Specification

The CloudEvents specification is expected to evolve to address emerging serverless requirements and to improve its usability and capabilities. These enhancements will likely focus on improving performance, enriching event data, and expanding the scope of event sources.

- Performance Optimizations: As serverless applications handle increasing volumes of events, performance becomes critical. Future enhancements may include optimizations to the CloudEvents format and processing mechanisms to reduce latency and improve throughput. This could involve:

- Implementing more efficient serialization and deserialization techniques.

- Adding support for event batching to reduce the overhead of individual event processing.

- Introducing features for event compression to minimize network bandwidth consumption.

- Enriching Event Data: The CloudEvents specification could be extended to support richer event data and metadata, providing more context and information about the events. This could involve:

- Defining standard extensions for common event attributes, such as geolocation data, user identities, and audit trails.

- Supporting more complex data types and structures within event payloads.

- Introducing features for event lineage tracking, enabling users to trace the origin and transformations of events throughout the system.

- Expanding Event Source Support: The scope of CloudEvents is expected to expand to include more event sources and integration with new technologies. This could involve:

- Defining new event types for emerging serverless services, such as serverless databases, AI/ML platforms, and edge computing platforms.

- Developing libraries and SDKs for various programming languages and platforms to simplify the creation and consumption of CloudEvents.

- Creating interoperability with more event brokers and message queues.

Futuristic Serverless Architecture with Advanced CloudEvent Capabilities

Imagine a futuristic serverless architecture for a smart city that leverages advanced CloudEvent capabilities. This architecture is designed to handle a massive influx of data from various sources, process it in real-time, and provide actionable insights to city officials and citizens.

Visual Description: The architecture is represented as a circular diagram. In the center is the “City Data Hub,” a centralized component. Radiating outwards from the center are several interconnected rings, each representing a layer of the architecture. The innermost ring represents data sources, followed by the event processing layer, the analytics layer, and finally, the presentation and interaction layer. Arrows show the flow of data and events between the components.

Components and Functionality:

- Data Sources: This layer includes a wide range of data sources, such as smart traffic sensors, weather stations, public transportation systems, and citizen-submitted reports. Each source generates events formatted as CloudEvents, capturing relevant information like traffic flow, temperature readings, bus locations, and incident reports.

- Event Processing Layer: This layer is the core of the system, handling the ingestion, routing, and transformation of CloudEvents. It consists of:

- Event Brokers: A distributed event broker, such as Apache Kafka or Cloud Pub/Sub, receives CloudEvents from various data sources.

- Serverless Functions: Serverless functions are triggered by events from the event broker. These functions perform various tasks, such as data validation, enrichment, and filtering. They can also integrate with external services and APIs.

- CloudEvent Routers: Intelligent routers direct CloudEvents to the appropriate serverless functions based on event type, source, or other criteria.

- Analytics Layer: This layer focuses on data analysis and insights generation. It leverages:

- Serverless Data Warehouses: Serverless data warehouses, such as Amazon Redshift Serverless or Google BigQuery, store and analyze processed CloudEvents.

- AI/ML Models: Serverless functions execute AI/ML models to perform tasks such as traffic prediction, crime analysis, and energy consumption forecasting.

- Real-time Dashboards: Real-time dashboards provide city officials with actionable insights and visualizations based on the analysis results.

- Presentation and Interaction Layer: This layer provides interfaces for citizens and city officials to interact with the system:

- Mobile Applications: Citizens can access real-time information about traffic, public transportation, and incident reports through mobile applications.

- Web Portals: City officials can use web portals to monitor city operations, analyze data, and make informed decisions.

- Automated Alerts and Notifications: The system automatically sends alerts and notifications to citizens and city officials based on predefined rules and thresholds.

Advanced CloudEvent Capabilities in Action:

- Event Correlation: The system uses CloudEvents to correlate events from different sources. For example, an event from a traffic sensor indicating a traffic jam could be correlated with an event from a public transportation system showing delayed buses.

- Dynamic Event Routing: The system uses CloudEvent routers to dynamically route events to different serverless functions based on real-time conditions. For example, during a major incident, events related to the incident could be routed to a dedicated incident response function.

- Event Transformation: Serverless functions transform CloudEvents to enrich the data and prepare it for analysis. For example, a serverless function could enrich traffic events with weather data to understand how weather conditions impact traffic flow.

This futuristic architecture demonstrates the power of CloudEvents to enable a truly event-driven, intelligent, and responsive smart city. The standardization provided by CloudEvents ensures interoperability, scalability, and flexibility, allowing the city to adapt to changing needs and leverage new technologies.

Final Thoughts

In conclusion, the standards for serverless, specifically those embodied by CloudEvents, are critical for unlocking the full potential of serverless computing. By promoting interoperability and portability, CloudEvents empower developers to build more resilient, scalable, and vendor-agnostic applications. As serverless technologies continue to evolve, understanding and embracing these standards is paramount for navigating the future of cloud-native development. The continued development and adoption of CloudEvents and related standards will be instrumental in shaping the future of distributed systems, making them more accessible, manageable, and ultimately, more powerful.

FAQ Overview

What is the primary benefit of using CloudEvents?

The primary benefit of using CloudEvents is enabling interoperability between different serverless platforms and services by providing a standardized format for event data, ensuring that events can be understood and processed consistently across various environments.

How does CloudEvents differ from other event formats?

CloudEvents distinguishes itself through its vendor-neutral and platform-agnostic design, focusing on a core set of attributes to describe events, rather than imposing specific payload structures. This approach enhances portability and simplifies integration across diverse systems.

What are some common tools for validating CloudEvents?

Common validation tools include libraries within various programming languages (e.g., Python, Java) that parse and validate CloudEvent messages against the specification. Online validators and command-line tools are also available for testing and ensuring compliance.

How can I handle invalid CloudEvent messages?

Handling invalid CloudEvent messages involves implementing error handling mechanisms within your event consumers. This might include logging the invalid message, retrying processing (with appropriate backoff strategies), or routing the message to a dead-letter queue for further investigation and resolution.