The convergence of on-premises infrastructure, cloud resources, and serverless computing presents a compelling architectural paradigm: the hybrid architecture with serverless components. This approach offers a dynamic blend of control, scalability, and cost-efficiency, addressing the evolving demands of modern applications. By strategically combining traditional IT environments with the agility of serverless functions, organizations can unlock new levels of operational flexibility and innovation.

This architecture allows for the deployment of applications and workloads across diverse environments, including private clouds, public clouds, and on-premises data centers. The integration of serverless components, such as functions-as-a-service (FaaS), introduces an event-driven, pay-per-use model, optimizing resource utilization and reducing operational overhead. This document delves into the core principles, benefits, and practical applications of this powerful architectural model.

Defining Hybrid Architecture

Hybrid architecture represents a strategic approach to IT infrastructure, blending the strengths of both on-premises systems and cloud-based services. This integration aims to optimize resource allocation, enhance agility, and reduce operational costs by leveraging the most appropriate environment for specific workloads. The architecture provides flexibility in terms of deployment and management, allowing organizations to tailor their infrastructure to their unique requirements.

Core Characteristics of Hybrid Architecture

The defining features of a hybrid architecture are centered on integration, interoperability, and strategic workload placement. This approach allows organizations to capitalize on the benefits of both on-premises and cloud environments.

- Interoperability: A critical characteristic is the seamless communication and data exchange between on-premises and cloud components. This requires standardized interfaces, protocols, and robust networking capabilities to ensure that different systems can work together effectively. For instance, a hybrid architecture might use APIs (Application Programming Interfaces) to facilitate data transfer between a local database and a cloud-based analytics platform.

- Unified Management: Hybrid architectures demand a centralized management framework that provides visibility and control across the entire infrastructure. This often involves tools for monitoring, security, and resource allocation that can manage both on-premises and cloud resources from a single pane of glass. This allows IT teams to maintain consistent policies and configurations.

- Workload Optimization: Hybrid architectures allow organizations to strategically place workloads based on factors such as cost, performance, compliance, and security requirements. Sensitive data might be kept on-premises, while less critical workloads can be moved to the cloud to take advantage of scalability and cost efficiencies.

- Scalability and Flexibility: Hybrid environments provide the ability to scale resources up or down as needed. This is particularly valuable for handling fluctuating demands. Cloud components can be readily scaled to accommodate peak loads, while on-premises infrastructure can provide a stable foundation for core business functions.
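The placement factors listed above can be captured in a small rule-based policy. The following Python sketch is hypothetical. The `Workload` fields, the rule order, and the two target labels are assumptions made for illustration, not a standard model. A real policy would draw on the organization's own cost, performance, and compliance data.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    # Hypothetical attributes used only for this illustration.
    name: str
    handles_sensitive_data: bool  # e.g. regulated personal data
    chatty_with_onprem: bool      # makes many low-latency calls to on-prem systems
    demand_fluctuates: bool       # sharp peaks and troughs in load


def place(workload: Workload) -> str:
    """Return 'on-premises' or 'cloud' using simple, ordered rules."""
    # Rule 1: compliance and security come first, so sensitive data stays on-premises.
    if workload.handles_sensitive_data:
        return "on-premises"
    # Rule 2: keep latency-sensitive workloads next to the systems they call.
    if workload.chatty_with_onprem:
        return "on-premises"
    # Rule 3: fluctuating demand benefits from elastic cloud capacity.
    if workload.demand_fluctuates:
        return "cloud"
    # Default: steady workloads can stay on existing on-premises hardware.
    return "on-premises"


if __name__ == "__main__":
    for w in [
        Workload("patient-records", True, False, True),
        Workload("seasonal-storefront", False, False, True),
        Workload("nightly-ledger-sync", False, True, False),
    ]:
        print(w.name, "->", place(w))
```

Ordering the rules makes precedence explicit. A workload with sensitive data stays on-premises even if its demand fluctuates.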

Components of a Hybrid Architecture

Hybrid architectures are composed of various elements that must be carefully integrated to ensure seamless operation and effective workload management. These components work together to create a cohesive and adaptable infrastructure.

- On-Premises Infrastructure: This encompasses the physical servers, storage, networking equipment, and associated software located within an organization’s data center. This forms the foundation of many critical business functions, including databases, legacy applications, and security services.

- Public Cloud: This refers to services offered by providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). These services include compute, storage, databases, and various platform services that can be provisioned on-demand.

- Private Cloud: A private cloud is a cloud computing environment dedicated to a single organization. It can be hosted on-premises or by a third-party provider. It offers greater control over data and security, as well as customization options, making it suitable for workloads with specific compliance requirements.

- Network Connectivity: Robust network connectivity is essential for enabling communication between on-premises and cloud resources. This often involves VPNs (Virtual Private Networks), dedicated private connections such as AWS Direct Connect or Azure ExpressRoute, and high-speed internet links.

- Management and Orchestration Tools: These tools provide the ability to manage and automate the deployment, scaling, and monitoring of resources across the hybrid environment. This includes tools for configuration management, workload orchestration, and infrastructure-as-code.

- Security and Compliance: Security solutions, such as firewalls, intrusion detection systems, and identity and access management (IAM) tools, are critical for protecting data and applications in a hybrid environment. Compliance considerations, such as data residency and regulatory requirements, also play a significant role.

Benefits of Employing a Hybrid Architecture Model

Adopting a hybrid architecture provides several advantages, including enhanced scalability, improved flexibility, and optimized cost management. The architecture provides a platform for organizations to respond effectively to evolving business demands.

- Scalability: Hybrid architectures allow organizations to scale their IT resources on demand. For example, during peak seasons, a retail company can leverage cloud resources to handle increased website traffic and transaction volumes without investing in additional on-premises infrastructure. This scalability ensures that systems can handle fluctuating workloads without performance degradation.

- Flexibility: Hybrid models offer significant flexibility in terms of workload placement and technology choices. Businesses can select the optimal environment for each application or service based on factors such as cost, performance, and security. For example, an organization might choose to run its sensitive customer data in a private cloud or on-premises for security reasons, while leveraging the public cloud for less sensitive applications and development.

- Cost Optimization: Hybrid architectures enable organizations to optimize their IT spending by using the most cost-effective resources for each workload. For instance, organizations can use cloud-based services for compute-intensive tasks, paying only for the resources they consume, while maintaining their on-premises infrastructure for core business functions. Depending on workload patterns, this approach can cost less than relying solely on on-premises or cloud-only solutions, although the savings must be weighed against the cost of operating and connecting both environments.

- Business Continuity and Disaster Recovery: Hybrid architectures can enhance business continuity and disaster recovery capabilities. Data and applications can be replicated or backed up to the cloud, providing a resilient environment in case of on-premises failures.

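- Compliance: Hybrid models can help organizations meet specific regulatory requirements. Keeping sensitive data on-premises or in a private cloud gives organizations direct control over where that data resides and who can access it, which can simplify compliance with data protection regulations such as GDPR or HIPAA. Data location alone does not guarantee compliance, however.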

Introduction to Serverless Computing

Serverless computing represents a paradigm shift in cloud computing, enabling developers to build and run applications without managing servers. This approach allows for a more streamlined development process, focusing on code rather than infrastructure, leading to increased agility and cost savings.

Fundamental Principles of Serverless Computing

The core tenets of serverless computing revolve around abstracting server management and automating resource allocation. This abstraction allows developers to focus on writing code, while the serverless platform handles the underlying infrastructure.

- Function-as-a-Service (FaaS): Serverless architectures primarily utilize FaaS, where code is executed in response to events, such as HTTP requests, database updates, or scheduled triggers. Developers deploy individual functions, which are small, self-contained units of code.

- Event-Driven Architecture: Serverless applications are inherently event-driven. Events trigger the execution of functions. This allows for highly scalable and responsive applications. The platform automatically manages the event routing and function invocation.

- Automatic Scaling: Serverless platforms automatically scale resources based on demand. When an event triggers a function, the platform allocates the necessary resources to execute the code. This dynamic scaling removes most manual capacity planning and provisioning, although teams still need to account for platform limits such as maximum concurrency.

- Pay-per-Use Pricing: Serverless providers typically offer a pay-per-use pricing model. Customers are charged only for the actual compute time and resources consumed by their functions. This contrasts with traditional cloud services, where users pay for provisioned resources, regardless of utilization.

- Stateless Functions: Serverless functions are generally designed to be stateless. Each function invocation is independent of previous invocations. Any required state management is handled by external services, such as databases or caches. This stateless nature promotes scalability and resilience.
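To illustrate the stateless principle, the sketch below keeps a per-page view counter in an external store rather than in the function's own memory. It follows the `handler(event, context)` signature used by AWS Lambda's Python runtime. The `KeyValueStore` class is a hypothetical in-memory stand-in for an external service such as Redis or a database, so the example runs locally.

```python
import json


class KeyValueStore:
    """Hypothetical in-memory stand-in for an external store (e.g. Redis)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


# Created once per function instance and reused by warm invocations. In
# production this would be a client for a shared external service, so every
# instance sees the same state.
STORE = KeyValueStore()


def handler(event, context=None):
    """Record a page view; all state lives in STORE, none in the function."""
    page = event["page"]
    key = f"views:{page}"
    views = STORE.get(key, 0) + 1
    STORE.put(key, views)
    return {"statusCode": 200, "body": json.dumps({"page": page, "views": views})}
```

Because any instance may serve any request, correctness depends only on the shared store. The read-then-write above is not atomic. With a real store, an atomic operation such as Redis `INCR` would avoid lost updates under concurrent invocations.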

Key Advantages of Serverless Functions

Serverless functions offer several key advantages, making them an attractive option for various application scenarios. These advantages contribute to both operational efficiency and cost optimization.

- Cost-Efficiency: The pay-per-use pricing model of serverless computing can significantly reduce costs compared to traditional cloud services. Developers pay only for the compute time their functions consume. For applications with sporadic traffic or variable workloads, this can lead to substantial savings. For example, consider a website that experiences peak traffic during specific hours. With serverless, the cost during off-peak hours is minimal.

- Reduced Operational Overhead: Serverless platforms handle server management, including provisioning, patching, and scaling. This reduces the operational burden on developers and operations teams. Developers can focus on writing code and deploying applications, rather than managing the underlying infrastructure.

- Increased Developer Productivity: Serverless simplifies the development process by abstracting away infrastructure concerns. Developers can deploy code quickly and iterate rapidly. This leads to faster time-to-market and increased developer productivity.

- Scalability and Resilience: Serverless platforms automatically scale resources based on demand. This ensures that applications can handle sudden spikes in traffic or workload. Serverless platforms also offer built-in resilience, with automatic failover and redundancy.

- Simplified Deployment: Deploying serverless functions is often straightforward. Developers can upload their code to the serverless platform, which handles the rest. This simplifies the deployment process and reduces the risk of errors.
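The pay-per-use model can be made concrete with a back-of-the-envelope comparison. The sketch below assumes a common billing structure: a per-request fee plus a fee per GB-second of execution time. The prices are placeholders chosen for readable arithmetic. They are not any provider's current list prices. Free tiers, data transfer, and other charges are ignored.

```python
def serverless_monthly_cost(requests, avg_duration_s, memory_gb,
                            price_per_million_requests, price_per_gb_second):
    """Pay-per-use: request fee plus compute time weighted by memory."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    gb_seconds = requests * avg_duration_s * memory_gb
    return request_cost + gb_seconds * price_per_gb_second


def provisioned_monthly_cost(instances, hourly_rate, hours_per_month=730):
    """Provisioned capacity: every hour is billed, busy or idle."""
    return instances * hourly_rate * hours_per_month


def break_even_requests(provisioned_cost, avg_duration_s, memory_gb,
                        price_per_million_requests, price_per_gb_second):
    """Monthly request volume at which both models cost the same."""
    cost_per_request = (price_per_million_requests / 1_000_000
                        + avg_duration_s * memory_gb * price_per_gb_second)
    return provisioned_cost / cost_per_request


# Placeholder prices (assumptions, not real list prices).
PRICE_PER_MILLION = 0.20
PRICE_PER_GB_S = 0.00002

if __name__ == "__main__":
    sls = serverless_monthly_cost(3_000_000, 0.2, 0.5, PRICE_PER_MILLION, PRICE_PER_GB_S)
    prov = provisioned_monthly_cost(2, 0.10)
    print(f"serverless: {sls:.2f}, provisioned: {prov:.2f}")
    even = break_even_requests(prov, 0.2, 0.5, PRICE_PER_MILLION, PRICE_PER_GB_S)
    print(f"break-even: {even:,.0f} requests per month")
```

With these inputs, 3 million requests a month at 200 ms and 512 MB cost about 6.60 in usage fees, versus 146.00 for two always-on instances. The two models break even at roughly 66 million requests per month. Below that volume, idle-time savings dominate.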

Automatic Scaling and Resource Allocation

Serverless platforms are designed to automatically scale resources based on the incoming workload. This automated scaling is a critical feature that ensures applications can handle varying levels of demand without manual intervention.

- Trigger-Based Scaling: When an event triggers a serverless function, the platform routes it to an already-initialized function instance if one is idle. If none is available, the platform starts a new instance (a cold start), which adds some latency to that invocation.

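- Concurrency Management: Serverless platforms manage the concurrency of function invocations. They cap the number of concurrent executions, per account or per function, to prevent resource exhaustion and ensure fair access to resources. As demand increases, the platform runs more function instances in parallel up to that limit. Requests beyond it are throttled or queued, depending on the platform and invocation type. Raising the limit itself usually requires a configuration change or a quota increase.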

- Resource Allocation Optimization: Each function instance receives the resources specified in the function's configuration. On AWS Lambda, for example, the developer chooses the memory size, and the platform allocates CPU in proportion to it. Tuning these settings per function keeps resource utilization efficient.

- Example: Consider an image processing application built on a serverless platform. When a user uploads an image, a function is triggered to resize the image. If multiple users upload images simultaneously, the platform automatically scales the function instances to handle the increased workload. The platform allocates the necessary compute resources to each function instance, ensuring that images are processed quickly and efficiently.

This automatic scaling ensures that the application remains responsive, even during periods of high demand.
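Little's law states that average concurrency equals arrival rate multiplied by average duration. It gives a quick way to estimate how many function instances the image-resizing example needs, and how a concurrency limit interacts with a traffic spike. The rates, durations, and the 1,000-execution limit below are hypothetical. The estimate describes steady state and ignores cold starts and short bursts.

```python
import math


def required_concurrency(requests_per_second, avg_duration_s):
    """Little's law: average in-flight executions = arrival rate x time in system."""
    return math.ceil(requests_per_second * avg_duration_s)


def capacity_plan(requests_per_second, avg_duration_s, concurrency_limit):
    """Estimate instances needed and the share of demand above the limit."""
    needed = required_concurrency(requests_per_second, avg_duration_s)
    running = min(needed, concurrency_limit)
    # Demand beyond the limit is throttled or queued, depending on the platform.
    excess_share = 0.0 if needed <= concurrency_limit else 1 - concurrency_limit / needed
    return {"needed": needed, "running": running, "excess_share": excess_share}


if __name__ == "__main__":
    # 50 uploads/s, 2 s per resize, hypothetical limit of 1,000 concurrent executions.
    print(capacity_plan(50, 2.0, 1000))
    # A spike to 600 uploads/s needs 1,200 concurrent executions.
    print(capacity_plan(600, 2.0, 1000))
```

At 50 uploads per second the function needs about 100 concurrent executions, well under the limit. At 600 per second it would need 1,200, so roughly one sixth of the demand would be throttled or queued unless the limit were raised.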

Integrating Serverless Components

Integrating serverless components within a hybrid architecture represents a significant evolution in modern application development. This integration allows organizations to leverage the scalability, cost-effectiveness, and agility of serverless functions while retaining control over existing on-premises or cloud-based resources. The seamless incorporation of serverless elements necessitates careful planning, strategic design, and robust management practices.

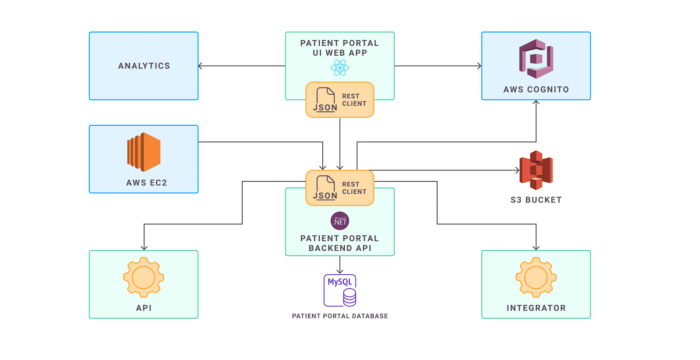

Scenario: Hybrid E-commerce Platform

Consider a hybrid e-commerce platform that handles product catalog management on-premises and order processing in the cloud. This scenario shows how serverless components integrate with existing systems. The platform operates as follows:

- On-premises Catalog Management: Product data, including descriptions, pricing, and inventory levels, resides in an on-premises relational database. Internal staff update this data, and it is synchronized with a cloud-based content delivery network (CDN) for global availability.

- Cloud-Based Order Processing: Order placement, payment processing, and fulfillment services are handled in the cloud, using serverless functions for scalability and resilience.

- Serverless Integration: A serverless function is triggered whenever a new order is placed. This function interacts with both on-premises and cloud-based resources: it retrieves product details from the on-premises catalog, calculates the total order cost, updates inventory levels in the on-premises database, and initiates fulfillment through cloud-based services.

This architecture allows the e-commerce platform to keep its existing catalog management system while benefiting from the scalability and cost efficiency of serverless order processing. It also shows how serverless components can bridge the gap between on-premises and cloud environments.
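The order function in this scenario can be sketched as follows. The `OnPremCatalog` and `FulfillmentQueue` classes are hypothetical in-memory stand-ins for the on-premises catalog database and a cloud fulfillment service. In practice, each would be a client reached over the hybrid network. Prices are kept in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass, field


@dataclass
class OnPremCatalog:
    """Stand-in for the on-premises catalog database (prices and stock)."""
    prices_cents: dict
    stock: dict

    def price_of(self, sku):
        return self.prices_cents[sku]

    def available(self, sku):
        return self.stock.get(sku, 0)

    def reserve(self, sku, qty):
        self.stock[sku] -= qty


@dataclass
class FulfillmentQueue:
    """Stand-in for a cloud-based fulfillment service."""
    messages: list = field(default_factory=list)

    def send(self, message):
        self.messages.append(message)


def handle_new_order(order, catalog, fulfillment):
    """Triggered once per new order: price it, reserve stock, start fulfillment."""
    items = order["items"]  # assumes each SKU appears at most once per order
    # 1. Retrieve prices from the on-premises catalog and compute the total.
    total_cents = sum(catalog.price_of(i["sku"]) * i["qty"] for i in items)
    # 2. Check every line before reserving anything, so no partial order is reserved.
    if any(catalog.available(i["sku"]) < i["qty"] for i in items):
        return {"order_id": order["order_id"], "status": "rejected"}
    # 3. Update inventory levels on-premises.
    for i in items:
        catalog.reserve(i["sku"], i["qty"])
    # 4. Hand the order off to cloud-based fulfillment.
    fulfillment.send({"order_id": order["order_id"], "total_cents": total_cents})
    return {"order_id": order["order_id"], "status": "accepted", "total_cents": total_cents}
```

The check-then-reserve sequence is not atomic. With concurrent invocations, the on-premises database would need to enforce it, for example with a transaction or a conditional update.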

Procedures: Deployment and Management

Deploying and managing serverless components within a hybrid architecture requires a systematic approach. These procedures ensure the smooth operation, security, and efficient utilization of serverless resources.

* Deployment: The deployment process encompasses several key steps:

- Code Development and Packaging: Serverless functions are developed using suitable programming languages (e.g., Python, Node.js) and packaged as deployment artifacts.

- Infrastructure-as-Code (IaC): IaC tools, such as Terraform or AWS CloudFormation, are utilized to define and provision the necessary infrastructure, including serverless function triggers (e.g., HTTP endpoints, event queues), database connections, and network configurations.

- Deployment to Serverless Platforms: The packaged function code and infrastructure definitions are deployed to a serverless platform, such as AWS Lambda, Azure Functions, or Google Cloud Functions.

- Configuration and Testing: The deployed functions are configured with environment variables, resource access permissions, and monitoring tools. Thorough testing is conducted to validate functionality and performance.

* Management: Effective management ensures the continuous operation and optimization of serverless components.

- Monitoring and Logging: Comprehensive monitoring and logging are essential for tracking function performance, identifying errors, and diagnosing issues. Tools such as Amazon CloudWatch, Azure Monitor, and Google Cloud Observability (formerly Google Cloud Operations) are used to collect and analyze logs, metrics, and traces.

- Security and Access Control: Robust security measures are implemented to protect serverless functions and associated resources. This includes securing API gateways, managing access control using IAM roles, and encrypting sensitive data.

- Scaling and Optimization: Serverless platforms automatically scale functions based on demand. However, optimizing function code, resource allocation, and event triggers can further improve performance and reduce costs.

- Version Control and Rollback: Version control systems are used to manage function code changes. Rollback mechanisms allow for quickly reverting to previous versions in case of issues.

* Hybrid Considerations:

- Network Connectivity: Secure and reliable network connectivity between serverless functions and on-premises resources is crucial. This can be achieved through virtual private networks (VPNs), direct connections, or secure API gateways.

- Data Synchronization: Data synchronization mechanisms may be required to keep data consistent between on-premises and cloud-based systems. This can involve database replication, message queues, or API-based data transfer.

- Authentication and Authorization: Consistent authentication and authorization mechanisms are necessary to ensure secure access to resources across both environments.
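For the configuration step, functions commonly read settings such as endpoints and resource names from environment variables. The sketch below validates them up front, so a misconfigured deployment fails immediately with a clear message rather than partway through an invocation. In a real function, `load_config()` would be called once at module level so the check runs at cold start. The variable names and the default port are hypothetical. Secrets such as database passwords are better fetched from a secrets manager than stored in plain environment variables.

```python
import os

# Hypothetical variable names for this illustration.
REQUIRED_VARS = ("ONPREM_DB_HOST", "ONPREM_DB_NAME", "FULFILLMENT_QUEUE_URL")


def load_config(env=None):
    """Read and validate settings; raise if anything required is missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("missing configuration: " + ", ".join(missing))
    return {
        "db_host": env["ONPREM_DB_HOST"],
        # Optional setting with an assumed default (PostgreSQL's usual port).
        "db_port": int(env.get("ONPREM_DB_PORT", "5432")),
        "db_name": env["ONPREM_DB_NAME"],
        "queue_url": env["FULFILLMENT_QUEUE_URL"],
    }
```

Passing a dictionary instead of `os.environ` keeps the function easy to test.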

Interaction: Serverless and On-Premises Resources

Serverless components can effectively interact with on-premises or cloud-based resources. This interaction often involves secure network connections, data synchronization, and well-defined APIs.

* Example: Database Interaction

- A serverless function needs to access data stored in an on-premises database.

- The function is given network access to the on-premises database, for example by attaching it to a cloud virtual network that is connected to the data center through a site-to-site VPN, so that data is exchanged over an encrypted channel.

- The function utilizes database drivers or connectors to execute queries, retrieve data, and update records in the database.

- Authentication and authorization mechanisms are implemented to ensure only authorized users or functions can access the database.

* Example: Message Queues

- A serverless function needs to communicate with an on-premises application using a message queue.

- The function publishes messages to a cloud-based message queue service (e.g., Amazon SQS, Azure Service Bus, Google Cloud Pub/Sub).

- An on-premises application subscribes to or polls the queue and consumes messages, triggering actions within the on-premises system.

- Message queues provide a reliable and asynchronous communication mechanism, allowing for decoupling of components and improved scalability.

* Example: API Integration

- A serverless function needs to access an on-premises API.

- The function makes HTTP requests to the on-premises API through a secure network connection.

- The on-premises API processes the requests and returns data to the function.

- API gateways can be used to manage and secure API access, including authentication, authorization, and rate limiting.
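Calls from a cloud function to an on-premises API cross a network link that can fail transiently, so they are usually wrapped in timeouts and retries. The sketch below shows exponential backoff with full jitter. `TransientError` and `call_with_retries` are hypothetical names, and `request_fn` stands for whatever performs the HTTP call, for example a function wrapping `urllib.request.urlopen(url, timeout=5)`. Only idempotent requests, or requests carrying an idempotency key, should be retried this way.

```python
import random
import time


class TransientError(Exception):
    """Raised by request_fn for failures worth retrying (timeouts, HTTP 503)."""


def call_with_retries(request_fn, max_attempts=4, base_delay_s=0.2,
                      max_delay_s=2.0, sleep=time.sleep):
    """Call request_fn, retrying transient failures with capped exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return request_fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            # Full jitter: wait a random time up to base * 2^(attempt - 1), capped,
            # so retries from many concurrent function instances spread out.
            ceiling = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
```

The total time spent retrying should stay well below the function's own timeout, or the platform will end the invocation mid-retry.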

These examples highlight the flexibility and power of serverless components in interacting with diverse resources within a hybrid architecture. The specific implementation details will vary depending on the specific technologies and requirements of the environment.
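The message-queue pattern can be sketched locally with Python's standard `queue.Queue` standing in for a managed service. Managed queues such as Amazon SQS standard queues deliver messages at least once, so duplicates are possible. For that reason, the hypothetical `OnPremConsumer` below records message IDs and skips any it has already processed, making its processing idempotent. In production, the set of seen IDs would live in durable storage rather than in memory.

```python
import json
import queue
import uuid


def publish(q, payload):
    """Cloud side: a function publishes a JSON message with a unique ID."""
    message = {"id": str(uuid.uuid4()), "body": payload}
    q.put(json.dumps(message))
    return message["id"]


class OnPremConsumer:
    """On-premises side: drains the queue and processes each message once."""

    def __init__(self):
        self.seen_ids = set()
        self.processed = []

    def drain(self, q):
        while True:
            try:
                raw = q.get_nowait()
            except queue.Empty:
                break
            message = json.loads(raw)
            # At-least-once delivery can repeat a message; skip known IDs.
            if message["id"] in self.seen_ids:
                continue
            self.seen_ids.add(message["id"])
            self.processed.append(message["body"])
```

The queue decouples the two sides: the cloud function returns as soon as the message is enqueued, and the on-premises consumer processes at its own pace.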

Use Cases for Hybrid Architectures with Serverless

Hybrid architectures, particularly when combined with serverless components, provide a flexible and scalable solution for a wide range of real-world applications. This approach allows organizations to leverage the benefits of both on-premises infrastructure and cloud services, optimizing for cost, performance, and compliance. The following sections detail specific use cases, industry applications, and common application types that benefit from this architectural model.

Financial Services

Financial institutions often grapple with stringent regulatory requirements, data security concerns, and the need for high availability. Hybrid architectures, incorporating serverless components, offer a compelling solution.

- Fraud Detection and Prevention: Serverless functions can be triggered in near real time to analyze transaction data, identify suspicious patterns, and raise fraud alerts, while integrating with existing on-premises systems for secure data access. For instance, a card issuer could use serverless functions to check each transaction against a set of rules and flag potentially fraudulent activity. The issuer would maintain and regularly update these rule sets, backed by its on-premises fraud database.

- Regulatory Compliance: Financial services must comply with regulations such as GDPR, CCPA, and others. Hybrid architectures enable organizations to keep sensitive data on-premises while using serverless functions in the cloud for data transformation, reporting, and analysis. For example, a bank may store customer Personally Identifiable Information (PII) on-premises and use serverless functions in the cloud to generate compliance reports from aggregated or de-identified data, supporting both data security and regulatory adherence.

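- Algorithmic Trading: Trading platforms demand low-latency, scalable infrastructure. Because cold starts and network hops add latency, the most latency-critical paths of high-frequency trading generally stay on dedicated on-premises systems. Serverless functions are a better fit for less latency-sensitive work, such as processing market data feeds for analytics, backtesting strategies, or running risk calculations, integrated with the on-premises trading platforms that handle order execution. This lets firms deploy and scale trading-related workloads quickly while maintaining strict control over their trading systems.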
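A rule-based check like the one in the fraud-detection example can be sketched as a pure function that a serverless handler calls for each transaction. The rules and the `spike_factor` threshold are illustrative assumptions, not a production fraud model. So is the design choice of passing in the cardholder's home country and recent amounts, which would come from the on-premises fraud database.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    card_id: str
    amount: float
    country: str
    merchant_category: str


def evaluate(txn, home_country, recent_amounts, blocked_categories, spike_factor=5.0):
    """Return the list of rules the transaction trips; empty means no flag."""
    reasons = []
    if txn.country != home_country:
        reasons.append("foreign_country")
    if txn.merchant_category in blocked_categories:
        reasons.append("blocked_category")
    if recent_amounts:
        average = sum(recent_amounts) / len(recent_amounts)
        if txn.amount > spike_factor * average:
            reasons.append("amount_spike")
    return reasons


if __name__ == "__main__":
    history = [50.0, 70.0, 60.0]  # would be fetched from the on-prem fraud database
    txn = Transaction("card-123", 900.0, "FR", "electronics")
    print(evaluate(txn, "US", history, blocked_categories={"gambling"}))
```

Keeping the rules in a pure function separates them from the I/O, so the rule set can be updated and tested independently of the on-premises data access.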

Healthcare

The healthcare industry faces similar challenges, including data privacy, security, and the need for scalable and cost-effective solutions. Hybrid architectures with serverless components are well-suited to address these needs.

- Patient Data Analytics: Healthcare providers can use serverless functions to analyze patient data stored in on-premises systems, identifying trends and insights. These functions can be triggered by data updates, enabling near-real-time analysis of patient health information. An example would be a hospital system that uses serverless functions to analyze patient lab results together with electronic health record (EHR) data, helping clinicians identify potential health risks and improve patient care.

- Medical Device Integration: Serverless functions can be used to collect data from medical devices, such as wearable sensors, and securely transmit it to cloud-based analytics platforms. The data can then be analyzed to provide insights into patient health and device performance. This enables remote patient monitoring and proactive healthcare management. For example, a hospital could use serverless functions to collect data from patients’ heart rate monitors, allowing medical professionals to track their patients’ vital signs remotely.

- Image Processing and Analysis: Hybrid architectures facilitate the processing and analysis of medical images, such as X-rays and MRIs. Serverless functions can be used to perform image enhancement, analysis, and annotation, while sensitive patient data remains on-premises. This allows medical professionals to quickly analyze medical images.

Retail

Retailers can benefit from hybrid architectures with serverless components by improving customer experience, optimizing operations, and enhancing data-driven decision-making.

- Inventory Management: Serverless functions can be used to monitor inventory levels across multiple locations, triggering alerts when stock levels fall below a certain threshold. This helps retailers optimize inventory management and reduce the risk of stockouts. For instance, a large retail chain can utilize serverless functions to monitor inventory levels across its physical stores and online warehouses, automatically reordering items based on real-time sales data.

- Personalized Recommendations: Serverless functions can be used to analyze customer purchase history, browsing behavior, and other data to provide personalized product recommendations. These recommendations can be displayed on the retailer’s website and in mobile apps.

- Customer Relationship Management (CRM): Hybrid architectures enable retailers to manage customer data securely and efficiently. Serverless functions can be used to automate tasks such as customer segmentation, email marketing, and customer service interactions. For example, a retail company can leverage serverless functions to send personalized marketing emails based on customer purchase history and behavior.
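The inventory example's threshold logic can be sketched as two small functions. One totals stock for each SKU across locations, and the other decides what to reorder. The reorder points, target levels, and location names are hypothetical inputs. A serverless function could run this on a schedule or on each sales event and pass the result to a purchasing system.

```python
from collections import defaultdict


def total_stock(location_counts):
    """Sum (location, sku, quantity) records into a per-SKU total."""
    totals = defaultdict(int)
    for _location, sku, qty in location_counts:
        totals[sku] += qty
    return dict(totals)


def reorder_quantities(totals, reorder_points, target_levels):
    """Order enough to return to target for every SKU at or below its reorder point."""
    orders = {}
    for sku, on_hand in totals.items():
        if sku in reorder_points and on_hand <= reorder_points[sku]:
            qty = target_levels.get(sku, 0) - on_hand
            if qty > 0:
                orders[sku] = qty
    return orders


if __name__ == "__main__":
    counts = [("store-1", "SKU-1", 3), ("warehouse-a", "SKU-1", 4), ("store-1", "SKU-2", 50)]
    totals = total_stock(counts)
    print(reorder_quantities(totals, {"SKU-1": 10, "SKU-2": 20}, {"SKU-1": 40, "SKU-2": 100}))
```

Here SKU-1 has 7 units across both locations, below its reorder point of 10, so 33 units are ordered to reach the target of 40.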

Common Application Types

The following application types are well-suited for hybrid architectures with serverless components.

- Web Applications: Serverless functions can handle user authentication, API requests, and data processing for web applications. The front-end can be hosted in the cloud, while sensitive data and business logic can reside on-premises.

- Mobile Applications: Serverless functions can provide back-end services for mobile applications, such as user authentication, data storage, and push notifications. The mobile app can interact with both cloud-based and on-premises resources.

- IoT Applications: Serverless functions can process data from IoT devices, enabling real-time analytics, device management, and control. The data can be stored and analyzed in the cloud, while device management and control remain on-premises.

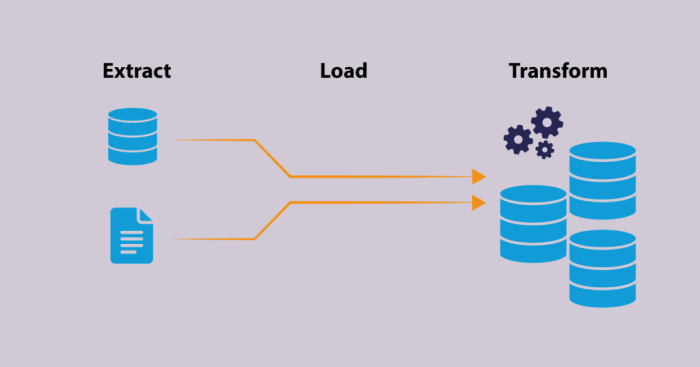

- Data Pipelines: Serverless functions can be used to build data pipelines that ingest, transform, and load data from various sources. This enables organizations to integrate data from on-premises and cloud-based systems.

- API Gateways: Serverless functions can serve as the backends behind a managed API gateway (such as Amazon API Gateway or Azure API Management), providing a secure and scalable way to access on-premises and cloud-based services. This enables organizations to expose their services to external clients.

Data Management in Hybrid Serverless Systems

Data management in hybrid serverless systems presents unique challenges, requiring careful consideration of data location, access patterns, and consistency. The distributed nature of these systems necessitates strategies for ensuring data integrity and availability across on-premises and cloud environments. Effective data management is crucial for realizing the full potential of hybrid serverless architectures, enabling seamless data access and processing regardless of the deployment location.

Strategies for Data Management

Managing data in a hybrid serverless environment involves several key strategies. These strategies focus on data partitioning, data replication, and leveraging data caching mechanisms to optimize performance and minimize latency.

- Data Partitioning: This involves dividing the data into logical segments and distributing these segments across different environments (on-premises and cloud). The partitioning strategy should consider data access patterns and the geographic distribution of users. For instance, frequently accessed data might be stored closer to the users, while less frequently accessed data could reside in a more cost-effective cloud storage solution. This approach minimizes latency and optimizes resource utilization.

- Data Replication: Replication ensures data availability and fault tolerance. Data can be replicated between on-premises and cloud environments using various techniques. The choice of replication strategy depends on the required consistency level and the acceptable latency. Common approaches include asynchronous replication, where data changes are propagated with some delay, and synchronous replication, which ensures immediate consistency.

- Data Caching: Implementing caching mechanisms can significantly improve performance. Caching frequently accessed data in memory or on edge locations reduces the load on the underlying databases and minimizes response times. This can be particularly beneficial for serverless functions, which can quickly access cached data without needing to interact with remote databases. Popular caching solutions include Redis and Memcached.
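Caching is commonly implemented as cache-aside: read from the cache, fall back to the database on a miss, then populate the cache with an expiry. The sketch below uses a hypothetical in-process `TTLCache`. In practice a shared cache such as Redis, which supports per-key expiry, would replace it, because an in-process cache in a serverless function survives only as long as that warm instance. The injectable `clock` parameter exists so expiry can be tested without waiting.

```python
import time


class TTLCache:
    """Minimal in-process cache whose entries expire after ttl_s seconds."""

    def __init__(self, ttl_s, clock=time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._entries = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # expired: treat as a miss
            return None
        return value

    def set(self, key, value):
        self._entries[key] = (value, self.clock() + self.ttl_s)


def get_product(sku, cache, load_from_db):
    """Cache-aside read: cache first, database on a miss, then populate."""
    cached = cache.get(sku)
    if cached is not None:  # note: a cached None is indistinguishable from a miss
        return cached
    value = load_from_db(sku)
    cache.set(sku, value)
    return value
```

The TTL bounds how stale a cached value can be. A shorter TTL means fresher data but more round trips to the remote database.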

Methods for Data Synchronization and Consistency

Data synchronization and consistency are paramount in hybrid serverless architectures. Several methods are employed to maintain data integrity across distributed environments.

- Eventual Consistency: This approach prioritizes availability and performance over immediate consistency. Data changes are propagated asynchronously, allowing for faster writes but potentially leading to temporary inconsistencies. This model is suitable for scenarios where eventual consistency is acceptable, such as social media feeds or real-time analytics.

- Strong Consistency: This guarantees that all reads return the most up-to-date data. Strong consistency is achieved through techniques like synchronous replication and distributed transactions. This model is crucial for applications requiring strict data integrity, such as financial transactions or inventory management.

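- Two-Phase Commit (2PC): 2PC is an atomic commitment protocol for distributed transactions. A coordinator first asks every participating database to prepare the transaction and commits only if all participants vote yes; otherwise, it aborts the transaction everywhere. Combined with each database's local guarantees, this preserves ACID (atomicity, consistency, isolation, and durability) properties across multiple databases. However, participants hold locks while waiting for the coordinator and can block if it fails, so 2PC introduces performance overhead and complexity, making it less suitable for highly scalable serverless applications.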

- Optimistic Locking: This approach assumes that conflicts are rare and attempts to update data without acquiring locks. It uses version numbers or timestamps to detect conflicts. If a conflict occurs, the update is retried. This technique can improve performance in scenarios with low contention.
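Optimistic locking with version numbers can be sketched with Python's built-in `sqlite3` module standing in for the shared database. The update succeeds only if the row's version is still the one that was read. Otherwise, `rowcount` is 0 and the caller re-reads and retries. The `inventory` table and the function names are hypothetical.

```python
import sqlite3


def create_schema(conn):
    conn.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, qty INTEGER, version INTEGER)")
    conn.execute("INSERT INTO inventory VALUES ('SKU-1', 10, 1)")
    conn.commit()


def try_update(conn, sku, new_qty, expected_version):
    """Write only if nobody has changed the row since it was read."""
    cur = conn.execute(
        "UPDATE inventory SET qty = ?, version = version + 1 "
        "WHERE sku = ? AND version = ?",
        (new_qty, sku, expected_version),
    )
    conn.commit()
    return cur.rowcount == 1  # 0 rows means a concurrent writer got there first


def decrement_stock(conn, sku, amount, max_attempts=3):
    """Read-modify-write with optimistic concurrency control and retries."""
    for _ in range(max_attempts):
        qty, version = conn.execute(
            "SELECT qty, version FROM inventory WHERE sku = ?", (sku,)
        ).fetchone()
        if qty < amount:
            return False  # not enough stock
        if try_update(conn, sku, qty - amount, version):
            return True
    raise RuntimeError(f"gave up on {sku} after {max_attempts} conflicting attempts")
```

For example, suppose two invocations both read version 1. The first write moves the row to version 2, and the second write then matches no rows, so that invocation re-reads the current quantity and tries again.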

Data Flow Process Illustration

The following illustrates a data flow process involving on-premises and cloud-based databases. This example demonstrates how data can be synchronized and processed across a hybrid serverless environment.

Scenario: An e-commerce platform utilizes a hybrid serverless architecture. Customer data, order information, and product catalogs are distributed across on-premises and cloud databases.

Data Flow Steps:

- Customer Order Submission: A customer places an order through a web application. This request is routed to a serverless function deployed in the cloud.

- Data Storage (Cloud): The serverless function writes the order details to a cloud-based database (e.g., Amazon DynamoDB or Google Cloud Firestore).

- Data Synchronization (Cloud to On-Premises): A change data capture (CDC) mechanism, such as DynamoDB Streams for Amazon DynamoDB or a custom solution, captures changes made to the cloud database.

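- Data Replication (On-Premises): The CDC pipeline replicates the order data to an on-premises database (e.g., a relational database). This replication is typically asynchronous, so the on-premises copy may briefly lag behind the cloud database. That lag is acceptable only where the consistency requirements allow eventual consistency.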

- Inventory Management (On-Premises): Another serverless function, triggered by the data replication, updates the inventory levels in the on-premises database. This function may perform other tasks, such as generating invoices or sending order confirmations.

- Data Aggregation (Cloud): A separate serverless function periodically aggregates order data from both the cloud and on-premises databases for analytics and reporting.

Visual Description:

The illustration would show a sequence of interconnected components.

The process begins with a user accessing a web application, sending an order. The order information is directed to a serverless function hosted in the cloud, which stores the order details in a cloud database. A CDC service is depicted monitoring this cloud database, transferring data to an on-premises database. A second serverless function, connected to the on-premises database, handles tasks like updating inventory.

Finally, a separate serverless function is depicted pulling data from both the cloud and on-premises databases and aggregating it for analytics.

Security Considerations

Hybrid architectures incorporating serverless components present a complex security landscape. The distributed nature of these systems, combined with the ephemeral nature of serverless functions, introduces unique challenges. Securing data and applications within this environment requires a multifaceted approach, encompassing careful planning, robust implementation, and continuous monitoring.

Security Challenges in Hybrid Architectures with Serverless Components

The integration of serverless functions into hybrid architectures introduces several security challenges. These challenges arise from the distributed nature of the system, the use of third-party services, and the dynamic scaling of serverless functions. Understanding these challenges is crucial for designing effective security measures.

- Increased Attack Surface: Hybrid architectures, by their nature, expose a larger attack surface than traditional monolithic systems. Serverless functions, often triggered by various events, can introduce new entry points for malicious actors. The integration with on-premises infrastructure further expands the attack surface.

- Shared Responsibility Model Complexity: The shared responsibility model, particularly with cloud providers, requires careful understanding. While the cloud provider secures the underlying infrastructure, the responsibility for securing the application and data within the serverless functions falls on the developer. This necessitates a clear delineation of responsibilities and robust security practices.

- Ephemeral Nature of Serverless Functions: Serverless functions are typically short-lived, and the provider manages the underlying servers. As a result, traditional host-based practices, such as installing security agents or patching long-running servers, do not apply directly. Instead, the function code and its dependencies must be scanned and updated through automated checks integrated into the deployment pipeline.

- Third-Party Dependencies: Serverless functions often rely on third-party services and APIs. These dependencies introduce security risks, including vulnerabilities in the third-party code and potential data breaches if the integrations are not properly secured.

- Visibility and Monitoring: Monitoring and logging in a serverless environment can be complex. The distributed nature of the system and the ephemeral nature of functions make it difficult to track security events and identify potential threats. Effective logging and monitoring are essential for incident response.

- Data Encryption and Access Control: Protecting sensitive data requires robust encryption and access control mechanisms. Data must be encrypted both in transit and at rest. Access control policies must be carefully designed to restrict access to sensitive data based on the principle of least privilege.

Best Practices for Securing Data and Applications

Implementing robust security measures is critical for mitigating the risks associated with hybrid architectures and serverless components. These best practices focus on data protection, access control, and continuous monitoring.

- Implement Strong Authentication and Authorization: Require multi-factor authentication (MFA) for all human user accounts. Give services short-lived, narrowly scoped credentials rather than long-lived keys. Implement role-based access control (RBAC) to ensure users and services have only the necessary permissions. Regularly review and update access control policies.

- Encrypt Data at Rest and in Transit: Use encryption to protect sensitive data both when it is stored (at rest) and when it is being transmitted over the network (in transit). Implement Transport Layer Security (TLS) for all network communications. Utilize encryption keys managed by a secure key management service.

- Secure API Gateways and Function Triggers: API gateways act as a central point for managing and securing API access. Use API gateways to enforce authentication, authorization, and rate limiting. Secure function triggers by implementing appropriate access control and input validation.

- Automate Security Scanning and Vulnerability Management: Integrate security scanning into the CI/CD pipeline to identify vulnerabilities early in the development process. Automate patching and update processes to address identified vulnerabilities. Regularly conduct penetration testing and vulnerability assessments.

- Implement Robust Logging and Monitoring: Implement comprehensive logging and monitoring to track security events and identify potential threats. Aggregate logs from all components of the system and use security information and event management (SIEM) tools to analyze them. Set up alerts for suspicious activity.

- Secure Serverless Function Code: Follow secure coding practices to prevent vulnerabilities such as cross-site scripting (XSS), SQL injection, and buffer overflows. Regularly review and update function code. Utilize code analysis tools to identify potential security flaws.

- Manage Secrets Securely: Store sensitive information, such as API keys and database credentials, in a secure secrets management service. Avoid hardcoding secrets in function code. Rotate secrets regularly.

- Network Segmentation: Segment the network to isolate different components of the hybrid architecture. This limits the impact of a security breach by preventing attackers from moving laterally within the system.
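To illustrate securing a function trigger, here is a minimal Python sketch of a handler. It authenticates the caller by verifying an HMAC-SHA256 signature on the request body, and only then validates the payload against a strict schema. Several details are assumptions for this example: the environment variable name `WEBHOOK_SECRET`, the `handle_request` entry point, and the order schema. In production the secret would be injected from a secrets management service rather than hardcoded or set by hand.

```python
import hashlib
import hmac
import json
import os

# Hypothetical payload schema: field name -> exact expected type.
ORDER_SCHEMA = {"order_id": str, "quantity": int}


def verify_signature(body: bytes, signature_hex: str, secret: bytes) -> bool:
    """Recompute the HMAC-SHA256 of the body and compare in constant time."""
    expected = hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature_hex)


def validate_order(payload: object) -> dict:
    """Reject anything that does not match the expected shape and ranges."""
    if not isinstance(payload, dict) or set(payload) != set(ORDER_SCHEMA):
        raise ValueError("unexpected or missing fields")
    for name, expected_type in ORDER_SCHEMA.items():
        # Exact type check, so that JSON booleans are not accepted as integers.
        if type(payload[name]) is not expected_type:
            raise ValueError(f"field {name!r} has the wrong type")
    if not 1 <= payload["quantity"] <= 100:
        raise ValueError("quantity out of range")
    return payload


def handle_request(body: bytes, signature_hex: str) -> dict:
    """Hypothetical entry point: authenticate first, then validate, then act."""
    # The secret is read from the environment (populated by a secrets manager), never from code.
    secret = os.environ["WEBHOOK_SECRET"].encode()
    if not verify_signature(body, signature_hex, secret):
        return {"status": 401, "body": "invalid signature"}
    try:
        order = validate_order(json.loads(body))
    except ValueError as exc:  # json.JSONDecodeError is a subclass of ValueError
        return {"status": 400, "body": str(exc)}
    return {"status": 200, "body": f"accepted {order['order_id']}"}
```

Checking the signature before parsing means unauthenticated input never reaches the JSON parser or the business logic.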

Security Architecture Diagram

A security architecture diagram provides a visual representation of the security components and their interactions within a hybrid architecture incorporating serverless components. The diagram should illustrate the flow of data and the security measures implemented at each stage.

Diagram Description: The diagram depicts a hybrid architecture with both on-premises and cloud components, secured by a layered approach.

- On-Premises Infrastructure: Represents the traditional, physical infrastructure. Includes a firewall to control network traffic and a VPN gateway to establish secure connections with the cloud.

Cloud Infrastructure (AWS Example)

Illustrates the cloud-based serverless components.

- API Gateway: Acts as the entry point for API requests, handling authentication, authorization, and rate limiting.

- Serverless Functions (Lambda): The core of the serverless architecture, responsible for executing application logic.

- Data Storage (DynamoDB/S3): Securely stores data, with encryption at rest and access control policies.

- Secrets Manager: Stores sensitive information such as API keys and database credentials.

- CloudWatch: Collects logs and metrics for monitoring and alerting.

- Identity and Access Management (IAM): Manages user and service identities and access permissions.

- VPC (Virtual Private Cloud): Provides a logically isolated network within the cloud.

Security Components

- Web Application Firewall (WAF): Protects against web application attacks.

- Intrusion Detection System (IDS)/Intrusion Prevention System (IPS): Monitors network traffic for malicious activity.

- Security Information and Event Management (SIEM): Aggregates and analyzes security logs for threat detection and incident response.

- Key Management Service (KMS): Manages encryption keys.

Data Flow

- User requests originate from external clients and pass through the API Gateway.

- The API Gateway authenticates and authorizes the requests.

- Valid requests trigger serverless functions.

- Serverless functions access data from secure data storage.

- Logs and metrics are sent to CloudWatch for monitoring.

- Security components such as WAF, IDS/IPS, and SIEM are deployed to monitor and protect the environment.

- The VPN gateway ensures secure communication between on-premises and cloud resources.

This architecture illustrates a comprehensive approach to securing a hybrid serverless environment, emphasizing layered security, access control, data encryption, and continuous monitoring. The specific components and their configurations may vary depending on the chosen cloud provider and the specific requirements of the application.
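Rate limiting at the API gateway is commonly implemented with a token-bucket algorithm. The Python sketch below shows that technique in isolation. It is not the implementation of any specific gateway, and the `rate` and `capacity` values in the usage are illustrative assumptions. With a managed gateway you would normally configure equivalent limits rather than run code like this.

```python
import time
from typing import Callable, Optional


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Optional[Callable[[], float]] = None) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock or time.monotonic  # injectable for testing
        self.tokens = capacity                # start full so an initial burst is allowed
        self.updated = self.clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically answer HTTP 429 Too Many Requests


if __name__ == "__main__":
    bucket = TokenBucket(rate=5.0, capacity=10.0)  # hypothetical per-client limit
    admitted = sum(bucket.allow() for _ in range(50))
    print(f"admitted {admitted} of 50 back-to-back requests")
```

A gateway usually keeps one bucket per client identity (for example, per API key). Abusive clients are then throttled without affecting others.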

Cost Optimization

Optimizing costs is a crucial aspect of adopting a hybrid architecture with serverless components. The inherent pay-per-use model of serverless, combined with the flexibility of a hybrid approach, presents significant opportunities for cost reduction. However, it also introduces complexities that require careful planning and monitoring to avoid unexpected expenses. A proactive approach to cost management is essential for realizing the full economic benefits of this architectural pattern.

Strategies for Optimizing Costs

Effective cost optimization in a hybrid serverless environment requires a multi-faceted strategy that considers various factors. It encompasses resource utilization, code optimization, and strategic vendor selection.

- Right-Sizing Resources: Ensure that compute resources, such as serverless functions and provisioned instances, are appropriately sized to meet the actual demand. Over-provisioning leads to unnecessary costs, while under-provisioning can compromise performance. Regularly monitor resource utilization and adjust accordingly.

- Optimizing Code: Efficiently written code directly translates to cost savings. Optimize function execution time and memory usage to reduce the consumption of compute resources. This includes techniques such as code profiling, lazy loading of dependencies, and efficient data processing.

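- Leveraging Serverless Features: Serverless platforms scale functions automatically with the volume of incoming events, so you pay for actual invocations rather than idle capacity. Where the platform exposes controls, such as concurrency limits or pre-provisioned (warm) capacity, tune them to real demand. For provisioned components elsewhere in the hybrid environment, such as containers or virtual machines, apply auto-scaling policies based on metrics like CPU utilization or request queue length. This helps to avoid paying for idle resources.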

- Choosing the Right Storage: Select storage solutions that align with the data access patterns and performance requirements. For infrequently accessed data, consider object storage and its infrequent-access or archive tiers. These typically cost less per gigabyte than higher-performance options, although retrieval may carry extra charges.

- Implementing Cost Alerts and Budgets: Set up alerts and budgets to monitor spending and receive notifications when costs exceed predefined thresholds. This proactive approach allows for early detection of potential cost overruns and enables timely corrective actions.

- Optimizing Data Transfer: Minimize data transfer costs by optimizing data transfer patterns and leveraging content delivery networks (CDNs). Consider using data compression techniques to reduce the size of data being transferred.

- Selecting Cost-Effective Regions: Cloud providers often have different pricing structures across different geographical regions. Analyze the pricing of services in different regions and choose the most cost-effective region for deploying your resources. This consideration is particularly relevant for geographically distributed applications.
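To make the data-compression point concrete, the following Python sketch gzip-compresses a JSON payload before it is transferred and decompresses it on the receiving side. The sample records are synthetic. Actual savings depend heavily on how repetitive the data is, and formats that are already compressed (images, video) gain little.

```python
import gzip
import json


def compress_payload(records: list) -> bytes:
    """Serialize records to compact JSON and gzip them for transfer."""
    raw = json.dumps(records, separators=(",", ":")).encode("utf-8")
    return gzip.compress(raw)


def decompress_payload(blob: bytes) -> list:
    """Reverse of compress_payload, run by the receiver."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))


if __name__ == "__main__":
    # Synthetic, highly repetitive records, e.g. order status updates.
    records = [{"order_id": i, "status": "SHIPPED", "region": "eu-west"} for i in range(1000)]
    raw_size = len(json.dumps(records, separators=(",", ":")).encode("utf-8"))
    blob = compress_payload(records)
    print(f"raw: {raw_size} bytes, gzip: {len(blob)} bytes")
```

Compression trades a small amount of CPU time in the function for fewer bytes crossing the network. This is usually worthwhile for data leaving the cloud toward on-premises systems, where egress is billed.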

Cost-Saving Techniques for Serverless Functions and Cloud Resources

Specific techniques can be employed to reduce costs related to serverless functions and cloud resources. These techniques involve careful design and implementation of serverless applications and efficient utilization of cloud services.

- Function Duration Optimization: Minimize the execution time of serverless functions. Short function durations translate directly to lower costs. This involves optimizing code, reducing dependencies, and using efficient algorithms.

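- Memory Allocation: Carefully allocate memory to serverless functions. Allocating too much memory can increase costs, while allocating too little can impact performance. On some platforms, such as AWS Lambda, CPU capacity scales with the memory setting. A larger allocation can therefore shorten execution time enough to lower the total cost. Monitor function performance and adjust memory allocation accordingly.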

- Event-Driven Architecture: Design applications around an event-driven architecture to reduce the need for constantly running resources. This allows for triggering serverless functions only when specific events occur, minimizing idle time and associated costs.

- Caching: Implement caching mechanisms to reduce the number of requests to backend services. Caching frequently accessed data can significantly reduce the load on databases and other resources, leading to cost savings.

- Batch Processing: Process data in batches to reduce the number of function invocations. Batch processing can be particularly effective for data-intensive tasks.

- Resource Tagging: Tag resources with cost centers or projects to track spending effectively. This allows for granular cost analysis and helps identify areas where costs can be optimized.

- Utilizing Cloud Provider Discounts: Take advantage of discounts offered by cloud providers, such as reserved instances or committed use discounts, to reduce costs for provisioned resources. These discounts are typically available for resources that are used consistently over a specified period.
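The caching technique can be sketched as a small time-to-live (TTL) cache placed in front of a backend lookup. In the Python sketch below, `fetch_price` is a hypothetical backend and the 60-second TTL is an arbitrary choice. On platforms that reuse execution environments between invocations, a module-level cache like `PRICE_CACHE` survives only while the environment stays warm. A shared cache service is needed for hits across instances.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Caches backend results for `ttl` seconds to avoid repeated backend requests."""

    def __init__(self, loader: Callable[[str], object], ttl: float,
                 clock: Optional[Callable[[], float]] = None) -> None:
        self.loader = loader
        self.ttl = ttl
        self.clock = clock or time.monotonic  # injectable for testing
        self._entries: Dict[str, Tuple[float, object]] = {}
        self.backend_calls = 0  # counts cache misses, i.e. requests that reach the backend

    def get(self, key: str) -> object:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]  # cache hit: no backend request
        self.backend_calls += 1
        value = self.loader(key)
        self._entries[key] = (now, value)
        return value


def fetch_price(sku: str) -> float:
    """Hypothetical backend lookup, e.g. a query to an on-premises pricing database."""
    return {"sku-1": 9.99}.get(sku, 0.0)


# Module-level instance: reused across invocations while the environment stays warm.
PRICE_CACHE = TTLCache(fetch_price, ttl=60.0)
```

Choosing the TTL is a trade-off. A longer TTL saves more backend requests but serves staler data, so it should reflect how quickly the underlying values change.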

Cost Models of Different Cloud Providers

Cloud providers offer different pricing models for their services, which can significantly impact the total cost of a hybrid serverless architecture. A comparative analysis of these models is crucial for making informed decisions.

All three providers compared below price their serverless functions on a pay-per-use basis. They differ in pricing tiers, free-tier allowances, and long-term commitment discounts.

| Cloud Provider | Serverless Function Pricing Model | Storage Pricing Model | Key Considerations |
|---|---|---|---|
| AWS (Amazon Web Services) | Pay-per-invocation, based on execution time and memory allocated. Free tier available. | Object storage (S3), various tiers based on storage class and access frequency. | Extensive service offerings, mature ecosystem, potential for vendor lock-in. |
| Azure (Microsoft Azure) | Pay-per-execution, based on execution time and memory consumed. Free grant available. | Blob storage, various tiers based on access frequency and performance. | Strong integration with Microsoft ecosystem, competitive pricing, hybrid cloud capabilities. |
| Google Cloud Platform (GCP) | Pay-per-use, based on execution time, memory usage, and number of invocations. Free tier available. | Cloud Storage, different classes based on access patterns. | Strong in data analytics and machine learning, innovative serverless features, competitive pricing. |

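Consider the following formula when estimating the cost of a serverless function. Most providers bill a per-invocation charge plus a compute charge measured in GB-seconds, which is memory multiplied by execution time:

$$
\text{Total Cost} = N \cdot c_{\text{inv}} + N \cdot t \cdot m \cdot c_{\text{GB-s}}
$$

The symbols are defined as follows:

- $N$ is the number of invocations.
- $c_{\text{inv}}$ is the cost per invocation.
- $t$ is the average execution time in seconds.
- $m$ is the memory in GB (allocated, or measured on platforms that bill observed usage).
- $c_{\text{GB-s}}$ is the cost per GB-second.

For instance, suppose a serverless function is invoked 1,000 times, runs for 100 ms per invocation, and is allocated 128 MB of memory. It consumes $1000 \times 0.1\,\text{s} \times 0.125\,\text{GB} = 12.5$ GB-seconds. The total cost is the charge for 1,000 invocations plus the charge for 12.5 GB-seconds. The exact rates depend on the provider, the region, and any free-tier allowance.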
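The cost model most providers use can be turned into a small estimator: a per-invocation charge plus a compute charge in GB-seconds (memory multiplied by duration). In the Python sketch below, the rates are made-up round numbers, not any provider's current prices, and free-tier allowances are ignored. The demo compares two memory settings using hypothetical duration measurements, to show how a larger allocation can be cheaper when it shortens execution time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Placeholder unit prices; substitute your provider's published rates."""
    per_invocation: float  # cost per invocation
    per_gb_second: float   # cost per GB-second of compute


def compute_gb_seconds(invocations: int, avg_duration_ms: float, memory_mb: float) -> float:
    """Invocations x duration (s) x memory (GB), using 1 GB = 1024 MB."""
    return invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)


def estimate_cost(invocations: int, avg_duration_ms: float,
                  memory_mb: float, rates: Rates) -> float:
    """Invocation charge plus compute charge; free tiers are not modelled."""
    gb_s = compute_gb_seconds(invocations, avg_duration_ms, memory_mb)
    return invocations * rates.per_invocation + gb_s * rates.per_gb_second


if __name__ == "__main__":
    rates = Rates(per_invocation=0.001, per_gb_second=0.01)  # illustrative only
    print(compute_gb_seconds(1000, 100, 128))  # 1,000 runs of 100 ms at 128 MB
    # Hypothetical measurements: more memory (and CPU) shortens each run.
    small = estimate_cost(1000, 800, 128, rates)
    large = estimate_cost(1000, 150, 512, rates)
    print(f"128 MB: {small:.3f}, 512 MB: {large:.3f}")
```

Because the compute term is a product, halving duration while doubling memory leaves it unchanged. Only configurations where duration falls faster than memory rises reduce the bill.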

Monitoring and Observability

In a hybrid serverless architecture, monitoring and observability are critical for maintaining application health, ensuring optimal performance, and facilitating rapid troubleshooting. The distributed nature of such systems, encompassing both serverless components and traditional infrastructure, introduces complexities that demand robust monitoring strategies. Effective monitoring allows for proactive identification of issues, prevents service disruptions, and provides insights into system behavior, ultimately leading to improved user experience and cost efficiency.

Importance of Monitoring and Observability

Observability provides a comprehensive understanding of the internal states of a system by examining its external outputs. This is particularly crucial in hybrid serverless environments where components are often distributed across different platforms and managed by various teams. Without effective monitoring, it becomes challenging to pinpoint the root cause of performance bottlenecks, security breaches, or unexpected behavior. This can lead to prolonged downtime, increased operational costs, and a diminished ability to meet service level agreements (SLAs).

Tools and Techniques for Monitoring Application Performance and Health

A multifaceted approach is required to monitor the performance and health of applications in a hybrid serverless architecture. This involves leveraging a combination of tools and techniques that capture various types of data.

- Metrics Collection: This involves gathering numerical data that represents the system’s performance, such as CPU utilization, memory usage, request latency, and error rates. Cloud providers like AWS (CloudWatch), Google Cloud (Cloud Monitoring), and Azure (Azure Monitor) offer built-in metric collection and visualization tools.

- Logging: Logging is the process of recording events that occur within the system. These logs contain valuable information about application behavior, errors, and user activity. Centralized logging solutions like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk are essential for aggregating and analyzing logs from different components.

- Tracing: Distributed tracing enables tracking of requests as they flow through the different components of a hybrid serverless architecture. Tools like Jaeger, Zipkin, and AWS X-Ray provide insights into the end-to-end performance of transactions, identifying bottlenecks and latency issues.

- Alerting and Notifications: Implementing alerting systems that trigger notifications when predefined thresholds are breached is crucial. This allows for proactive intervention and minimizes the impact of incidents. Alerts can be configured based on metrics, logs, or trace data.

- Synthetic Monitoring: Synthetic monitoring involves simulating user interactions to proactively test the availability and performance of critical functionalities. This can identify issues before they impact real users.
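Correlating logs across cloud functions and on-premises services is easier when every log line is structured and carries a shared request identifier. The Python sketch below uses the standard `logging` module to emit one JSON object per line, including a correlation ID. The field names, the `handle` entry point, and the `x-correlation-id` header convention are assumptions. Dedicated tracing tools such as AWS X-Ray or Jaeger propagate their own trace context.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON line that a log aggregator can parse."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Attached through `extra=`; None if the caller did not supply it.
            "correlation_id": getattr(record, "correlation_id", None),
        }
        return json.dumps(entry)


def get_logger(name: str) -> logging.Logger:
    """Configure the logger once; repeated calls on warm invocations reuse it."""
    logger = logging.getLogger(name)
    if not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    return logger


def handle(event: dict) -> dict:
    """Hypothetical handler: reuse the caller's ID or create one, then pass it on."""
    headers = event.get("headers", {})
    correlation_id = headers.get("x-correlation-id") or str(uuid.uuid4())
    log = get_logger("orders")
    log.info("order received", extra={"correlation_id": correlation_id})
    # Forward the same ID when calling on-premises services so their logs line up.
    return {"headers": {"x-correlation-id": correlation_id}}
```

Once every component logs the same identifier, a centralized logging tool can reconstruct a single request's path across the cloud and on-premises environments with one query.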

Metrics and Logs for Monitoring and Troubleshooting

The following table illustrates key metrics and logs, along with their typical uses, for effective monitoring and troubleshooting in a hybrid serverless environment.

| Metric/Log Type | Description | Typical Use Cases | Tools |
|---|---|---|---|
| CPU Utilization | Percentage of CPU resources being used. | Identifying overloaded resources, performance bottlenecks. | CloudWatch, Cloud Monitoring, Azure Monitor |
| Memory Usage | Amount of memory being consumed. | Detecting memory leaks, resource exhaustion. | CloudWatch, Cloud Monitoring, Azure Monitor |
| Request Latency | Time taken to process a request. | Identifying slow-performing services, performance degradation. | CloudWatch, Cloud Monitoring, Azure Monitor, X-Ray, Jaeger |
| Error Rates | Percentage of requests resulting in errors. | Detecting application failures, identifying problematic components. | CloudWatch, Cloud Monitoring, Azure Monitor, Logging solutions |
| Invocation Count | Number of times a serverless function is invoked. | Monitoring function usage, identifying scaling needs. | CloudWatch, Cloud Monitoring, Azure Monitor |
| Function Duration | Time taken for a serverless function to execute. | Identifying performance bottlenecks in function execution. | CloudWatch, Cloud Monitoring, Azure Monitor, X-Ray |
| Application Logs | Detailed logs from application code, including errors, warnings, and informational messages. | Debugging application issues, understanding application behavior. | ELK stack, Splunk, CloudWatch Logs, Cloud Logging, Azure Monitor Logs |
| Infrastructure Logs | Logs from underlying infrastructure components (e.g., databases, load balancers). | Troubleshooting infrastructure-related issues, identifying security threats. | ELK stack, Splunk, CloudWatch Logs, Cloud Logging, Azure Monitor Logs |
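Several metrics in the table are derived from raw request records. As an illustration, the Python sketch below computes the error rate and 95th-percentile latency for a window of samples and checks them against alert thresholds. The `Sample` record format and the default thresholds (1% errors, 500 ms p95) are assumptions. In practice, the monitoring services listed above compute these values and raise the alarms for you.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    latency_ms: float
    ok: bool


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate(samples: List[Sample], max_error_rate: float = 0.01,
             max_p95_ms: float = 500.0) -> List[str]:
    """Return the alerts that should fire for this window of samples."""
    if not samples:
        return ["no data"]  # missing telemetry is itself worth alerting on
    alerts = []
    error_rate = sum(not s.ok for s in samples) / len(samples)
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")
    p95 = percentile([s.latency_ms for s in samples], 95)
    if p95 > max_p95_ms:
        alerts.append(f"p95 latency {p95:.0f} ms exceeds {max_p95_ms:.0f} ms")
    return alerts
```

Alerting on a high percentile rather than the average catches slow tail requests that an average would hide. This matters when some calls cross the network to on-premises systems.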

Deployment and Orchestration

Hybrid serverless architectures present unique challenges and opportunities for deployment and management. The distributed nature of these systems, encompassing both serverless components and traditional infrastructure, necessitates sophisticated strategies to ensure efficient, reliable, and scalable operation. This section details various deployment strategies, automation procedures, and orchestration techniques tailored for hybrid serverless applications.

Deployment Strategies for Hybrid Serverless Applications

Deploying hybrid serverless applications requires careful consideration of how serverless functions interact with on-premises or cloud-based infrastructure. Different strategies can be employed, each with its own advantages and disadvantages.

- Blue/Green Deployment: This strategy involves maintaining two identical environments: blue (live) and green (staging). New versions of serverless functions are deployed to the green environment and tested. Once validated, traffic is switched from the blue to the green environment, minimizing downtime and allowing for rollback if issues arise. This strategy is particularly useful for serverless functions, as it facilitates rapid iteration and reduces the risk associated with deployments.

For example, a retail company could use blue/green deployments to update its inventory management functions without disrupting customer access to its online store.

- Canary Deployment: Canary deployments involve gradually rolling out a new version of a serverless function to a small subset of users (the “canary”). This allows for monitoring the new version’s performance and detecting potential issues before affecting the entire user base. If the canary performs well, the rollout is expanded. This strategy is especially valuable in complex hybrid systems, as it helps identify integration problems between serverless components and traditional infrastructure.

A financial institution could use a canary deployment to test a new fraud detection function, gradually exposing it to live transactions before fully deploying it.

- Rolling Deployment: Rolling deployments update serverless functions in batches, gradually replacing the old version with the new version across the entire infrastructure. Only a portion of the system is being updated at any given time, so the rest continues to serve traffic and a full outage is avoided. This makes the approach well-suited for applications that must remain continuously available. A media streaming service might employ rolling deployments to update its video processing functions without interrupting user playback.

- Immutable Infrastructure: This principle advocates for treating infrastructure as immutable, meaning that servers and functions are not modified in place. Instead, new versions are deployed as entirely new instances, and the old instances are decommissioned. This approach simplifies deployments, reduces configuration drift, and improves reliability. For example, a software-as-a-service (SaaS) provider might use immutable infrastructure to deploy updates to its customer relationship management (CRM) application, ensuring consistency across all instances.
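Canary releases need a way to send a fixed share of traffic to the new version. One common technique, sketched below in Python, hashes a stable user identifier into 100 buckets. Each user then consistently sees the same version while the canary percentage is raised step by step. The version labels `v1`/`v2` and the rollout stages are hypothetical. Many platforms offer weighted routing between function versions or traffic splitting (for example, Knative's traffic management), so hand-written routing like this is only needed where no such feature exists.

```python
import hashlib


def bucket_for(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_version(user_id: str, canary_percent: int,
                   stable: str = "v1", canary: str = "v2") -> str:
    """Route users whose bucket falls below the canary percentage to the new version."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return canary if bucket_for(user_id) < canary_percent else stable


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for pct in (5, 25, 100):  # hypothetical rollout stages
        share = sum(choose_version(u, pct) == "v2" for u in users) / len(users)
        print(f"target {pct}%: {share:.1%} of users on the canary")
```

Because buckets are fixed per user, raising the percentage only adds users to the canary and never moves existing canary users back. This keeps their experience consistent while the new version is being evaluated.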

Procedure for Automating the Deployment of Serverless Functions

Automating the deployment process is crucial for the efficient management of serverless functions within a hybrid architecture. The following procedure outlines a standard approach for automating these deployments.

- Code Repository and Version Control: All serverless function code should be stored in a version control system (e.g., Git). This enables tracking changes, collaborating effectively, and reverting to previous versions if necessary. The use of branches for feature development and pull requests for code reviews is essential.

- Build Process: The build process compiles the code, packages dependencies, and prepares the function for deployment. This may involve using build tools specific to the programming language and serverless platform. For example, Node.js functions might use npm or yarn to manage dependencies, while Python functions could use pip.

- Configuration Management: Environment-specific configurations (e.g., database connection strings, API keys) should be managed separately from the code. This can be achieved using environment variables, configuration files, or secret management services. The configuration should be injected into the function during deployment.

- Continuous Integration/Continuous Deployment (CI/CD) Pipeline: A CI/CD pipeline automates the build, test, and deployment processes. Common CI/CD tools include Jenkins, GitLab CI, CircleCI, and AWS CodePipeline. The pipeline is triggered by code changes pushed to the repository.

- Testing: Automated testing is critical to ensure the functionality and stability of serverless functions. Unit tests, integration tests, and end-to-end tests should be included in the CI/CD pipeline. Tests should be executed after the build process and before deployment.

- Deployment Automation: The deployment step uses tools provided for the serverless platform to deploy the function to the target environment. Examples include AWS SAM, the Serverless Framework, Azure Functions Core Tools, and the gcloud CLI for Google Cloud Functions. This includes configuring triggers, resource allocation, and monitoring settings.

- Monitoring and Rollback: After deployment, the CI/CD pipeline should automatically monitor the function’s performance and health. If issues are detected, the pipeline should automatically roll back to the previous version. Alerting mechanisms should notify developers of any failures.
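The monitoring-and-rollback step can be expressed as a gate that the pipeline runs after deployment. The Python sketch below compares the new version's error rate with the previous version's and decides whether to wait, promote, or roll back. The `promote`/`rollback` callables, the request counts, and the tolerance and minimum-traffic values are hypothetical placeholders. In a real pipeline they would come from your platform's deployment tool and monitoring service.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class WindowStats:
    """Request counts observed for one version during the evaluation window."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def decide(baseline: WindowStats, candidate: WindowStats,
           tolerance: float = 0.01, min_requests: int = 100) -> str:
    """Return 'wait', 'rollback', or 'promote' for the candidate version."""
    if candidate.requests < min_requests:
        return "wait"  # not enough traffic yet to judge either way
    if candidate.error_rate > baseline.error_rate + tolerance:
        return "rollback"
    return "promote"


def run_gate(baseline: WindowStats, candidate: WindowStats,
             promote: Callable[[], None], rollback: Callable[[], None]) -> str:
    """Apply the decision by calling the pipeline's (hypothetical) actions."""
    decision = decide(baseline, candidate)
    if decision == "rollback":
        rollback()  # e.g. point traffic back at the previous version
    elif decision == "promote":
        promote()
    return decision
```

Returning an explicit "wait" state prevents a low-traffic deployment from being promoted before there is enough evidence that it is healthy.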

Orchestration Tools for Managing Hybrid Serverless Components

Orchestration tools are essential for managing the complexity of hybrid serverless architectures. These tools provide a centralized platform for coordinating the deployment, scaling, and monitoring of serverless functions and traditional infrastructure components.

- Kubernetes (with Knative): Kubernetes is a container orchestration platform that can be used to manage serverless workloads through Knative. Knative extends Kubernetes with features specifically designed for serverless applications, such as autoscaling, eventing, and traffic management. Kubernetes allows for the orchestration of both containerized applications (e.g., microservices) and serverless functions, providing a unified management layer. A healthcare provider could use Kubernetes with Knative to manage a hybrid system that includes both containerized API services and serverless functions for processing patient data.

- AWS Step Functions: AWS Step Functions is a serverless orchestration service that allows you to coordinate multiple AWS services, including Lambda functions, into workflows. Step Functions can manage the execution of serverless functions, handle error conditions, and provide detailed logging and monitoring. It is particularly useful for building complex, stateful applications. An e-commerce company might use Step Functions to orchestrate a workflow that includes order processing, payment processing, and inventory updates, using serverless functions for each step.

- Azure Logic Apps: Azure Logic Apps is a cloud service that allows you to automate tasks and integrate applications and services using workflows. Logic Apps can connect to various services, including Azure Functions, as well as to on-premises systems. This makes it suitable for orchestrating hybrid serverless applications. For example, a manufacturing company could use Logic Apps to automate data ingestion from on-premises sensors, process the data using Azure Functions, and store the results in Azure Blob Storage.

- Google Cloud Composer: Google Cloud Composer is a managed Apache Airflow service that allows you to create and manage data pipelines. Airflow provides a powerful framework for defining, scheduling, and monitoring workflows. Cloud Composer can integrate with various Google Cloud services, including Cloud Functions, and with on-premises systems. A data analytics company could use Cloud Composer to orchestrate a data pipeline that extracts data from various sources, transforms the data using Cloud Functions, and loads the data into BigQuery.
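To make the Step Functions example concrete, the Python sketch below builds an Amazon States Language (ASL) definition for an order workflow as a dictionary and serializes it to JSON. The workflow runs process order, then process payment, then update inventory. The Lambda ARNs, account ID, state names, and retry settings are placeholders. Supplying the resulting JSON as a state machine definition (through the console, infrastructure-as-code, or an SDK) is not shown.

```python
import json
from typing import Optional

# Placeholder ARN prefix: substitute your own region, account ID, and function names.
ARN_PREFIX = "arn:aws:lambda:us-east-1:123456789012:function:"
STEPS = ["ProcessOrder", "ProcessPayment", "UpdateInventory"]


def task_state(name: str, next_state: Optional[str]) -> dict:
    """One Task state that invokes a Lambda function, with retry and catch."""
    state = {
        "Type": "Task",
        "Resource": ARN_PREFIX + name,
        # Retry transient task failures with exponential backoff.
        "Retry": [{
            "ErrorEquals": ["States.TaskFailed"],
            "IntervalSeconds": 2,
            "MaxAttempts": 3,
            "BackoffRate": 2.0,
        }],
        # Anything still failing after retries goes to a terminal Fail state.
        "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "OrderFailed"}],
    }
    if next_state is None:
        state["End"] = True
    else:
        state["Next"] = next_state
    return state


def build_definition() -> dict:
    """Chain the steps in order and add the shared failure state."""
    states = {}
    for i, name in enumerate(STEPS):
        nxt = STEPS[i + 1] if i + 1 < len(STEPS) else None
        states[name] = task_state(name, nxt)
    states["OrderFailed"] = {
        "Type": "Fail",
        "Error": "OrderWorkflowFailed",
        "Cause": "A workflow step failed after retries",
    }
    return {"Comment": "Order workflow (illustrative)", "StartAt": STEPS[0], "States": states}


if __name__ == "__main__":
    print(json.dumps(build_definition(), indent=2))
```

Keeping retries and error routing in the workflow definition, rather than inside each function, lets the functions stay small while the orchestrator handles failure policy in one place.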

Comparing Hybrid Architectures with Alternative Models

Hybrid architectures, leveraging serverless components, represent a strategic approach to application design and deployment. However, they are not the only architectural paradigm available. Understanding the trade-offs between hybrid models and alternatives like cloud-native and on-premises solutions is crucial for making informed decisions about infrastructure and resource allocation. This section provides a comparative analysis of these architectural approaches.

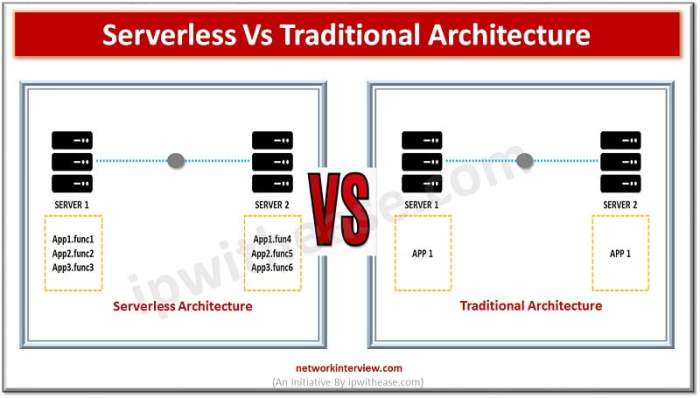

Cloud-Native Architectures

Cloud-native architectures are designed specifically to take advantage of the features and benefits of cloud computing. They emphasize agility, scalability, and resilience through the use of microservices, containers, and automated orchestration. These architectures often leverage serverless functions and other cloud-provided services to achieve high levels of automation and efficiency.

Pros:

- High Scalability: Cloud-native applications can automatically scale up or down based on demand, providing excellent resource utilization and responsiveness.

- Agility and Speed: Microservices-based architectures allow for rapid development and deployment cycles, enabling faster time-to-market.

- Resilience: Cloud platforms provide built-in redundancy and fault tolerance, minimizing downtime and ensuring application availability.

- Cost Efficiency: Pay-as-you-go pricing models and optimized resource utilization can lead to cost savings.

- Global Reach: Cloud providers offer global infrastructure, allowing for easy deployment and access to users worldwide.

Cons:

- Vendor Lock-in: Dependence on a specific cloud provider can make it difficult to switch providers or adopt a multi-cloud strategy.

- Complexity: Managing a distributed, microservices-based architecture can be complex, requiring specialized skills and tools.

- Security Concerns: Security is a shared responsibility, and organizations must implement robust security measures to protect their cloud-based assets.

- Network Latency: Applications may experience increased latency if they are heavily reliant on network communication between microservices and cloud resources.

- Cost Management Challenges: Although cloud-native systems can be cost-effective, they require continuous monitoring and optimization to avoid unexpected expenses.

On-Premises Architectures

On-premises architectures involve deploying and managing applications and infrastructure within an organization’s own data center. This approach provides greater control over hardware and data, but it also requires significant investment in infrastructure, personnel, and ongoing maintenance.

Pros:

- Data Control: Organizations have complete control over their data and infrastructure, which can be important for regulatory compliance and data security.

- Security: Greater control over the physical security of the data center and network.

- Low Latency: Applications can benefit from low latency if the infrastructure is located close to the users.

- Customization: Organizations can customize their infrastructure to meet their specific needs.

- Predictable Costs: Capital expenditure can be more predictable, although operational costs can fluctuate.

Cons:

- High Upfront Costs: Significant capital investment is required for hardware, software, and data center infrastructure.

- Maintenance and Management: Organizations are responsible for all aspects of infrastructure management, including hardware maintenance, software updates, and security.

- Scalability Limitations: Scaling on-premises infrastructure can be time-consuming and expensive, potentially leading to capacity constraints.

- Lack of Agility: Deployment and update cycles are typically slower compared to cloud-based solutions.

- Disaster Recovery: Requires significant investment in disaster recovery infrastructure and planning.

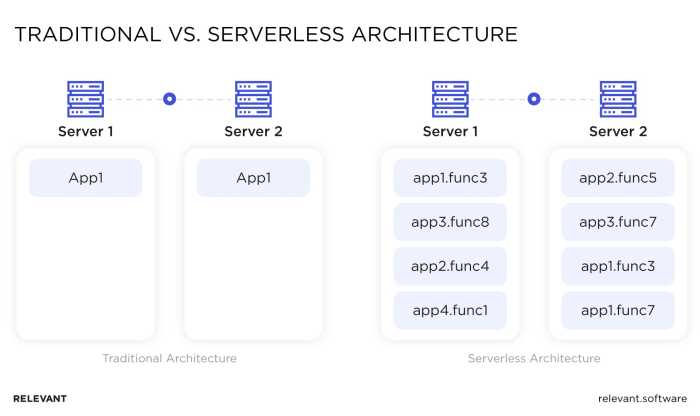

Diagram of Architectural Models

The following diagram illustrates the key differences between cloud-native, on-premises, and hybrid architectures.

Diagram Description: The diagram visually represents three architectural models: Cloud-Native, On-Premises, and Hybrid. Each model is depicted as a distinct section.

Cloud-Native: This section is represented by a cloud icon. It encompasses components such as ‘Microservices’, ‘Containers’, ‘Serverless Functions’, ‘Databases (Cloud-Managed)’, and ‘Orchestration’.

These components are entirely within the cloud environment. Arrows point from users toward the cloud icon, representing users interacting with the cloud-native application.

On-Premises: This section is represented by a building icon. It includes components like ‘Servers’, ‘Networking’, ‘Storage’, ‘Databases (On-Premises)’, and ‘Security’. These components are all located within the organization’s physical infrastructure.

Arrows point from users toward the building icon, representing users interacting with the on-premises application.

Hybrid: This section is represented by a combination of a cloud icon and a building icon, signifying a blend of cloud and on-premises resources. It shows components distributed between the cloud and on-premises environments, such as ‘Microservices (Partially in Cloud)’, ‘Databases (Cloud & On-Premises)’, ‘Serverless Functions (in Cloud)’, and ‘APIs’.

The diagram shows users interacting with the hybrid application through both its cloud and on-premises components. Arrows indicate the flow of data and interactions between the cloud and on-premises components, highlighting the integration aspect.

Connecting Lines: Lines connect the Hybrid section to both the Cloud-Native and On-Premises sections, emphasizing the integration and communication between these environments. The diagram underscores how resources are distributed and how cloud and on-premises components interact within a hybrid architecture.
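The integration points the diagram emphasizes are the data flows that cross from the cloud into the on-premises environment; those are the links that need secure networking and well-defined APIs. The sketch below models a hybrid deployment as components tagged with a location and lists the flows that cross the boundary. The component names are hypothetical and only loosely follow the diagram's Hybrid section.

```python
from dataclasses import dataclass
from enum import Enum


class Location(Enum):
    CLOUD = "cloud"
    ON_PREM = "on-premises"


@dataclass(frozen=True)
class Component:
    name: str
    location: Location


Flow = tuple[Component, Component]  # (source, destination) of a data flow


def boundary_crossings(flows: list[Flow]) -> list[Flow]:
    """Return the flows whose endpoints run in different environments."""
    return [(src, dst) for src, dst in flows if src.location != dst.location]


# Hypothetical deployment loosely modelled on the diagram's Hybrid section.
api = Component("public-api", Location.CLOUD)
fn = Component("order-function", Location.CLOUD)        # serverless function
svc = Component("billing-service", Location.ON_PREM)    # microservice kept on-premises
db = Component("customer-db", Location.ON_PREM)

flows = [(api, fn), (fn, svc), (svc, db)]
for src, dst in boundary_crossings(flows):
    print(f"{src.name} ({src.location.value}) -> {dst.name} ({dst.location.value})")
```

Only the order-function to billing-service flow is reported, which makes it the natural place to concentrate API contracts, authentication, and network configuration.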

Final Review

In conclusion, the hybrid architecture with serverless components represents a strategic evolution in IT infrastructure. By embracing this model, organizations can leverage the strengths of both traditional and cloud-native approaches. The combination of scalability, cost-effectiveness, and enhanced operational flexibility positions this architecture as a key enabler for digital transformation across diverse industries. As businesses navigate the complexities of modern application development and deployment, the hybrid serverless approach offers a robust and adaptable solution for achieving their strategic goals.

Essential Questionnaire

What are the primary advantages of a hybrid architecture with serverless components?

The primary advantages include increased scalability, enhanced flexibility, cost optimization through pay-per-use models, improved resource utilization, and reduced operational overhead by automating infrastructure management.

How does a hybrid serverless architecture improve security?

A hybrid serverless architecture can improve security by letting organizations segregate sensitive data and workloads. Sensitive workloads can stay on-premises behind existing security infrastructure, while other components use cloud-based security services, creating a layered security approach.
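One simple form of segregation is to route each record by its classification before it is stored. The sketch below keeps any record that contains a sensitive field on-premises and sends the rest to the cloud. It assumes a hypothetical, field-name-based classification and uses in-memory stand-ins for both storage backends; a real system would apply its own data-classification policy and real storage clients.

```python
from dataclasses import dataclass, field

# Hypothetical field names treated as sensitive; a real deployment would
# derive this set from its own data-classification policy.
SENSITIVE_FIELDS = {"ssn", "diagnosis", "card_number"}


@dataclass
class Store:
    """In-memory stand-in for a storage backend (illustration only)."""
    name: str
    records: list[dict] = field(default_factory=list)

    def put(self, record: dict) -> None:
        self.records.append(record)


def route(record: dict, on_prem: Store, cloud: Store) -> Store:
    """Keep records with any sensitive field on-premises; send the rest to the cloud."""
    target = cloud if SENSITIVE_FIELDS.isdisjoint(record) else on_prem
    target.put(record)
    return target


on_prem_db, cloud_db = Store("on-prem-db"), Store("cloud-db")
route({"patient_id": 7, "diagnosis": "example"}, on_prem_db, cloud_db)
route({"page": "/home", "latency_ms": 42}, on_prem_db, cloud_db)
print(on_prem_db.name, len(on_prem_db.records), cloud_db.name, len(cloud_db.records))
```

Routing at write time means sensitive records never reach cloud storage, so the on-premises security controls apply to them from the start.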

What types of applications are best suited for a hybrid serverless architecture?

Applications that benefit most include those with fluctuating workloads, event-driven processes, and data processing tasks, as well as applications whose low-latency or compliance requirements necessitate on-premises components. Industries such as finance, healthcare, and retail often find this model advantageous.
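The criteria in this answer can be expressed as a rough placement heuristic: compliance or tight latency constraints point on-premises, while fluctuating or event-driven load points to cloud serverless functions. The sketch below is illustrative only. The workload attributes, the 10 ms cut-off, and the example workloads are hypothetical, and the latency rule assumes users sit close to the on-premises data center.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    compliance_requires_on_prem: bool  # e.g. a data-residency rule
    max_latency_ms: float              # response-time budget
    fluctuating_load: bool             # demand varies sharply over time
    event_driven: bool                 # triggered by events rather than running continuously


# Hypothetical cut-off; assumes users are close to the on-premises data center.
LOW_LATENCY_MS = 10.0


def suggest_placement(w: Workload) -> str:
    """Illustrative heuristic applying the criteria above; not a definitive rule."""
    if w.compliance_requires_on_prem or w.max_latency_ms < LOW_LATENCY_MS:
        return "on-premises"
    if w.fluctuating_load or w.event_driven:
        return "cloud serverless"
    return "either"


workloads = [
    Workload("ledger-posting", True, 50.0, False, False),
    Workload("image-thumbnails", False, 2000.0, True, True),
    Workload("internal-wiki", False, 500.0, False, False),
]
for w in workloads:
    print(f"{w.name}: {suggest_placement(w)}")
```

Constraints are checked before elasticity on purpose: a compliance or latency requirement rules out a placement, whereas bursty load only makes serverless more attractive.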

How can I monitor and troubleshoot a hybrid serverless application?

Monitoring involves collecting metrics and logs from both on-premises and cloud components for complete observability. Use centralized logging, distributed tracing, and performance monitoring tools to identify bottlenecks, errors, and performance issues across the entire system.
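Distributed tracing across the hybrid boundary depends on one idea: a trace ID created where a request enters the system and passed along with every downstream call, so that logs from both environments can be joined later. The sketch below shows that idea with structured JSON logs written to an in-memory list standing in for a centralized log store. The header name `X-Trace-Id` and the service names are hypothetical; production systems typically rely on a tracing library and the W3C Trace Context `traceparent` header instead.

```python
import json
import time
import uuid

# Hypothetical header name; the W3C Trace Context standard uses "traceparent".
TRACE_HEADER = "X-Trace-Id"

central_log: list[str] = []  # in-memory stand-in for a centralized log store


def log_event(env: str, service: str, trace_id: str, message: str, **fields) -> None:
    """Append one structured JSON log line tagged with the shared trace ID."""
    entry = {"ts": time.time(), "env": env, "service": service,
             "trace_id": trace_id, "message": message, **fields}
    central_log.append(json.dumps(entry))


def on_prem_service(headers: dict) -> None:
    # Reuse the caller's trace ID if present; otherwise start a new trace.
    trace_id = headers.get(TRACE_HEADER) or str(uuid.uuid4())
    log_event("on-premises", "billing-service", trace_id, "invoice created", duration_ms=12)


def cloud_function(event: dict) -> None:
    trace_id = str(uuid.uuid4())  # the request enters the system here
    log_event("cloud", "order-function", trace_id, "order received", order_id=event["order_id"])
    on_prem_service({TRACE_HEADER: trace_id})  # propagate the ID across the boundary


def trace(trace_id: str) -> list[dict]:
    """Reassemble one request's path across both environments from the central log."""
    return [e for e in map(json.loads, central_log) if e["trace_id"] == trace_id]


cloud_function({"order_id": 42})
trace_id = json.loads(central_log[0])["trace_id"]
for entry in trace(trace_id):
    print(entry["env"], entry["service"], entry["message"])
```

Because every line carries the same `trace_id`, a single query against the central store returns the request's full path, including the step where it left the cloud and entered the on-premises service.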