Kubernetes operators are specialized controllers that automate the management of complex applications running within a Kubernetes cluster. They act as intelligent agents, ensuring applications are deployed, configured, and maintained according to predefined specifications. This streamlined approach reduces manual intervention, increases efficiency, and frees up developer time to focus on higher-level tasks.

Imagine a chef following a detailed recipe for a specific dish. The recipe outlines precise steps, ingredients, and timings. A Kubernetes operator is similar; it takes the Kubernetes environment as its platform and the application’s configuration as its recipe, ensuring everything is orchestrated correctly and consistently.

Introduction to Kubernetes Operators

Kubernetes operators are specialized extensions that automate the management of specific applications or deployments within a Kubernetes cluster. They act as intelligent agents, bridging the gap between the declarative nature of Kubernetes and the operational demands of complex applications. Instead of relying solely on Kubernetes’s core functionality, operators provide tailored management and automation for particular software stacks. Operators simplify the deployment, scaling, and maintenance of applications by encapsulating the necessary logic and configuration.

They abstract away the underlying complexities, allowing developers and administrators to focus on application-level tasks. This approach improves operational efficiency and reduces the risk of errors associated with manual management.

Definition of a Kubernetes Operator

A Kubernetes operator is a custom controller that manages the lifecycle of specific applications or resources within a Kubernetes cluster. It continuously compares the desired state of the application with the state actually observed in the cluster and adjusts the cluster’s resources to close any gap. It does this through the Kubernetes API, using the application’s manifests as the statement of desired state.

Role of an Operator in the Kubernetes Ecosystem

Operators automate the management of complex applications, relieving developers and administrators from repetitive tasks. They automate the deployment, scaling, upgrading, and monitoring of applications, ensuring they remain in the desired state. This automation reduces manual intervention and the potential for human error, improving operational efficiency and reducing deployment times. Operators also enhance application portability and maintainability by encapsulating application-specific logic within the operator itself.

Benefits of Using Kubernetes Operators

Operators offer several key benefits for managing applications within a Kubernetes cluster. These benefits include:

- Automation: Operators automate tasks like deployment, scaling, and upgrades, reducing manual intervention and the risk of errors.

- Simplified Management: Operators abstract away the complexities of managing specific applications, allowing developers and administrators to focus on higher-level tasks.

- Improved Consistency: Operators ensure applications are deployed and maintained consistently, minimizing configuration drift and ensuring reliability.

- Enhanced Portability: Operators can encapsulate application-specific logic, facilitating easier portability across different Kubernetes clusters.

- Reduced Operational Overhead: Operators automate tasks that were previously performed manually, reducing operational overhead and freeing up personnel for other tasks.

How Operators Interact with Kubernetes Resources

Operators interact with Kubernetes resources through the Kubernetes API. They observe the desired state of the application, as defined in Kubernetes manifests, and adjust the cluster resources accordingly. Operators use Kubernetes’s event-driven architecture to monitor and respond to changes in the cluster’s state. This ensures that the application remains in the desired state.

- Operators constantly monitor the status of application components.

- They react to changes in the desired state of the application, making necessary adjustments to Kubernetes resources.

- This interaction ensures that the application is consistently running in the desired state, with minimal manual intervention.
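As a rough illustration of the event-driven interaction described above, the following Go sketch reconciles once per change notification. It uses only the standard library: `Event`, `Cluster`, `Reconcile`, and `Run` are hypothetical names, and an in-memory map stands in for the Kubernetes API, so this is not how a real client library is wired.

```go
package main

import "fmt"

// Event is a hypothetical change notification, standing in for a watch event
// delivered by the Kubernetes API.
type Event struct {
	Name            string // name of the custom resource that changed
	DesiredReplicas int    // desired state carried by the resource
}

// Cluster is an in-memory stand-in for the observed cluster state
// (resource name -> running replicas).
type Cluster map[string]int

// Reconcile brings the observed replica count for one resource in line with
// the desired count and reports whether it had to change anything.
func Reconcile(c Cluster, ev Event) bool {
	if c[ev.Name] == ev.DesiredReplicas {
		return false // already in the desired state
	}
	c[ev.Name] = ev.DesiredReplicas
	return true
}

// Run drains the event channel, reconciling once per event, and returns the
// number of events that required a change.
func Run(c Cluster, events <-chan Event) int {
	changes := 0
	for ev := range events {
		if Reconcile(c, ev) {
			changes++
		}
	}
	return changes
}

func main() {
	events := make(chan Event, 3)
	events <- Event{Name: "web", DesiredReplicas: 3}
	events <- Event{Name: "web", DesiredReplicas: 3} // duplicate: no change needed
	events <- Event{Name: "web", DesiredReplicas: 5}
	close(events)

	cluster := Cluster{}
	fmt.Println(Run(cluster, events), cluster["web"])
}
```

Because `Reconcile` acts only when observed and desired state differ, a duplicate or replayed event costs nothing.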

Analogy for Non-Technical Audiences

Imagine a chef (the operator) responsible for preparing a specific dish (the application). The recipe (the Kubernetes manifest) outlines the ingredients and steps. The chef follows the recipe, ensuring the dish is prepared correctly and consistently. The kitchen (the Kubernetes cluster) provides the necessary tools and ingredients, and the chef uses those tools and ingredients to prepare the dish as per the recipe.

The chef also monitors the dish’s progress, adjusting as needed to ensure the dish is cooked to perfection.

Key Components and Architecture

A Kubernetes operator acts as a bridge between user-defined resources and the underlying Kubernetes infrastructure. It simplifies the management of complex applications by automating tasks that would otherwise be handled manually. This automation significantly reduces operational overhead and enhances the reliability and scalability of deployed applications. The operator’s architecture allows for seamless integration with the Kubernetes API, enabling it to interact with and manipulate Kubernetes resources.

This interaction is crucial for the operator to effectively control and manage the lifecycle of the resources it manages. Operators, in essence, automate the tasks associated with deploying, updating, and monitoring applications within the Kubernetes cluster.

Essential Components of an Operator

Operators are not monolithic entities; rather, they are comprised of several interconnected components. These components work together to achieve the desired level of automation. Understanding these components is key to comprehending how operators operate within the Kubernetes ecosystem.

- Custom Resource Definition (CRD): A CRD defines a new type of resource that the operator manages. This allows the operator to interact with Kubernetes resources in a structured and consistent manner. The CRD describes the schema of the custom resource, including its fields and validation rules. This structured approach simplifies the interaction between the application and the operator.

- Control Loop: This is the core logic of the operator. It continuously monitors the desired state of the custom resources and compares it to the actual state. The control loop then applies the necessary changes to achieve the desired state. This loop is essential for maintaining the desired consistency and configuration of the managed resources.

- Reconciler: The reconciler is the component responsible for applying changes to the resources. It’s the engine that drives the control loop, constantly checking the current state against the desired state and making adjustments as needed. The reconciler’s actions are carefully orchestrated to ensure minimal disruption to the cluster.

- Deployment Mechanisms: Operators need a way to be deployed and managed within the Kubernetes cluster. This involves using Kubernetes manifests (YAML or JSON files) to define the operator’s deployment, ensuring it’s integrated seamlessly with the cluster’s infrastructure. This deployment strategy allows for easy scaling and management of the operator within the cluster.

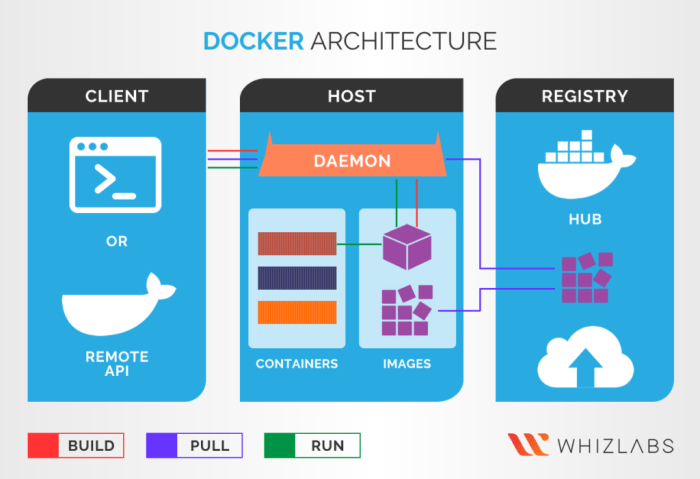

Operator-Kubernetes API Interaction

The operator’s interaction with Kubernetes API objects is fundamental to its functionality. The operator leverages the Kubernetes API server to monitor and manipulate the state of resources. This interaction allows the operator to observe changes and react accordingly.

- API Server Communication: The operator interacts with the Kubernetes API server to monitor custom resources and changes in the cluster. This continuous monitoring is essential for ensuring that the operator can respond to changes in the application’s desired state.

- Resource Events: The operator listens for events related to the custom resources it manages. These events signal changes in the resource’s status, allowing the operator to react appropriately. This allows the operator to react dynamically to changes in the application’s configuration.

- Resource Updates: The operator uses the API server to create, update, or delete Kubernetes resources based on the defined reconciliation logic. This interaction allows the operator to enforce the desired state of the application.

Deployment and Management

Deploying and managing operators involves several crucial steps. Proper deployment ensures seamless integration with the Kubernetes cluster and simplifies the management of the operator.

- Deployment Methodologies: Operators are packaged as container images and usually run in the cluster as a Kubernetes Deployment; a DaemonSet is an alternative when the operator must run on every node. The choice depends on the specific needs and complexity of the application.

- Configuration Management: Proper configuration of the operator is vital for its effective functioning. Configuration management tools ensure consistency and simplify modifications to the operator’s behavior.

- Monitoring and Logging: Continuous monitoring of the operator’s performance is critical for troubleshooting and maintaining its effectiveness. Robust logging mechanisms provide valuable insights into the operator’s behavior.

Operator Lifecycle

The operator lifecycle spans various stages, from deployment to termination. Understanding these stages is crucial for effective operator management.

- Initialization: The operator initializes and connects to the Kubernetes API server, ensuring proper communication with the cluster. This initial stage is crucial for establishing a functional connection to the Kubernetes environment.

- Monitoring: The operator continuously monitors the status of managed resources, ensuring that they align with the desired state. This monitoring is the core function of the operator.

- Reconciliation: The operator reconciles the actual state of the resources with the desired state. This involves creating, updating, or deleting resources to maintain the desired configuration. This is the crucial step in ensuring the consistency of the application.

- Termination: The operator gracefully terminates its operations when necessary. This termination process ensures that any resources are properly cleaned up.
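The four stages above can be sketched as a single loop. This is a minimal, standard-library-only sketch: the `Operator` type, its `Log` field, and the `Demo` driver are hypothetical, and a channel of ticks stands in for whatever triggers monitoring in a real operator.

```go
package main

import (
	"context"
	"fmt"
)

// Operator is a hypothetical skeleton; Log records the stage transitions.
type Operator struct {
	Log []string
}

// Run initializes, then reconciles once per tick until ctx is cancelled,
// and finally records a clean termination.
func (o *Operator) Run(ctx context.Context, ticks <-chan struct{}) {
	o.Log = append(o.Log, "initialized")
	for {
		select {
		case <-ctx.Done():
			o.Log = append(o.Log, "terminated") // cleanup would happen here
			return
		case <-ticks:
			o.Log = append(o.Log, "reconciled")
		}
	}
}

// Demo drives the operator through two ticks and a shutdown.
func Demo() []string {
	op := &Operator{}
	ctx, cancel := context.WithCancel(context.Background())
	ticks := make(chan struct{}) // unbuffered: each send waits for the loop
	done := make(chan struct{})
	go func() {
		op.Run(ctx, ticks)
		close(done)
	}()
	ticks <- struct{}{}
	ticks <- struct{}{}
	cancel()
	<-done // wait for graceful termination before reading the log
	return op.Log
}

func main() {
	fmt.Println(Demo())
}
```

Passing a `context.Context` into the loop is one common way to make termination explicit, so cleanup runs in one place instead of being scattered across handlers.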

Resource Reconciliation

Resource reconciliation is a crucial aspect of operator functionality. It ensures that the actual state of the managed resources matches the desired state. This ensures that the application remains in the desired state and functions as intended.

- State Comparison: The operator continuously compares the current state of the managed resources to the desired state, which is often defined in the custom resource. This comparison forms the basis for the reconciliation process.

- Change Application: The operator applies the necessary changes to bring the actual state in line with the desired state. This might involve creating, updating, or deleting resources. This change application process ensures that the operator maintains the desired consistency.

- Idempotency: The operator ensures that any operation is idempotent. This means that performing the operation multiple times has the same effect as performing it once. This ensures reliability in the face of failures or intermittent network issues.
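To make the three points above concrete, here is a small sketch in Go that separates state comparison (`Plan`) from change application (`Apply`). The names and the map-based state model (resource name to configuration version) are assumptions made for illustration; a real operator would compare Kubernetes objects instead.

```go
package main

import (
	"fmt"
	"sort"
)

// Action is one change needed to move actual state toward desired state.
type Action struct {
	Op   string // "create", "update", or "delete"
	Name string
}

// Plan compares desired and actual state (resource name -> config version)
// and returns the required actions, sorted by resource name.
func Plan(desired, actual map[string]string) []Action {
	var actions []Action
	for name, want := range desired {
		have, ok := actual[name]
		switch {
		case !ok:
			actions = append(actions, Action{Op: "create", Name: name})
		case have != want:
			actions = append(actions, Action{Op: "update", Name: name})
		}
	}
	for name := range actual {
		if _, ok := desired[name]; !ok {
			actions = append(actions, Action{Op: "delete", Name: name})
		}
	}
	sort.Slice(actions, func(i, j int) bool { return actions[i].Name < actions[j].Name })
	return actions
}

// Apply executes the planned actions against the in-memory actual state.
func Apply(desired, actual map[string]string, actions []Action) {
	for _, a := range actions {
		if a.Op == "delete" {
			delete(actual, a.Name)
		} else {
			actual[a.Name] = desired[a.Name]
		}
	}
}

func main() {
	desired := map[string]string{"config": "v2", "service": "v1"}
	actual := map[string]string{"config": "v1", "old-job": "v1"}

	first := Plan(desired, actual)
	Apply(desired, actual, first)
	second := Plan(desired, actual) // a repeat run finds nothing left to do

	fmt.Println(first)
	fmt.Println(len(second))
}
```

The second `Plan` call returning no actions is what idempotency looks like in practice: rerunning reconciliation after a crash or a retried request does not change the outcome.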

Operator Development and Implementation

Developing Kubernetes operators involves creating custom controllers that manage the lifecycle of custom resources. This process often requires a deep understanding of Kubernetes APIs and the intricacies of resource management. Thorough planning and a structured approach are crucial for successful operator development.

Step-by-Step Guide to Building a Basic Operator

A fundamental approach to operator development involves defining a custom resource definition (CRD) that outlines the structure and behavior of the new resource type. The operator then monitors Kubernetes for changes to this resource and performs the necessary actions. This includes handling events such as creation, update, and deletion.

- Define the Custom Resource Definition (CRD): This step specifies the structure of the custom resource. The CRD defines the fields, types, and validation rules for the new resource. The CRD is created with `kubectl create` or `kubectl apply`, or directly through the Kubernetes API, after which the operator can interact with the new resource. The CRD determines how the operator understands and interacts with the custom resource.

- Implement the Operator Logic: This phase involves crafting the core logic of the operator. Using a chosen programming language, the code interacts with the Kubernetes API to monitor the custom resource. Event handling is essential; the operator should react appropriately to events like resource creation, update, or deletion.

- Implement the Controller Logic: The controller component is responsible for managing the lifecycle of the custom resource. It ensures the resource conforms to the defined rules and executes the necessary actions. The controller interacts with the Kubernetes API, ensuring desired state is achieved.

- Deploy the Operator: The compiled operator code is deployed to the Kubernetes cluster. This involves packaging the code into a container image and deploying it as a Kubernetes deployment. Proper configuration is crucial for successful deployment.

- Monitor and Test: After deployment, monitor the operator’s behavior and the status of the custom resources. This step involves testing the operator’s responsiveness to various events and resource states. The outcome is the successful execution of the operator’s logic in the cluster.

Programming Languages and Tools

Various programming languages and tools are commonly used in operator development. These tools aid in the development process, enhancing efficiency and maintainability.

- Go (Golang): Go is a popular choice due to its efficiency, concurrency features, and rich ecosystem of Kubernetes libraries. It is often the preferred language for creating operators.

- Python: Python, known for its readability and extensive libraries, can also be used for operator development. Its versatility makes it suitable for various tasks within the operator.

- Other Languages: Other languages like Java, Ruby, or Node.js are also used for operator development, depending on the project’s requirements.

- Kubernetes Client Libraries: These libraries provide an interface for interacting with the Kubernetes API, streamlining the process of creating operators.

- Testing Frameworks: Testing frameworks like `testing` in Go are critical for ensuring the operator’s functionality and stability.

Handling Custom Resource Definitions (CRDs)

Custom Resource Definitions (CRDs) are crucial for defining the structure of custom resources. The structure should align with the operator’s intended functionality. Understanding CRDs is essential for building effective operators.

- CRD Specification: The specification outlines the structure and validation rules for the custom resource. This involves defining the fields, their types, and any constraints or validation requirements.

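- CRD Validation: Incoming custom resource data should be validated against the CRD specification. When the CRD includes a schema, the Kubernetes API server rejects objects that do not conform to it; the operator should still check any rules the schema cannot express. This ensures that the data conforms to the expected structure and prevents errors.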

- CRD Generation: Tools and libraries can be used to generate the CRD based on a schema or template, automating this process.
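As an illustration of fields, types, and validation rules, the sketch below decodes a custom resource spec and checks it in Go. `DatabaseSpec`, its fields, and the limits are hypothetical examples rather than part of any real CRD, and only the standard library is used.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// DatabaseSpec is a hypothetical custom resource spec.
type DatabaseSpec struct {
	Replicas int    `json:"replicas"`
	Version  string `json:"version"`
}

// ParseSpec decodes a JSON spec and applies validation rules of the kind a
// CRD schema would express: a required field and a numeric range.
func ParseSpec(raw []byte) (DatabaseSpec, error) {
	var spec DatabaseSpec
	if err := json.Unmarshal(raw, &spec); err != nil {
		return spec, fmt.Errorf("decode spec: %w", err)
	}
	if spec.Version == "" {
		return spec, errors.New("version is required")
	}
	if spec.Replicas < 1 || spec.Replicas > 9 {
		return spec, errors.New("replicas must be between 1 and 9")
	}
	return spec, nil
}

func main() {
	spec, err := ParseSpec([]byte(`{"replicas": 3, "version": "16"}`))
	fmt.Println(spec, err)

	_, err = ParseSpec([]byte(`{"replicas": 0, "version": "16"}`))
	fmt.Println(err)
}
```

Keeping the spec as a typed struct means the rest of the operator never handles raw, unvalidated input.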

Testing and Debugging Operators

Testing and debugging operators are vital for ensuring reliability and stability. These methods ensure the operator behaves as expected in various situations.

- Unit Testing: Unit tests isolate specific components of the operator to verify their individual functionality. This helps identify errors early in the development process.

- Integration Testing: Integration tests verify the interactions between different components of the operator and the Kubernetes API. This approach ensures proper communication and data exchange.

- Debugging Tools: Kubernetes debugging tools aid in identifying and resolving issues within the operator. These tools help diagnose problems in the operator’s behavior.
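One way to make unit testing practical is to hide the API calls behind a narrow interface and substitute an in-memory fake. The sketch below assumes hypothetical names (`PodClient`, `FakeClient`, `EnsurePod`); in a real project the check in `main` would live in a `_test.go` file using Go's `testing` package.

```go
package main

import "fmt"

// PodClient is a hypothetical, narrow interface over the API calls the
// operator logic needs. A real implementation would call the Kubernetes API.
type PodClient interface {
	Exists(name string) bool
	Create(name string) error
}

// FakeClient is an in-memory PodClient for unit tests.
type FakeClient struct {
	Pods    map[string]bool
	Creates int // number of successful Create calls, for assertions
}

// Exists reports whether the fake already holds a pod with this name.
func (f *FakeClient) Exists(name string) bool { return f.Pods[name] }

// Create records a new pod and fails if it already exists.
func (f *FakeClient) Create(name string) error {
	if f.Pods[name] {
		return fmt.Errorf("pod %q already exists", name)
	}
	f.Pods[name] = true
	f.Creates++
	return nil
}

// EnsurePod is the logic under test: create the pod only if it is missing.
func EnsurePod(c PodClient, name string) error {
	if c.Exists(name) {
		return nil
	}
	return c.Create(name)
}

func main() {
	fake := &FakeClient{Pods: map[string]bool{}}
	for i := 0; i < 2; i++ {
		if err := EnsurePod(fake, "demo"); err != nil {
			panic(err)
		}
	}
	fmt.Println(fake.Creates) // number of creates after two calls
}
```

Integration tests would then swap the fake for a client talking to a real or local test cluster, exercising the same `EnsurePod` code path.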

Sample Operator Code Snippet (Go)

This snippet shows the startup skeleton of a simple operator that creates a Pod. It builds an in-cluster clientset and an event recorder; the custom resource registration and the Pod-creating logic are elided.

```go
package main

import (
	"fmt"

	corev1 "k8s.io/api/core/v1"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/kubernetes/scheme"
	typedcorev1 "k8s.io/client-go/kubernetes/typed/core/v1"
	"k8s.io/client-go/rest"
	"k8s.io/client-go/tools/record"
)

func main() {
	// … (rest config setup) …

	// Create a Kubernetes clientset from the in-cluster configuration.
	config, err := rest.InClusterConfig()
	if err != nil {
		panic(err)
	}
	clientset, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err)
	}

	// … (register custom resource) …

	// Create a recorder for events and send them to the API server.
	broadcaster := record.NewBroadcaster()
	broadcaster.StartRecordingToSink(&typedcorev1.EventSinkImpl{
		Interface: clientset.CoreV1().Events(""),
	})
	recorder := broadcaster.NewRecorder(scheme.Scheme, corev1.EventSource{Component: "my-operator"})
	_ = recorder // used by the elided operator logic

	// … (rest of the operator logic) …

	fmt.Println("Operator started.")
}
```

Operator Patterns and Best Practices

Kubernetes operators provide a standardized way to manage complex application deployments and configurations. Understanding common patterns and best practices is crucial for building robust, maintainable, and scalable operators. These practices ensure operators can handle the dynamic nature of Kubernetes clusters and integrate seamlessly with existing infrastructure. Implementing best practices in operator development minimizes potential challenges, leading to efficient management of applications and their resources within the cluster.

This section outlines common patterns, highlights best practices, and illustrates common tasks like deployment and scaling management. It also addresses potential challenges and outlines a simplified operator lifecycle.

Common Operator Design Patterns

Operator design often leverages established patterns to ensure consistency and efficiency. A key pattern involves leveraging Kubernetes’ declarative nature. Operators define desired states for managed resources, and the operator orchestrates the changes required to achieve these states. This allows for easier management and rollback procedures. Another important pattern is the use of custom resource definitions (CRDs).

These allow operators to extend Kubernetes with their own resource types, enabling users to manage their specific applications in a standardized manner.

Best Practices for Maintainable and Scalable Operators

Adhering to best practices ensures the longevity and scalability of operators. Operators should be designed with modularity in mind, enabling independent component updates without impacting the entire operator. Employing a well-structured architecture, including clear separation of concerns, promotes maintainability. Thorough documentation, including clear explanations of resource management, is critical for effective maintenance and troubleshooting. Implementing automated testing procedures, including unit and integration tests, ensures the reliability and stability of the operator.

Common Operator Tasks

Operators often handle various tasks, including deployment management and scaling services. For instance, an operator might manage deployments of a specific application, ensuring that the desired number of replicas are running and available. Similarly, an operator might automatically scale services based on metrics such as CPU utilization or incoming request rates. This automation simplifies resource management and optimizes application performance.

Detailed configuration management is also important, as operators can ensure resources conform to specific configuration profiles.
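The metric-based scaling mentioned above can be sketched with a proportional rule, similar in shape to the one documented for the Kubernetes Horizontal Pod Autoscaler. The function name, parameters, and example numbers are assumptions for illustration only.

```go
package main

import (
	"fmt"
	"math"
)

// DesiredReplicas scales the current replica count in proportion to the ratio
// of the observed metric to its target, then clamps the result to the range
// [minReplicas, maxReplicas].
func DesiredReplicas(current int, observed, target float64, minReplicas, maxReplicas int) int {
	n := current
	if current > 0 && target > 0 {
		n = int(math.Ceil(float64(current) * observed / target))
	}
	if n < minReplicas {
		n = minReplicas
	}
	if n > maxReplicas {
		n = maxReplicas
	}
	return n
}

func main() {
	fmt.Println(DesiredReplicas(4, 90, 60, 1, 10)) // load above target: scale up
	fmt.Println(DesiredReplicas(4, 30, 60, 1, 10)) // load below target: scale down
	fmt.Println(DesiredReplicas(3, 100, 50, 1, 5)) // result capped by maxReplicas
}
```

Clamping to a minimum and maximum keeps a noisy metric from scaling the application to zero or beyond what the cluster can hold.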

Challenges in Operator Development and Deployment

Developing and deploying operators can present several challenges. One challenge involves ensuring compatibility with different Kubernetes versions. Operators need to be designed with backward compatibility in mind to avoid disruption to existing deployments. Another key challenge involves managing dependencies and maintaining operator code quality. Thorough testing and version control practices are essential.

Furthermore, understanding the complexities of Kubernetes’ event loop and resource management is crucial for effective operator development. Monitoring and logging mechanisms must be implemented to track the operator’s actions and diagnose potential issues.

Operator Lifecycle Workflow

The following diagram illustrates a simplified operator lifecycle:

Operator Deploy --> CRD Definition --> Resource Creation --> Resource Change --> Reconciliation --> Resource Update

The operator is deployed, and a Custom Resource Definition (CRD) is created.

The operator monitors for changes to resources. When a change occurs, the operator reconciles the current state with the desired state. Finally, the operator updates the managed resources accordingly.

Operator Lifecycle Management

Managing the lifecycle of Kubernetes Operators is crucial for ensuring consistent operation and optimal resource utilization within a cluster. This involves overseeing the entire journey of an operator, from deployment to updates, failures, and eventual decommissioning. Effective operator lifecycle management minimizes disruptions, ensures reliability, and simplifies maintenance.

Deployment Strategies

Proper deployment strategies are paramount for minimizing disruption when introducing new operators or updating existing ones. Careful planning and execution are essential to avoid cascading failures or unintended consequences.

- Blue/Green Deployment: This strategy involves deploying a new version of the operator alongside the existing one. Once the new version has been verified, traffic is switched over to it, ensuring a smooth transition. This method allows for quick rollback if issues arise in the new version. For instance, a financial institution deploying a new operator for managing compliance tools might use blue/green to avoid impacting live trading operations.

- Rolling Update: In a rolling update, the operator is updated incrementally. New instances are deployed, and existing ones are gracefully shut down, ensuring that the operator continues to function throughout the update. This strategy reduces downtime but can introduce more complexity than blue/green deployments. For example, a company updating an operator for container orchestration could leverage a rolling update to minimize impact on running applications.

- Canary Deployment: A small subset of nodes or users is assigned to the new operator version. If the new version functions as expected, the update is rolled out to the rest of the cluster. This approach minimizes the risk of widespread problems and allows for controlled testing before a full rollout. For instance, a company might use canary deployment for a new operator for managing data pipelines to prevent data loss during the update process.

Update and Upgrade Mechanisms

Several methods are available for updating and upgrading operators within a Kubernetes cluster. Choosing the appropriate method depends on the specific requirements and the nature of the operator.

- Helm Charts: Helm charts provide a structured way to package and deploy operators. Updates are often managed through chart upgrades, allowing for versioning and dependency management. This method promotes consistency and facilitates tracking changes over time. This is a commonly used approach, particularly when dealing with complex operators that have multiple dependencies.

- Operator SDK: The Operator SDK facilitates the creation of custom operators. It provides tools and frameworks for managing operator versions and dependencies, allowing for streamlined upgrades. Using the Operator SDK promotes consistency in the way operators are developed and updated.

- Direct Deployment: Operators can be deployed directly using Kubernetes manifests. This approach is often used for simple operators or in cases where external tools are not available. While simpler, this method can lack the structure and automation benefits offered by other approaches.

Failure Handling and Recovery

Operators can experience failures during deployment, updates, or runtime. Robust failure handling and recovery strategies are essential to ensure operational stability.

- Automated Rollbacks: Setting up automated rollback mechanisms allows for reverting to a previous stable version of the operator in case of issues. This mitigates the risk of service disruptions and allows for quick recovery. For example, a critical operator managing a company’s security infrastructure might have automatic rollback capabilities to prevent data breaches during updates.

- Monitoring and Alerting: Implementing comprehensive monitoring and alerting systems allows for the early detection of operator issues. This facilitates swift intervention and minimizes the impact of potential failures. Real-time monitoring tools can notify administrators about unusual operator behavior, helping prevent widespread problems.

- Fault Tolerance: Operators should be designed with fault tolerance in mind. Redundancy, backups, and other strategies can help ensure that the operator can continue to function even if some components fail. For instance, an operator managing a distributed database system should incorporate strategies to ensure data consistency and availability in case of node failures.
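The automated rollback idea above can be sketched as follows. This is a simplified model, not a real deployment mechanism: `Rollout`, `Upgrade`, and the health-check callback are hypothetical, and the version strings are placeholders.

```go
package main

import "fmt"

// Rollout tracks the versions that have been made active, latest last.
type Rollout struct {
	History []string
}

// Current returns the active version, or "" if nothing is deployed.
func (r *Rollout) Current() string {
	if len(r.History) == 0 {
		return ""
	}
	return r.History[len(r.History)-1]
}

// Upgrade activates version and runs the health check. If the check fails,
// it reverts to the previous version and reports false.
func (r *Rollout) Upgrade(version string, healthy func(string) bool) bool {
	r.History = append(r.History, version)
	if healthy(version) {
		return true
	}
	r.History = r.History[:len(r.History)-1] // automated rollback
	return false
}

func main() {
	// Hypothetical health check: pretend v1.1.0 fails its readiness probe.
	healthy := func(v string) bool { return v != "v1.1.0" }

	r := &Rollout{}
	r.Upgrade("v1.0.0", healthy)
	ok := r.Upgrade("v1.1.0", healthy)
	fmt.Println(ok, r.Current())
}
```

In practice the health check is where monitoring and alerting feed in: the same signal that pages an administrator can trigger the revert.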

Operator Management Tools

Several tools aid in managing the lifecycle of operators within a Kubernetes cluster. Each tool has its strengths and weaknesses, depending on the specific needs and complexities of the operators being managed.

| Tool | Strengths | Weaknesses |
|---|---|---|
| kubectl | Built-in to Kubernetes, simple for basic tasks | Limited automation, not ideal for complex operators |
| Helm | Package management, automated upgrades | Can be complex for non-Helm-based operators |
| Operator SDK | Custom operator development, streamlined lifecycle management | Requires familiarity with the SDK |
| External Operator Lifecycle Management Tools | Comprehensive automation, enhanced monitoring | Cost, complexity of integration |

Operator Use Cases and Examples

Kubernetes operators extend the capabilities of Kubernetes by providing custom controllers for specific applications. They automate complex tasks, enabling easier deployment, management, and scaling of applications within the Kubernetes ecosystem. This section explores diverse operator use cases and illustrates their application in real-world scenarios.

Operators streamline application deployment and management, reducing manual intervention and improving automation. They offer a structured approach to handling tasks that might otherwise be complex and error-prone within a Kubernetes cluster.

Database Management Operators

Database management is a crucial aspect of any application. Operators provide automated management for various database systems. They handle tasks such as deployment, scaling, backups, and recovery. These operators can significantly reduce manual administrative overhead.

- PostgreSQL Operator: This operator automates the deployment and management of PostgreSQL databases within Kubernetes. It handles tasks such as provisioning, scaling, and managing backups of PostgreSQL instances, all managed through Kubernetes resources. This frees up administrators to focus on higher-level tasks.

- MySQL Operator: Similar to the PostgreSQL operator, this operator automates the management of MySQL databases. It handles the lifecycle of MySQL deployments, allowing administrators to focus on other aspects of application management. The operator ensures consistency and reliability in database management.

Container Orchestration Operators

Operators are not limited to database management; they can also simplify container orchestration tasks.

- Custom Container Image Operators: An operator can be designed to manage and deploy custom container images. This is especially useful when managing proprietary or specialized images, ensuring consistency and efficiency in deployments. The operator ensures the correct image is used and configured for each deployment.

- Container Registry Operators: These operators automate the deployment and management of container registries, streamlining the process of storing and managing container images. They handle tasks like image storage, security, and retrieval, improving operational efficiency.

Monitoring and Logging Operators

Operators can integrate with monitoring and logging tools, enabling automated collection and analysis of application metrics.

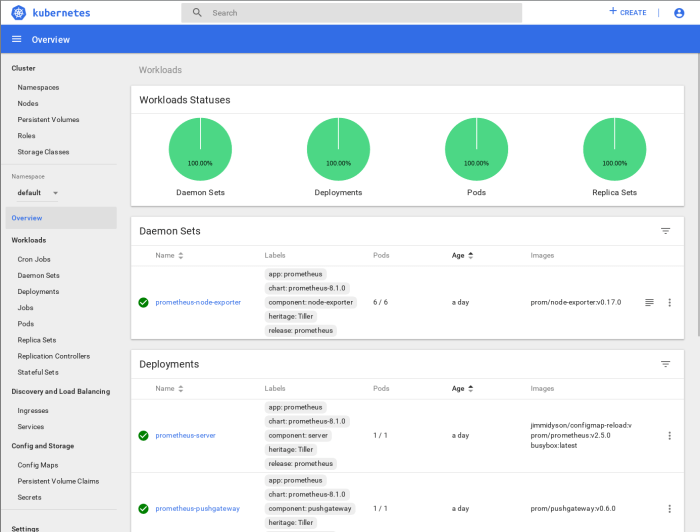

- Prometheus Operator: This operator simplifies the deployment and management of Prometheus, a popular open-source monitoring system. It handles the setup and configuration of Prometheus instances within a Kubernetes cluster, ensuring efficient monitoring of applications. This operator enables consistent and automated monitoring of application health.

- Fluentd Operator: This operator automates the deployment and configuration of Fluentd, a powerful logging system. It simplifies the integration of Fluentd into a Kubernetes environment, making it easier to collect and process logs from various applications. This operator enables automated logging for better troubleshooting and analysis.

Comparison with Other Kubernetes Tools

Operators differ from other Kubernetes tools in their focus on specific application types. While tools like Helm manage deployments, operators manage the entire lifecycle of applications, often integrating with specific tools like databases or monitoring systems.

- Helm Charts vs. Operators: Helm charts focus on application deployment, while operators manage the complete application lifecycle. Operators often integrate with external services, like databases or monitoring tools, offering a more comprehensive solution.

- Advantages of Operators over other tools: Operators provide a standardized approach to managing specific applications within Kubernetes. They automate tasks and enhance the overall reliability and scalability of applications. This results in a reduction of manual intervention, improved operational efficiency, and enhanced automation.

Automation and Manual Intervention

Operators automate complex tasks, significantly reducing manual intervention.

“Operators provide a significant reduction in manual intervention, improving automation and reliability in managing complex applications.”

Operators handle deployment, scaling, upgrades, and other crucial tasks, minimizing errors and allowing administrators to focus on strategic aspects of application management.

Operator Security Considerations


Kubernetes operators, while enhancing automation and customizability, introduce new security vectors. Understanding these vulnerabilities and implementing robust security practices are crucial for maintaining the integrity and security of your Kubernetes cluster. Proper operator security considerations are essential to prevent malicious actors from exploiting vulnerabilities and ensure the reliability of deployed applications.

Security Vulnerabilities in Operators

Operators, being custom extensions, can introduce vulnerabilities not present in core Kubernetes components. These vulnerabilities can manifest in various ways, ranging from insecure configuration to compromised dependencies. A compromised operator could potentially grant unauthorized access to sensitive cluster resources, inject malicious code, or disrupt application functionality.

Common Security Misconfigurations

Operator deployments often involve complex configurations, and improperly configured operators can lead to security breaches. Allowing unrestricted access to sensitive resources or using weak authentication mechanisms are critical security concerns. Operators may also be granted more privileges than they need, which opens the door to privilege escalation attacks. Insufficient logging and monitoring of operator activity poses a further risk, because it makes threats harder to detect and respond to.

The lack of input validation can allow malicious inputs to disrupt operator behavior. In addition, the use of outdated or vulnerable dependencies within the operator image can expose the cluster to attacks.
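As an illustration of the input validation mentioned above, the sketch below checks a custom resource's spec before the operator acts on it. The field names (`name`, `replicas`) and the `MAX_REPLICAS` limit are assumptions made for this example, not part of any real operator's API.

```python
import re

# Hypothetical limit for an illustrative custom resource.
MAX_REPLICAS = 50
# Lowercase DNS label: up to 63 characters, alphanumeric at both ends.
NAME_PATTERN = re.compile(r"[a-z0-9]([-a-z0-9]{0,61}[a-z0-9])?")


def validate_spec(spec: dict) -> list[str]:
    """Return a list of problems found in a spec (empty if it looks valid)."""
    errors = []
    name = spec.get("name")
    if not isinstance(name, str) or not NAME_PATTERN.fullmatch(name):
        errors.append("name must be a lowercase DNS label")
    replicas = spec.get("replicas")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(replicas, bool) or not isinstance(replicas, int):
        errors.append("replicas must be an integer")
    elif not 1 <= replicas <= MAX_REPLICAS:
        errors.append(f"replicas must be between 1 and {MAX_REPLICAS}")
    return errors
```

Rejecting a spec with a clear error list, instead of passing unchecked values on to later steps, keeps malformed or hostile input from steering the operator's behavior.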

Best Practices for Securing Operator Deployments

Implementing secure operator deployments requires a multi-faceted approach. This includes using least privilege principles, enforcing strict access controls, and ensuring secure communication channels. Operators should be deployed in isolated namespaces, limiting their access to necessary resources. Employing role-based access control (RBAC) is essential to restrict the permissions granted to operators. Furthermore, utilizing encrypted communication channels (e.g., TLS/SSL) is critical for protecting sensitive data transmitted between the operator and the cluster.

Regularly updating operators with security patches is essential to mitigate known vulnerabilities.
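One way to make the least-privilege principle checkable is to scan an operator's RBAC rules for wildcards. The sketch below assumes the rules have already been loaded into Python dictionaries using the `apiGroups`, `resources`, and `verbs` fields of a Kubernetes Role; the function name and the finding format are hypothetical.

```python
def find_overbroad_rules(rules: list[dict]) -> list[str]:
    """Flag RBAC rules that use "*" for API groups, resources, or verbs."""
    findings = []
    for index, rule in enumerate(rules):
        for field in ("apiGroups", "resources", "verbs"):
            if "*" in rule.get(field, []):
                findings.append(f"rule {index}: wildcard in {field}")
    return findings


# Example input: the first rule is scoped, the second grants everything.
example_rules = [
    {"apiGroups": ["apps"], "resources": ["deployments"],
     "verbs": ["get", "list", "watch", "update"]},
    {"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]},
]
```

A check like this could run in a review or build step so that overly broad permissions are caught before the operator is deployed.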

Validating Operator Images and Configurations

Validating operator images and configurations is paramount to ensure their security. The process of validating operator images involves scanning the images for vulnerabilities, checking for known malicious code, and verifying the integrity of the image. Operator configurations should also be validated to ensure they adhere to security policies and avoid potentially harmful configurations. A thorough examination of operator dependencies, including libraries and external packages, is crucial for detecting vulnerabilities.
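Part of verifying image integrity is making sure a deployment refers to an immutable image. A simple sketch, assuming image references are available as strings, is to check that each one is pinned by a `sha256` digest rather than a mutable tag. The registry and image names used with it are placeholders, and this check complements vulnerability scanning rather than replacing it.

```python
import re

# An image reference pinned by digest ends with "@sha256:" and 64 hex digits.
DIGEST_SUFFIX = re.compile(r"@sha256:[0-9a-f]{64}$")


def is_pinned_by_digest(image: str) -> bool:
    """Return True if the image reference ends with a sha256 digest."""
    return DIGEST_SUFFIX.search(image) is not None


def unpinned_images(images: list[str]) -> list[str]:
    """Return the image references that rely on a mutable tag (or no tag)."""
    return [image for image in images if not is_pinned_by_digest(image)]
```

For example, `unpinned_images(["registry.example.com/ops/db-operator:latest"])` returns that reference, because a tag such as `latest` can be moved to different content over time.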

Importance of Regular Security Audits

Regular security audits help identify weaknesses in operators before they are exploited. An audit should review the operator's code for vulnerabilities, assess its configuration, and verify the integrity of the deployed operator image. Automated security scanning tools can make these audits more thorough and easier to repeat.

A comprehensive approach includes periodic reviews of operator code, configurations, and dependencies, proactively addressing any potential security gaps.

Operator Monitoring and Logging

Effective monitoring and logging are crucial for Kubernetes operators to ensure optimal performance, detect and resolve issues promptly, and maintain a high degree of reliability. Comprehensive monitoring allows for proactive management of operator health and performance, reducing downtime and enabling rapid troubleshooting. Thorough logging provides valuable insights into operator behavior, facilitating understanding of its interactions with the Kubernetes cluster.

Monitoring and logging strategies for operators must be carefully designed to address the unique needs and complexities of operator deployments. This involves selecting appropriate tools, implementing efficient data collection mechanisms, and establishing clear procedures for analyzing and interpreting the collected data. A robust monitoring strategy can significantly enhance the overall stability and usability of the operators.

Monitoring Operator Performance and Health

Operator performance is crucial for the smooth functioning of applications and services deployed within the Kubernetes cluster. Monitoring tools can track various aspects of operator behavior, including resource consumption (CPU, memory), request latency, and error rates. These metrics are vital for understanding operator performance and identifying potential bottlenecks. Prometheus, Grafana, and custom metrics let teams track resource usage, resource requests, and overall performance trends.

Collecting and Analyzing Operator Logs

Collecting and analyzing logs is a fundamental aspect of troubleshooting and identifying issues with operators. Operator logs provide insights into the operator’s internal state, actions taken, and interactions with the Kubernetes API. Tools such as Fluentd and the ELK stack (Elasticsearch, Logstash, Kibana) are often used to aggregate, process, and analyze these logs. Centralized logging enables efficient searching, filtering, and correlation of events to identify patterns and root causes of issues.

Log rotation policies keep local disk usage bounded, while retention settings in the centralized store determine how long historical data stays searchable.
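Logs are easier to aggregate and search when each entry is structured. The sketch below uses Python's standard `logging` module to emit one JSON object per line; the logger name and the `resource` and `action` fields are hypothetical examples of context an operator might attach.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # "resource" and "action" are hypothetical fields passed via `extra=`.
        for key in ("resource", "action"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry, sort_keys=True)


def build_logger(stream) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger("example-operator")
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


# Usage: write to an in-memory buffer here; a real process would use stdout.
buffer = io.StringIO()
log = build_logger(buffer)
log.info("reconciled", extra={"resource": "default/orders-db", "action": "scale"})
```

Because every line is valid JSON, a log collector can index fields such as `resource` directly instead of parsing free-form text.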

Identifying and Troubleshooting Operator Issues

Identifying and troubleshooting operator issues requires a systematic approach that leverages monitoring data and logs. Analyzing metrics, such as error rates, latency, and resource consumption, can pinpoint performance problems. Analyzing logs helps understand the reasons behind errors, exceptions, or unexpected behavior. Correlating metrics and logs allows for a comprehensive understanding of the problem, leading to efficient troubleshooting.

Establishing clear procedures for logging and monitoring helps operators quickly identify and resolve problems, minimizing disruption to the cluster and deployed applications.

Tools and Techniques for Tracking Operator Metrics

Various tools and techniques are employed for tracking operator metrics. Kubernetes components expose their own metrics in the Prometheus text format, typically on a `/metrics` HTTP endpoint, and operators commonly follow the same convention. Prometheus, a popular open-source monitoring system, collects and stores these metrics and makes them queryable. Grafana, a visualization tool, provides dashboards for monitoring operator metrics and their trends. Custom metrics can be defined and exposed for specific operator functionalities, enabling more granular monitoring and analysis.

This granular view of metrics provides a deeper understanding of operator behavior and lets teams tailor performance and stability monitoring to the specific needs of the application being managed.
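To make custom metrics concrete, the sketch below renders a counter in the Prometheus text exposition format using only the standard library. The metric name `example_reconcile_total` and its `result` label are made up for illustration; in practice an established client library would normally handle this formatting and serve it over HTTP.

```python
def _escape(value: str) -> str:
    """Escape backslashes, double quotes, and newlines in a label value."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_counter(name: str, help_text: str, samples: list) -> str:
    """Render a counter as Prometheus text.

    `samples` is a list of (labels, value) pairs, where labels is a dict.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples:
        label_text = ",".join(
            f'{key}="{_escape(str(val))}"' for key, val in sorted(labels.items())
        )
        selector = f"{{{label_text}}}" if label_text else ""
        lines.append(f"{name}{selector} {value}")
    return "\n".join(lines) + "\n"


text = render_counter(
    "example_reconcile_total",
    "Reconcile attempts.",
    [({"result": "success"}, 12), ({"result": "error"}, 1)],
)
```

Splitting a single counter by a `result` label makes it possible to derive an error rate from one metric, which supports the kind of granular analysis described above.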

Monitoring Tools and Functionalities

This table outlines common monitoring tools and their functionalities:

| Tool | Functionality |
|---|---|
| Prometheus | Collects and stores metrics from various sources, including operators. Provides a query language for retrieving and analyzing metrics. |
| Grafana | Visualizes metrics collected by Prometheus and other monitoring systems. Allows creation of dashboards for tracking key performance indicators (KPIs). |
| Fluentd | Collects and routes logs from various sources, including operators, to other systems like Elasticsearch. |
| Elasticsearch, Logstash, Kibana (ELK stack) | Centralized logging platform that stores, processes, and analyzes logs. Enables advanced searching, filtering, and visualization of logs. |
| Kubernetes Metrics Server | Collects CPU and memory usage for nodes and pods and serves it through the Metrics API, mainly for autoscaling and `kubectl top`. It is not intended as a general-purpose monitoring source. |

Scaling and Performance of Operators

Operators, designed to automate Kubernetes deployments, must be robust and scalable to manage complex and evolving workloads. Efficient operator scaling and performance are critical for maintaining application availability and responsiveness, especially in large-scale deployments. This section details strategies for achieving this, highlighting potential bottlenecks and providing architectural frameworks for building scalable operators.

Scaling Strategies for Operators

Effective scaling strategies are essential for operators to handle increasing workloads without compromising performance. These strategies encompass both horizontal and vertical scaling, along with considerations for resource allocation. Horizontal scaling involves deploying multiple operator instances, distributing the load across them. Vertical scaling, on the other hand, involves increasing the resources allocated to individual operator instances.

- Horizontal Scaling: Deploying multiple operator instances can spread the workload and allow a larger number of resources to be managed. Because two instances reconciling the same resource can conflict, replicas are commonly coordinated through leader election (one active instance, the others on standby) or by partitioning resources among instances so that each resource has exactly one owner. An even partitioning scheme is crucial to distribute work equitably across instances.

- Vertical Scaling: Increasing the resources (CPU, memory) allocated to individual operator instances can improve performance. However, this strategy may not be sufficient for very large deployments, and it may lead to increased costs.

- Resource Allocation Optimization: Proper resource allocation is crucial. Over-provisioning resources may lead to unnecessary costs, while under-provisioning can cause performance bottlenecks. A meticulous analysis of resource requirements is essential for effective operator scaling.
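For horizontal scaling, work has to be divided so that each resource is handled by exactly one instance. The sketch below shows one possible approach, hash-based partitioning by resource key. It is an assumption made for illustration, not how any particular operator framework distributes work, and the `namespace/name` keys are placeholders.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a resource key to a shard index in a stable, deterministic way."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def owned_keys(keys: list[str], shard_index: int, shard_count: int) -> list[str]:
    """Return the keys that the instance with this shard index should handle."""
    return [key for key in keys if shard_for(key, shard_count) == shard_index]
```

A cryptographic hash is used instead of Python's built-in `hash()` because the built-in is randomized per process for strings, which would make different instances disagree about ownership. Note that changing `shard_count` reassigns many keys, so this simple modulo scheme suits a fixed number of instances.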

Optimizing Operator Performance

Optimizing operator performance involves various techniques to enhance responsiveness and efficiency. These techniques span code optimization, efficient data processing, and careful resource management.

- Code Optimization: Employing efficient algorithms and data structures is critical. Minimizing unnecessary computations and streamlining code logic can drastically improve performance. Profiling tools can be instrumental in identifying performance bottlenecks within the operator’s code.

- Efficient Data Processing: Strategies for handling data efficiently are paramount. Operators often interact with large amounts of data. Caching frequently accessed data, utilizing optimized data structures, and avoiding unnecessary data transformations are vital.

- Asynchronous Operations: Employing asynchronous operations can enhance operator performance, especially when dealing with long-running tasks. This allows the operator to handle multiple requests concurrently, preventing delays and improving responsiveness.
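The asynchronous approach in the list above can be sketched with Python's `asyncio`: several resources are reconciled concurrently, while a semaphore caps how many slow calls are in flight at once. The `reconcile_one` body is a stand-in (a short sleep) for a real API call, and the resource names are placeholders.

```python
import asyncio


async def reconcile_one(name: str, limiter: asyncio.Semaphore) -> str:
    """Pretend to reconcile one resource while holding a concurrency slot."""
    async with limiter:
        await asyncio.sleep(0.01)  # stand-in for a slow network or API call
        return f"{name}: ok"


async def reconcile_all(names: list[str], max_concurrency: int = 4) -> list[str]:
    """Reconcile all resources concurrently, at most max_concurrency at a time."""
    limiter = asyncio.Semaphore(max_concurrency)
    tasks = (reconcile_one(name, limiter) for name in names)
    # gather returns results in the same order as the inputs.
    return await asyncio.gather(*tasks)


results = asyncio.run(reconcile_all(["db-a", "db-b", "db-c"]))
```

Bounding concurrency matters because unbounded parallel requests can overload the API server the operator depends on.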

Common Bottlenecks in Operator Performance

Understanding potential bottlenecks in operator performance is crucial for effective troubleshooting and mitigation. These bottlenecks often arise from resource constraints, inefficient code, or communication issues.

- Resource Contention: Competition for resources (CPU, memory, network bandwidth) among the operator, other Kubernetes components, and applications can lead to performance degradation. Careful resource management and scheduling are vital.

- Network Latency: Network communication delays can introduce significant bottlenecks. Operators often need to communicate with various components in the cluster. Optimizing network communication protocols and ensuring low latency connections are essential.

- Data Processing Delays: Inefficient data processing within the operator can cause substantial delays. Complex data transformations or inefficient data structures can significantly hinder performance. Analyzing and optimizing data processing steps is essential.

Designing Scalable Operator Architectures

Designing scalable operator architectures is a critical aspect of ensuring operator robustness and reliability. These architectures must be modular, maintainable, and capable of handling increasing workloads.

- Modular Design: Dividing the operator into smaller, independent modules allows for better maintainability, scalability, and testing. This modular approach isolates concerns and facilitates easier updates.

- Microservices Architecture: Adopting a microservices architecture for operators can improve scalability and resilience. This approach allows for independent scaling of different parts of the operator.

- Caching Strategies: Implementing caching strategies can significantly improve performance by reducing the need to repeatedly fetch data. This approach reduces the operator’s workload and enhances responsiveness.
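As a minimal sketch of the caching strategy listed above, the class below keeps a value for a fixed time-to-live and only calls the loader again once the entry has expired. The class name and the injectable clock are choices made for this example. Operator client libraries often ship their own watch-based caches, which would usually be preferable to a hand-written one.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Cache values per key and reload them after ttl_seconds have passed."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: dict[str, tuple[float, Any]] = {}

    def get(self, key: str, loader: Callable[[], Any]) -> Any:
        """Return the cached value, calling loader() only on a miss or expiry."""
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]
        value = loader()
        self._entries[key] = (now, value)
        return value
```

The time-to-live is a trade-off: a longer value saves more requests but increases the chance that the operator acts on stale data.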

Comparison of Scaling Approaches

Different approaches to scaling operator deployments have varying trade-offs. Careful consideration of these trade-offs is essential for selecting the most appropriate approach.

| Scaling Approach | Advantages | Disadvantages |
|---|---|---|
| Horizontal Scaling | Improved resource utilization, reduced latency, enhanced availability | Increased operational complexity, potential for load imbalance |
| Vertical Scaling | Simpler implementation, easier management | Limited scalability, potential for resource bottlenecks, cost implications |

Integrating Operators with Other Tools

Operators, while powerful, are most effective when integrated with other Kubernetes tools and services. Integration improves automation, observability, and the overall efficiency of deployment and management, and it covers the entire lifecycle of an operator and its associated resources rather than a single point of interaction. By connecting operators to CI/CD pipelines, monitoring tools, and existing infrastructure, organizations can optimize resource utilization and keep performance consistent.

Integrating with CI/CD Pipelines

Operators, by nature, are complex deployments. Integrating them into CI/CD pipelines ensures that updates and deployments are handled reliably and consistently. This automated process reduces the likelihood of errors and allows for continuous improvement. The integration process typically involves packaging the operator code within the CI/CD pipeline, triggering deployments on successful builds, and validating the operator’s functionality against pre-defined tests.

This integration approach enables continuous delivery of operators, thereby enhancing agility and responsiveness to changing needs.

Integrating with Monitoring and Logging Systems

Monitoring and logging are essential for understanding the health and performance of operators. Integration with monitoring and logging systems provides valuable insights into operator behavior, resource consumption, and potential issues. This integration often involves exposing metrics and logs from the operator to the monitoring system, allowing for real-time tracking and analysis. For instance, operators can report metrics like CPU usage, memory consumption, and request latency, which can be visualized on dashboards.

Similarly, logging functionalities can capture events like deployments, failures, and configuration changes, allowing for comprehensive analysis of the operator’s lifecycle.

Integrating with Existing Infrastructure

Integrating operators with existing infrastructure is often crucial for smooth transition and efficient utilization of resources. This integration involves defining the operator’s interaction with the existing infrastructure and ensuring compatibility. For example, if the operator interacts with databases or other external services, clear communication channels need to be established. A well-defined API contract between the operator and the existing infrastructure facilitates seamless integration.

Careful consideration must be given to the security implications of this interaction to prevent unauthorized access and ensure data integrity.

Interaction Diagram

This diagram depicts a simplified interaction between operators and various Kubernetes tools and services. The operator (represented by the central box) interacts with the Kubernetes API server, CI/CD pipeline, monitoring system, logging system, and existing infrastructure (external services). The arrows illustrate the data flow and communication channels between these components. The operator interacts with the Kubernetes API to manage resources.

The CI/CD pipeline handles deployments and updates. Monitoring and logging systems track the operator’s performance. The operator interacts with the external infrastructure to manage external resources.

Future Trends in Operator Development

Kubernetes operators, now a crucial component of cloud-native deployments, are poised for significant evolution. Emerging trends and technologies are driving innovation, pushing the boundaries of automation and management in complex systems. This section explores these advancements, examining new frameworks, tools, and the potential impact of AI and cloud-native technologies on operator development.

Emerging Trends and Technologies

Operator development is moving beyond basic deployments to encompass more sophisticated functionalities. This includes integrating advanced automation techniques, enhanced monitoring capabilities, and improved security features. Furthermore, there’s a growing emphasis on interoperability and standardized approaches to operator creation and deployment, enabling greater flexibility and maintainability.

New Operator Frameworks and Tools

Several frameworks and tools are simplifying operator development and deployment. These tools often leverage existing technologies, like declarative configuration management, to streamline the process. They may incorporate features like automated testing, improved debugging capabilities, and enhanced documentation generation. Examples include open-source frameworks such as Operator SDK and Kubebuilder, which scaffold operator projects and generate much of the boilerplate. These frameworks can help accelerate operator development, reduce errors, and improve the overall quality of operators.

AI and Machine Learning in Operator Development

The application of AI and machine learning techniques promises to revolutionize operator development. AI can assist in automating complex tasks, like resource allocation and fault detection. Machine learning models can be trained on historical data to predict potential issues and optimize operator performance. For example, operators could be trained to anticipate and address resource bottlenecks before they impact applications, enhancing overall system stability and efficiency.

The potential to leverage machine learning for self-healing and proactive management is particularly significant.

Cloud-Native Technologies and their Impact

Cloud-native technologies are significantly influencing operator development. The increasing adoption of serverless functions, container orchestration, and microservices architectures necessitates operators that can adapt and integrate with these technologies seamlessly. Operators must be able to manage and orchestrate resources across these diverse environments, which demands a deep understanding of the cloud-native landscape. The interplay between cloud-native technologies and operator development will continue to shape the future of application deployment and management.

Future Challenges and Opportunities

The operator landscape faces challenges in scalability, security, and maintenance. Maintaining compatibility across different Kubernetes versions, ensuring security of operators themselves, and ensuring the long-term maintainability of operators are significant concerns. However, these challenges also present opportunities for innovation. New solutions to these issues could result in more robust, reliable, and secure operator deployments, benefiting enterprises adopting Kubernetes and cloud-native technologies.

There is significant potential for future growth in this space.

Ending Remarks

In summary, Kubernetes operators provide a powerful and efficient way to manage applications in Kubernetes. They automate complex tasks, improve consistency, and reduce operational overhead. Understanding their architecture, development process, and various use cases is crucial for leveraging their potential in modern application deployments. By understanding and implementing operators effectively, organizations can significantly streamline their application lifecycle management.

User Queries

What are the common challenges in operator development?

Common challenges in operator development include maintaining compatibility with Kubernetes releases, ensuring proper resource reconciliation, and addressing potential security vulnerabilities. Thorough testing and debugging are crucial for creating robust and reliable operators.

How do operators interact with Kubernetes API objects?

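Operators interact with the Kubernetes API through custom resources. A custom resource definition (CRD) registers a new resource type and its schema with the API server. The operator then watches objects of that type and reconciles the cluster to match them, creating or updating built-in objects such as Deployments and Services as needed. This lets the operator understand and manage application-specific configurations.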

What are some common operator use cases beyond database management?

Operators are not limited to database management. They can also manage monitoring and logging stacks and other operationally complex software, covering many aspects of application lifecycle management. They empower users to automate specific workflows and tasks within their applications.

What are the key differences between operators and other Kubernetes tools?

Operators differ from other Kubernetes tools in their focus on automating the management of specific applications or tasks. Built-in resources such as Deployments and Services handle general-purpose workloads, and tools like Helm package and install manifests, whereas operators target particular applications and their unique operational requirements.