Embarking on the journey of microservices architecture often leads to a critical question: how do we manage data effectively? The Database-per-Service pattern answers it by giving each microservice its own dedicated database. This stands in stark contrast to the single shared database typical of a monolith, and it offers benefits like independent scalability and fault isolation. But what exactly does this entail, and how does it change the way you build distributed systems?

This comprehensive exploration delves into the core concepts of the Database-per-Service pattern. We’ll uncover its advantages and disadvantages, dissect implementation strategies, and examine crucial aspects like data replication, communication, and design considerations. Moreover, we’ll look at real-world examples and alternative patterns, equipping you with the knowledge to make informed decisions about your microservices architecture.

Introduction to Database-per-Service

The Database-per-Service pattern is a crucial architectural approach in microservices, significantly impacting data management and service independence. It addresses the challenges of data access and ownership in a distributed environment, promoting autonomy and scalability. This introduction explores the core principles, relationships with microservices, and practical applications of this pattern.

Core Concept of Database-per-Service

The fundamental principle of the Database-per-Service pattern is that each microservice has its own dedicated database. A single microservice owns and manages its data, and other services can read or change that data only through the owning service’s API, never by querying its database directly. This contrasts with a monolithic architecture, where a single database often serves the entire application. This approach fosters loose coupling and allows for independent scaling and evolution of individual services.
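A minimal sketch of the ownership rule, assuming two hypothetical services (`ProductService`, `OrderService`) that each hold a private in-memory SQLite database. The order service learns a price only by calling the product service’s public method; it never opens the product database. In a real deployment that call would be an HTTP or gRPC request and the databases would be separate servers.

```python
import sqlite3


class ProductService:
    """Owns the product database; no other service connects to it."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")  # private to this service
        self._db.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price_cents INTEGER)")

    def add_product(self, sku: str, price_cents: int) -> None:
        with self._db:  # commits on success, rolls back on error
            self._db.execute("INSERT INTO products VALUES (?, ?)", (sku, price_cents))

    def get_price(self, sku: str) -> int:
        """Public API: the only way other services read product data."""
        row = self._db.execute(
            "SELECT price_cents FROM products WHERE sku = ?", (sku,)
        ).fetchone()
        if row is None:
            raise KeyError(sku)
        return row[0]


class OrderService:
    """Owns a separate order database and talks to ProductService only via its API."""

    def __init__(self, products: ProductService) -> None:
        self._db = sqlite3.connect(":memory:")  # a different database
        self._db.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, sku TEXT, qty INTEGER, total_cents INTEGER)"
        )
        self._products = products

    def place_order(self, sku: str, qty: int) -> int:
        price = self._products.get_price(sku)  # API call, not a cross-database join
        with self._db:
            cur = self._db.execute(
                "INSERT INTO orders (sku, qty, total_cents) VALUES (?, ?, ?)",
                (sku, qty, price * qty),
            )
        return cur.lastrowid

    def total_of(self, order_id: int) -> int:
        return self._db.execute(
            "SELECT total_cents FROM orders WHERE id = ?", (order_id,)
        ).fetchone()[0]


products = ProductService()
products.add_product("sku-1", 1250)
orders = OrderService(products)
oid = orders.place_order("sku-1", 3)
print(orders.total_of(oid))  # 3750
```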

Microservices and the Pattern

Microservices are an architectural style that structures an application as a collection of small, autonomous services, modeled around a business domain. These services communicate with each other, often through APIs. The Database-per-Service pattern is a natural fit for microservices because it reinforces their independent nature. Each microservice operates on its data, isolated from other services, which reduces the risk of cascading failures and allows for independent technology choices for each service’s database.

Beneficial Business Use Case Scenario

Consider an e-commerce platform. Using the Database-per-Service pattern, we can structure the platform with microservices like:

- Product Service: Manages product information, including details, descriptions, and pricing. Its database would store product-specific data.

- Order Service: Handles order creation, processing, and tracking. Its database would store order-related data, such as customer details, order items, and shipping information.

- Customer Service: Manages customer profiles, including contact information, purchase history, and preferences. Its database would store customer-specific data.

In this scenario, each service operates on its dedicated database. For example, when a customer places an order, the Order Service writes the order details to its own database, while the Product Service’s database remains unaffected. As a result, a failure in the Product Service’s database does not necessarily take down the Order Service, which increases the overall resilience of the e-commerce platform.

Furthermore, each service can scale independently. If there’s a surge in orders, the Order Service and its associated database can scale without affecting the Customer Service or Product Service databases. This independent scaling is a key benefit of the Database-per-Service pattern.

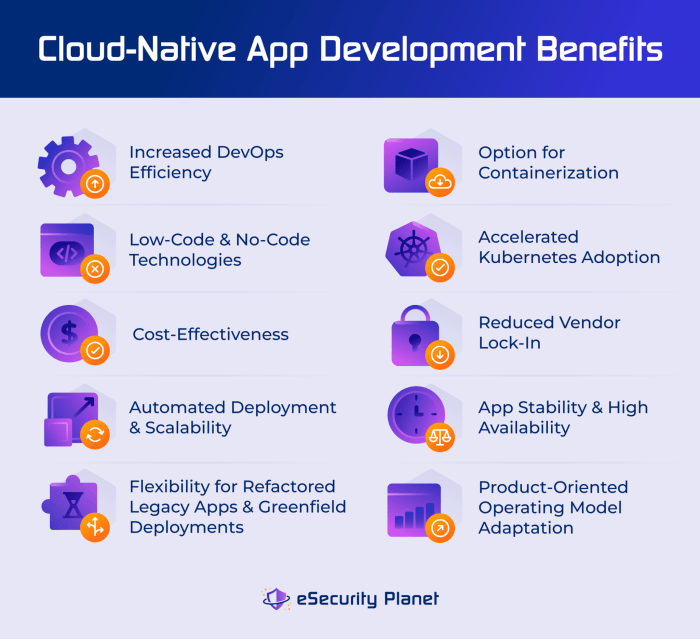

Advantages of the Pattern

The database-per-service pattern offers significant advantages in building and maintaining microservice architectures. By isolating data stores for each service, this pattern unlocks benefits related to scalability, fault tolerance, and data management. These advantages contribute to more resilient, independent, and manageable microservice systems.

Independent Scalability for Each Microservice

The database-per-service pattern enables each microservice to scale independently based on its specific needs. This is a crucial advantage in systems where different services experience varying levels of load.

- Optimized Resource Allocation: Each service can be scaled up or down independently, based on its resource demands. For example, a “product catalog” service might experience heavy read traffic, requiring a database optimized for read operations and a larger number of read replicas. Conversely, a “user profile” service, handling less frequent updates, might need less database capacity.

- Reduced Infrastructure Costs: Scaling only the services that require it prevents over-provisioning resources. Instead of scaling the entire database infrastructure, only the databases associated with the services experiencing increased load are scaled. This leads to more efficient resource utilization and cost savings.

- Enhanced Performance: Independent scaling allows for performance tuning specific to each service’s data access patterns. Different database technologies or configurations can be chosen based on the service’s requirements. For example, a service requiring complex analytical queries might use a data warehouse, while a service needing high-throughput key-based reads and writes could use a NoSQL key-value store.

- Example: Consider an e-commerce platform. During a seasonal sale, the “product catalog” and “order processing” services will likely experience significantly higher traffic than the “user profile” service. With the database-per-service pattern, the databases supporting the catalog and order processing can be scaled up to handle the increased load, while the user profile database can remain at its normal capacity, ensuring optimal performance across the platform.

Improved Fault Isolation Within a System

The database-per-service pattern enhances fault isolation, preventing failures in one service from cascading and affecting other parts of the system. This isolation is crucial for maintaining system availability and resilience.

- Limited Blast Radius: A failure in one service’s database, such as a database outage or data corruption, will primarily impact only that service. Other services, with their own isolated databases, will continue to function, maintaining overall system availability.

- Reduced Dependencies: Services are less dependent on each other’s data stores. This means that a change or issue in one database is less likely to directly affect other services.

- Faster Recovery: In the event of a database failure, recovery efforts can be focused on the affected service without impacting the entire system. This allows for faster restoration of service and reduced downtime.

- Example: Imagine a social media platform. If the database for the “post feed” service experiences an issue, users might not see new posts. However, the “user profile” service, managing user accounts and settings, should remain unaffected, allowing users to log in, update their profiles, and interact with other parts of the platform.
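The social media example can be sketched as a hypothetical page composer that calls each service separately and degrades gracefully when one of them fails. `ProfileService`, `FeedService`, `FeedUnavailable`, and `render_home` are illustrative names, and dictionaries stand in for each service’s own database; a real system would also wrap these calls in timeouts and circuit breakers.

```python
class FeedUnavailable(Exception):
    """Raised when the post-feed service's database cannot be reached."""


class ProfileService:
    def __init__(self) -> None:
        self._profiles = {"alice": {"name": "Alice", "bio": "hello"}}  # its own store

    def get_profile(self, user: str) -> dict:
        return self._profiles[user]


class FeedService:
    def __init__(self, healthy: bool = True) -> None:
        self.healthy = healthy  # simulates the feed database being up or down
        self._posts = {"alice": ["post 1", "post 2"]}  # a separate store

    def get_feed(self, user: str) -> list:
        if not self.healthy:
            raise FeedUnavailable("feed database is down")
        return self._posts.get(user, [])


def render_home(user: str, profiles: ProfileService, feed: FeedService) -> dict:
    """Compose a page; a feed outage degrades the page instead of failing it."""
    page = {"profile": profiles.get_profile(user)}  # unaffected by the feed outage
    try:
        page["feed"] = feed.get_feed(user)
        page["feed_degraded"] = False
    except FeedUnavailable:
        page["feed"] = []  # show an empty feed rather than an error page
        page["feed_degraded"] = True
    return page


outage = render_home("alice", ProfileService(), FeedService(healthy=False))
print(outage["profile"]["name"], outage["feed_degraded"])  # Alice True
```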

Simplified Data Management and Reduced Dependencies

The database-per-service pattern simplifies data management by giving each service ownership and control over its data. This promotes autonomy and reduces dependencies between services, making the overall system more manageable.

- Clear Data Ownership: Each service is responsible for its own data model and schema. This simplifies data governance, and because only the owning service writes a given piece of data, it reduces the risk of conflicting updates from different parts of the system.

- Decoupled Data Models: Services can evolve their data models independently without affecting other services. This flexibility allows for faster development cycles and easier adaptation to changing business requirements.

- Reduced Data Dependencies: Services only need to understand and interact with the data relevant to their specific functions. This minimizes the need for complex joins and data transformations across service boundaries.

- Easier Technology Selection: Services can choose the database technology that best suits their needs, without being constrained by the requirements of other services. For instance, one service might use a relational database for structured data, while another uses a NoSQL database for unstructured data.

- Example: An e-commerce platform’s “product catalog” service can independently manage its product data, including details like product descriptions, prices, and images, using a database optimized for search and retrieval. The “order processing” service, on the other hand, can manage order-related data, such as order details, customer information, and payment status, using a database optimized for transactional operations. Each service can evolve its data model without impacting the other.

Disadvantages of the Pattern

While the database-per-service pattern offers significant benefits, it’s crucial to acknowledge its inherent drawbacks. Adopting this pattern introduces complexities that must be carefully considered and addressed to ensure successful microservice implementation. These challenges primarily revolve around data consistency, transaction management, and operational overhead.

Data Consistency Challenges

Maintaining data consistency across multiple databases presents a significant hurdle. Each microservice owns its data, leading to potential inconsistencies if not managed correctly.

- Data Duplication and Synchronization: Data may need to be duplicated across services to facilitate queries or relationships. For instance, consider an e-commerce system where customer information is stored in the `customer` service and order details in the `order` service. To display customer details alongside order information, the `order` service might need a copy of the customer’s name. This duplication requires robust synchronization mechanisms to keep the copies consistent across databases; failure to do so can lead to stale or incorrect data being presented to users.

- Referential Integrity: Enforcing referential integrity across multiple databases is complex because traditional foreign key constraints only work within a single database. Imagine a scenario where an order in the `order` service references a product in the `product` service. Without proper coordination, deleting a product in the `product` service could leave the `order` service with orphaned references, leading to data corruption. Solutions include application-level checks, eventual consistency models, and techniques such as the Saga pattern.

- Complex Joins: Performing joins across databases owned by different microservices can be challenging. Queries requiring data from multiple services necessitate techniques like data aggregation, materialized views, or using a service that acts as a data gateway. These approaches add complexity to query design and execution.
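One common way to keep the duplicated customer name in the `order` service current is for it to consume change events from the `customer` service and update a local copy. This is a minimal in-process sketch: the `CustomerRenamed` event, its per-customer `version` field, and the handler names are hypothetical, and in practice the events would arrive through a message broker.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class CustomerRenamed:
    customer_id: str
    new_name: str
    version: int  # assumed to increase with every change to this customer


class OrderServiceCustomerCache:
    """The order service's local, read-only copy of customer names."""

    def __init__(self) -> None:
        self._names: Dict[str, Tuple[str, int]] = {}  # customer_id -> (name, version)

    def on_customer_renamed(self, event: CustomerRenamed) -> None:
        current = self._names.get(event.customer_id)
        # Skip stale or out-of-order events so an older name never overwrites a newer one.
        if current is not None and current[1] >= event.version:
            return
        self._names[event.customer_id] = (event.new_name, event.version)

    def name_for(self, customer_id: str) -> Optional[str]:
        entry = self._names.get(customer_id)
        return entry[0] if entry else None


cache = OrderServiceCustomerCache()
cache.on_customer_renamed(CustomerRenamed("c1", "Ada Lovelace", version=2))
cache.on_customer_renamed(CustomerRenamed("c1", "Ada Byron", version=1))  # arrives late
print(cache.name_for("c1"))  # Ada Lovelace
```

The version check matters because brokers generally do not guarantee global ordering across partitions or retries; without it, a delayed event could roll the copy back to an older value.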

Complexities of Distributed Transactions

Implementing distributed transactions is another significant challenge with the database-per-service pattern. Transactions that span multiple databases require careful management to maintain data integrity.

- Two-Phase Commit (2PC): Traditionally, two-phase commit (2PC) is used to manage distributed transactions. A coordinator orchestrates the transaction across multiple participants (databases). However, 2PC can introduce performance bottlenecks and availability issues: participants hold locks while they wait for the coordinator’s decision, and if the coordinator fails after they have voted to commit, they remain blocked, with those resources still locked, until it recovers.

- Saga Pattern: The Saga pattern provides an alternative approach to managing distributed transactions. A Saga is a sequence of local transactions, where each transaction updates a single service’s database. If a transaction fails, compensating transactions are executed to undo the changes made by the steps that already completed. Because no locks are held across databases and no participant waits on a global commit decision, this approach improves availability.

- Eventual Consistency: Embracing eventual consistency is often necessary to achieve high availability and scalability. This means that data changes are propagated across services asynchronously. While the data will eventually be consistent, there might be a short period where data inconsistencies exist. This requires careful design to handle potential inconsistencies in applications.
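A minimal orchestration-style saga, assuming each step is a local transaction in one service paired with a compensating action. The step and function names are hypothetical and dictionaries stand in for three separate databases. If a step fails, the steps that already completed are compensated in reverse order; nothing is locked across databases.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None], Callable[[], None]]  # (name, action, compensation)


class SagaFailed(Exception):
    pass


def run_saga(steps: List[Step], log: List[str]) -> None:
    """Run local transactions in order; on failure, compensate completed ones in reverse."""
    completed: List[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
            log.append(f"done:{name}")
            completed.append(step)
        except Exception as exc:
            log.append(f"failed:{name}")
            for done_name, _, compensate in reversed(completed):
                compensate()  # in practice compensations must be idempotent and retried
                log.append(f"compensated:{done_name}")
            raise SagaFailed(name) from exc


# Hypothetical state owned by the inventory and order services.
inventory = {"sku-1": 5}
orders: dict = {}


def reserve() -> None:
    inventory["sku-1"] -= 1


def release() -> None:
    inventory["sku-1"] += 1


def create_order() -> None:
    orders["o-1"] = "PENDING"


def cancel_order() -> None:
    orders["o-1"] = "CANCELLED"


def charge_card() -> None:
    raise RuntimeError("card declined")  # simulated payment failure


log: List[str] = []
try:
    run_saga(
        [
            ("reserve_inventory", reserve, release),
            ("create_order", create_order, cancel_order),
            ("charge_payment", charge_card, lambda: None),
        ],
        log,
    )
except SagaFailed as err:
    print("saga aborted at", err)  # saga aborted at charge_payment
print(inventory, orders)  # {'sku-1': 5} {'o-1': 'CANCELLED'}
```

Note that the order is marked `CANCELLED` rather than deleted: compensation is a new business action, and other services may already have seen the `PENDING` state.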

Operational Overhead

The database-per-service pattern introduces increased operational overhead compared to a monolithic architecture.

- Database Management: Each microservice’s database requires individual management, including backups, monitoring, and performance tuning. This increases the operational workload and requires expertise in managing various database technologies.

- Deployment Complexity: Deploying and managing multiple databases and microservices can be complex. Automation tools and robust CI/CD pipelines are crucial to streamline deployments and minimize errors.

- Monitoring and Alerting: Monitoring the health and performance of each database and microservice becomes more complex. Effective monitoring and alerting systems are essential to quickly identify and resolve issues.

- Increased Infrastructure Costs: Running multiple databases and services can lead to increased infrastructure costs. Resource allocation must be carefully managed to avoid over-provisioning and unnecessary expenses.

Implementation Strategies

Implementing the database-per-service pattern requires careful consideration of various strategies. These strategies encompass selecting appropriate databases for each microservice, managing data consistency across service boundaries, and effectively handling data schema evolution in a distributed environment. Success hinges on thoughtful planning and execution of these key areas.

Choosing a Database for Each Microservice

Selecting the right database for each microservice is crucial for performance, scalability, and maintainability. The choice should align with the specific requirements of the service, considering factors like data access patterns, data volume, consistency needs, and the existing technology stack.

- Data Access Patterns: Consider how the service will interact with its data. Will it primarily perform reads, writes, or a mix of both? Are there complex queries or simple lookups? For example, a service focused on real-time analytics might benefit from a database optimized for fast reads and aggregations, such as a columnar analytical database (for example, ClickHouse) or a time-series database like InfluxDB. A service primarily focused on transactions might require a relational database like PostgreSQL or MySQL.

- Data Volume and Scalability: Estimate the expected data volume and growth rate. The chosen database should be able to scale to accommodate the anticipated load. Consider the database’s horizontal and vertical scalability capabilities. For instance, if a service is expected to handle massive amounts of data, a NoSQL database like MongoDB, designed for horizontal scalability, might be a better fit than a traditional relational database that requires more complex sharding strategies.

- Consistency Requirements: Determine the level of consistency required. Do transactions need to be ACID-compliant? If strong consistency is essential, a relational database might be preferred. However, if eventual consistency is acceptable, a NoSQL database or a distributed database with weaker consistency guarantees could be a suitable choice.

- Existing Technology Stack and Team Expertise: Leverage the existing technology stack and the team’s familiarity with different database technologies. Using technologies already in use can reduce development time and operational overhead. For example, if the team has extensive experience with Java and Spring, choosing a database that integrates well with these technologies might be beneficial.

- Data Structure and Modeling: Analyze the data structure and modeling requirements. Relational databases excel at handling structured data with well-defined relationships. NoSQL databases offer more flexibility for handling unstructured or semi-structured data. For example, if a service needs to store and retrieve complex, nested data structures, a document-oriented database like MongoDB could be a good choice.

Strategies for Handling Data Consistency Across Service Boundaries

Maintaining data consistency across microservices, each with its own database, is a significant challenge. Several strategies can be employed to address this, ranging from simple techniques for less critical operations to more complex distributed transaction management for critical data.

- Eventual Consistency: Implement eventual consistency where updates are propagated asynchronously. This approach allows services to operate independently and improves performance, but data might be temporarily inconsistent. For example, a service that updates a user’s profile could publish an event to a message queue. Other services, such as a service that updates the user’s activity feed, can consume this event and update their own databases accordingly.

- Idempotent Operations: Design operations to be idempotent, meaning they can be executed multiple times without changing the result beyond the initial execution. This is essential for handling retries and ensuring data integrity in the face of failures. For example, when updating a user’s profile, the update operation should be designed to handle multiple identical requests without corrupting the data.

- Compensating Transactions: Implement compensating transactions to undo changes made by a service if a related operation fails in another service. This restores consistency without a distributed transaction, although other services may briefly observe the intermediate state. For example, if order creation fails after inventory has been reserved, a compensating transaction would release the reserved inventory.

- Two-Phase Commit (2PC): Use the two-phase commit protocol for distributed transactions when strong consistency is required. It ensures that all participants either commit or roll back their changes together. However, 2PC adds latency, keeps resources locked until the outcome is decided, and increases complexity. For example, when transferring funds between two accounts managed by different services, 2PC can ensure that the debit and credit operations either both succeed or both fail.

- Saga Pattern: Employ the Saga pattern to manage distributed transactions. A Saga is a sequence of local transactions, where each local transaction updates a single service’s database. If a local transaction fails, the Saga executes compensating transactions to undo the changes. There are two main types of Sagas: Choreography-based and Orchestration-based.

  - Choreography-based Sagas: Services react to events published by other services to coordinate their actions. This approach is decentralized and can be simpler to implement for less complex scenarios.

  - Orchestration-based Sagas: A central orchestrator coordinates the execution of the Saga and handles failures. This approach provides more control and visibility but introduces a central point of failure.
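Idempotency is often implemented by recording the IDs of messages that have already been processed, in the same local transaction as the update they cause. A minimal sketch with SQLite, assuming each event carries a unique `event_id` (a hypothetical field); a redelivered event hits the primary-key constraint and is skipped, so retries cannot apply the same credit twice.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE balances (account TEXT PRIMARY KEY, amount INTEGER)")
db.execute("CREATE TABLE processed_events (event_id TEXT PRIMARY KEY)")
db.execute("INSERT INTO balances VALUES ('acct-1', 100)")
db.commit()


def handle_credit(event_id: str, account: str, amount: int) -> bool:
    """Apply a credit at most once; return False if the event was a duplicate."""
    try:
        with db:  # one local transaction: dedupe record and state change commit together
            db.execute("INSERT INTO processed_events VALUES (?)", (event_id,))
            db.execute(
                "UPDATE balances SET amount = amount + ? WHERE account = ?",
                (amount, account),
            )
        return True
    except sqlite3.IntegrityError:
        return False  # event_id already recorded; the transaction was rolled back


print(handle_credit("evt-42", "acct-1", 25))  # True
print(handle_credit("evt-42", "acct-1", 25))  # False (redelivery ignored)
print(db.execute("SELECT amount FROM balances").fetchone()[0])  # 125
```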

Designing a Plan for Managing Data Schema Evolution in a Distributed Environment

Evolving data schemas in a microservices architecture is a complex task. Changes to a service’s data model can impact other services that depend on it. A well-defined plan for managing schema evolution is critical to avoid breaking dependencies and ensuring system stability.

- Backward Compatibility: Prioritize backward compatibility whenever possible, so that a new version of a service can still read data written under older schema versions and keeps accepting requests from existing clients. This allows other services to continue operating without requiring immediate updates. For example, when adding a new field to a data object, provide a default value so that records and messages created before the field existed remain valid.

- Forward Compatibility: Consider forward compatibility so that older versions of a service, or older consumers, can tolerate data produced by newer versions, for example by ignoring fields they do not recognize. This enables services to gradually adopt new schema versions without disrupting the system.

- Schema Versioning: Implement schema versioning to track changes to data schemas. This can involve using version numbers in the schema definition or in the data itself. This allows services to identify the schema version of the data they are processing.

- Data Migration Strategies: Develop data migration strategies to handle schema changes. This might involve creating migration scripts to update data in existing databases to the new schema. Tools like Liquibase or Flyway can automate database schema migrations.

- Consumer-Driven Contracts: Use consumer-driven contracts to ensure that changes to a service’s data schema do not break the services that consume its data. This involves defining contracts that specify the data format and the expected behavior of the service.

- Decoupling: Decouple services as much as possible to minimize the impact of schema changes. Use message queues or event buses to communicate between services, allowing them to evolve independently. For example, a service publishing an event about a user’s profile update should avoid tightly coupling the event format to the internal data model of the user profile service.

- Monitoring and Alerting: Implement robust monitoring and alerting to detect and address schema-related issues quickly. Monitor for data validation errors, compatibility issues, and other anomalies.
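A minimal sketch of a versioned, tolerant reader for an event payload, assuming JSON messages with an optional `schema_version` field (a hypothetical convention). Version 2 adds `loyalty_tier` with a default, so the reader still accepts version 1 messages, and it drops unknown fields so a newer producer’s extra fields do not break it.

```python
import json
from dataclasses import dataclass, fields

CURRENT_VERSION = 2


@dataclass(frozen=True)
class UserProfileUpdated:
    user_id: str
    email: str
    loyalty_tier: str = "standard"  # added in v2; the default keeps v1 messages readable


_KNOWN = {f.name for f in fields(UserProfileUpdated)}


def parse_profile_event(raw: str) -> UserProfileUpdated:
    """Tolerant reader: fill defaults for missing fields, ignore unknown ones."""
    data = json.loads(raw)
    version = data.get("schema_version", 1)  # unversioned messages are treated as v1
    if version > CURRENT_VERSION:
        # Readable only because producers agree to add optional fields, never remove them.
        print(f"warning: reading v{version} message with v{CURRENT_VERSION} reader")
    return UserProfileUpdated(**{k: v for k, v in data.items() if k in _KNOWN})


old_event = parse_profile_event('{"user_id": "u1", "email": "a@example.com"}')
new_event = parse_profile_event(
    '{"schema_version": 3, "user_id": "u1", "email": "a@example.com",'
    ' "loyalty_tier": "gold", "avatar_url": "https://example.com/a.png"}'
)
print(old_event.loyalty_tier, new_event.loyalty_tier)  # standard gold
```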

Data Replication and Synchronization

Data replication and synchronization are critical components within the database-per-service architecture, ensuring data consistency and availability across distributed databases. Implementing these strategies correctly is essential for maintaining data integrity and enabling seamless operation of microservices. These processes address the inherent challenges of data distribution, allowing services to access the information they need while mitigating the risks associated with data silos.

Techniques for Replicating Data

Data replication involves copying data from one database to another to maintain data availability, improve performance, and enable fault tolerance. Various techniques are employed, each with its own set of advantages and disadvantages. Understanding these techniques is crucial for selecting the appropriate method for a given microservice and its data requirements.

- Snapshot Replication: This approach involves creating a complete copy of the data at a specific point in time. It’s simple to implement but can be resource-intensive, especially for large datasets, as it requires transferring the entire dataset whenever a refresh is needed. Snapshot replication is suitable for read-heavy workloads where data consistency requirements are less stringent.

- Transactional Replication: Transactional replication involves replicating individual transactions from the source database to the target databases. It provides high data consistency, as changes are applied in the same order as they occur in the source database. This method is more complex to implement but ensures that the target databases closely reflect the source database’s state.

- Merge Replication: Merge replication allows for changes to be made at multiple databases and then merged into a single database. This technique is suitable for scenarios where data needs to be updated in different locations and then synchronized. Conflict resolution mechanisms are required to handle conflicting updates.

- Eventual Consistency: This is a crucial concept in distributed systems. It implies that data changes will eventually propagate to all replicas, but there might be a temporary inconsistency. This is often achieved using asynchronous replication methods. Eventual consistency is a trade-off, offering high availability and performance at the cost of potentially stale data.
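Merge replication needs a deterministic rule for resolving conflicts. One simple, and lossy, choice is last-writer-wins. The sketch below assumes each value carries a timestamp and the ID of the site that wrote it (both hypothetical fields); the site ID breaks ties so every replica picks the same winner, while the losing concurrent write is discarded, which is why systems that cannot tolerate lost updates use application-specific merge logic instead.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Versioned:
    value: str
    timestamp: int  # assumed comparable across sites (e.g. a logical clock)
    site: str       # tie-breaker so every replica chooses the same winner


def lww_merge(a: Dict[str, Versioned], b: Dict[str, Versioned]) -> Dict[str, Versioned]:
    """Merge two replicas key by key, keeping the write with the highest (timestamp, site)."""
    merged = dict(a)
    for key, theirs in b.items():
        ours = merged.get(key)
        if ours is None or (theirs.timestamp, theirs.site) > (ours.timestamp, ours.site):
            merged[key] = theirs
    return merged


site_a = {"sku-1": Versioned("price=10", 5, "a"), "sku-2": Versioned("price=7", 3, "a")}
site_b = {"sku-1": Versioned("price=12", 8, "b"), "sku-3": Versioned("price=4", 1, "b")}
merged = lww_merge(site_a, site_b)
print({k: v.value for k, v in merged.items()})
# {'sku-1': 'price=12', 'sku-2': 'price=7', 'sku-3': 'price=4'}
```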

Comparison of Data Synchronization Approaches

Data synchronization ensures that data across different databases remains consistent. Several approaches exist, each suited to different requirements regarding data latency, consistency, and complexity. The choice of synchronization method significantly impacts the overall performance and reliability of the microservice architecture.

- CDC (Change Data Capture): CDC is a technique that identifies and captures changes made to data in a database. These changes can then be propagated to other systems, such as data warehouses or other microservices. CDC offers several advantages:

  - Real-time or Near Real-time Data Synchronization: CDC enables rapid propagation of data changes.

  - Minimal Impact on Source Database: CDC mechanisms often operate without significantly impacting the performance of the source database.

  - Flexibility: CDC can be used to synchronize data across various databases and systems.

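- Polling: Polling involves regularly querying the source database for changes, typically by filtering on a timestamp or version column. While simple to implement, it can be inefficient: every poll costs a query even when nothing has changed, several updates to the same row between polls are seen only as the final state, and hard deletes are not visible at all. The polling interval directly determines the latency of data synchronization.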

- Trigger-based Synchronization: Triggers are database objects that automatically execute a set of actions in response to certain events on a table, such as an insert, update, or delete. Triggers can be used to propagate changes to other databases. This approach can provide near real-time synchronization, but it can also increase the complexity of database management and potentially impact performance.

- Message Queues: Message queues, such as Kafka or RabbitMQ, can be used to propagate data changes asynchronously. When a change occurs in a database, a message is published to the queue. Subscribers (other microservices or databases) then consume the message and apply the changes. This approach offers high scalability and decoupling but introduces complexity related to message handling and potential message loss.
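Of these approaches, polling is the simplest to sketch. The example assumes the source table has a `version` column that the writer increases on every change (a hypothetical convention; many systems use an `updated_at` timestamp instead) and copies rows changed since the last watermark into a replica. Rows deleted from the source are never seen by this poller, one reason log-based CDC is often preferred.

```python
import sqlite3

# Two separate databases: the owning service's table and a downstream replica.
source = sqlite3.connect(":memory:")
replica = sqlite3.connect(":memory:")
for conn in (source, replica):
    conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price INTEGER, version INTEGER)")


def poll_once(watermark: int) -> int:
    """Copy rows whose version exceeds `watermark` into the replica; return the new watermark."""
    rows = source.execute(
        "SELECT sku, price, version FROM products WHERE version > ? ORDER BY version",
        (watermark,),
    ).fetchall()
    with replica:  # apply the batch atomically on the replica side
        replica.executemany("INSERT OR REPLACE INTO products VALUES (?, ?, ?)", rows)
    return rows[-1][2] if rows else watermark


# The writer assigns increasing versions by hand here; a real system might use a sequence.
with source:
    source.execute("INSERT INTO products VALUES ('sku-1', 10, 1)")
    source.execute("INSERT INTO products VALUES ('sku-2', 20, 2)")
wm = poll_once(0)  # copies both rows; watermark becomes 2

with source:
    source.execute("UPDATE products SET price = 15, version = 3 WHERE sku = 'sku-1'")
wm = poll_once(wm)  # copies only the changed row

print(wm, replica.execute("SELECT price FROM products WHERE sku = 'sku-1'").fetchone()[0])  # 3 15
```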

Implementing Two-Phase Commit (2PC) in a Distributed System

Two-phase commit (2PC) is a distributed transaction protocol that ensures atomicity across multiple databases. Atomicity means that either all operations succeed or none of them do. 2PC is often used in scenarios where a single transaction spans multiple microservices and their associated databases.

The 2PC protocol consists of two phases:

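- Prepare Phase: The transaction coordinator sends a “prepare” request to all participating databases. Each participant checks whether it can commit, durably records its pending changes, holds the necessary locks, and votes yes or no. If every participant votes yes, the coordinator decides to commit; if any participant votes no or does not respond, the coordinator decides to abort.

- Commit Phase: The coordinator records its decision and sends either a “commit” or a “rollback” request to all participants, which apply it and release their locks. A participant that voted yes can no longer abort on its own; if it crashes before applying the decision, it applies the coordinator’s decision after it recovers.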
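The two phases can be sketched with in-memory participants. This is a teaching sketch under simplifying assumptions: `Participant` and `two_phase_commit` are hypothetical names, and a real implementation must write each participant’s vote and the coordinator’s decision to durable logs so that recovery after a crash reaches the same outcome.

```python
from typing import Dict, List


class Participant:
    def __init__(self, name: str, can_commit: bool = True) -> None:
        self.name = name
        self.can_commit = can_commit  # simulates a local constraint check
        self.state = "idle"
        self.pending: Dict[str, int] = {}
        self.data: Dict[str, int] = {}

    def prepare(self, changes: Dict[str, int]) -> bool:
        """Phase 1: validate and stage changes (locks would be held here), then vote."""
        if not self.can_commit:
            self.state = "aborted"
            return False
        self.pending = dict(changes)
        self.state = "prepared"  # after voting yes it must wait for the decision
        return True

    def commit(self) -> None:
        self.data.update(self.pending)
        self.pending = {}
        self.state = "committed"

    def rollback(self) -> None:
        self.pending = {}
        self.state = "aborted"


def two_phase_commit(work: Dict[Participant, Dict[str, int]]) -> bool:
    # Phase 1: collect votes; the first "no" decides abort.
    prepared: List[Participant] = []
    for participant, changes in work.items():
        if not participant.prepare(changes):
            for p in prepared:
                p.rollback()
            return False
        prepared.append(participant)
    # Phase 2: everyone voted yes, so the decision is commit.
    for participant in prepared:
        participant.commit()
    return True


orders_db = Participant("orders")
payments_db = Participant("payments", can_commit=False)  # e.g. insufficient funds
ok = two_phase_commit({orders_db: {"order-1": 1}, payments_db: {"acct-1": -50}})
print(ok, orders_db.state, orders_db.data)  # False aborted {}
```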

Trade-offs of 2PC:

- Increased Latency: 2PC introduces significant latency because it requires multiple round trips between the coordinator and the participating databases.

- Complexity: Implementing 2PC correctly is complex, requiring careful handling of coordinator and participant failures, timeouts, and recovery.

- Availability: 2PC can reduce availability. If the coordinator fails, the entire transaction can be blocked until the coordinator recovers.

Alternative approaches to achieve atomicity in distributed systems:

- Saga Pattern: A saga is a sequence of local transactions. If one transaction fails, a compensating transaction is executed to undo the changes. Sagas offer better availability than 2PC but require careful design to handle potential data inconsistencies.

- Eventual Consistency: Embracing eventual consistency can avoid the need for distributed transactions in some cases. This approach allows for temporary inconsistencies but prioritizes availability and performance.

Example: Consider an e-commerce system with two microservices: an order service and a payment service. When a customer places an order, the order service needs to create an order record in its database, and the payment service needs to process the payment. 2PC can be used to ensure that both operations succeed or fail together. However, due to the trade-offs of 2PC, a saga pattern might be a more suitable approach in this scenario to handle potential failures gracefully and maintain high availability.

Communication and Data Access

Microservices, designed to be independent and autonomous, rely on robust communication and data access strategies to function effectively. The database-per-service pattern necessitates careful consideration of how these services interact with their dedicated data stores and how external clients access this information. This section explores the intricacies of these interactions, emphasizing the importance of well-defined data access patterns and the role of API gateways.

Interactions with Databases

Microservices interact with their respective databases primarily through well-defined interfaces. Each service typically has exclusive access to its own database, promoting autonomy and minimizing the impact of changes in other services.

- Direct Database Access: In this approach, a microservice directly connects to its database to perform CRUD (Create, Read, Update, Delete) operations. This is often the simplest model for smaller applications or when performance is paramount. However, it can lead to tight coupling if not managed carefully.

- Data Abstraction Layers: To improve maintainability and reduce coupling, services often employ data abstraction layers, such as data access objects (DAOs) or repositories. These layers encapsulate the database interaction logic, providing a consistent API for the service to interact with the data.

- Database-Specific APIs: Some services expose database-specific APIs to other services. These APIs allow other services to query or update data within the database. However, this approach can lead to a “distributed monolith” if not carefully managed.

- Event-Driven Communication: Services can communicate data changes through events. When a service updates its database, it publishes an event that other interested services can consume. This is a common pattern for achieving eventual consistency and decoupling services.
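A data abstraction layer can be as small as a repository interface with one implementation per storage technology. A minimal sketch using `typing.Protocol`; `OrderRepository`, `SqliteOrderRepository`, `InMemoryOrderRepository`, and `ship_order` are hypothetical names. The service logic depends only on the protocol, so the storage engine can change without touching callers, and tests can use the in-memory version.

```python
import sqlite3
from typing import Dict, Optional, Protocol


class OrderRepository(Protocol):
    def save(self, order_id: str, status: str) -> None: ...
    def find_status(self, order_id: str) -> Optional[str]: ...


class SqliteOrderRepository:
    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn
        self._conn.execute("CREATE TABLE IF NOT EXISTS orders (id TEXT PRIMARY KEY, status TEXT)")

    def save(self, order_id: str, status: str) -> None:
        with self._conn:
            self._conn.execute(
                "INSERT OR REPLACE INTO orders (id, status) VALUES (?, ?)", (order_id, status)
            )

    def find_status(self, order_id: str) -> Optional[str]:
        row = self._conn.execute("SELECT status FROM orders WHERE id = ?", (order_id,)).fetchone()
        return row[0] if row else None


class InMemoryOrderRepository:
    def __init__(self) -> None:
        self._rows: Dict[str, str] = {}

    def save(self, order_id: str, status: str) -> None:
        self._rows[order_id] = status

    def find_status(self, order_id: str) -> Optional[str]:
        return self._rows.get(order_id)


def ship_order(repo: OrderRepository, order_id: str) -> bool:
    """Service logic: knows nothing about SQL or the storage engine."""
    if repo.find_status(order_id) != "PAID":
        return False
    repo.save(order_id, "SHIPPED")
    return True


for repo in (SqliteOrderRepository(sqlite3.connect(":memory:")), InMemoryOrderRepository()):
    repo.save("o-1", "PAID")
    print(type(repo).__name__, ship_order(repo, "o-1"), repo.find_status("o-1"))
```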

Data Access Patterns: Direct Access vs. API Gateways

Choosing the right data access pattern is critical for microservice architecture. Two primary approaches are direct database access and API gateway-mediated access. Each has its own advantages and disadvantages.

- Direct Database Access: This pattern allows a service to directly interact with its database. It’s often simpler to implement, particularly for read-heavy operations.

- Advantages:

- Potentially higher performance for read operations.

- Simpler to implement for specific scenarios.

- Disadvantages:

- Tight coupling between services and the database schema.

- Increased risk of cascading failures if a database becomes unavailable.

- Security risks if not implemented properly (e.g., direct exposure of database credentials).

- Advantages:

- API Gateway-Mediated Access: In this pattern, all external access to microservices goes through an API gateway. The gateway acts as a single entry point, routing requests to the appropriate microservices and potentially aggregating data from multiple services.

  - Advantages:

    - Improved security by hiding the internal structure of the microservices.

    - Centralized management of authentication, authorization, and rate limiting.

    - Ability to aggregate data from multiple services.

    - Decoupling of clients from the underlying microservices.

  - Disadvantages:

    - Adds an extra hop, potentially increasing latency.

    - Increases complexity and requires careful management of the gateway itself.

    - Can become a single point of failure if not designed for high availability.


The Role of an API Gateway

An API gateway is a crucial component in microservice architectures, especially when implementing the database-per-service pattern. It acts as a reverse proxy, mediating all external requests to the backend microservices.

- Data Access Management: The API gateway can translate client requests into the appropriate format for the backend microservices. It can also aggregate data from multiple microservices, simplifying the client’s interaction.

- Security: The gateway is responsible for authentication, authorization, and request validation. It can enforce security policies, such as rate limiting and access control, to protect the backend services. For example, a gateway might use JSON Web Tokens (JWTs) to authenticate users before allowing access to protected resources.

- Routing and Load Balancing: The API gateway routes requests to the correct microservices based on the request path or other criteria. It also load balances traffic across multiple instances of a microservice to ensure high availability and performance.

- Monitoring and Observability: The API gateway provides a central point for monitoring and logging. It can track API usage, identify performance bottlenecks, and provide insights into the health of the microservices.
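To make the routing and aggregation roles concrete, here is a minimal in-process sketch. It is not a production gateway. The token check is a hypothetical stand-in for real JWT verification, the route prefixes are made up, and the backend "services" are plain functions standing in for HTTP calls.

```python
from typing import Callable, Dict, Set, Tuple

Handler = Callable[[str], dict]


class ApiGateway:
    """Toy gateway: authentication check, prefix routing, and aggregation."""

    def __init__(self, valid_tokens: Set[str]):
        self.routes: Dict[str, Handler] = {}
        self.valid_tokens = valid_tokens  # stand-in for real JWT signature checks

    def register(self, prefix: str, handler: Handler) -> None:
        self.routes[prefix] = handler

    def handle(self, path: str, token: str) -> Tuple[int, dict]:
        if token not in self.valid_tokens:
            return 401, {"error": "unauthorized"}
        # Longest matching prefix wins, so more specific routes take priority.
        for prefix in sorted(self.routes, key=len, reverse=True):
            if path.startswith(prefix):
                return 200, self.routes[prefix](path)
        return 404, {"error": "no route"}


# Hypothetical backend services; each would own its own database.
def order_service(path: str) -> dict:
    order_id = path.rsplit("/", 1)[-1]
    return {"order_id": order_id, "product_id": "p-7", "qty": 2}


def product_service(path: str) -> dict:
    product_id = path.rsplit("/", 1)[-1]
    return {"product_id": product_id, "name": "Widget"}


def order_details(path: str) -> dict:
    # Aggregation: one client request fans out to two services and merges results.
    order = order_service(path)
    product = product_service("/products/" + order["product_id"])
    return {**order, "product_name": product["name"]}


gw = ApiGateway({"secret-token"})
gw.register("/orders/", order_service)
gw.register("/products/", product_service)
gw.register("/order-details/", order_details)
```

Here a client calls `gw.handle("/order-details/o-1", "secret-token")` once instead of calling the order and product services separately. That is the decoupling and aggregation benefit listed above, at the cost of the extra hop.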

Design Considerations

When adopting the Database-per-Service pattern, careful design is crucial for ensuring the maintainability, scalability, and overall success of your microservice architecture. This section provides guidelines and considerations to aid in the effective implementation of this pattern, focusing on domain-driven design (DDD) principles and addressing the complexities of data relationships across service boundaries.

Guidelines for Designing Microservices with the Database-per-Service Pattern and Domain-Driven Design

Following these guidelines will help to create a well-structured and maintainable microservice architecture. These principles are rooted in Domain-Driven Design (DDD) to ensure alignment with business requirements.

- Define Service Boundaries Based on Bounded Contexts: Identify distinct business domains (bounded contexts) and map each to a microservice. Each bounded context should encapsulate a specific area of business responsibility. This ensures that each microservice owns its data related to its specific domain. For example, an e-commerce platform might have separate bounded contexts for “Product Catalog,” “Order Management,” and “Customer Accounts.”

- Embrace Independent Deployability: Each microservice should be independently deployable. This means that changes to one service should not require redeployment of other services. This independence is facilitated by each service having its own database.

- Prioritize Data Ownership and Autonomy: Each microservice should have exclusive ownership and control over its data. Avoid direct database access from other services. This promotes loose coupling and reduces the risk of cascading failures.

- Design Services Around Business Capabilities: Structure microservices around the core business capabilities they provide. This approach aligns the architecture with the business logic and makes it easier to understand and maintain. For instance, a “Payment Processing” service handles payment-related operations, while an “Inventory Management” service manages inventory levels.

- Use Context Mapping to Handle Cross-Context Relationships: When data needs to be shared between services, employ context mapping techniques such as shared kernels, customer-supplier relationships, or conformist relationships. For example, if the “Order Management” service needs product details, it could subscribe to events published by the “Product Catalog” service.

- Favor Eventual Consistency: Recognize that data consistency across services will often be eventually consistent rather than strongly consistent. Implement mechanisms to handle eventual consistency, such as event sourcing and compensating transactions.

- Implement Idempotent Operations: Ensure that operations are idempotent, meaning that they can be executed multiple times without changing the result beyond the initial execution. This is crucial when dealing with distributed systems and potential failures.

- Use Appropriate Data Access Patterns: Select data access patterns that best suit the needs of each microservice. Options include the repository pattern, data transfer objects (DTOs), and aggregate roots, ensuring data is accessed and manipulated efficiently and securely.

- Apply Polyglot Persistence: Choose the most suitable database technology for each microservice based on its specific requirements. For example, a service requiring complex graph traversals might use a graph database, while others may use relational or NoSQL databases.

- Monitor and Log Extensively: Implement robust monitoring and logging to track the performance and health of each microservice. This includes monitoring database performance, request latency, and error rates. Comprehensive logging is essential for troubleshooting and debugging.
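The idempotency guideline is easiest to see in a message consumer. Brokers commonly deliver messages at least once, so the same event can arrive twice. The sketch below deduplicates on an event ID. The `InventoryProjection` class and the event fields are hypothetical. In a real service, the processed-ID record and the stock update would be written in the same database transaction.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class InventoryProjection:
    """Applies stock-reservation events at most once per event ID."""

    stock: Dict[str, int] = field(default_factory=dict)
    processed_ids: Set[str] = field(default_factory=set)

    def handle(self, event: dict) -> bool:
        # A redelivered event is recognized by its ID and ignored.
        if event["event_id"] in self.processed_ids:
            return False
        sku = event["sku"]
        self.stock[sku] = self.stock.get(sku, 0) - event["qty"]
        # Record the ID so a retry of the same event has no further effect.
        self.processed_ids.add(event["event_id"])
        return True


inv = InventoryProjection(stock={"sku-1": 10})
event = {"event_id": "e-1", "sku": "sku-1", "qty": 3}
inv.handle(event)
inv.handle(event)  # duplicate delivery: stock stays at 7
```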

Handling Relationships Between Data Owned by Different Microservices

Managing relationships between data owned by different microservices is a core challenge in the Database-per-Service pattern. Several strategies can be employed to handle these relationships effectively.

- Eventual Consistency and Event-Driven Architecture: This is the most common approach. When a change occurs in one service (e.g., a product is created in the “Product Catalog” service), the service publishes an event. Other services (e.g., “Order Management”) subscribe to these events and update their local data accordingly. This leads to eventual consistency, where data across services converges over time.

- Data Denormalization: Denormalize data across services to reduce the need for joins and improve read performance. For instance, the “Order Management” service might store a copy of the product name and price when an order is created. However, this increases data redundancy and requires careful management of updates.

- API Composition and Aggregation: Create an API gateway or a dedicated service to aggregate data from multiple microservices when a client needs to retrieve data that spans service boundaries. This service orchestrates requests to the underlying microservices and combines the results.

- CQRS (Command Query Responsibility Segregation): Separate read and write operations. Use a write model optimized for updates and a read model optimized for queries. This allows each service to use the data representation that best suits its needs.

- Distributed Transactions (with caution): Use distributed transactions (e.g., two-phase commit) sparingly, as they can introduce significant performance and complexity overhead. Consider using compensating transactions or eventual consistency mechanisms instead.

- Example: Consider an order that references a product. The “Order Management” service would store the product ID. When a user views the order, the “Order Management” service can use any of the following approaches:

  - Query the “Product Catalog” service to get product details (API Composition).

  - Store a denormalized copy of the product name and price in the “Order Management” service (Data Denormalization).

  - Listen to product updates via events to keep the local product information up-to-date (Eventual Consistency).
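The three options in the order/product example can coexist in one service. The sketch below is one possible arrangement, not the only one. It keeps a denormalized snapshot, refreshes the name through a hypothetical `ProductRenamed`-style event, and optionally composes live data from the catalog at read time. `fetch_product` is a hypothetical client for the “Product Catalog” service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class OrderLine:
    order_id: str
    product_id: str
    # Denormalized snapshot captured when the order was created.
    product_name: str
    unit_price: float


class OrderManagement:
    def __init__(self, fetch_product: Callable[[str], Optional[dict]]):
        self.orders: Dict[str, OrderLine] = {}
        self.fetch_product = fetch_product  # hypothetical Product Catalog client

    def create_order(self, order_id: str, product: dict) -> None:
        self.orders[order_id] = OrderLine(
            order_id, product["id"], product["name"], product["price"]
        )

    def on_product_renamed(self, event: dict) -> None:
        # Event-driven refresh: update the local name, keep the historical price.
        for line in self.orders.values():
            if line.product_id == event["product_id"]:
                line.product_name = event["new_name"]

    def view_order(self, order_id: str, live: bool = False) -> dict:
        line = self.orders[order_id]
        view = {
            "order_id": line.order_id,
            "product_name": line.product_name,
            "unit_price": line.unit_price,
        }
        if live:
            # API composition: ask the catalog for current data at read time.
            current = self.fetch_product(line.product_id)
            if current is not None:  # fall back to the snapshot if the call fails
                view["current_price"] = current["price"]
        return view
```

Keeping the price from order time while refreshing the name is a business decision made here for illustration; some domains would refresh both or neither.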

Impact of the Pattern on Data Modeling and Database Schema Design

The Database-per-Service pattern significantly influences data modeling and database schema design. Each microservice owns its data, leading to decentralized data models.

- Decentralized Data Models: Each microservice has its own database and data model. This allows each team to choose the most appropriate database technology and design schemas optimized for its specific needs.

- Database Schema Design:

  - Schemas are tailored to the specific needs of each service.

  - Services may use different database technologies (polyglot persistence).

  - Data is often denormalized to improve read performance and reduce the need for joins across services.

- Data Modeling Considerations:

  - Aggregate Roots: Within each service, model data using aggregate roots to ensure consistency within the service’s boundaries.

  - Bounded Contexts: Use bounded contexts to define the scope of each service’s data model.

  - Event Sourcing: Consider event sourcing for services where the history of data changes is important.

- Example:

  - “Product Catalog” Service: Might use a relational database with a schema optimized for product information, including attributes like name, description, price, and inventory levels.

  - “Order Management” Service: Might use a NoSQL database to store order data, potentially including denormalized product information for faster read access. The schema would be optimized for order-related data and its relationships.

- Schema Evolution: Database schemas must evolve independently within each service. Consider using schema migration tools and backward-compatible changes to minimize disruption.

- Data Consistency Challenges: Since data is distributed, maintaining consistency across services becomes more complex. Implement strategies such as eventual consistency, compensating transactions, and event-driven architectures to handle these challenges.
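An aggregate root keeps consistency inside one service by routing every change through a single object that enforces the invariants. The `Order` aggregate below is a hypothetical example. Note that it refers to products only by ID, because product data belongs to another service. Persisting the whole aggregate in one local transaction is assumed and not shown.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LineItem:
    product_id: str  # reference by ID only; the Product Catalog owns product data
    quantity: int
    unit_price_cents: int


@dataclass
class Order:
    """Aggregate root: line items change only through Order's methods."""

    order_id: str
    status: str = "OPEN"
    items: List[LineItem] = field(default_factory=list)

    def add_item(self, product_id: str, quantity: int, unit_price_cents: int) -> None:
        if self.status != "OPEN":
            raise ValueError("cannot modify a submitted order")
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self.items.append(LineItem(product_id, quantity, unit_price_cents))

    def submit(self) -> None:
        if not self.items:
            raise ValueError("cannot submit an empty order")
        self.status = "SUBMITTED"

    @property
    def total_cents(self) -> int:
        return sum(i.quantity * i.unit_price_cents for i in self.items)
```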

Monitoring and Observability

Effective monitoring and observability are crucial for the database-per-service pattern. This distributed architecture introduces complexities in tracking application health, performance, and data consistency. Implementing robust monitoring and observability strategies enables proactive issue detection, efficient troubleshooting, and informed decision-making. A well-defined monitoring strategy helps to maintain service-level agreements (SLAs) and ensure the overall reliability of the microservice ecosystem.

Monitoring the Health and Performance of Each Database

Monitoring the health and performance of each database is fundamental to the success of the database-per-service pattern. Each service has its own dedicated database, so understanding the performance characteristics of each instance is vital for identifying bottlenecks, preventing outages, and optimizing resource allocation. A proactive approach to monitoring allows for early detection of potential issues before they impact service availability. To effectively monitor the health and performance of each database, consider the following strategies:

- Database Connection Monitoring: Track the number of active connections, connection pool utilization, and connection timeouts. High connection counts or frequent timeouts can indicate resource exhaustion or inefficient connection management.

- Query Performance Monitoring: Monitor slow queries, query execution times, and query frequency. Identify and optimize poorly performing queries that consume excessive resources. Use database-specific tools (e.g., `EXPLAIN` in MySQL, execution plans in SQL Server) to analyze query performance.

- Resource Utilization Monitoring: Monitor CPU usage, memory consumption, disk I/O, and network traffic for each database instance. High resource utilization can indicate performance bottlenecks or the need for scaling.

- Storage Monitoring: Monitor disk space usage, data growth rates, and storage I/O. Proactively manage storage capacity to prevent data loss and ensure sufficient space for future growth. Implement alerts for low disk space thresholds.

- Replication Monitoring: If using data replication, monitor replication lag, replication errors, and replication status. Ensure data consistency across replicas and minimize data loss in case of a failure.

- Error Rate Monitoring: Track database error rates, including connection errors, query errors, and transaction failures. High error rates may indicate underlying issues within the database or related to the application.

- Transaction Monitoring: Monitor the number of transactions, transaction duration, and transaction throughput. Identify long-running transactions or transaction bottlenecks that impact performance.

- Backup and Recovery Monitoring: Verify the successful completion of database backups and test the recovery process periodically. Ensure that data can be restored in case of a failure.
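A lightweight complement to database-native tooling (for example, PostgreSQL's `pg_stat_statements` or MySQL's slow query log) is a per-service probe that runs a few representative queries, times them, and reports errors and slow queries. The sketch below uses SQLite so it runs anywhere. The probe queries and the 100 ms threshold are illustrative assumptions, not recommendations.

```python
import sqlite3
import time
from typing import Dict


def check_database(conn: sqlite3.Connection, queries: Dict[str, str],
                   slow_ms: float = 100.0) -> dict:
    """Run probe queries, time each one, and flag errors and slow queries."""
    report = {"healthy": True, "slow": [], "errors": [], "timings_ms": {}}
    for name, sql in queries.items():
        start = time.perf_counter()
        try:
            conn.execute(sql).fetchall()
        except sqlite3.Error as exc:
            # Any query error marks the database as unhealthy for alerting.
            report["healthy"] = False
            report["errors"].append(f"{name}: {exc}")
            continue
        elapsed_ms = (time.perf_counter() - start) * 1000
        report["timings_ms"][name] = round(elapsed_ms, 2)
        if elapsed_ms > slow_ms:
            report["slow"].append(name)
    return report
```

In practice the report would be exported as metrics and checked against alert thresholds rather than inspected by hand.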

Tracing Transactions Across Multiple Microservices and Databases

Tracing transactions across multiple microservices and databases is essential for understanding the flow of requests and identifying the root cause of issues in a distributed system. This is especially critical in the database-per-service pattern, where a single business transaction may involve multiple database interactions across different services. Transaction tracing enables developers to visualize the path of a request, pinpoint performance bottlenecks, and debug complex issues. Implement transaction tracing by employing the following methods:

- Distributed Tracing Systems: Integrate a distributed tracing system like Jaeger, Zipkin, or Datadog. These systems automatically collect and correlate trace data across microservices, providing a visual representation of transaction flows. They use unique trace IDs to link related operations across services.

- Propagating Trace Context: Ensure that trace context (e.g., trace ID, span ID) is propagated across service boundaries. This is typically done by passing trace headers in HTTP requests, or by attaching them as message headers when services communicate through brokers like Kafka.

- Instrumentation Libraries: Utilize instrumentation libraries or agents provided by the tracing system to automatically instrument code and capture relevant data (e.g., method calls, database queries).

- Database Query Instrumentation: Instrument database queries to capture query execution times, database connection details, and other relevant information. This provides insight into the database interactions within a transaction.

- Log Correlation: Correlate logs across microservices using trace IDs or other identifiers. This enables developers to correlate log entries related to a specific transaction and identify the root cause of issues.

- Visualizing Transaction Flows: Use the tracing system’s user interface to visualize transaction flows, including service dependencies, latency metrics, and error information. This helps to identify performance bottlenecks and troubleshoot issues.

- Example: Consider an e-commerce application where a user places an order. The transaction might involve multiple services (e.g., order service, payment service, inventory service) and their respective databases. With distributed tracing, you can track the entire transaction, from the initial order request to the final confirmation, even as it spans several microservices. You can quickly identify if the payment service is taking too long or if there’s an issue with inventory updates.
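Trace propagation is usually handled by an instrumentation library such as OpenTelemetry, but the mechanics are simple enough to sketch. The code below follows the W3C Trace Context `traceparent` header format (`version-traceid-parentid-flags`) and accepts only version `00`. Each hop keeps the trace ID and mints a new span ID. The function names are hypothetical helpers written for this illustration.

```python
import re
import secrets
from typing import Optional, Tuple

TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent() -> str:
    """Start a new trace at the edge, e.g. when a request enters the gateway."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def parse_traceparent(header: str) -> Optional[Tuple[str, str, str]]:
    match = TRACEPARENT_RE.match(header.strip().lower())
    if not match:
        return None
    trace_id, span_id, flags = match.groups()
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None  # all-zero IDs are invalid in the W3C format
    return trace_id, span_id, flags


def child_traceparent(incoming: str) -> str:
    """Header for an outgoing call: same trace ID, new span ID, same flags."""
    parsed = parse_traceparent(incoming)
    if parsed is None:
        return new_traceparent()  # unusable context: start a fresh trace
    trace_id, _parent_span_id, flags = parsed
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"
```

In the order example, the order service would read `traceparent` from the incoming request and send `child_traceparent(...)` on its calls to the payment and inventory services. Every span then shares one trace ID that the tracing backend can stitch together.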

Gathering Metrics and Logs for Troubleshooting Issues Within a Distributed System

Gathering comprehensive metrics and logs is vital for troubleshooting issues within a distributed system. Metrics provide real-time insights into the performance and health of services, while logs offer detailed information about events, errors, and application behavior. By collecting and analyzing these data sources, developers can quickly identify the root cause of issues and resolve them efficiently. Employ these strategies to gather metrics and logs effectively:

- Centralized Logging: Implement a centralized logging system (e.g., ELK Stack, Splunk, Graylog) to collect and store logs from all microservices. This enables easy searching, filtering, and analysis of log data.

- Structured Logging: Use structured logging formats (e.g., JSON) to make logs easily parsable and searchable. Include relevant information in each log entry, such as timestamps, log levels, service names, and correlation IDs.

- Log Levels: Use appropriate log levels (e.g., DEBUG, INFO, WARN, ERROR) to categorize log messages based on their severity. This helps to filter out noise and focus on critical issues.

- Metric Collection: Collect metrics using a metrics collection system (e.g., Prometheus or Datadog), often visualized with Grafana. Define key performance indicators (KPIs) for each service and database.

- Alerting: Set up alerts based on metrics and log patterns to notify developers of potential issues. Configure alerts for critical errors, performance degradation, and resource exhaustion.

- Dashboards: Create dashboards to visualize metrics and logs. Dashboards provide a consolidated view of service health and performance.

- Log Aggregation and Analysis: Utilize log aggregation tools to analyze log data and identify patterns, anomalies, and potential issues. Tools like the ELK stack (Elasticsearch, Logstash, Kibana) can perform advanced log analysis and reporting.

- Metrics Aggregation and Analysis: Use metrics aggregation tools to calculate aggregate statistics, such as average response times, error rates, and resource utilization. Analyze metrics to identify trends, performance bottlenecks, and capacity issues.

- Example: Imagine a service experiences an unexpected spike in errors. By examining the logs, you might discover a problem with a database connection. The logs would show connection timeout errors and database query failures, pointing directly to the root cause. Furthermore, metrics on the database server might show a corresponding spike in CPU usage or disk I/O, confirming the bottleneck.
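Structured logging with a correlation ID is what makes the cross-service search in that example practical. Below is a minimal sketch using Python's standard `logging` module. The JSON field names and the `correlation_id` context variable are assumptions chosen for illustration; a real service would set the ID from the incoming trace header.

```python
import contextvars
import json
import logging

# Holds the current request's correlation (or trace) ID for this execution context.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class JsonFormatter(logging.Formatter):
    """Formats each log record as one JSON object per line."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "service": self.service,
            "correlation_id": correlation_id.get(),
            "message": record.getMessage(),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(service: str) -> logging.Logger:
    logger = logging.getLogger(service)
    if not logger.handlers:  # avoid stacking handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter(service))
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep records out of the root logger's handlers
    return logger
```

With every service emitting the same fields, a centralized store such as the ELK Stack can filter all entries for one `correlation_id` across services.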

Alternative Patterns

When designing data management strategies for microservices, the database-per-service pattern is not the only approach. Understanding alternative patterns allows for informed decisions based on specific project requirements and constraints. Choosing the right pattern depends on factors like the level of data isolation needed, the complexity of transactions, and the team’s familiarity with different architectural styles.

Alternative Data Management Patterns

Several alternative data management patterns exist, each with its own set of trade-offs. Some popular alternatives include the shared database pattern and the saga pattern.

- Shared Database Pattern: In this pattern, multiple microservices share a single database. This can simplify data consistency and transactions, but it can also introduce tight coupling between services, making independent evolution challenging.

Saga Pattern

The saga pattern addresses transactions that span multiple microservices. A saga is a sequence of local transactions. If one transaction fails, the saga orchestrates compensating transactions to undo the changes made by the preceding transactions. This pattern is particularly useful for complex business processes that require data consistency across multiple services.
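The core control flow of an orchestrated saga fits in a few lines: run each local transaction in order, and if one fails, run the compensations of the completed steps in reverse. This sketch keeps everything in memory. A real orchestrator would persist saga state and retry compensations, which should themselves be idempotent. The step names and the failing `charge_card` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SagaStep:
    name: str
    action: Callable[[], None]      # local transaction in one service
    compensate: Callable[[], None]  # semantically undoes that transaction


def run_saga(steps: List[SagaStep], log: List[str]) -> bool:
    """Execute steps in order; on failure, compensate completed steps in reverse."""
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.action()
        except Exception as exc:
            log.append(f"{step.name} failed: {exc}")
            for done in reversed(completed):
                done.compensate()
                log.append(f"compensated {done.name}")
            return False
        completed.append(step)
        log.append(f"{step.name} ok")
    return True


def charge_card() -> None:
    raise RuntimeError("card declined")  # simulated payment failure


log: List[str] = []
ok = run_saga(
    [
        SagaStep("reserve_stock", lambda: None, lambda: None),
        SagaStep("create_order", lambda: None, lambda: None),
        SagaStep("charge_payment", charge_card, lambda: None),
    ],
    log,
)
```

Because the payment step fails, the order creation and stock reservation are compensated in reverse order, leaving the services consistent without a distributed transaction.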

Eventual Consistency

This approach allows for data to eventually become consistent across different services. It is often used with the database-per-service pattern. Changes are published as events, and services react to these events to update their local data.

CQRS (Command Query Responsibility Segregation)

CQRS separates read and write operations. This can improve performance and scalability by allowing read operations to be optimized independently from write operations.
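A small sketch shows the split. The write side validates commands and emits events. The read side maintains a denormalized projection shaped for a single query. The class names, the `OrderPlaced` event, and the "customer spend" query are hypothetical. In a real system the projection would be updated asynchronously and stored in its own read-optimized database.

```python
from collections import defaultdict
from typing import Dict, List


class OrderCommandModel:
    """Write side: validates commands and records events."""

    def __init__(self) -> None:
        self.events: List[dict] = []

    def place_order(self, order_id: str, customer_id: str, amount_cents: int) -> dict:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        event = {"type": "OrderPlaced", "order_id": order_id,
                 "customer_id": customer_id, "amount_cents": amount_cents}
        self.events.append(event)
        return event


class CustomerSpendView:
    """Read side: a denormalized projection tuned for one query."""

    def __init__(self) -> None:
        self.total_by_customer: Dict[str, int] = defaultdict(int)
        self.order_count: Dict[str, int] = defaultdict(int)

    def apply(self, event: dict) -> None:
        if event["type"] == "OrderPlaced":
            self.total_by_customer[event["customer_id"]] += event["amount_cents"]
            self.order_count[event["customer_id"]] += 1

    def summary(self, customer_id: str) -> dict:
        # .get() avoids creating empty entries for unknown customers.
        return {"orders": self.order_count.get(customer_id, 0),
                "total_cents": self.total_by_customer.get(customer_id, 0)}
```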

Comparison: Database-per-Service vs. Shared Database

Comparing the database-per-service pattern and the shared database pattern reveals distinct strengths and weaknesses. The choice between these patterns depends on the specific needs of the microservice architecture. The following table highlights the key differences:

| Feature | Database-per-Service | Shared Database | Key strength (Database-per-Service) | Key weakness (Shared Database) |
|---|---|---|---|---|
| Data Isolation | High: each service owns its data. | Low: services share the database schema. | Promotes independent service evolution. | Can lead to tight coupling between services. |
| Data Consistency | Complex: requires careful data synchronization strategies. | Simple: data consistency is managed within the shared database. | Reduces the risk of cascading failures. | Potential for database bottlenecks. |
| Scalability | Easier: services can be scaled independently. | More challenging: scaling the database can become a bottleneck. | Easier to scale services independently. | Potential for database bottlenecks. |
| Complexity | Higher: requires implementing data synchronization and communication mechanisms. | Lower: simpler data access and management. | Promotes independent service evolution. | Can lead to tight coupling between services. |

Scenarios for Other Patterns

While the database-per-service pattern is often a good starting point, other patterns may be more appropriate in certain scenarios.

- Shared Database: The shared database pattern might be suitable for small, simple applications or when rapid development is prioritized over long-term scalability and independence. For example, consider a system with a few microservices that all need to access the same set of data, where the data model is relatively simple.

Saga Pattern

The saga pattern is essential for managing transactions that span multiple microservices. Consider using a saga when a business process involves several steps that need to be coordinated across different services, and failures require compensating actions. An example is an e-commerce system where ordering, payment, and shipping involve separate services.

Eventual Consistency

This approach is well-suited for systems where immediate consistency is not critical, and eventual consistency can provide significant performance benefits. For instance, a social media platform can use eventual consistency for updating user profiles or displaying feed data.

CQRS

CQRS is beneficial when read and write operations have significantly different performance requirements or data models. It’s especially effective in systems with a high volume of read operations. A good example is a reporting system that requires optimized read models for fast data retrieval.

Real-World Examples

The Database-per-Service pattern has gained significant traction in microservices architectures, enabling independent service development, scaling, and maintenance. Several prominent organizations have successfully adopted this pattern, demonstrating its effectiveness in real-world scenarios. Examining these examples provides valuable insights into the benefits and challenges of implementing this approach.

Companies Utilizing the Database-per-Service Pattern

Many companies have embraced the Database-per-Service pattern to facilitate agility and scalability within their microservices architectures.

- Netflix: Netflix is a prime example of a company that leverages the Database-per-Service pattern extensively. Their microservices architecture, powering streaming services to millions of users, relies on this pattern to ensure each service can operate independently. This independence allows for faster development cycles, independent scaling of services based on demand, and reduced impact from failures within individual services. They use a variety of databases, each tailored to the specific needs of the service it supports. For instance, a service managing user profiles might use a NoSQL database for flexible data storage, while a billing service could utilize a relational database for transactional integrity.

- Amazon: Amazon, with its vast e-commerce platform, also employs the Database-per-Service pattern. Each microservice responsible for different aspects of the platform, such as order processing, product catalog management, and recommendation engines, has its dedicated database. This architecture allows Amazon to handle massive traffic and ensure high availability, with each service scaling independently based on its specific needs. For example, the product catalog service has a dedicated database that allows the team to rapidly update product information and manage large volumes of data without impacting other services.

- Spotify: Spotify, the music streaming giant, uses microservices and, consequently, the Database-per-Service pattern. This pattern enables them to manage various functionalities like user accounts, playlists, and music recommendations. Each service has its own database, allowing for efficient data management and independent scaling. This architecture supports their ability to handle millions of users and provide a seamless music streaming experience.

Case Studies Illustrating Benefits and Challenges

Analyzing specific case studies offers a more granular understanding of the practical implications of the Database-per-Service pattern. These case studies highlight both the advantages and the difficulties encountered during implementation.

- E-commerce Platform: An e-commerce platform implemented the Database-per-Service pattern to modernize its monolithic application. The platform was divided into services such as product catalog, order management, and user accounts. Each service was assigned its own database, allowing for independent development and deployment.

  - Benefits: Increased agility, faster release cycles, and improved scalability. Each team could work on their service independently, leading to faster development and deployment times. The ability to scale services independently meant that the platform could handle peak traffic loads more efficiently.

  - Challenges: Data consistency issues and the need for complex distributed transactions. Ensuring data consistency across multiple databases required careful design and implementation of data replication and synchronization mechanisms. Implementing distributed transactions added complexity to the system.


- Financial Services Application: A financial services company adopted the Database-per-Service pattern to build a new trading platform. The platform was composed of services like order execution, risk management, and market data. Each service had its dedicated database.

  - Benefits: Enhanced fault isolation and improved security. If one service failed, it did not necessarily impact other services, ensuring higher system availability. The isolation of data within each service also improved security.

  - Challenges: Increased operational overhead and the need for robust monitoring and observability. Managing multiple databases required additional operational expertise. Monitoring and observability became more complex, requiring tools and processes to track performance and troubleshoot issues across multiple services.


Descriptive Illustration of the Architecture

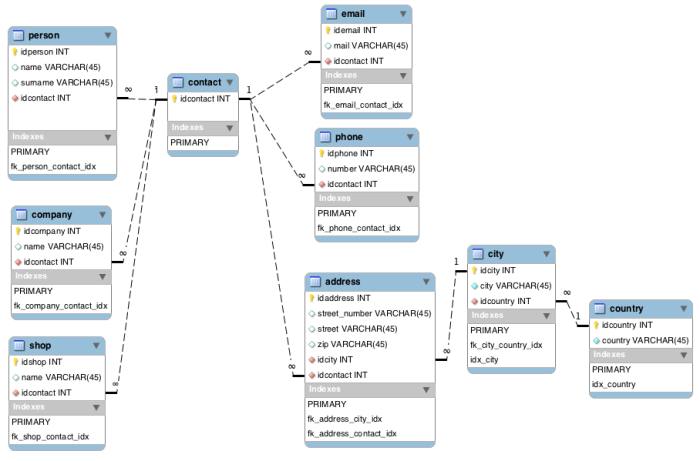

The architecture of a system using the Database-per-Service pattern is characterized by the independent nature of each service and its associated database.

Imagine a system for managing an online bookstore. The system comprises several microservices, each with its own dedicated database.

1. User Service: Manages user accounts, profiles, and authentication. It has its own database, likely a relational database, to store user information such as usernames, password hashes (never plaintext passwords), and contact details.

2. Catalog Service: Manages the product catalog, including book details, authors, and pricing. It uses a database optimized for product information, such as a document database or a graph database, enabling flexible data modeling and efficient querying.

3. Order Service: Handles order placement, order tracking, and payment processing. It has its own database, often a relational database, to store order details, customer information, and payment records.

4. Recommendation Service: Generates personalized book recommendations. It utilizes a database optimized for recommendation algorithms, which could be a graph database or a specialized recommendation engine database.

5. Interaction and Data Flow: When a user places an order, the following interactions occur:

   - The User Service authenticates the user.

   - The Catalog Service is queried for book details and availability.

   - The Order Service creates a new order record, referencing user and product information.

   - The Recommendation Service updates user preferences based on the order.

6. Communication: Services communicate through APIs, message queues (e.g., Kafka), or other inter-service communication mechanisms. For example, the Order Service might publish an event to a message queue when an order is placed, triggering the Recommendation Service to update user preferences.

7. Data Access: Each service is responsible for accessing and managing its own data. Services do not directly access databases of other services. Instead, they interact with other services through their APIs.

8. Deployment and Scaling: Each service can be deployed and scaled independently. For example, if the Catalog Service experiences a surge in traffic, it can be scaled up without impacting the User Service or Order Service.

9. Monitoring: Monitoring tools are used to track the performance and health of each service and its associated database. This enables early detection of issues and facilitates rapid troubleshooting.
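The order-to-recommendation flow in the bookstore can be sketched with an in-memory event bus standing in for a broker such as Kafka. Each service keeps its own private store and never reads the other's. The topic name `order-placed` and the class names are hypothetical. Delivery here is synchronous for simplicity. With a real broker it would be asynchronous, so recommendations would catch up shortly after the order, which is eventual consistency.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBus:
    """In-memory stand-in for a message broker."""

    def __init__(self) -> None:
        self.subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self.subscribers[topic]:
            handler(event)


class OrderService:
    def __init__(self, bus: EventBus) -> None:
        self.db: Dict[str, dict] = {}  # this service's private store
        self.bus = bus

    def place_order(self, order_id: str, user_id: str, book_id: str) -> None:
        self.db[order_id] = {"user_id": user_id, "book_id": book_id}
        # Other services learn about the order only through this event.
        self.bus.publish("order-placed",
                         {"order_id": order_id, "user_id": user_id, "book_id": book_id})


class RecommendationService:
    def __init__(self, bus: EventBus) -> None:
        self.db: Dict[str, List[str]] = defaultdict(list)  # separate private store
        bus.subscribe("order-placed", self.on_order_placed)

    def on_order_placed(self, event: dict) -> None:
        # Update this service's own view of the user's purchase history.
        self.db[event["user_id"]].append(event["book_id"])
```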

Final Wrap-Up

In conclusion, the Database-per-Service pattern presents a robust strategy for managing data within a microservices environment. While it introduces complexities such as data consistency challenges and operational overhead, the advantages of independent scalability, improved fault isolation, and simplified data management are compelling. By carefully considering implementation strategies, data synchronization techniques, and design guidelines, you can harness the power of this pattern to build resilient, scalable, and maintainable microservices architectures.

The right approach hinges on understanding the trade-offs and tailoring the solution to your specific business needs.

FAQs

What are the primary benefits of the Database-per-Service pattern?

The main benefits include independent scalability for each service, improved fault isolation (a failure in one service’s database doesn’t necessarily affect others), and simplified data management within individual services.

What are the main challenges of the Database-per-Service pattern?

Key challenges include data consistency across multiple databases, complexities introduced by distributed transactions, and increased operational overhead associated with managing multiple databases.

How does data consistency get handled in this pattern?

Data consistency is often addressed using techniques like eventual consistency, data replication, and careful transaction management. Techniques like Change Data Capture (CDC) and the Saga pattern are also commonly employed.

When is the Database-per-Service pattern most appropriate?

This pattern is most appropriate when microservices have distinct data requirements and need to evolve independently. It’s especially valuable in large, complex systems where scalability and fault tolerance are paramount.